This sounds simple, but it will change the world so pay attention: The most significant consequence of the internet was that it pushed distribution costs to zero. The most significant consequence of AI is that it will push creation costs to zero.

You can essentially boil down the atomic activity of any company into:

- Create stuff

- Acquire customers to buy stuff

- Distribute that stuff

If you put that into the context of the income statement you would see:

- Cost of goods sold

- Sales and marketing expense

- Other operating expenses

The internet broke the third category of distribution, and now AI is going to break the first one. Innovations like GPT-3, DALL-E, and other AI tools will dramatically decrease the cost of producing all goods with a digital component (aka everything).
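To make the income statement framing concrete, here is a minimal sketch in Python. Every number in it is invented for illustration, and the `IncomeStatement` class and its field names are my own assumptions rather than real accounting software. It maps "create stuff" to cost of goods sold, "acquire customers" to sales and marketing, and "distribute" to other operating expenses, then zeroes out distribution and creation in turn.

```python
from dataclasses import dataclass


@dataclass
class IncomeStatement:
    """Toy income statement; all figures are hypothetical."""
    revenue: float
    cogs: float                 # "create stuff"
    sales_and_marketing: float  # "acquire customers to buy stuff"
    other_opex: float           # "distribute that stuff"

    def total_cost(self) -> float:
        return self.cogs + self.sales_and_marketing + self.other_opex

    def acquisition_share(self) -> float:
        """Fraction of total cost that is spent on acquiring customers."""
        total = self.total_cost()
        return self.sales_and_marketing / total if total else 0.0


# Hypothetical company in three eras (same revenue, same acquisition spend).
before_internet = IncomeStatement(revenue=100, cogs=40, sales_and_marketing=30, other_opex=20)
after_internet = IncomeStatement(revenue=100, cogs=40, sales_and_marketing=30, other_opex=0)
after_ai = IncomeStatement(revenue=100, cogs=0, sales_and_marketing=30, other_opex=0)

for label, stmt in [
    ("before internet", before_internet),
    ("after internet", after_internet),
    ("after AI", after_ai),
]:
    print(f"{label}: acquisition is {stmt.acquisition_share():.0%} of costs")
```

With these made-up figures, acquisition goes from about a third of total cost to all of it. Real costs will not fall all the way to zero, but the direction is the point: once creation and distribution are cheap, acquisition is what is left to compete on.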

I don’t want to veer into hyperbole, but I’ve never been so excited and so scared simultaneously by a technology category. Theoretically, I knew these changes were coming, but I have been stunned by how quickly they have occurred. My current feeling is that in 5-10 years, the power dynamics of knowledge work will look radically different. Our relationship with information and creation will be fundamentally altered.

For some categories of companies, AI tooling will be a disruptive innovation, one that renders their entire business obsolete. For others, it will be a sustaining innovation that allows them to serve a similar set of customers at lower cost.

The biggest question to me is this: when all that is left to compete on is acquisition, what does our economy look like?

This article is what I’m using to try to answer that question.

What Are These AI Tools—and Why Now?

Whenever a technological revolution occurs sooner than expected, it is worth examining what inputs into the progress bar suddenly sped up.

In the case of AI, I would point to two step changes: 1) the introduction of transformer models, which allowed computers to understand text, and 2) an astronomical shit-ton of money and computing power that was unlocked over the last few years. Moore’s law, the napkin-math rule from Intel co-founder Gordon Moore that the number of transistors on a chip (and, loosely, computing power) doubles roughly every two years, has started to run out of steam in CPUs, but GPUs have taken up the banner. It turns out GPUs are great for running deep learning and other machine learning algorithms, so whenever the solution to a problem was unclear, you could theoretically answer it with “What if we just dumped more compute power into the system?” Money coming in from Google, Amazon, and OpenAI allowed these resource constraints to be removed.

With the combination of these two step changes, we are now at the point where computers can understand context and then apply that context to a defined set of parameters. It isn’t perfect! There can be weaknesses in the language models or in the application of the text inputs, but it is dramatically ahead of where anyone would’ve predicted five years ago.

The basic flow for these tools is: open a text box, type out in plain English what you want the AI to do, and the AI does it. That’s it! No more messy 10-step workflows or languages you need to learn. It is as simple as typing out what you want.

Two weeks ago, my colleague Nathan compared this new generation of AI software to what came before as follows:

“Instead of software that mimics a paintbrush, we now have software that mimics the painter.”

I would extend his analogy: the new tools mimic the painter in both outputs and inputs. You can receive products that previously weren’t possible, but you also have to prompt the software the same way you would prompt a human being. Sometimes direct instructions work, sometimes gentle prodding, but it is all a new paradigm for how we interact with software. Transformers, together with the power and availability of GPUs, make all this possible, and the results are wild.

Impressive Demos

So what does this look like in practice? Some of the craziest, most impressive demos I’ve ever seen. These are all products that have been released in the LAST 30 DAYS.

Best practice for newsletter writing is to “never link out of the essay” but I don’t care. You need to see these for yourself. Reading this on our website will be a better experience because the tweets will be directly embedded but even if you are on email reading this, make sure to click out and watch these demos.

First off, the flashiest thing that you’ve probably already seen is image generation. Tools like DALL-E from OpenAI allow images to be generated from text prompts. They charge a usage fee which sounds like a great business. However, on August 22nd, an open-source version called Stable Diffusion was released. It can be run locally and can generate any image you can think of. Enter a prompt, get a result—no payment beyond computing power required.

For example, do you want a “gentleman otter in a 19th century portrait”? You got it.

But that’s kind of small. What if we want to extend the image? It is as simple as clicking expand.

If you are a pro in Photoshop but want to remix existing images, there is a Stable Diffusion plug-in for that too. Now photo editing doesn’t need to be done with clicks and hotkeys; it is all a text prompt away.

But you know what? Photos are boringggg. If you’re more of a movie person, there is AI magic for that too. This tool does the same thing for video: it allows someone creating a movie to live edit in backgrounds and remove objects, all the tasks that would normally require teams of digital effects artists, all done with text prompts. (This one made my jaw drop.)

But what happens if you combine existing text prompt-generated images with other AI-powered voice modifiers and wrap it into a video?

This looks like the work of a team of visual effect pros doing long, hard labor. In actuality, it was just done by a lone engineer playing with tools. Insane.

But let’s say even that isn’t enough! What happens when we mix augmented reality, ecommerce, and Stable Diffusion? Well, you get something like this demo from Shopify.

Phew. Deep breath. Keep in mind, this is all only for the use case of image generation. It doesn’t include anything else. This same kind of automation is possible in all sorts of different fields.

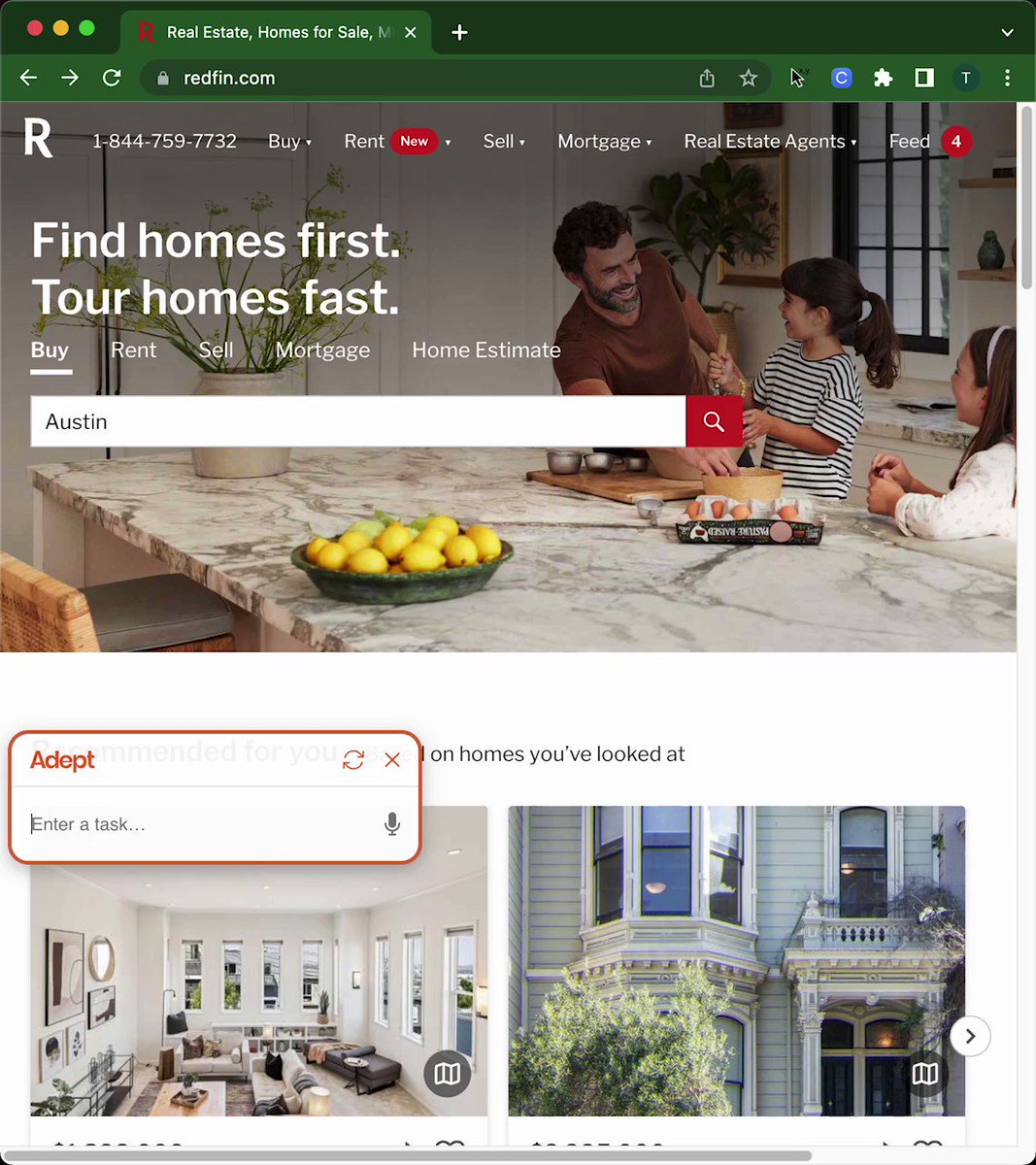

Of all the demos I’ve seen, the most impressive was released on Sep 14th. It was so good it honestly made me consider quitting my job to go work in AI. In the tweet below you’ll see a text box, run via a Google Chrome plug-in, that can perform actions in software for you. This one is finding a house in Houston:

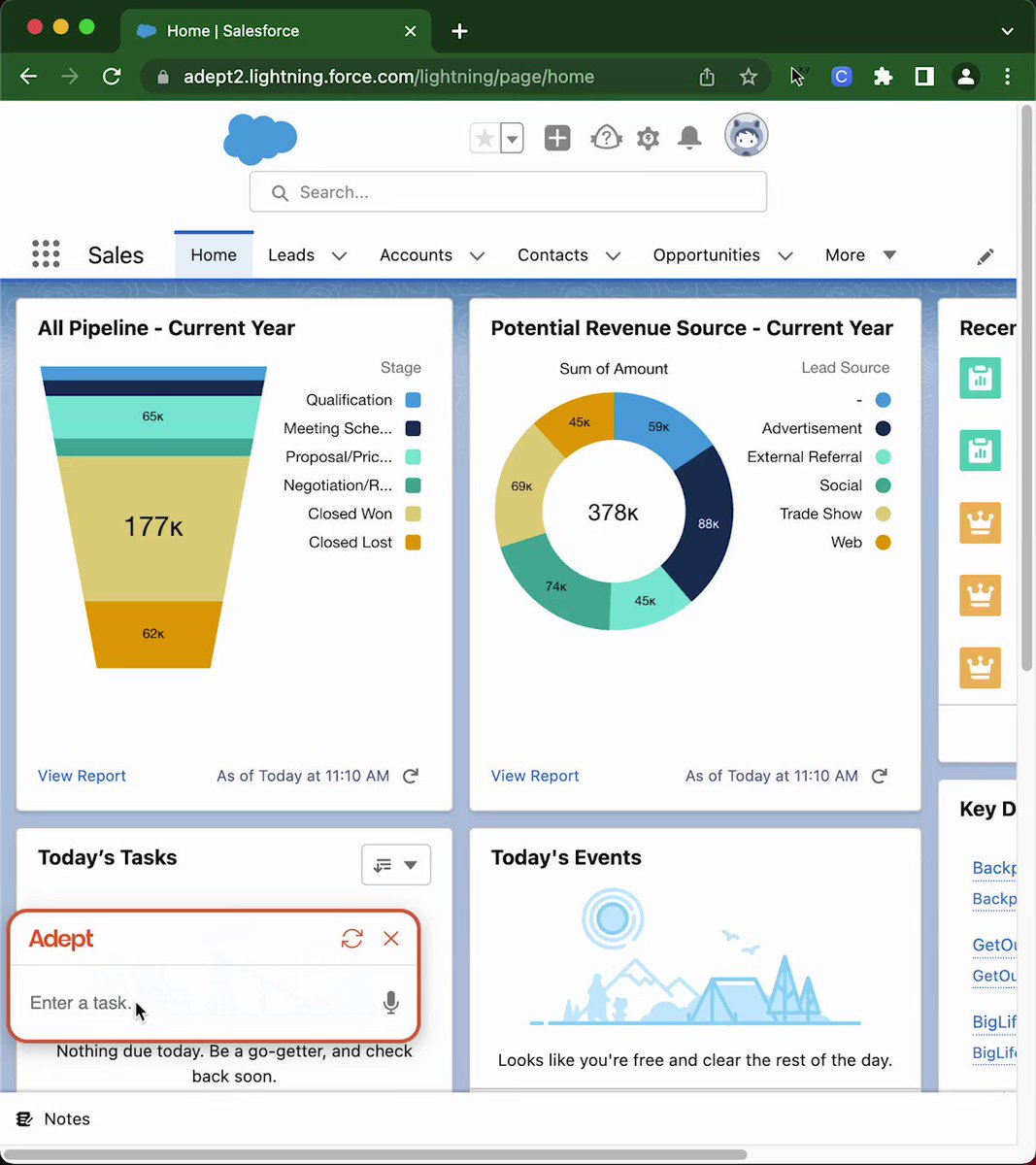

This use case will strike close to home for my readers who work with sales teams: it automates updating Salesforce. This tool alone probably puts 30% of Sales Operations teams out of work.

Here is the tool running calculations in Excel. In a year or two, with integration into other BI tools and warehousing solutions, this will probably get rid of most entry-level analytics roles. It can even stitch together actions across multiple pieces of software, just like a human being can. You can see why I’m so excited and so scared by this. If we continue at even close to our current rate of progress, the vast majority of knowledge work will be automated away.

So we’ve seen the demos and had our minds blown. Back to the question at hand: how does this play out for technology companies?

Downstream Impacts

It is easy, and intellectually lazy, to point to technology as the thing that changes markets. Unfortunately, that just isn’t the case. Truly disruptive technology companies are the pairing of novel tech with unique market conditions. Technology alone isn’t sufficient; a company needs to have incredible marketing, beatable competitors, and the right team. If any of these is missing, the whole enterprise will fail.

I think for most existing technology companies, these tools will only cement their market position. It will be easier for Tableau to put in a text prompt box than for an ML startup to build an entire visualization suite. So many of these models are based on academic papers and open source software that a team with a sufficiently large budget will probably be able to build a “good-enough” product. AI startups will have to ask if their breakthrough is defensible enough and revolutionary enough to justify replacing existing workflows. I’m not confident enough to say which fields will be replaceable and which won’t, but I think power consolidation is the most likely outcome.

I’m much more bullish on startups that act as infrastructure providers for other companies. Whether that is API access like OpenAI has decided to offer or custom models like C3.ai (public stock, $1.6B valuation, $252M in FY22 revenue), this feels like an instance where picks and shovels are better to sell than end applications. And of course, the most bullish use case is where startups can enable stuff that was previously unimaginable. I’m excited to see what a talented class of entrepreneurs can come up with.

My biggest hypothesis is that these tools will end up being more disruptive for individual workers than large companies. You can look at all the demos above and think of the jobs they make irrelevant. The disruption will happen in two broad categories:

Creation: Making stuff from scratch that acts as complete replacements of products that previously would’ve required human input.

Collaboration: Humans are paired with an AI tool to dramatically improve and speed up their work flows.

If I were to put a percentage to it I think 99% of the disruption will actually be on the collaboration side. The image creation demos are flashy but limited. Automating away rote, low-value work is where the majority of productivity gains will come from.

As always in the technology sector, new innovations enforce the power law. Top performers will no longer require support staff; they can just AI away the easy stuff. For example, GitHub Copilot is an AI tool that allows software engineers to have a robot write code for them. Nothing fancy or complex! But it can allow an engineer to focus on writing differentiated code and not have to worry about the easy, monotonous stuff. This is good for individuals, but bad for the community in aggregate. What if 10x engineers suddenly become 15x engineers?

Of course, new jobs will be created to build and support these tools, but denying there will be negative consequences for many people is dumb. Automating away tons of people’s livelihoods will harm them. But because technology like Stable Diffusion is free, and because these tools are so powerful, market forces will just pull towards the more efficient option.

What if the Medium isn’t the Message?

Using the internet to distribute something instantly at zero cost dramatically altered every aspect of society. The initially scaled use cases happened in obvious categories. Software over the cloud was predictable (and predicted by my peers of the day!). However, distribution costs being at zero changed far more than some niche technology categories.

The internet has altered how we coordinate physical labor, from peer-to-peer connections to marketplaces. Media companies’ geographic arbitrage completely disappeared. Politicians realized that Twitter points were more valuable than policy. There are now thousands of people earning six figures selling pictures of their feet. This exercise could continue ad nauseam. All of these occurred because once you build a digital good or coordination mechanism, you can essentially scale it for free, with only acquisition costs to worry about.

AI will automate content creation costs to zero, and the effects will be far reaching. More and more power will accrue to those companies who have novel acquisition methods. In previous iterations of this newsletter, I’ve argued that “addiction will be the blood sacrifice required of consumers for businesses to win.” These tools will only exacerbate that dynamic.

Also interesting will be how AI affects product design itself. Arguably, the winning products will be those that feed the best data into their AI models. Techniques like diffusion mean that having great data sets isn’t always necessary, but good data helps by speeding up development time and reducing cost. This dynamic will reward the products that can be redesigned to have better training inputs. TikTok is the perfect example of this. Every single aspect of the app is designed to better inform its recommendation algorithm (some people may include this algo in the AI bucket). From the buttons available to the swiping motion to the length of the content, all of it feeds into a better algorithmic suggestion.

In aggregate, this also means that the companies whose formats translate more naturally into algorithmic inputs will be rewarded. Companies like Substack will struggle because AI will dramatically increase the volume of content being created, while text remains incredibly difficult to build recommendation algos for.

In 1964, communication theorist Marshall McLuhan famously quipped that “the medium is the message.” I’m starting to wonder if that is outdated. If the algorithm is determining what medium is successful, what is the real determining factor?

The largest impact of AI is that it will fundamentally change our relationship with content itself. What is real, what is fake, what is made just for us—all of these things are going to be far murkier than they were before. We haven’t even covered the ethics of these tools today (this deserves a post in and of itself), but the whole thing is deeply complicated. The disruption potential on both an individual and societal level is something beyond any other invention of the past. And these changes aren’t happening in some hypothetical, far-off future—they are happening right now. Buckle up.
