Sponsored By: INDX

1

When Thomas Edison was inventing “moving pictures,” all he could imagine was filming a live performance of the opera. He did not anticipate Gone with the Wind or Jaws. Surprisingly, the craft of cinematography was not obvious beforehand, and it took decades to discover.

This happens with all radical new technologies: before they mature, our expectation of how they’ll work is only half right.

Now, considering recent advances in artificial intelligence, I believe we’re finally at the point where we can see what’s working, squint at it, and start to make out the shape of the half we didn’t—and perhaps couldn’t—imagine beforehand.

2

The first and most obvious thing most people imagine when asked to describe “AI” is a humanoid robot, like C-3PO, or perhaps a disembodied personality, like Samantha in Her.

But perhaps this is the AI equivalent of imagining film would only be used to record live on-stage performances? Sure, that could be part of it, but it misses the more important thing.

It’s natural to imagine AI this way because we assume machine intelligence follows stages of development similar to human intelligence. So we imagine systems that can hold conversations with us and perform a wide variety of simple tasks, like Siri and Alexa, rather than systems that can’t speak but can do one thing really well, like draw illustrations, play a video game, or use gyroscope data from a watch to detect what kind of workout we’re doing.

It’s becoming increasingly clear that the vast majority of useful AI is not about creating an artificial human personality. It is about creating tools that are endowed with intelligence, and capable of performing far more complex tasks than before.

In fact, this shift is so important, so overlooked, and likely to become so pervasive that I think it makes sense to classify it as a new revolution in software. Putting it in this context makes the future a lot clearer.

3

If you take an expansive view of the history of software, there are two big revolutions that stand out:

- The shift from command-line interfaces to graphical user interfaces, which enabled many more people to use computers by removing the need to memorize a long list of commands.

- The shift from single-player computing to globally networked computing, which made computers vastly more useful, and exponentially increased the number of people who wanted to use computers.

Now, considering recent developments in artificial intelligence, I believe we’re entering a third major revolution in software: the shift from simple, direct manipulation of data to tools that are intelligent and capable of complex, unpredictable output. Instead of software that mimics a paintbrush, we now have software that mimics the painter.

This principle will be extended to all types of software: research, accounting, programming, web design, writing, video editing, truck driving, etc. Anywhere you can train a machine learning model to perform a complex task, someone will train one to do it.

In a lot of fields this won’t mean taking humans out of the loop entirely. Instead, it will mean making humans more efficient by building intelligent new capabilities into their tools.

4

A perfect recent example of this is how the field of image generation is unfolding.

The original demos of products like Midjourney and DALL·E 2 were about typing in a prompt like “kangaroo in front of a beautiful sunset” and seeing an output like this:

(I generated this using Stable Diffusion for this post.)

It’s cool and stunning that it works! But this one-shot approach is really just the beginning.

A week ago, a new image generation algorithm called Stable Diffusion was released, but unlike Midjourney or DALL·E 2, it was open sourced. Anyone could download it and run it on their own hardware, or build it into their own products. The demos we’ve seen in the past week are pretty mind-boggling.

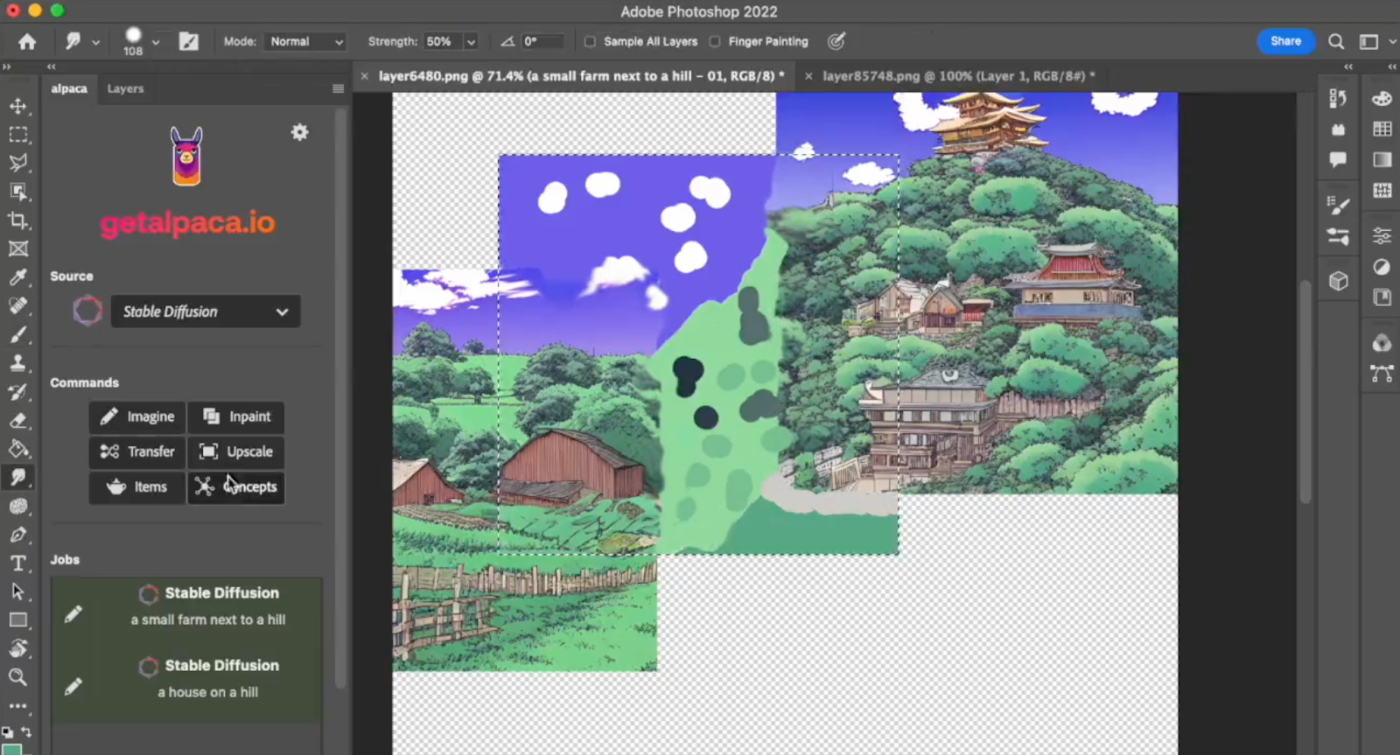

For example, someone made a Photoshop plugin called Alpaca that allows you not only to insert images generated by Stable Diffusion, but also to select regions of the canvas and have the AI fill in the details.

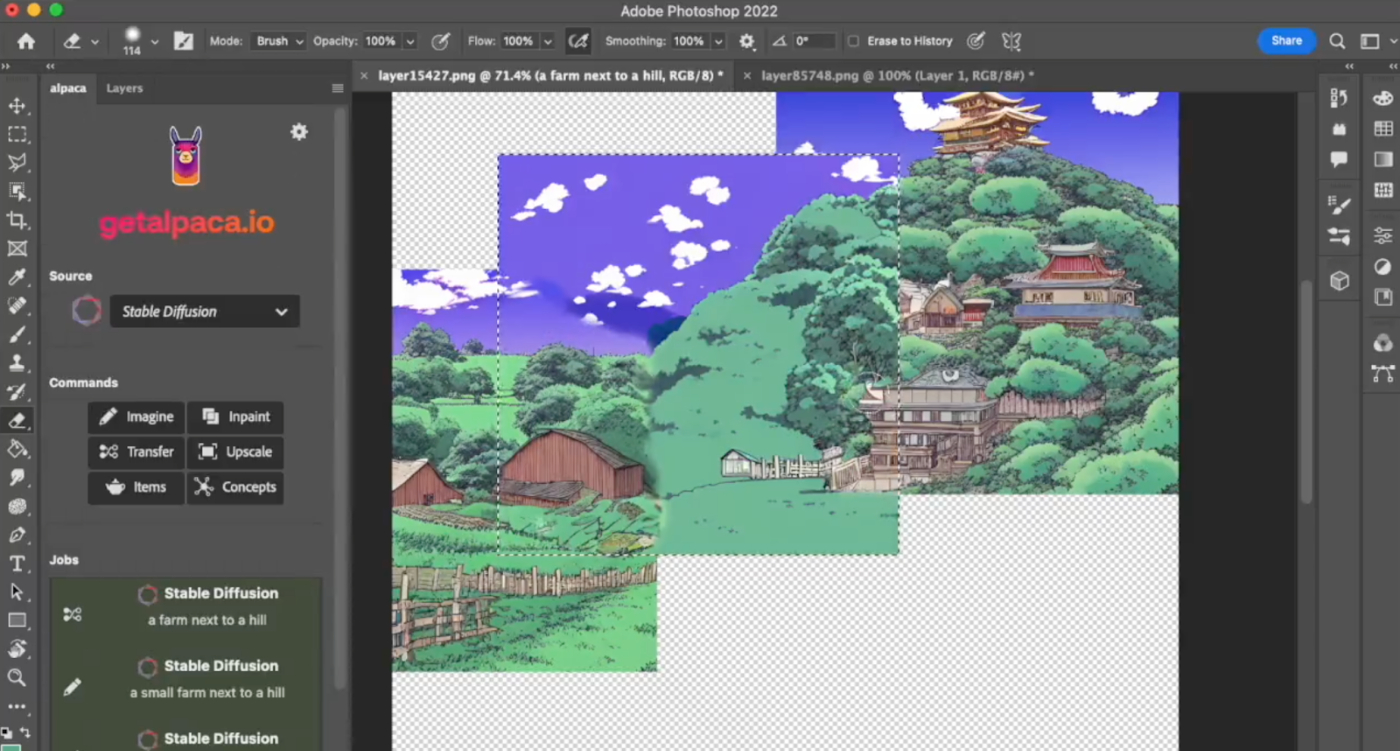

Here’s a screenshot showing a crudely painted “bridge” between two images that were AI generated. Using Alpaca, you can click a button, describe what you’d like the details to look like, and have it filled in for you.

Before:

After:

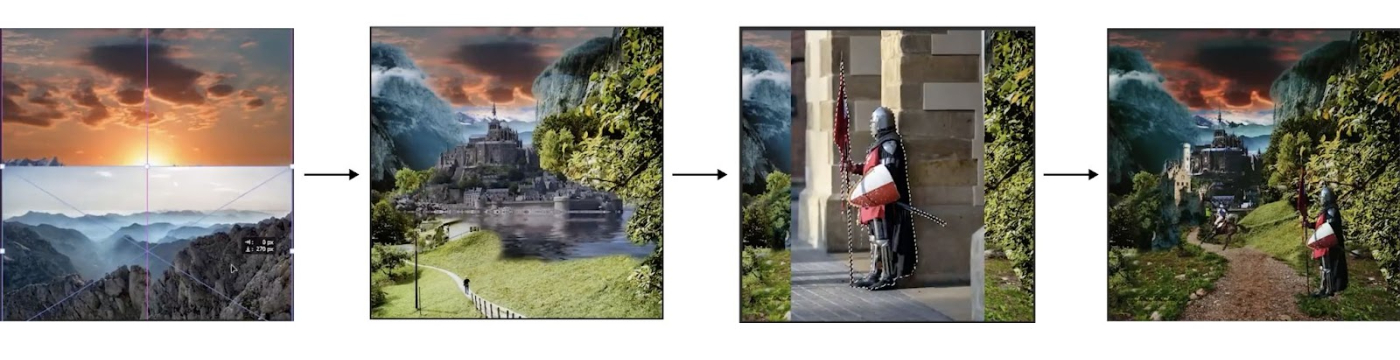

Another person went even further and created some pretty stunning illustrations by roughly photoshopping a lot of elements together into a sort of collage (sky, castle, knight, etc.) and feeding it into Stable Diffusion to create new original work.

Here are some screenshots of the collage coming together:

And here are some of the outputs that the algorithm gave back:

Pretty wild!

But beyond the “holy shit” feeling, it’s important to look deeper into what workflows like this tell us about how the future will unfold. Even the process of creating this collage shows how many layers of intelligence are involved at each step. Searching the web for the raw ingredients of the collage is made easier by AI. Separating objects of interest (like a knight) from the background is made easier by AI. The list could go on, and this is just one particular workflow.

Instead of just having one big AI that does everything, maybe the future is going to be about having lots of small, modular tools with built-in intelligence that can be combined and recombined to suit our needs.

It wasn’t what we imagined, but it’s a lot more useful.
