The finance intern—a tale as old as time. They work 100 hours a week, snort Adderall in the bathroom, and wear boat shoes in December. For this, they demand $160K a year. Now there is a new breed of intern that'll get you the numbers you need without the snarky attitude.

In fact, it's really friendly, it never sleeps, and it costs 20 bucks a month. It's still mind-bendingly stupid every once in a while though. I’m talking about GPT-4.

I think that with existing AI tools, a finance professional can increase their individual output by 50%. Workflows that require entire teams will collapse into one dude’s afternoons. It means we need far less entry-level or outsourced staff at banks, consulting firms, and investment funds. The software programs that are so dominant in finance today may be reduced to mere data storage programs.

Today I would like to show the results of two experiments that have shaped my thinking here:

- Analysis: How you can use ChatGPT to build models, analyze P&L statements, and then build visualizations

- Synthesis: How you can use Anthropic’s Claude to quickly parse through 10-K documents

Don't get too excited about GPT making you a ton of money via stock picking. Instead, its first job will be automating the tedium of analysts’ lives with improved workflows. While this is distinctly less sexy than stock picks, it has far bigger implications. By changing the types of labor that a finance professional does, it dramatically changes the skill sets of those who succeed in the industry. You need to be paying attention. The world is changing fast.

Analysis

As my colleague Dan argues, GPT-4 is a reasoning engine. Think of it as the world’s smartest and dumbest intern: It can do more than you think but tends to make up stuff if your directions are unclear.

We will guide our intern with an OpenAI tool called code interpreter. For the unfamiliar, the code interpreter is a “Python interpreter in a sandboxed, firewalled execution environment.” To translate: You can upload a spreadsheet and then the software can write code that will interpret, analyze, and visualize the data in the file.

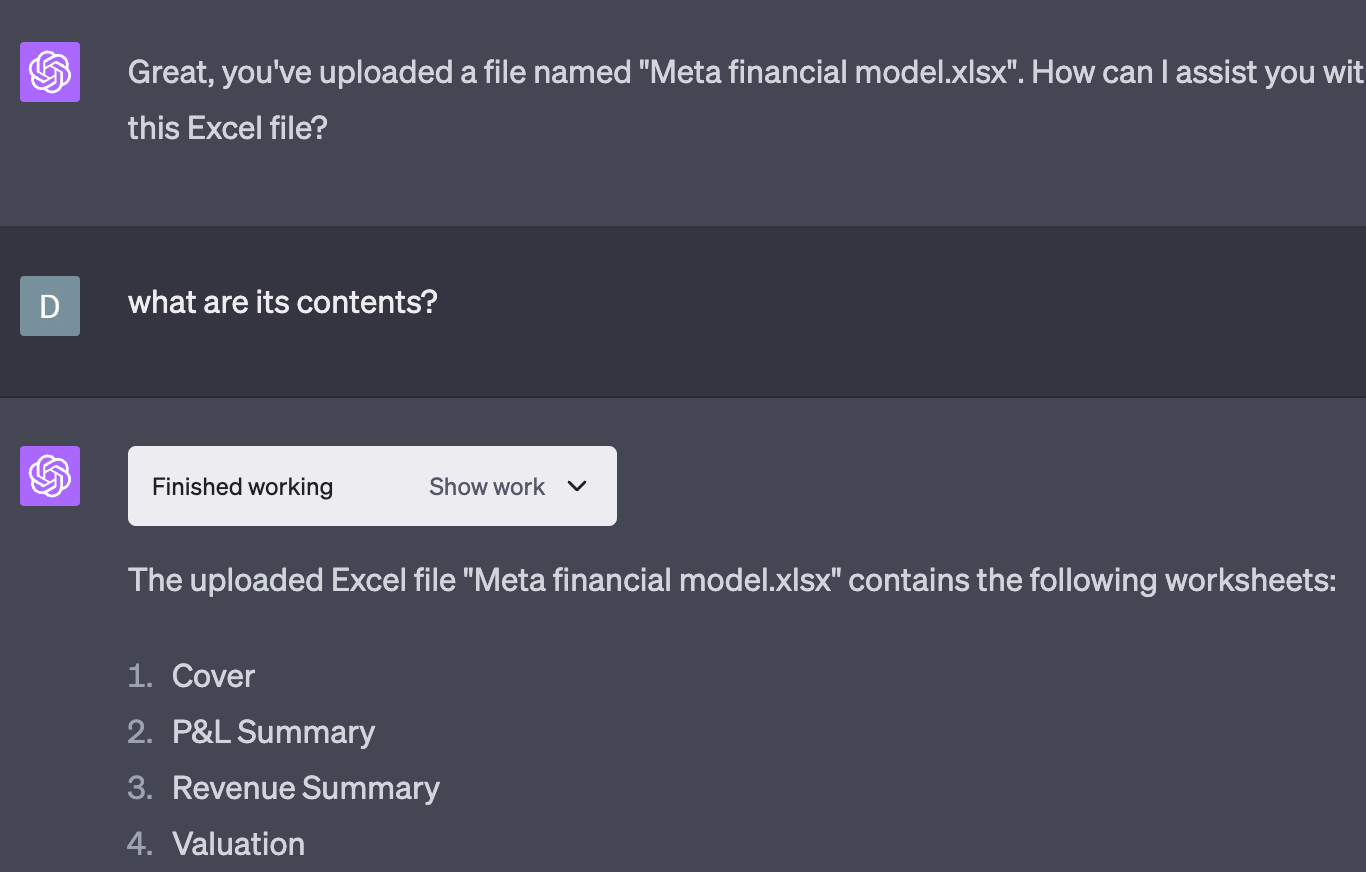

So let’s say, entirely hypothetically, that I had a bank’s financial model for Meta. I won’t say which bank (because obviously I don’t have this), but let’s say it rhymed with Schmolden Schmacks. I would just upload the bank’s financial model and let it start to analyze the content:

It then goes on to analyze and categorize all 14 tabs in the model for things like the income statement or valuation. It does so in 10 seconds, which is faster than I could do it.
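To give a feel for what that first pass might involve, here is a minimal sketch of sorting tabs into categories by keyword. To be clear, this is not the code ChatGPT wrote. The tab names and keyword lists are invented for illustration, and a real workbook would first need to be loaded with a spreadsheet library.

```python
# Hypothetical tab names; a real bank model will use its own labels.
TAB_NAMES = [
    "Income Statement",
    "Balance Sheet",
    "Cash Flow",
    "DCF Valuation",
    "Segment KPIs",
    "Notes",
]

# Assumed keyword lists per category (illustrative, not exhaustive).
CATEGORY_KEYWORDS = {
    "income statement": ["income statement", "p&l"],
    "balance sheet": ["balance sheet"],
    "cash flow": ["cash flow"],
    "valuation": ["dcf", "valuation", "wacc"],
}


def categorize_tab(name: str) -> str:
    """Return the first category whose keywords appear in the tab name."""
    lowered = name.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return category
    return "other"


categories = {name: categorize_tab(name) for name in TAB_NAMES}

for tab, category in categories.items():
    print(f"{tab:20s} -> {category}")
```

A keyword match is far cruder than what the model appears to do, since it can also look at the contents of each tab, but it shows the shape of the task.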

Note: I’ll have a few screenshots in here; you can read or ignore them, they are supplemental.

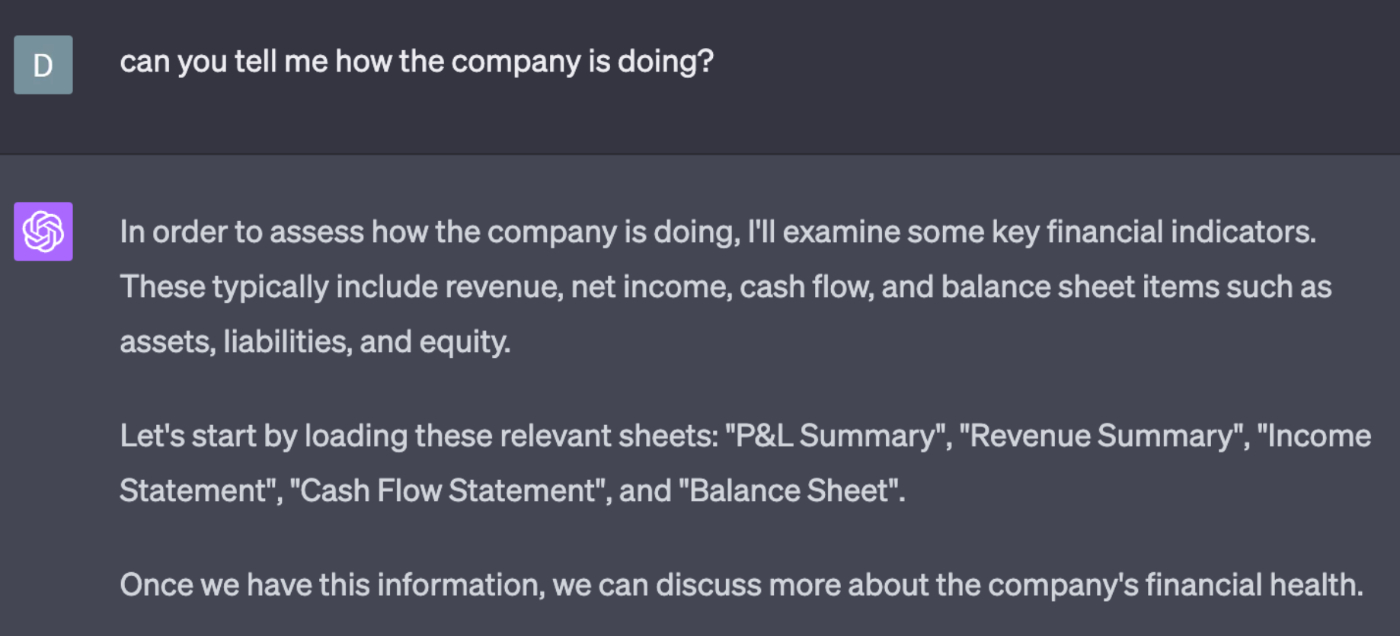

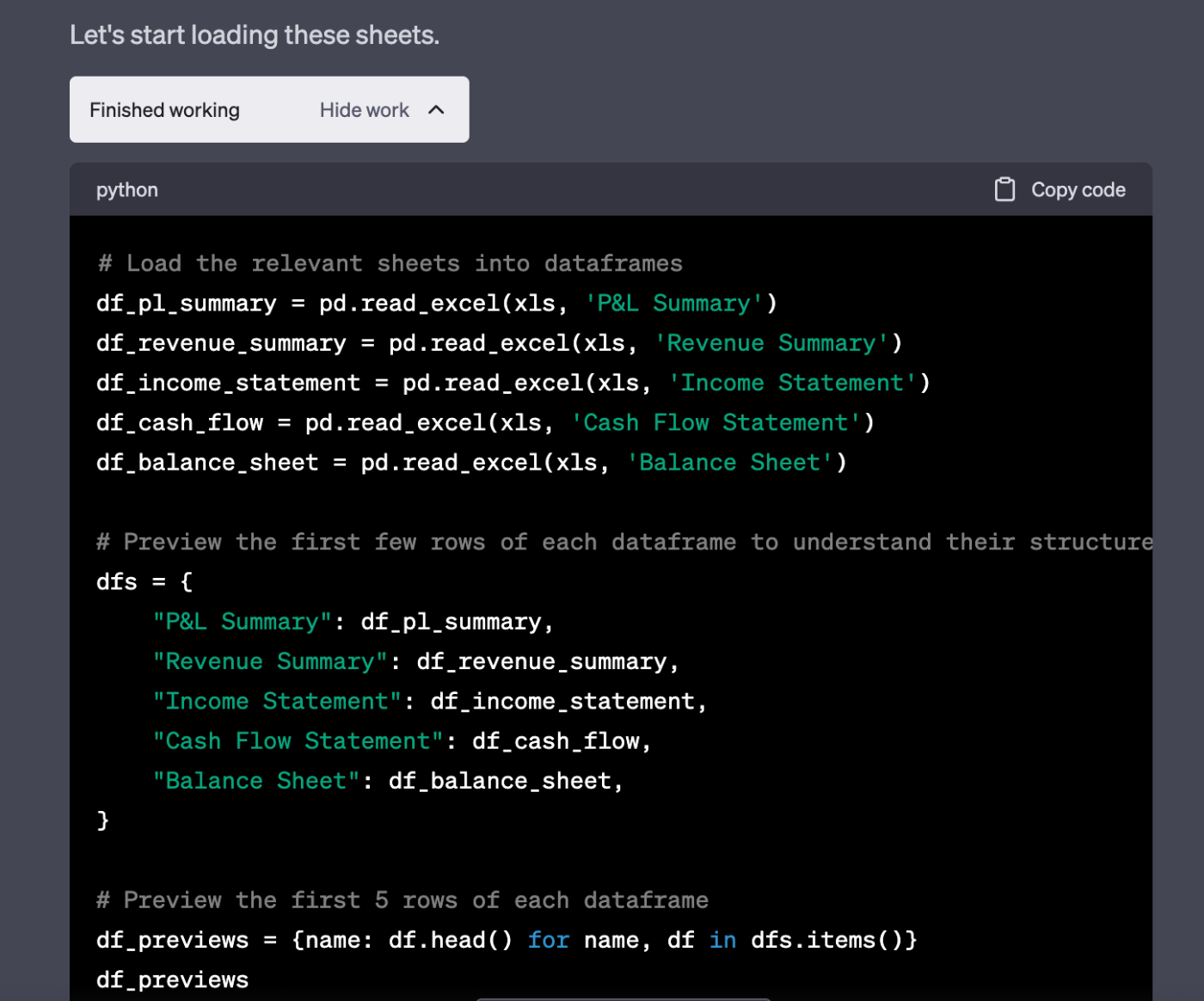

From there it is pretty simple. I ask some questions in plain old English. The AI will interpret my questions and then solve them with Python. Let’s pretend I’m not super familiar with the P&L of the company, so let’s start with the basics. I ask if it can “tell me how the company is doing.”

ChatGPT then goes on and writes Python code to analyze the contents of the relevant sheets. Notice how it selected the important tabs without my telling it which ones matter. Its reasoning capabilities are good enough that it knows what each tab is worth and how tabs like the income statement and the cash flow statement relate to each other. To do this, it runs little snippets of Python that pick apart the spreadsheet. If you want to double-check its work, you can always open the code, which is hidden by default.

By doing so, it is able to analyze the company's performance in under 30 seconds. It would take me at least 30 minutes to do a similar task. Ultimately, how a company is doing is a rather subjective question. I was testing whether the program, which is not connected to the internet, would be able to find what matters. It does so beautifully, immediately identifying the “Family of Apps” category that Facebook uses. This is the most important lens through which to view the company because it separates the ad business from the VR business. That Code Interpreter was able to find the correct row in this 14-tab model and tell me why it mattered in less than 30 seconds is pretty wild.

This is pretty amazing! But we can go further.

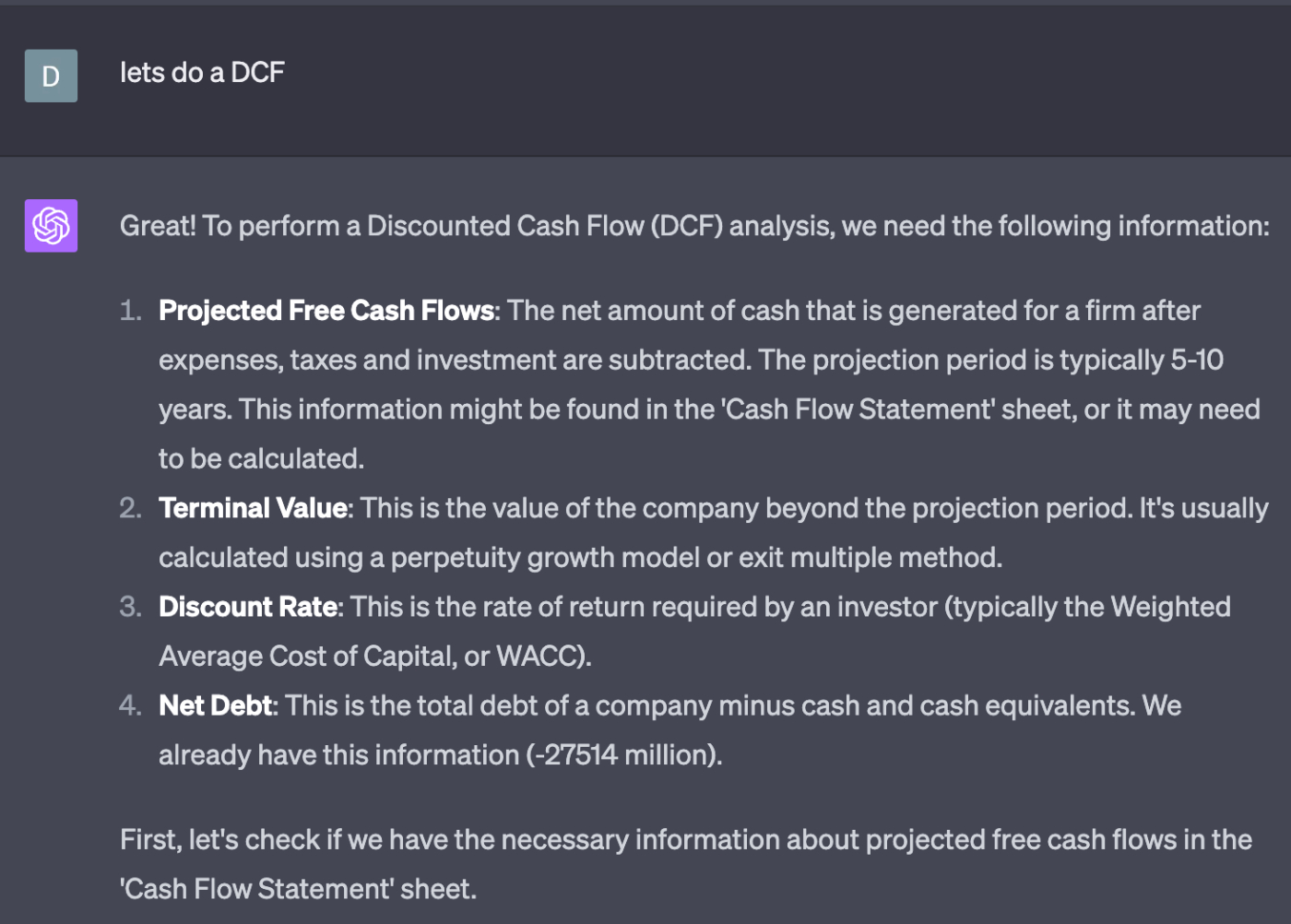

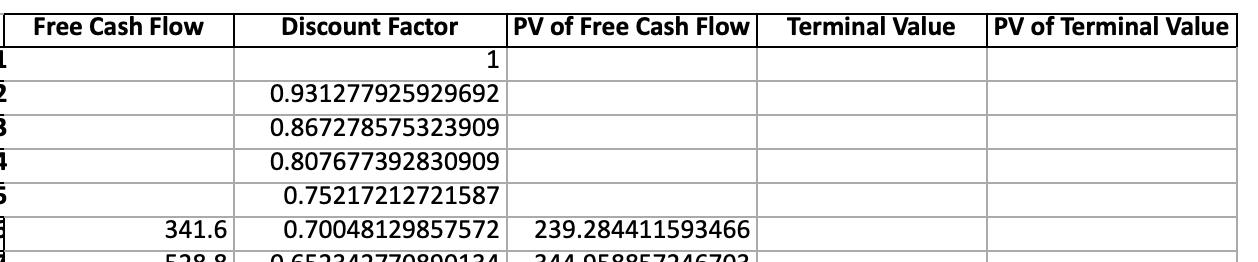

Let’s try something you would pay an entry-level analyst for, like a discounted cash flow (DCF) analysis. This is a way of valuing a company based on the value of its future cash flows. A DCF is tricky because you have to simultaneously do complex math, make some assumptions, and calculate terminal values. It is a mix of art and science that some people build their entire careers on.
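For readers who have never built one, the core arithmetic of a DCF fits in a few lines. The sketch below uses the textbook perpetual-growth version: discount each year's cash flow, then add a discounted terminal value. The cash flows, discount rate, and growth rate are made-up inputs, not Meta's numbers, and the function name is my own.

```python
def discounted_cash_flow(cash_flows, discount_rate, terminal_growth):
    """Present value of projected cash flows plus a perpetual-growth terminal value.

    cash_flows: projected free cash flows for years 1..N
    discount_rate: annual discount rate, e.g. 0.10 for 10%
    terminal_growth: assumed growth rate after year N
    """
    if discount_rate <= terminal_growth:
        raise ValueError("discount_rate must exceed terminal_growth")

    # Discount each projected year back to today.
    pv_cash_flows = sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )

    # Terminal value as of year N, then discounted back to today.
    terminal_value = (
        cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    )
    pv_terminal = terminal_value / (1 + discount_rate) ** len(cash_flows)

    return pv_cash_flows + pv_terminal


# Illustrative inputs only.
value = discounted_cash_flow([100.0, 110.0, 121.0], discount_rate=0.10, terminal_growth=0.02)
print(round(value, 2))
```

The math is the easy part. The art is in choosing the projections, the discount rate, and the terminal growth assumption, which is why small changes in those inputs swing the answer so much.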

I don’t give the system any of that info. I just tell it to “do a DCF” and away it goes:

However, there is a problem. Schmolden Schmacks’ bankers inserted random one-off calculations they considered important. They also put in all this fancy formatting (gotta earn client fees somehow). The formatting and calculations make the model easier for humans to scan but harder for a computer to read. To get around these hurdles, ChatGPT writes nine different blocks of code. It is able to resolve these issues in under 30 seconds. A pro would take significantly longer.
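As an illustration of the kind of cleanup involved, here is a small sketch that turns human-formatted cells into plain numbers. The formatting conventions it handles (dollar signs, thousands separators, parentheses for negatives, percent signs) are my assumptions about a typical banker spreadsheet, not a record of what ChatGPT actually wrote.

```python
def parse_cell(raw: str):
    """Convert a formatted spreadsheet cell to a float, or None if it is not numeric."""
    text = raw.strip()
    if text.lower() in {"", "-", "n/a", "na"}:
        return None  # blank or placeholder cell

    # Accountants often write negatives in parentheses: (123) means -123.
    negative = text.startswith("(") and text.endswith(")")
    text = text.strip("()")

    is_percent = text.endswith("%")
    text = text.replace("$", "").replace(",", "").rstrip("%").strip()

    try:
        value = float(text)
    except ValueError:
        return None  # a label or note, not a number

    if is_percent:
        value /= 100
    return -value if negative else value


for cell in ["$1,234.5", "(123)", "12.5%", "Total revenue", " - "]:
    print(repr(cell), "->", parse_cell(cell))
```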

You get the idea. It does this over and over again. It calculates WACC (though it asks for my opinion on a few things). It plays around with terminal value options (perpetual growth or a reasonable exit multiple). It does all this 10x faster than the world’s best analyst could. For the data that isn’t contained in the spreadsheet, it gives me a range of reasonable assumptions or asks if I have the data it needs to succeed.
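If those terms are unfamiliar, the sketch below shows the standard textbook formulas for WACC and for the two terminal value approaches. All inputs are invented for illustration, and the function names are mine rather than anything from the session.

```python
def wacc(equity_value, debt_value, cost_of_equity, cost_of_debt, tax_rate):
    """Weighted average cost of capital, with the usual tax shield on debt."""
    total = equity_value + debt_value
    equity_weight = equity_value / total
    debt_weight = debt_value / total
    return equity_weight * cost_of_equity + debt_weight * cost_of_debt * (1 - tax_rate)


def terminal_value_perpetual_growth(final_cash_flow, discount_rate, growth_rate):
    """Terminal value assuming cash flows grow at a constant rate forever."""
    return final_cash_flow * (1 + growth_rate) / (discount_rate - growth_rate)


def terminal_value_exit_multiple(final_metric, multiple):
    """Terminal value assuming the business is valued at a multiple of a final-year metric."""
    return final_metric * multiple


# Illustrative inputs only.
rate = wacc(equity_value=80.0, debt_value=20.0, cost_of_equity=0.10,
            cost_of_debt=0.05, tax_rate=0.25)
print(f"WACC: {rate:.4f}")
print("Perpetual growth TV:", terminal_value_perpetual_growth(100.0, rate, 0.02))
print("Exit multiple TV:", terminal_value_exit_multiple(150.0, 10))
```

The two terminal value methods can give quite different answers, which is presumably why the model asked for my opinion instead of silently picking one.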

By the end, I had a fully functional DCF analysis for Meta. It took maybe 10 minutes.

However, the AI isn’t perfect! I found errors along the way. Importantly, the errors were never with the math or logic—it just got confused with how the data was labeled. Plus, the analysis was very clearly not designed for human consumption. The CSV output looked something like this and was tough to read.

But with a week of experimentation, I bet I could fix the formatting. Again, this is the world’s smartest intern, not a fully functional data scientist. You have to have a pro looking over its shoulder to make sure nothing goes wrong. I imagine that in the next year or two, there will be finance-specific LLMs and ChatGPT plug-ins that can do the work of financial analysis even faster than what I’ve used here today.

But this still isn't it! There is more to finance than spreadsheet automation.

Info digest

One of the biggest pains in the world as an investor is “death wrestling with ogres,” as Kendall Roy calls it. To be more specific, the wrestling is the struggle to stay awake while reading long-ass documents. For Meta’s earnings model above, the company will release a 10-K. The most recent one was 126 pages. This document is the primary source of information about a company's performance, and it is supremely important and also supremely dull.

Using Claude, a large language model released by OpenAI competitor Anthropic, I can now outsource the work of reading it. On May 11, the company released a version of the model with a 100K token context window. This means that you can copy and paste documents as long as The Great Gatsby and the AI will be able to read them. People on the internet immediately began referring to this version as “Clong,” or long Claude. (This has nothing to do with finance, but I love the internet’s ability to give things lovable nicknames and felt it was important to mention.)

All you need to do is go to the document of choice, and then copy and paste the pages into Clong. You can then find and answer any questions, such as how Facebook defines a monthly active user or what risks we should be worried about.
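Before pasting, it can help to sanity-check whether a document will fit in the window. The sketch below uses a rough rule of thumb of about four characters per token for English prose. That ratio is an assumption: real tokenizers vary, so treat the result as a ballpark rather than a guarantee.

```python
import math

CONTEXT_WINDOW_TOKENS = 100_000  # window size cited above
CHARS_PER_TOKEN = 4              # assumption: rough average for English prose


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_answer: int = 2_000) -> bool:
    """Check whether the text plus some room for the reply fits in the window."""
    return estimate_tokens(text) + reserved_for_answer <= CONTEXT_WINDOW_TOKENS


sample = "word " * 50_000  # stand-in for a pasted document
print(estimate_tokens(sample), fits_in_context(sample))
```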

While theoretically an LLM could just use this document to build a DCF, Clong can only do text. If the document you are analyzing has graphs or charts, it’ll look something like this:

In context, the LLM can typically find the answers, but your best bet is to stick to questions answered in the text of the document. I imagine that at some point in the near future these models will be able to handle multiple modalities, including images, so this may just be a temporary problem. They will be able to read the tables containing the financial data and the charts showing company performance. Maybe, just maybe, you won’t even need to do the exercise I did with ChatGPT. You could plug in a 10-K and say “build me a DCF” and the system could take it from there.

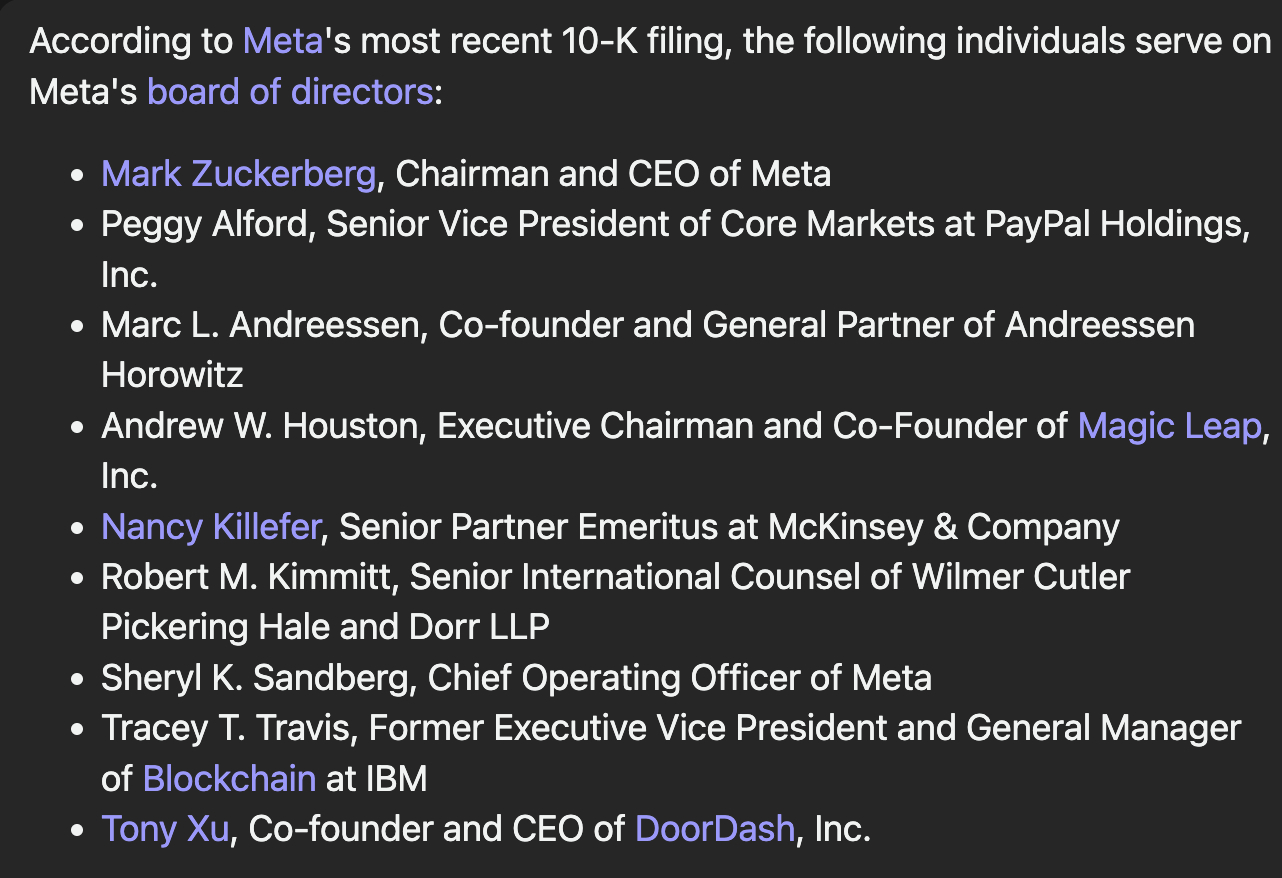

For now, it can do stuff like pull out Meta’s board makeup. If you pay attention, you’ll notice the system making a crucial error:

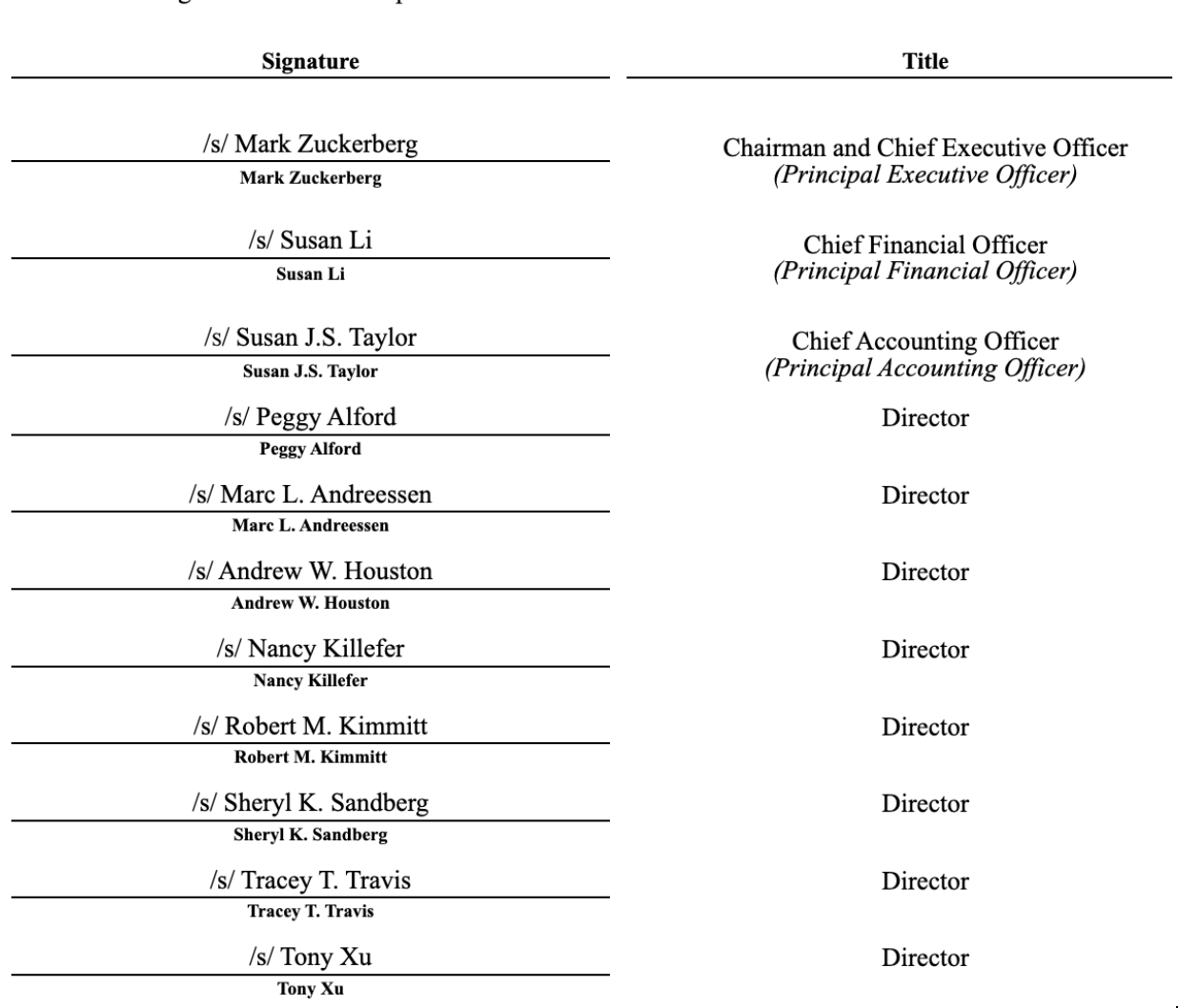

And here is the same information in the actual 10-K:

While Clong got all the names right, it hallucinated the jobs for Andrew Houston and Nancy Killefer. You’ll note that in the actual document, only the names are listed, not their job histories. Houston is the co-founder of Dropbox, not Magic Leap. Killefer never worked at IBM. When I pressed the system about these errors, it apologized and promptly made up new info. Houston worked at Founders Fund (still wrong) and Killefer was at McKinsey (wrong again).

After I asked for citations for these job titles, the system realized its error and then promised to only use citations going forward. You could actually get around all of this frustration by using a combination of vector databases, embeddings, and an LLM to query that database as we’ve previously shown at Every.
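The idea behind that setup is to retrieve the relevant passages first and then make the model answer only from them. Here is a toy sketch of the retrieval step. It swaps in bag-of-words vectors and cosine similarity as a stand-in for real embeddings and a vector database, and the passages are placeholder text, not quotes from any filing.

```python
import math
import re
from collections import Counter

# Placeholder passages; in practice these would be chunks of the actual document.
PASSAGES = [
    "Placeholder passage about the board of directors and its members.",
    "Placeholder passage about how monthly active users are defined.",
    "Placeholder passage about risk factors and regulation.",
]


def embed(text: str) -> Counter:
    """Stand-in for an embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, passages, top_k: int = 1):
    """Return the top_k passages most similar to the question."""
    query = embed(question)
    ranked = sorted(passages, key=lambda p: cosine(query, embed(p)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, passages) -> str:
    """Ask the model to answer only from the retrieved text."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the passages below. If the answer is not there, say so.\n"
        f"{context}\n\nQuestion: {question}"
    )


question = "How are monthly active users defined?"
print(build_prompt(question, retrieve(question, PASSAGES)))
```

Because the model only sees text that actually came from the document, it has less room to invent a résumé for a board member. It can still get things wrong, so the double-checking doesn't go away.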

Again, it’s the world’s smartest/dumbest intern! An intern will lie to your face, and Clong will lie to you too.

AI is a finance professional accelerator, not a replacement. All of this discussion, upload, and feedback took less than five minutes. Reading this thing in its entirety usually takes me an hour-plus. A big part of the reason I was able to move that fast, and to catch the errors, is that I am very familiar with Meta’s business and board. A typical equity analyst will cover 10–20 public stocks. With these systems, I think you could bump that to 30–40.

The implications of augmented analysis and synthesis are far-reaching.

Destroying workflows

Keep in mind all of these tools have come out only in the past two months. We haven’t even had a full quarter to wrestle with what this can do. I want to experiment with tossing in my general ledger and seeing if I can automate away our accounting needs. I think I can do sentiment analysis on earnings calls to test if executives are projecting false bravado. All of this is possible, and perhaps most importantly, all of it is possible for me to do. I am an idiot! If a sociology major from a farming town can do this, imagine what you can do.

In my personal work, I am already integrating these tools. When I’m investigating a stock, I’ll use Claude to better understand the risk questions. If I’m curious about a new P&L, I’ll have ChatGPT take a quick look to get me up to speed faster. I’m double-checking everything they tell me, and it is inelegant, but it is still way faster than doing it myself.

Already we are seeing these LLMs being integrated into existing finance workflow providers. Public, a Robinhood stock trading competitor, launched an AI chatbot on May 17 that allows you to ask questions about stocks.

The service is also available as a ChatGPT plugin. If you want a more comprehensive interface with similar capabilities, try out FinChat.

In the near future, I believe we will start seeing people formatting their P&Ls as “human format” and “GPT format.” All of the errors I’ve had, outside of hallucinations, are when the system gets lost because of formatting or funky labels.
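Nobody has defined what a “GPT format” would be, so the following is only my guess at the spirit of it: one record per line item per period, with plain numbers and no visual formatting. The table and its figures are made up for illustration.

```python
# Made-up "human format" P&L: one row per line item, one column per period.
human_format = [
    ["Line item", "FY2021", "FY2022"],
    ["Revenue", "1,000", "1,200"],
    ["Operating expenses", "(600)", "(700)"],
]


def to_long_format(table):
    """Flatten a wide, formatted table into one plain record per line item and period."""
    header, *rows = table
    periods = header[1:]
    records = []
    for label, *cells in rows:
        for period, cell in zip(periods, cells):
            # Parentheses mean negative; commas are thousands separators.
            negative = cell.startswith("(") and cell.endswith(")")
            amount = float(cell.strip("()").replace(",", ""))
            records.append({
                "line_item": label,
                "period": period,
                "amount": -amount if negative else amount,
            })
    return records


gpt_format = to_long_format(human_format)
for record in gpt_format:
    print(record)
```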

I know, you're disappointed to reach the end of my post without learning how to use AI to pick stocks. Unfortunately, it's not going to beat the supercomputers owned by hedge funds anytime soon. Hedge funds have used AI for trading for decades. Most prominently, Renaissance Technologies’ Medallion Fund, arguably the greatest investment vehicle of the past 20 years, used early forms of machine learning to post an average annual return of 66% before fees from 1988 to 2018. Everyone has access to GPT-4, so it is unlikely to give you a long-term competitive advantage over professional hedge funds that are chock-full of supercomputers and PhDs. You cannot beat them.

Where AI makes the greatest impact is in the tedious, by automating away humdrum work. I’ve chatted with a variety of public and private investors about these tools over the last week and the most consistent feedback has been, “So why do I need junior staff?” There is a strong likelihood that in five years the investor skill set and team makeup will be wildly different. The labor that was outsourced to low-cost regions may be entirely automated away.

Like the investors I’ve chatted with, I’m still grappling with what finance means in a world where analysis is a commodity. Frankly, I don’t know yet. But I do know that it will be very, very different. If you have used LLMs in your finance workflows or are building tools in this area, please reach out to [email protected]. I would love to chat.


Comments


It isn't a reasoning engine. The human is the reasoning engine, and the human is using GPT-4 to have a dialectical thinking process. Why do y'all get this so wrong?