
The problem with the internet is that we have too much and can find too damn little. In our palms, we hold the total sum of human knowledge, but most of the time, that isn’t enough to be genuinely useful. Due to a combination of SEO-optimized garbage and the technical challenge of organizing the world’s information, surfacing the answers we’re looking for on Google obliges us to navigate by knowledge star charts, triangulating our position in the digital universe with funky search terms like “best hiking trails AND iceland AND reddit.”

But “best hiking trails AND iceland AND reddit” is simply not how humans think. As I’ve discussed previously, much of our intellectual labor happens in the subconscious, in the elements of taste that make up how we process thought. We think in vague terms, like, “Flights to Iceland are cheap, I’ve heard the hiking there is pretty good.” Put simply: There is a vibe to how we think that is lost when we transform our questions into something Google search can understand.

The good news is that LLMs are the first technology that is explicitly trained on those subconscious elements of thought. Rather than rely on boolean searching—the borderline hieroglyphic set of logic rules you can use to make Google search more precise—you can fine-tune an LLM to think the way you do. That way, instead of mustering up the perfect search terms, you only have to wave in the direction of what you are looking for. You can type, “I’ve heard Iceland hiking is good, anything under five miles that's kinda epic?” and get a list of solid options instead of getting stuck in SEO hell.

I call it “vibes-based search.” And it represents a profound evolution in the way we find and interact with information online.

By making a large language model the principal technology in a search engine, vibes-based search evolves the process beyond carefully chosen keywords and star maps to something more direct and actionable. And because it understands how we think, it can also help us find what we want without knowing exactly what it is that we are looking for. Put another way, it represents an evolution beyond information and answers, to understanding.

I recognize that this description is a little dense and art-schooly. In plain English, LLMs have three new capabilities that change the nature of search:

- Intuitive leaps: LLMs can answer poorly written questions with spooky-accurate answers. Even when users only vaguely gesture towards what they are trying to say, LLMs can intuit what they actually mean.

- Ingest and understand new materials: Because it’s easy to integrate an LLM into an existing product, you can incorporate search into essentially any dataset. Even better, the LLM can “understand” the material, allowing you to surface answers, not just key phrases.

- Co-create new answers: Generative AI allows you to generate new insights from existing data or remix multiple datasets together easily. It means search becomes a creative piece of software, as flexible as a programming environment.

Vibes-based search is an amalgam of these three capabilities. It means that you can write, code, and create in a way that traditional search can’t match. Let me show you what I mean.

Dumb prompt, smart answer

I have a particular ear for melodies and absolutely no talent for lyrics. I’ll start humming a song that is stuck in my head, but I’ll make up my own words because I have no idea what the song actually is. I imagine that when my wife and I were dating, this was mildly cute. Now, going into year four of our relationship, I am confident it has progressed to annoying. (Though she would never complain.)

While driving last week, I heard screeching guitars in my head and spontaneously started singing, “Na na na na na na na na.” Normally, I would give up at this point without identifying the song. However, this is one problem that vibes-based search can solve. I pulled up Google and queried: “There is a song that came out that went like nah nah nahh nah na nahhh na naa nahhhh. what song is that? i think it was angry and emo.”

Boom—immediate success. Google’s take on LLM search gave me an answer.

Source for all images: Author’s screenshot.

It was My Chemical Romance! Great band. Great song, albeit one that is embarrassingly titled “Na Na Na (Na Na Na Na Na Na Na Na Na).”

LLMs are more intuitive than traditional search because they grasp the meaning behind your words, not just the words themselves. This makes vibes-based search helpful when you’re doing analytical work, too. For example, last week I wrote an article arguing that despite slowing investment, now is a great time to build a SaaS company because AI dramatically reduces costs. In the back of my head, I remembered stumbling across a data point about how software companies weren’t forecasting a ton of growth. Normally (though I admit that I should probably come up with a better note-taking system), it would be an excruciatingly difficult thing to find. I’d probably spend 30 minutes Googling, fumbling with Twitter search, and writing specific search queries in my email inbox. Instead, I just asked xAI’s Grok-2 model, “Are software companies growing fast this year?”

You’ll note that this is a terrible question. It’s incredibly vague, with no clear parameters about what I mean by “fast.” I don’t even say that I’m looking for data on it. But the system was able to intuit what I meant. Grok gave me a five-part overview of various statistics around startup growth rates, followed by a horizontal scroll of tweets related to the topic at hand. And there, like magic, was the tweet I had seen three months ago.

I have an experience like this multiple times a week. I’ll search for something weird or vague, ranging from dishes I can cook with specific ingredients to the definition of a technical concept, and the answer will appear. Hours of work that I used to have to put in just to navigate the internet have evaporated. And part of that comes down to no longer having to rely on the same old datasets.

Attach the AI to any dataset

A search algorithm is typically constrained by the data it has access to. Google can scour the web. The search bar in your Photos album can look at the pictures you have uploaded. Vibes-based search is different because, in addition to surveying the datasets it’s plugged into (including ones that aren’t all that searchable on the public web, such as in the Twitter example earlier), a model comes equipped with a basic knowledge of the world. The most useful heuristic for this capability is that the LLM is capable of “understanding.” If you search for information in a dataset, it’ll use its trained knowledge and intuition to understand what you are really looking for.

Because most chatbots are designed to have large context windows—i.e., when you prompt the model, you can copy and paste tens of thousands of words—you can pair that training with your own datasets with relatively low friction. I have folders full of PDFs of papers and articles on my desktop. Instead of desperately scanning them for a quote I need, I’ll often drop them into Anthropic’s Claude model, and ask it to pull any relevant passages for my idea (along with the page number, so I can double-check). Every CEO Dan Shipper does similar exercises with his notes and journal, using ChatGPT to search and analyze datasets that would otherwise be too large or unwieldy to scan through.
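For readers who want to try the PDF workflow above more systematically, here is a minimal sketch in Python of how a stack of documents might be packed into a single prompt. It assumes you have already extracted each PDF’s text page by page; `build_prompt` and the 50,000-word `WORD_BUDGET` are hypothetical stand-ins for illustration, and word count is only a rough proxy for a model’s real token limit. Labeling every page with its file name and page number is what lets you ask for citations you can double-check. No model API is called here; you would paste or send the resulting prompt to whichever chatbot you use.

```python
# A minimal sketch: pack page-labeled document text into one prompt.
# build_prompt and WORD_BUDGET are hypothetical; no model API is called.

WORD_BUDGET = 50_000  # assumption: a rough stand-in for "tens of thousands of words"


def build_prompt(idea: str, documents: dict[str, list[str]]) -> str:
    """Label every page with its source file and page number so answers can cite them."""
    parts = [
        f"My idea: {idea}",
        "Quote any passages below that are relevant to my idea.",
        "For each quote, give the file name and page number so I can check it.",
        "",
    ]
    for filename, pages in documents.items():
        for page_number, text in enumerate(pages, start=1):
            parts.append(f"[{filename}, p. {page_number}]")
            parts.append(text.strip())
            parts.append("")
    prompt = "\n".join(parts)

    # Word count is a crude proxy for tokens, but it flags oversized prompts early.
    word_count = len(prompt.split())
    if word_count > WORD_BUDGET:
        raise ValueError(f"Prompt is {word_count} words; split the documents into batches.")
    return prompt


if __name__ == "__main__":
    docs = {"saas_growth.pdf": ["Intro text.", "Software firms forecast slower growth."]}
    print(build_prompt("AI lowers the cost of building SaaS", docs))
```

Raising an error instead of silently truncating keeps every page labeled, which matters because a quote is only useful if you can find it again.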

You can also apply LLMs to existing datasets. My favorite application is Consensus, which I use to browse through academic research. According to Consensus, the tool “run[s] a custom fine-tuned language model over our entire corpus of research papers and extract[s] the Key Takeaway from every paper.” From there, it’ll score the relevance of the takeaways based on the search query you put in.

This is remarkably different from Google Scholar, Consensus’ biggest competitor. One problem in science is that there are too many papers being published. From 2016 to 2022, the number of academic papers indexed in major databases increased from 1.92 million to 2.82 million. It is simply impossible for any researcher to read them all. Academics end up relying on the papers with the most citations, whether or not they contain the most compelling research, because citations are a handy shorthand for quality.

LLMs allow you to drill down into the studies, because they can read all those papers for you and filter them on the basis of content, not citations. Similar tools exist for patents, and I imagine they will soon be available for essentially any type of dataset that requires extensive reading.
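To make the content-versus-citations distinction concrete, here is a toy sketch in Python. It is not how Consensus works: by its own description, Consensus runs a fine-tuned language model over its corpus, whereas this example swaps in a simple bag-of-words cosine similarity between the query and each paper’s takeaway. The `Paper` class, both ranking functions, and the two sample papers are hypothetical and invented purely for illustration.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    takeaway: str  # a one-line summary, like the "Key Takeaway" an LLM might extract
    citations: int


def tokens(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for real language understanding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_by_content(query: str, papers: list[Paper]) -> list[Paper]:
    """Order papers by how closely their takeaway matches the query."""
    q = tokens(query)
    return sorted(papers, key=lambda p: cosine(q, tokens(p.takeaway)), reverse=True)


def rank_by_citations(papers: list[Paper]) -> list[Paper]:
    """Order papers by citation count alone, ignoring the query."""
    return sorted(papers, key=lambda p: p.citations, reverse=True)


if __name__ == "__main__":
    papers = [
        Paper("A", "Exercise improves sleep quality in older adults", citations=12),
        Paper("B", "A survey of deep learning architectures", citations=9000),
    ]
    query = "does exercise improve sleep"
    print([p.title for p in rank_by_content(query, papers)])  # ['A', 'B']
    print([p.title for p in rank_by_citations(papers)])  # ['B', 'A']
```

Ranked by citations, the heavily cited survey wins no matter what you asked; ranked by content, the paper that actually addresses the question rises to the top. An LLM-based tool replaces the crude word overlap with something closer to reading comprehension, so it can match “improve” to “improves” and far subtler paraphrases besides.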

Co-create with the AI

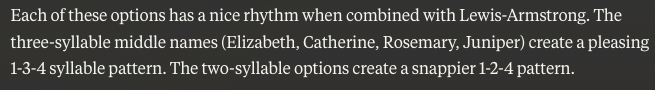

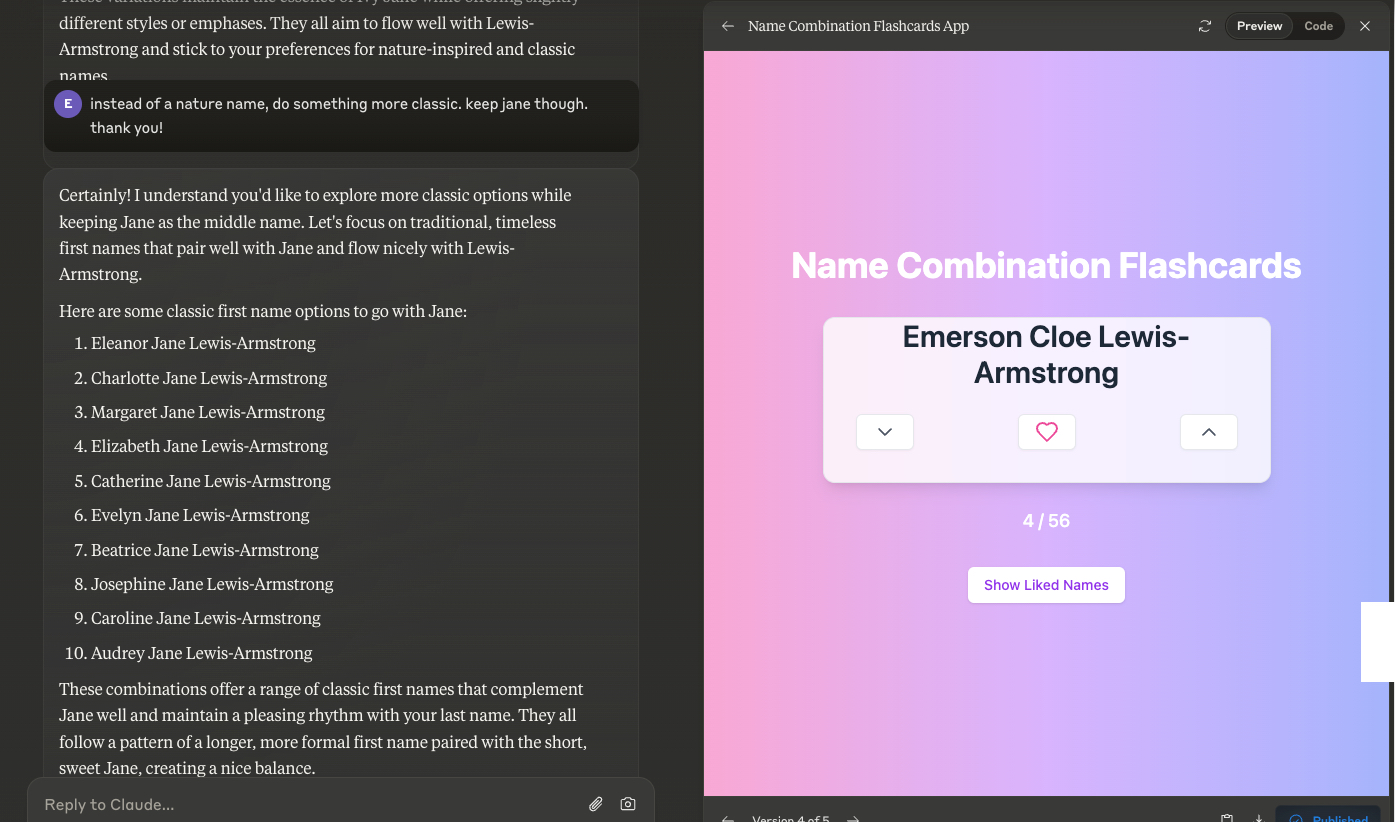

My wife and I are celebrating our third anniversary this year, and we have yet to agree on a shared last name. We just haven't gotten around to it. This isn’t a big deal to us, but it is somewhat problematic given that we will have to name our newborn in eight weeks or so. We need not only a last name, but first and middle names, too. To make matters even more complicated, we have opposite tastes in names. She loves classic, gender-neutral names like Austen and Sutton, while I love a good nature noun, like Willow. Because my wife is an English Ph.D. candidate, she also has strong feelings on things like phonetic patterns, while I have a soft spot for family names.

This is, like, way, way too many variables for any Google query. You can’t make a list of all of them, add “Reddit” at the end, and hope for the best. However, LLMs excel at this type of multi-variable problem. So we’ve been using Claude to generate ideas that bring together our respective sensibilities.

Below is my first search with Claude. Note that I was handwavy regarding what we were looking for.

Instantly, we had about 20 options from Claude. It had searched for what we were looking for, thought through our parameters, and created net new name combinations that I hadn’t seen before. Most impressively, it intuited what I meant by “have the right rhythm.”

This is vibes-based search at its best. Not only did it pick up on the sensibility of the type of names we are drawn to, with a balance between nature and classics, it also applied the vibe by creating new data for me to search through.

We can take this even further. Now that Claude had scouted out a list of acceptable names, I needed a better interface to help me rank the options. Claude Artifacts lets you whip up interactive web apps on the fly, turning Claude's answers into little digital toys that you can share with others. And you don’t have to code a single line.

Using Artifacts, I made a web app that allows us to flip through the names and give a thumbs up to the ones we really like. By “made,” I mean I told Claude that I wanted an app, and the AI did the rest. You can try it yourself.

This isn’t search in the traditional sense. It also isn’t the "answer engine" that some AI startups are billing themselves as. It is new, weird, different.

You can transform any type of dataset with vibes. Got a large amount of financial data? Try Julius, which can easily answer questions about it and create visualizations out of spreadsheets. Data in your CRM? Try Attio. Not sure where the data is stored? Try AWS. Even if you roughly know where the data is stored—such as my gut sense that the software growth point came from X—AI allows you to search through it more efficiently. Essentially every category of software and knowledge has large amounts of difficult-to-parse data that would benefit from vibes-based search.

What are the opportunities?

Google can almost certainly integrate LLMs into its search results. It already has, as I demonstrated above. However, vibes-based search isn’t merely about initial result efficacy. It’s about co-creating knowledge. It’s my wife and me looking for baby names, refining some of them, testing out new combinations, and searching for more names to add to the mix. It’s a digital ouroboros where search leads to new acts of creation, which brings you back to search, which leads to creating something even bigger and crazier—all in one interface.

The natural question is how startups can use this tech to win market share. There are three attributes that suggest that a market is ripe for vibes-based search:

- Data complexity and volume: Markets with vast, complex datasets that are difficult to navigate using traditional search methods

- Need for intuitive understanding: Industries where users often struggle to articulate their exact needs, or where context is crucial

- Google is doing it badly: Kind of a joke, kind of not. Google Scholar is only mildly useful. Google Travel is bad. If you poke around, you’ll find all sorts of rough edges that are ready to be peeled off.

Thanks to the intuitive capabilities of LLMs, we're entering an era where our tools can match our vibe. I am being deliberately colloquial with my word choice here. “Vibe” is appropriate because this evolution in search feels casual and easy to users. Most of the time in technology, an increase in capability is accompanied by an increase in complexity: Formulas in Excel made you more capable than a calculator but required you to learn a new type of language. Vibes-based search is magic because it does the opposite. You type dumber queries and get smarter results. When paired with the ease of attaching an LLM to a previously unsearchable dataset, vibes-based search becomes a new category of software. It’s one that is already attracting startup interest: You.com just raised $50 million to pursue a similar vision, while Perplexity is looking to raise $250 million at a $3 billion valuation. The race is on.

Four years ago, I made a harrowing choice. Despite a lifetime of creating spreadsheets, no writing experience outside of Instagram captions, and only 25 subscribers to my name (most of whom were blood relatives), I decided that I would become a writer.

Now, I’m publishing two full essays a week to an audience of more than 78,000 people. Since I started publishing online, I’ve reached number one on Hacker News multiple times, written cover articles for magazines, and been read by my heroes. Going from no experience and no skills to this outcome has only been possible with one thing: AI.

Large language models are a gift. They are not replacements for creativity or skill, as some have feared—they’re amplifiers of ambition. You can create better work, faster.

I’m teaching a course called How To Write With AI that will show you how. We will use techniques like the ones discussed in this piece to improve our writing. If you want to join us, registration ends September 15, and you can sign up here.

Evan Armstrong is the lead writer for Every, where he writes the Napkin Math column. You can follow him on X at @itsurboyevan and on LinkedIn, and Every on X at @every and on LinkedIn.

