If you have ever worked for an asshole, you’re likely familiar with the 9pm email titled “Pls fix.” Your boss, busy with the drive between the lake house and the beach house, is unable to give you feedback on a deliverable; they simply want you to “make it better.” I’ve gotten that email. You’ve gotten that email. And we lowly knowledge labor serfs, united in our poverty and consternation, have probably had the same response: “Fix what?”

Essentially, your boss is asking you to understand their intentions based on historical context and your personal capability. A good boss will highlight the sentences they want changed, the icons they want adjusted, the companies they want you to invest in. They’ll tell you exactly what they want and you can respond appropriately. Unfortunately, bad bosses outnumber good bosses by exactly 2.2B to one, so most people will never experience the euphoria of satisfaction in the workplace.

However, if you’ve ever been a manager, you know that a “good” direct report is able to magically transform your vague idea into a really great outcome. You can say, “find me a stock to invest in,” and a good employee will come back with something dazzling. The less time you have to spend specifying what you are looking for, the more valuable that employee becomes.

This input/output dynamic of feedback and deliverables isn’t restricted to the overworked zealots of finance. In programming, the computer is the employee and the programmer is the manager. But the computer is a peculiar and frustrating direct report: it only understands incredibly precise commands. In return, you get incredibly precise outputs. There are no surprises, only inevitabilities.

What makes large language models fascinating is that they represent the first time you can give a computer a generic, natural-language prompt and receive a directionally accurate, if rarely exactly-what-you-were-thinking, output. Previously, programming a computer meant thinking step by step. Now you can give a computer a starting point and an end goal, and it will fill in the steps for you. This is a big deal.

This is a fundamental improvement in the way we interact with technology. Just as C++ is an abstraction layer on top of machine code, AI is an abstraction layer over lower-level thinking. In other words, with AI you don’t have to be as specific as you would when programming, or as precise as you would in an Excel formula. Instead, you can give a somewhat generic prompt and still get a useful output. You can be a bad manager and still get the results you need from your robot employee.

This gets even more awe-inducing when we consider the breadth and depth of these systems’ capabilities. In previous experiments, I used GPT-4 to build financial models with prompts like “let’s do a dcf” (a discounted cash flow analysis). I’ve had it check data quality in an audit with the prompt (and I promise this is how I typed it) “yo is the data good?” In both cases the AI translated my poorly communicated intentions into a quality analysis.

Using productivity software to get more information labor done isn’t new. Over the last ten years, companies like ServiceNow (workflow platforms) and UiPath (robotic process automation) popularized workflow automation, but their products all relied on some manual setup. The tools are powerful, but they are confusing. To really make them work, you run into the same problem as programming: very specific inputs to get very specific outputs.

However, AI can change all that. Previous software paradigms aren’t nearly as flexible, powerful, or imaginative as AI systems are. Once LLMs are fully deployed in productivity software, there will be a fundamental reimagining of what labor is and what it looks like. There will be a much tighter relationship between underlying databases and the labor done on top.

I’ve tried dozens and dozens of tools looking for an example that proves this thesis, and I’ve finally found one. My proof is Composer. It does two things:

- Uses LLMs to easily automate investing workflows

- Uses LLMs to automate lower-level thinking, telling you which workflows to build

Automating workflows

Composer is a tool for investing.

The average retail trader doesn't have access to the technology that allows for really complex trades. Composer solved that problem by building software that lets consumers build, backtest, and then execute trades. If Robinhood is for drooling lemmings addicted to the adrenaline rush of gambling their life savings, then Composer is for physics PhDs trying to make enough side money to justify spending 15 years in uni. The company has been around for three-ish years and has done over $1B in trading volume across more than 900K trades.

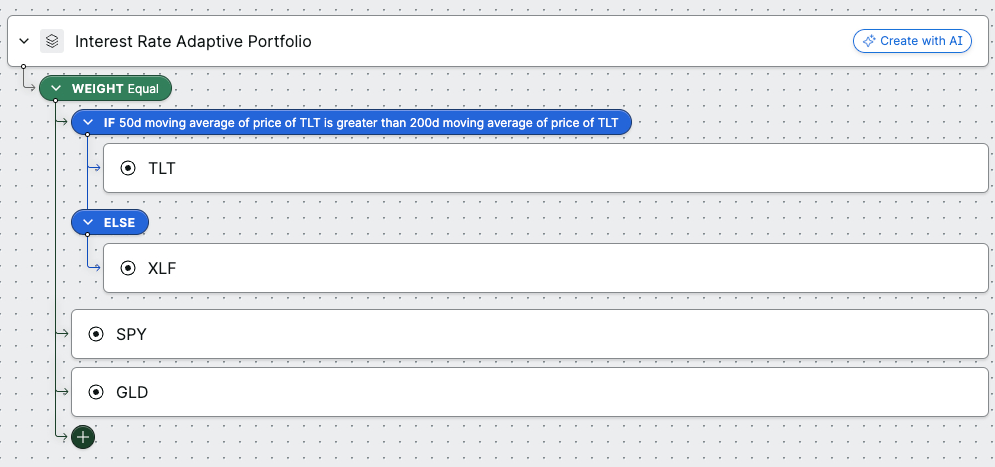

Before they integrated AI, if you wanted to build a trade in Composer, your primary tool would be a flow chart.

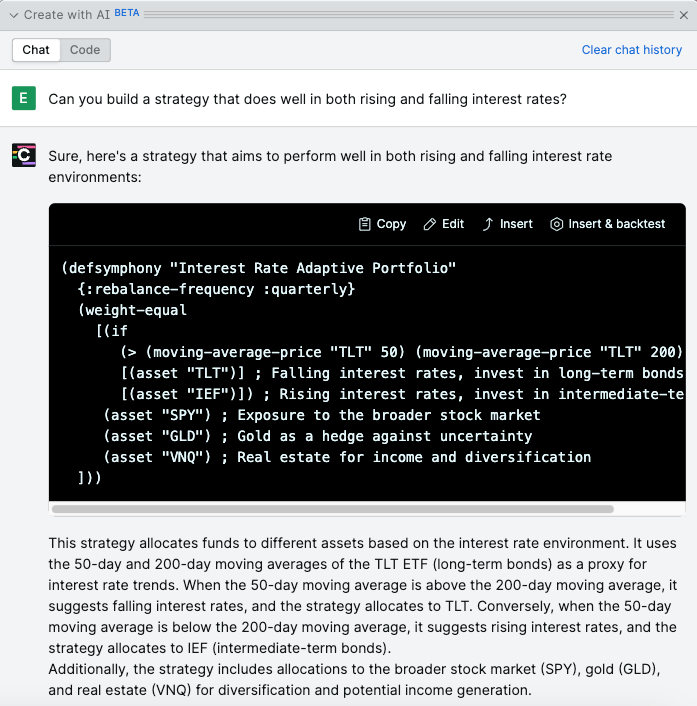

Then that trade would get compiled into a set of programming instructions. Let’s say you want to build a trade that profits whether inflation goes up or down. The code looks something like this:

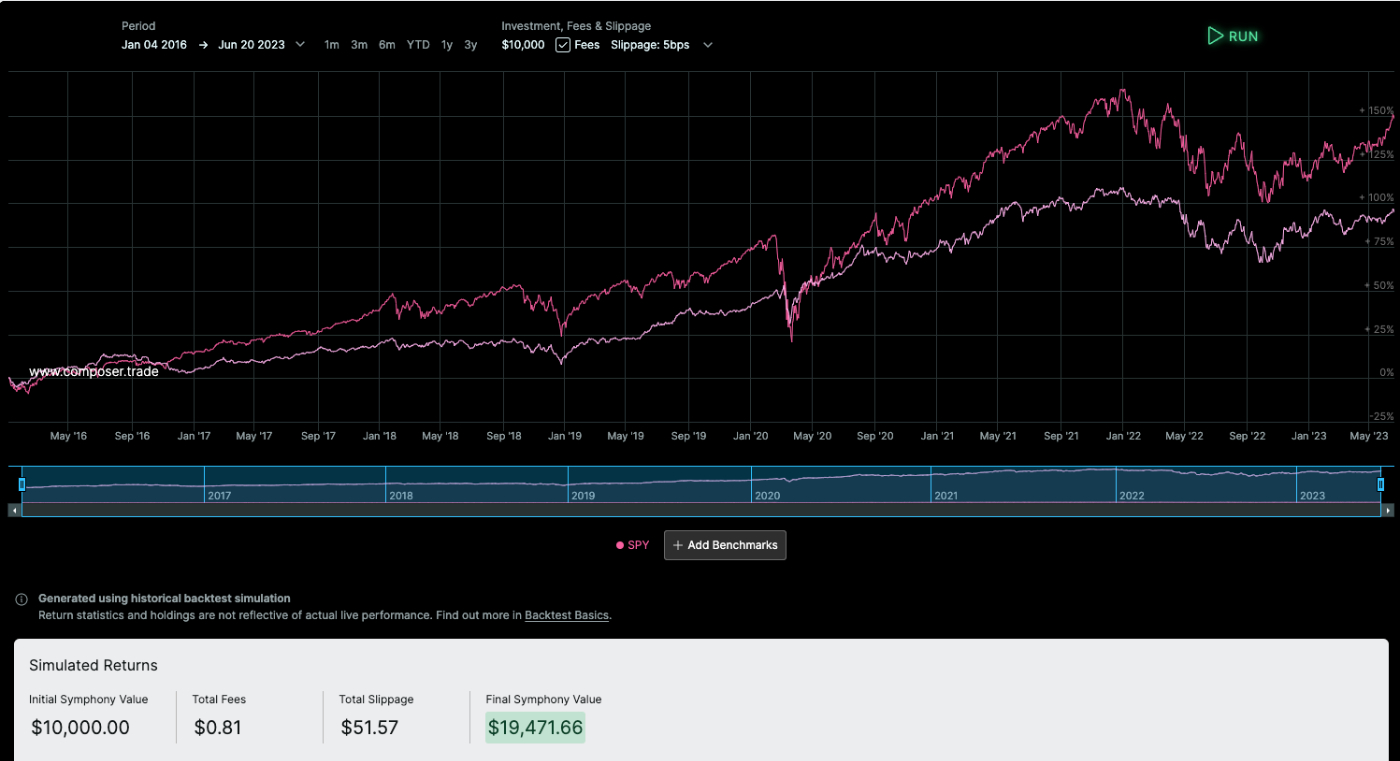

Then you could backtest the trade to see how it would have performed over the last few years.
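To make the idea of a backtest concrete, here is a minimal sketch in Python. It is not Composer’s backtester, whose internals aren’t described here; it assumes a fixed-weight portfolio that is rebalanced back to its target weights every period, and the tickers `AAA` and `BBB` and their returns are hypothetical placeholders, not real market data.

```python
from typing import Dict, List


def backtest_fixed_weights(
    weights: Dict[str, float],
    period_returns: List[Dict[str, float]],
    starting_value: float = 10_000.0,
) -> List[float]:
    """Return portfolio value after each period, rebalancing to `weights` every period."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    value = starting_value
    history = [value]
    for returns in period_returns:
        # Because we rebalance to the target weights at the start of each period,
        # the portfolio's return is simply the weighted average of asset returns.
        portfolio_return = sum(w * returns[symbol] for symbol, w in weights.items())
        value *= 1.0 + portfolio_return
        history.append(value)
    return history


def max_drawdown(history: List[float]) -> float:
    """Largest peak-to-trough decline, as a fraction of the peak."""
    peak = history[0]
    worst = 0.0
    for v in history:
        peak = max(peak, v)
        worst = max(worst, (peak - v) / peak)
    return worst


# Hypothetical 50/50 strategy and made-up per-period returns, for illustration only.
weights = {"AAA": 0.5, "BBB": 0.5}
returns_by_period = [
    {"AAA": 0.10, "BBB": -0.02},
    {"AAA": -0.05, "BBB": 0.03},
]

history = backtest_fixed_weights(weights, returns_by_period)
print([round(v, 2) for v in history])  # → [10000.0, 10400.0, 10296.0]
print(round(max_drawdown(history), 4))  # → 0.01
```

A real backtest also has to account for trading costs, slippage, and data quality, all of which this sketch ignores.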

Now this is really cool! Before Composer, it was really challenging to do any of this if you didn’t work on Wall Street. However, learning how to use this tool isn’t easy. A trader is unlikely to make more money by being a superior flow chart maker—the money comes from the output, not the workflow itself.

Here is where we sprinkle some AI magic dust: you can use natural language to automate the flowchart construction. Simply click the create with AI button in Composer and ask: “Can you build a strategy that does well in both rising and falling interest rates?” And then, beautifully, easily, suddenly, there is a complete working trade with stock symbols and everything.

In other words, AI automates an entire workflow that used to be done manually.

To get there, Composer co-founder Ben Rollert told me, the team got early access to the GPT-4 API. They send the user’s original query plus a ~3,000-word prompt that instructs the AI on how to translate that query into code. Yes, you read that correctly. They built an entire workflow automation, including output in a custom programming language, in a single (albeit very long) prompt.
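The pattern Rollert describes, a long fixed instruction prompt paired with the user’s plain-English request, can be sketched as below. This is an illustration under assumptions, not Composer’s code: the instruction text, the function names `build_request` and `add_feedback`, and the example replies are hypothetical. The role-tagged message list mirrors the shape OpenAI’s chat API accepts, but the sketch deliberately makes no network call.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical stand-in for the ~3,000-word instruction prompt. The real prompt
# is not public; this short version only illustrates the role it plays.
INSTRUCTIONS = (
    "You translate investing ideas into strategy code. "
    "Use only tickers that exist on US exchanges. "
    "Respond with code only, no commentary."
)


def build_request(instructions: str, user_query: str) -> List[Message]:
    """Pair the fixed instruction prompt with the user's plain-English idea."""
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": user_query},
    ]


def add_feedback(history: List[Message], model_reply: str, feedback: str) -> List[Message]:
    """Return a new history with the model's last answer and the user's follow-up appended."""
    return history + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": feedback},
    ]


messages = build_request(
    INSTRUCTIONS,
    "I think software multiples are going to go up over the next few months.",
)
# A real system would send `messages` to the model here; this placeholder fakes the reply.
draft = "<strategy code returned by the model>"
messages = add_feedback(messages, draft, "Remove ETFs and give me individual micro-cap stocks.")

for m in messages:
    print(m["role"], "->", m["content"][:60])
```

Keeping the conversation as a growing list is what lets you give the model intern-style follow-up feedback without restating the whole strategy; the hard engineering, as Rollert notes, sits in whatever parses and executes the code that comes back.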

This was a pretty big shift in my understanding of what is possible with prompt engineering. In my previous experiments, I got excited because I was able to do conversational data analysis on discrete files without relying on API calls or prompt engineering. Composer’s system showed me what was possible if the prompt was detailed enough.

Perhaps most surprising was the product’s timeline to launch. Rollert told me, “Building [Composer] took three years, adding the AI took two weeks.” They didn’t construct Composer with LLMs in mind, but it turned out to be the perfect fit. GPT-4 couldn’t do this on its own, but when paired with Composer, something clicked. The hard part wasn’t integrating the AI! It was building the backend to handle what the AI sent back.

However, the more interesting thing is the second point of magic—automating thinking.

Automating thought

If you rerun the prompt asking for a strategy that protects you whichever way inflation goes, you’ll get slight variations on the same idea. For example, the version shown here adds a real estate ETF, while earlier versions didn't include one.

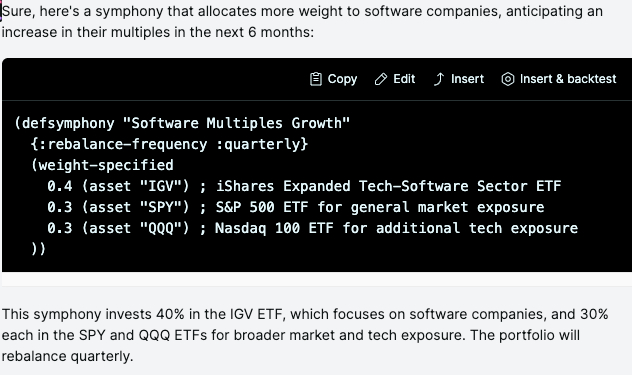

If, like me, you think software multiples could recover over the next six months, you may wonder how to trade on that idea. Before LLMs, you would need to dig up some software-focused index funds or, if you were feeling risky, start picking individual names to invest in.

Or, you could just tell the AI your feelings.

If I tell the AI, “I think software multiples are going to go up over the next few months,” it constructs a simple trade for me.

Then, just like I would with an intern, I give it more feedback. “Remove ETFs and give me individual micro-cap stocks.” Then suddenly a trade appears. The workflow is automated. The easy thinking is taken care of. Magic.

Now, critics would likely argue that this is actually taking automation too far. This shouldn’t be “easy thinking” at all. Picking the stocks and researching the ETFs are crucial parts of the process that shouldn’t be skipped. And I would actually agree with that—someone shouldn’t blindly construct a trade based on what the AI suggests.

What really matters, however, is this combination of custom workflow software and AI that allows me to think 50% faster. I can move through trading scenarios and ideas so much quicker than I would if I were doing the construction, backtesting, and stock-picking on my own.

It is easy to envision a future where Composer dumps all public filings, executive appearances, analyst days, and so on for public companies into a vector database. You could combine fundamental research with trade automation and, suddenly, you are a one-man hedge fund.
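A vector database, at its core, stores embedding vectors and returns the ones most similar to a query. The brute-force sketch below shows that retrieval step using cosine similarity. The document titles and the three-dimensional vectors are hypothetical stand-ins; real systems get embeddings from a model, use hundreds or thousands of dimensions, and typically rely on approximate nearest-neighbor indexes rather than scanning every entry.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # Treat zero vectors as dissimilar to everything.
    return dot / (norm_a * norm_b)


def top_k(query: Vector, index: Dict[str, Vector], k: int = 2) -> List[Tuple[str, float]]:
    """Score every stored document against the query and return the k best matches."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy index: document titles mapped to made-up embedding vectors.
index = {
    "10-K: cloud revenue discussion": [0.9, 0.1, 0.0],
    "Earnings call: software pricing": [0.7, 0.3, 0.1],
    "Analyst day: hardware roadmap": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a question like "how is software demand trending?"
query = [1.0, 0.2, 0.0]

for doc_id, score in top_k(query, index):
    print(f"{score:.3f}  {doc_id}")
```

The retrieved passages would then be pasted into the model’s prompt, which is how fundamental research could feed the trade-construction step.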

Implications

When I tell people that I’m researching how to use AI in the stock market, the inevitable response is, “Have GPT-4 tell me what stocks to buy.” If you put that into Composer, the result is generic and useless.

This understandable instinct is totally misguided.

We are far, far, far away from the total automation of intellectual labor. But we are on the precipice of the automation of intern-level capability. Workflows can be auto-constructed. Outcomes can be adjusted on the fly. Entry-level jobs in finance and other fields will be dramatically different. They’ll still exist, but the output will be that much better and faster.

Abstraction means hiding routine complexity so you can focus on higher-order thinking. AI is technology-enabled thought abstraction. Composer was able to abstract away its entire workflow software with two weeks of integration work. That backend software still needs to exist, but mostly for the AI to use. Keep in mind the GPT-4 API isn’t even publicly available yet. What happens when every software program can do this? There is a real chance that every aspect of knowledge work gets better, opening up an entirely new host of opportunities for startups to take down the giants. I can’t wait to see what y’all build.

Hey! We are continually trying out new experiments here at Every. A recent startup named Mistral AI just raised over $100M four weeks after starting. This is, observably, nuts. The easy thing is to dunk on this transaction and this team. However, these are all smart people making a risk-adjusted bet, and it is worth examining seriously.

As a bonus for paying subscribers, Dan and I both went through Mistral’s memo and gave notes and context on it. Whether you believe AI is the next big thing or not, people are investing like it is, so you should pay attention to how and why capital is being deployed. All credit for sourcing this memo goes to the team at Sifted. Paying subscribers can click the link below to gain access. Comment mode is on! Feel free to leave your thoughts. Dan and I will respond.
