Sponsored By: SaneBox

Today's essay is brought to you by SaneBox, the AI email assistant that helps you focus on crucial messages, saving you 3-4 hours every week.

Like most startups right now, this publication has been looking at how to reduce our expenses with AI. One area I’ve been experimenting with is seeing just how much accounting minutiae I can automate. Recently I had a breakthrough thanks to a ChatGPT plug-in called Code Interpreter. This plug-in is not widely available (so don’t be sad if you don’t have it on your account yet), but it allows a user to upload a file and then ChatGPT will write Python to understand and analyze the data in that file.

I know that sounds simple, but that is basically what every finance job on the planet does. You take a fairly standard form, something like an income statement or a general ledger, populate it with data, and then run analysis on top of that data. This means that, theoretically, Code Interpreter can do the majority of finance work. What does it mean when you can do sophisticated analysis for <$0.10 a question? What does it mean when you can use Code Interpreter to answer every question that involves spreadsheets?

It is easy to allow your eyes to glaze over with statements like that. Between the AI Twitter threads, the theatrically bombastic headlines, and the drums of corporate PR constantly beating, the temptation is to dismiss AI stuff as hyperbole. Honestly, that’s where I’m at. Most of the AI claims I see online I dismiss on principle.

With that perspective in mind, please take me seriously when I say this: I’ve glimpsed the future and it is weird. Code Interpreter has a chance to remake knowledge work as we know it. To arrive at that viewpoint, I started somewhere boring—accounting.

General Ledger Experiments

First, I have to clarify: AI has been used by accountants for a long time. It just depends on what techniques you give the moniker of artificial intelligence. The big accounting firms will sometimes use machine learning models to classify risk. However, because LLMs like GPT-4 and Claude are still relatively new, these techniques haven’t been widely integrated into auditors’ or accountants’ workflows.

When I say, “I want to replace my accountant with a Terminator robot,” I’m specifically looking for a way to use large language models to automate work that an accountant would typically do.

My journey to nerdy Skynet started simply.

I uploaded Every’s general ledger—a spreadsheet that lists out all of the debits and credits for a period—into ChatGPT. My goal was to run a battery of tests that an auditing firm would do: tasks like looking for strange transactions, checking on the health of the business, stuff like that. Importantly, these are rather abstract tests. They are a variety of small pieces of analysis that then build into a cohesive understanding of the health of a business.

Once the file is uploaded, the system goes to work. It realizes this CSV is a general ledger and then writes five blocks of code to make it readable for itself.

Note: I’ll have to do some creative image sizing here because I don’t want to expose our bank account info. Images will all be supplemental and are not necessary for reading this piece.

It classifies the data and is ready for me to ask it questions in ~10 seconds. Compare that with the usual 24-hour turnaround time on emails with an accountant.

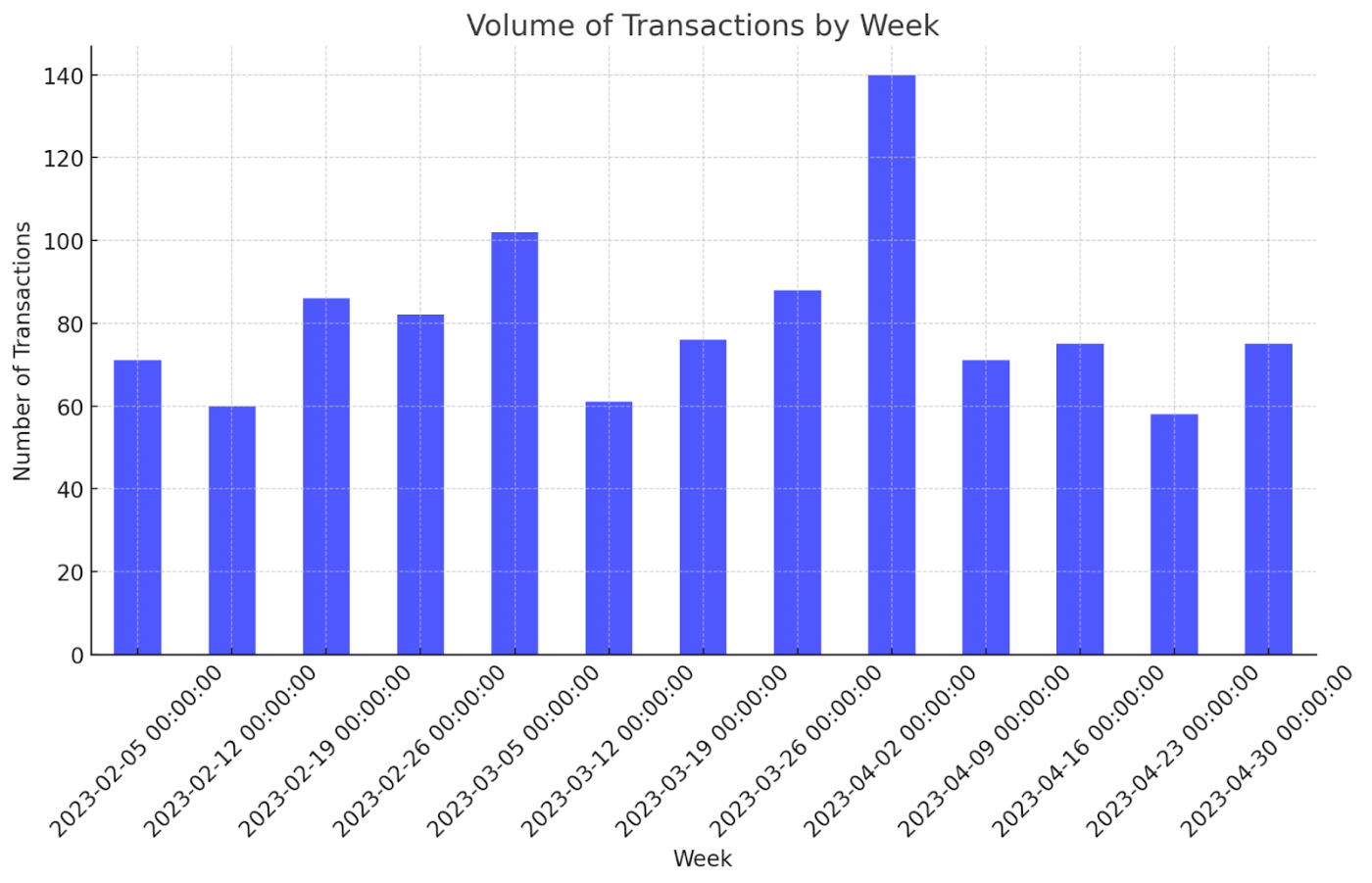

From there, I run the AI through some of these small tests an auditor would do. First, I ask it to create a graph showing the volume of transactions by week. An auditor would do this analysis as a very simplistic risk test—if there was a week with unusually high volume, they would want to examine that further.

In ~10 seconds, faster than a finance professional could do it with a pivot table and charting tool, I have a graph.
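For the curious, here is a minimal sketch of what that kind of weekly volume count might look like in plain Python. This is not the code ChatGPT wrote for me; the ledger rows are made up and the `weekly_volume` name is hypothetical. It prints a crude text bar chart rather than a real graph.

```python
from collections import Counter
from datetime import date

# Hypothetical ledger rows: (transaction date, amount). Not real data.
ledger = [
    (date(2023, 5, 1), -120.00),
    (date(2023, 5, 3), -45.50),
    (date(2023, 5, 9), 900.00),
    (date(2023, 5, 10), -30.00),
    (date(2023, 5, 11), -75.25),
    (date(2023, 5, 12), -12.00),
    (date(2023, 5, 17), -60.00),
]

def weekly_volume(rows):
    """Count transactions per ISO (year, week) pair."""
    counts = Counter()
    for txn_date, _amount in rows:
        iso = txn_date.isocalendar()  # (ISO year, ISO week, ISO weekday)
        counts[(iso[0], iso[1])] += 1
    return dict(sorted(counts.items()))

volume = weekly_volume(ledger)

# Text bar chart: one '#' per transaction in that week.
for (year, week), n in volume.items():
    print(f"{year}-W{week:02d}: {'#' * n} ({n})")
```

A week whose bar is much longer than its neighbors is the kind of thing an auditor would want to look at more closely.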

Cool but not amazing. Let’s push it further.

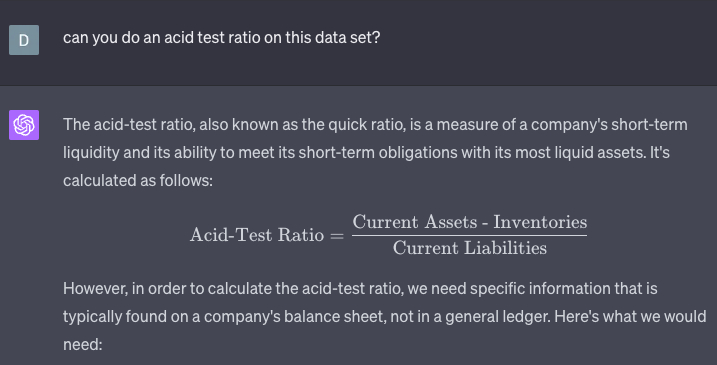

Next, I decided to test its knowledge. The role of an auditor/accountant is to figure out how safe a business is. To do so, they’ll often use things like the acid-test ratio, which measures how quickly a company could meet unexpected bills. Importantly, you cannot measure this ratio with a general ledger! It requires a balance sheet—a ledger doesn’t tell you about the asset liquidity necessary for paying bills. Thankfully, the AI passes the pop quiz with flying colors.

It recognizes that it can’t perform this test on the general ledger and needs a balance sheet. If we were to actually perform this test, I’d prefer a slightly different version of the formula, but still, it gets the important things right. As a note to our investors, don’t panic—I just ran this formula myself and we aren’t going bankrupt quite yet.
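As a rough illustration, one common textbook form of the acid-test ratio divides the most liquid assets by current liabilities. The sketch below assumes that form; it is not necessarily the version ChatGPT proposed or the one I would prefer, and every number in it is invented. Note that all four inputs come from a balance sheet, which is exactly why a general ledger alone is not enough.

```python
def acid_test_ratio(cash, marketable_securities, accounts_receivable,
                    current_liabilities):
    """Quick ratio: liquid assets divided by current liabilities.

    All inputs are balance sheet figures, not ledger entries.
    """
    if current_liabilities <= 0:
        raise ValueError("current_liabilities must be positive")
    liquid_assets = cash + marketable_securities + accounts_receivable
    return liquid_assets / current_liabilities

# Hypothetical balance sheet figures, for illustration only.
ratio = acid_test_ratio(
    cash=50_000,
    marketable_securities=10_000,
    accounts_receivable=15_000,
    current_liabilities=60_000,
)
print(f"Acid-test ratio: {ratio:.2f}")
```

A ratio above 1 suggests the company could cover its short-term bills from liquid assets alone. Another common variant starts from current assets and subtracts inventory instead of adding up the liquid items one by one.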

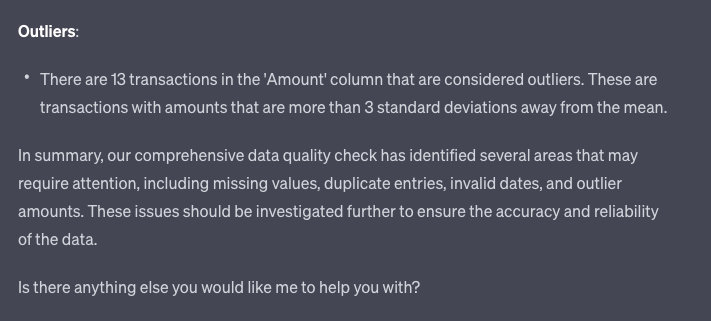

Next, I’ll try something it actually can do with a ledger—a data quality check. In trying to speak to it the same way I would to a normal human, I ask, “Yo, is the data good?” It responds with five different ways of testing the data. The first four I can’t show for privacy reasons. However, in each case, the analysis was performed correctly. For those keeping track, we are at six tasks an auditor would do automated with AI. The final data test was the first glaring error in my experiment. It found 13 outliers “that are more than 3 standard deviations away from the mean.”

I then asked it to list the 13 outliers. None of them were actually outliers: they were all column or row sums that the system mistook for expenses. In short, the AI was foiled by formatting.

The system messed up, not because of the data, but because it got confused by how the data was labeled. This is the world’s smartest and dumbest intern simultaneously. You have to keep an eye on it. When we make spreadsheets, we often do things to make them more human-readable, actions like removing gridlines or bolding important numbers. For this to work in a product, the files need to be more machine-readable. What is remarkable is how far the system can get even though this data is clearly not meant for its eyes.
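To make the failure concrete, here is a small sketch of a 3-standard-deviation outlier test run on a sheet that still contains a human-friendly "Total" row. The data, the `flag_outliers` and `drop_summary_rows` names, and the "starts with total" heuristic are all assumptions for illustration, not what ChatGPT actually ran.

```python
from statistics import mean, stdev

# Hypothetical expense rows: 20 ordinary line items between 100 and 120.
rows = [{"description": f"Expense {i + 1}", "amount": 100 + (i % 5) * 5}
        for i in range(20)]
# The human-readable sum row that trips up the analysis.
rows.append({"description": "Total",
             "amount": sum(r["amount"] for r in rows)})

def flag_outliers(rows, threshold=3.0):
    """Return descriptions of rows more than `threshold` sample standard
    deviations away from the mean amount."""
    amounts = [r["amount"] for r in rows]
    mu, sigma = mean(amounts), stdev(amounts)
    if sigma == 0:
        return []
    return [r["description"] for r in rows
            if abs(r["amount"] - mu) / sigma > threshold]

def drop_summary_rows(rows):
    """Crude heuristic (an assumption): treat rows labeled 'Total...' as sums."""
    return [r for r in rows
            if not r["description"].strip().lower().startswith("total")]

flagged_raw = flag_outliers(rows)
flagged_clean = flag_outliers(drop_summary_rows(rows))
print("Flagged with sum row left in:", flagged_raw)
print("Flagged after removing sum rows:", flagged_clean)
```

With the sum row left in, the only "outlier" is the total itself. Strip it out and nothing is flagged, which is the machine-readable version of the sheet the analysis needed in the first place.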

I let the AI know it made a mistake, it apologized, and we fixed the issue together by going in and editing the sheet directly and reuploading it.

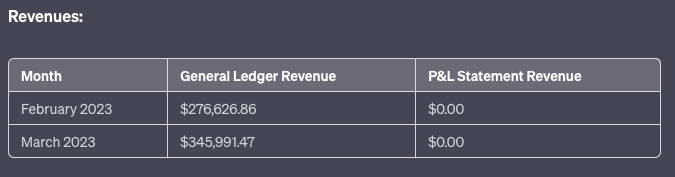

Then I got really funky with it. I also uploaded our P&L in the same chat and asked ChatGPT to perform reconciliation. This is where you can compare transaction-level data with aggregated performance on a monthly level.

Things fall apart

The good news: It can simultaneously run analysis on multiple files. It was able to successfully compare monthly expenses in the ledger to the P&L. The bad news: The result was very wrong.

Once again, I was caught by the formatting errors. The AI struggled to get around formatting issues with just one spreadsheet. If you add two spreadsheets with wildly diverse formatting styles, the system goes kablooey. I tried asking it to reformat the files into something it could read, but the errors started to compound on themselves. Based on my discussion with hackers on the topic, I think it has to do with the titles of rows versus the titles of columns, but this is an area for further experimentation. Code Interpreter can do single document analysis easily, but starts to struggle the more files you give it to examine.
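For reference, the reconciliation itself is simple once both files are in a clean shape. The sketch below assumes the ledger has already been reduced to (month, amount) pairs and the P&L to a month-to-total mapping; the `reconcile` name, the tolerance, and all figures are hypothetical. The hard part in my experiment was getting to this shape, not the comparison.

```python
from collections import defaultdict

# Hypothetical, already-cleaned inputs.
ledger = [
    ("2023-01", 1200.00),
    ("2023-01", 300.00),
    ("2023-02", 950.00),
    ("2023-02", 50.00),
    ("2023-03", 700.00),
]
pnl = {"2023-01": 1500.00, "2023-02": 1000.00, "2023-03": 725.00}

def reconcile(ledger_rows, pnl_totals, tolerance=0.01):
    """Sum ledger amounts by month and return the months where the ledger
    total differs from the P&L total by more than `tolerance`.

    Values in the result are (ledger total - P&L total).
    """
    ledger_totals = defaultdict(float)
    for month, amount in ledger_rows:
        ledger_totals[month] += amount

    mismatches = {}
    for month in sorted(set(ledger_totals) | set(pnl_totals)):
        diff = ledger_totals.get(month, 0.0) - pnl_totals.get(month, 0.0)
        if abs(diff) > tolerance:
            mismatches[month] = round(diff, 2)
    return mismatches

mismatches = reconcile(ledger, pnl)
print(mismatches)
```

Here January and February tie out, and March is flagged because the ledger is 25 short of the P&L figure.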

I stand by my claim from the intro. I think that AI can replace some portion of knowledge workers. Here’s why: These are all easily fixable problems. Rework the general ledger and P&L to have identical formatting, load them into a database with the Stripe API, then reap the benefits of a fully automated accountant.

You could then use Code Interpreter to do financial analysis on top of all this—for things like discounted cash flow—and now you have an automated finance department. It won’t do all the work, but it’ll get you 90% of the way there. And the 10% remaining labor looks a lot more like the job of a data engineer than a financial analyst.
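As a taste of what that analysis layer involves, a discounted cash flow boils down to dividing each future cash flow by (1 + rate) raised to the number of periods away it is, then adding up the results. A minimal sketch, with a hypothetical `present_value` helper and invented numbers:

```python
def present_value(cash_flows, discount_rate):
    """Discount a list of end-of-period cash flows back to today.

    cash_flows[0] arrives one period from now, cash_flows[1] two, and so on.
    """
    return sum(cf / (1 + discount_rate) ** t
               for t, cf in enumerate(cash_flows, start=1))

# Hypothetical: 100 per year for three years, discounted at 10%.
pv = present_value([100, 100, 100], 0.10)
print(f"Present value: {pv:.2f}")
```

The arithmetic is trivial. The judgment calls are in the cash flow forecasts and the discount rate, which is where a human still has to weigh in.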

Frankly, this is a $50B opportunity. A company with this product would have a legitimate shot at becoming the dominant tool for accounting and finance. They could take down QuickBooks or Oracle. Someone should be building this. The tech is right there for the taking!

The key question will be how OpenAI exposes Code Interpreter. If it is simply a plug-in on ChatGPT, startups shouldn’t bother. But if they expose it through an API, there is a real chance of disruption. There will be a ton of work a startup could do around piping in sales data and formatting. Adding in features like multiplayer or single sign-on would be enough to justify a startup’s existence. As a person who does not hate their life, I will not be selling B2B software, so feel free to use this idea (and send me an adviser check please).

This is a cool experiment—but I think there are bigger takeaways.

Maybe the future is weirder than you think

One of the great challenges of building in AI right now is understanding where profit pools accrue.

Up until this experiment, I was in the camp that the value will mostly go to the incumbents who add AI capabilities to existing workflows or proprietary datasets. This, so far, has mostly turned out to be true. Microsoft is the clear leader in AI at scale and they show no sign of slowing down.

However, this little dumb exercise with the general ledger is about more than accounting. It gave me a hint of how AI will be upending the entire world of productivity. There is a chance to so radically redefine workflows that existing companies won’t be able to transition to this new future. Startups have a real shot at going after Goliath.

I am a moron. I am not technical, and I sling essays for a living. Despite that, I was able to automate a significant portion of the labor our auditors do. What happens when a legitimately talented team productizes this?

All productivity work is taking data inputs and then transforming them into outputs. Code Interpreter is an improvement over previous AI systems because it goes from prose as an input to raw data as an input. The tool is an abstraction layer over thinking itself. It is a reasoning thing, a thinking thing—it is not a finance tool. They don’t even mention the finance use case in the launch announcement! There is so much opportunity here to remake what work we do. Code Interpreter means that you don’t even need access to a fancy API or database. If OpenAI decides to build for it, all we will need is a command bar and a file.

I’ve heard some version of this idea, of AI remaking labor, over and over again during the past year. But I truly saw it happen for the first time with this tool. It isn’t without flaws or problems, but it is coming. The exciting scary horrifying invigorating wonderful awful thing is that this is only with an alpha product less than six months old. What about the next edition of models? Or what will other companies release?

This is not a far-off question. This is, like, an 18-months-away question.

One of the most under-discussed news articles of the past six months was Anthropic’s (OpenAI’s biggest rival) leaked pitch deck from April. The reported version said that it wanted a billion dollars to build “Claude-Next,” which would be 10x more powerful than GPT-4. I have had confirmation from several sources that other versions of that deck claimed a 50x improvement over GPT-4.

Really sit with that idea. Let it settle in and germinate. What does a system that’s 50x more intelligent than Code Interpreter mean for knowledge labor? I’ve heard rumors of similar scaling capabilities being discussed at OpenAI.

Who knows if they deliver, but man, can you imagine if we get a 50x better model in two years? Yes, these are pitch deck claims, which are wholly unreliable, but what happens if they are right? Even a deflated 10x better model makes for an unimaginable world.

It would mean a total reinvention of knowledge work. It would mean that startups have a chance to take down the giants. As Anthropic said in its pitch deck, “These models could begin to automate large portions of the economy.”

This experiment gave me a glimpse of that future. I hope you’re ready.
