Nir Zicherman, the cofounder of Anchor and former VP of audiobooks at Spotify, tackles a fascinating psychological phenomenon in his latest piece: the Uncanny Valley. As AI rapidly advances, Nir explores whether our discomfort with almost-human AI could hinder its adoption—or become an advantage. It’s essential reading for anyone building in AI. (Read more from Nir in his newsletter Z-Axis.)—Kate Lee

In just the past few years, artificial intelligence technology has transformed from a tool used by hobbyists and criticized by skeptics to one capable of carving out massive new industries on its own. In the first half of the year, investors poured $35.6 billion in funding into AI startups, up from $28.7 billion the year before. AI-supported content is everywhere, discussed constantly, and used by companies across nearly every function. But AI, like all breakthrough inventions, has introduced unique challenges.

One such challenge stems from a theory that emerged in the early 1970s, as the first AI winter was soon to dawn. Back then, when AI was mere speculation, engineers began speaking of a hypothetical phenomenon known as the Uncanny Valley. Unlike other challenges in robotics—technological or mathematical—this one was psychological. It had less to do with whether thinking robots would ever be built, and more to do with how humankind might reject them once they were.

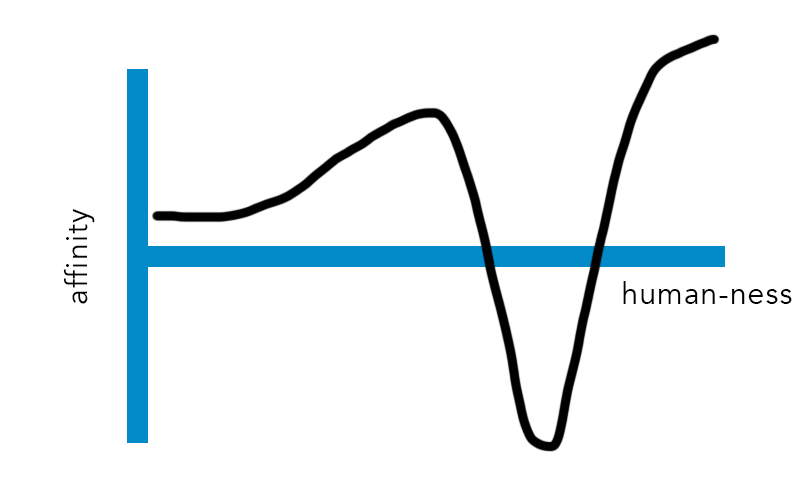

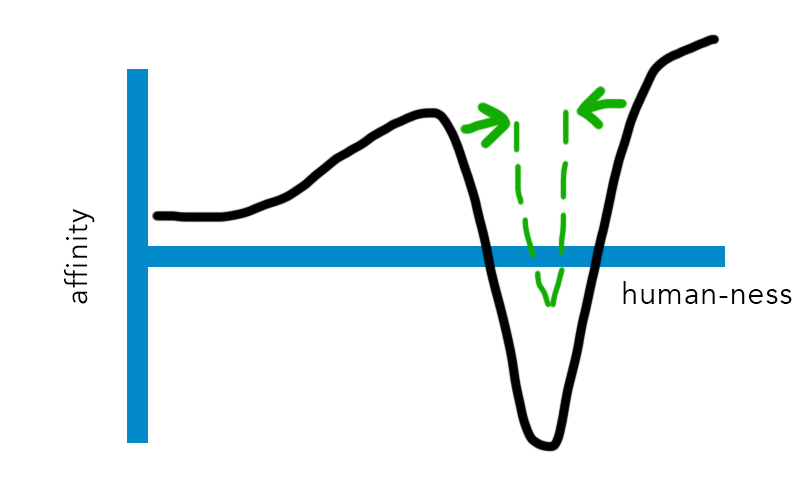

The Uncanny Valley hypothesis, first proposed by Japanese scientist Masahiro Mori, predicts that our affinity for robots will rise as they become increasingly human-like, until it drops precipitously for a period of unknown length. At the end of that period, robots achieve 100 percent verisimilitude and our affinity recovers. This “valley” is often explained as a psychological repulsion toward things that are nearly, but not quite, human.

Source: All images courtesy of the author.

For decades, the Uncanny Valley served as the wellspring of ideas for science fiction writers and futurists alike. Yet because we hadn’t built AI systems that approached the abyss, none of these theories ever went beyond conjecture.

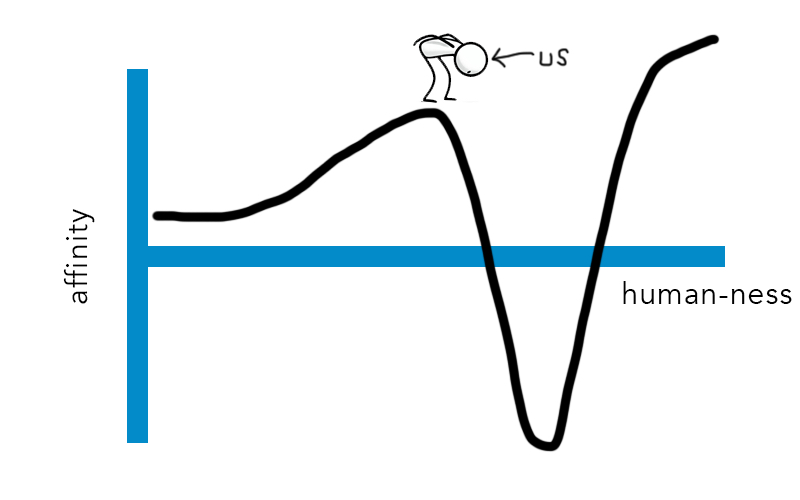

Now, it seems, we have arrived—or nearly arrived—at the precipice. The astonishing progress made in the last few years in the fields of humanoid robots and AI-generated video brings us ever closer to the question of what happens when technology that is almost human becomes ubiquitous. In other words, what happens if the Uncanny Valley truly exists? Will it hinder humanity’s adoption of AI technology? Could it help? And how can we understand its effects?

Is the Uncanny Valley real?

Let’s start by establishing whether the abyss—the Uncanny Valley—even exists.

Some quantitative research supports the theory. One study goes so far as to monitor subjects with MRIs to show that the brain behaves differently when confronted with near-humanness. Other researchers point to evolutionary reasons for why the mind may be so predisposed, from reflexive disgust to fear of existential threats. The studies that confirm the phenomenon as real even pinpoint the part of the brain that causes it.

But for every Uncanny Valley proponent, there are just as many who doubt the phenomenon. A recent survey of Uncanny Valley research suggested that there is little evidence that the general phenomenon exists. Critics say that the Uncanny Valley is a conflation of other psychological effects or the simple result of greater exposure to humans than to robots. Rather than a steep drop in affinity, it may simply be that the more real AI-generated content gets, the more we like it:

Let’s put research aside for a moment. I’m sure we’ve all come across examples that leave us feeling somewhat disturbed. For me, the Uncanny Valley is triggered by faces that are almost there. I get an odd feeling each time I see the baby from the 1988 Pixar short Tin Toy, or those AI-generated videos of Will Smith eating spaghetti, which still haunt the internet. Sometimes, I even get the feeling when I see AI-generated headshots created with less skillful tools.

A researcher may brush that aside and argue, “But the Valley has never been proven.” Nevertheless, I know what I feel, proof or no proof. The Uncanny Valley may be scientifically hard to pinpoint, but in my mind, the anecdotal evidence alone makes this a theory worth investigating and taking seriously.

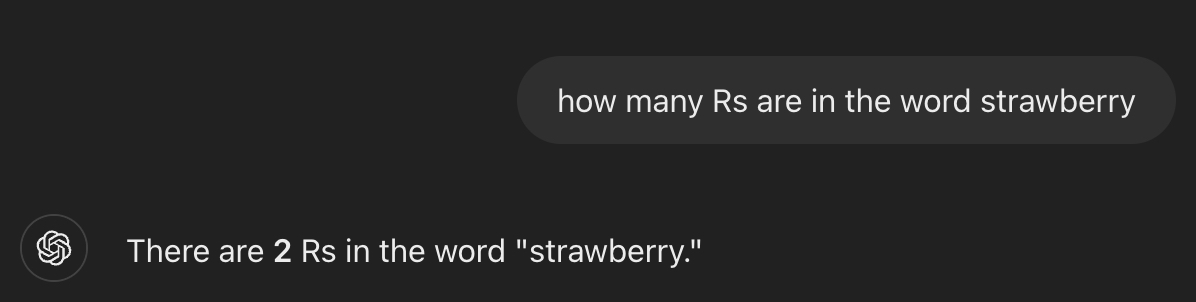

A potentially real Uncanny Valley is especially important to consider as LLMs continue to hallucinate, or espouse falsities as truth. The Valley can even extend—albeit weakly—to text. My favorite example is this (addressed very recently with OpenAI’s newest model, aptly called Strawberry):

Critics also point to artifacts: AI’s tendency to leave physically inaccurate or impossible details in its creations, thereby revealing their origin. For instance, you’ve likely seen AI-generated videos in which small particulars are oddly inaccurate. AI is notoriously bad at recreating a human hand. Asking ChatGPT for a “photorealistic image of hands playing piano” occasionally yields outputs such as this:

Looks good at first glance. But notice the right index finger disappearing halfway down, or the odd distribution and size of the piano keys. Might the missing finger be enough to trigger the feeling of the Uncanny Valley, at least for some?

Will the Uncanny Valley hinder AI adoption?

For argument’s sake, let’s say the Uncanny Valley is real—and that not all problems are as easily resolved as the strawberry one. If this is the case, will it affect AI’s popularity? Will artifacts and hallucinations cause a drop-off in adoption?

Consider music that is almost in tune. We recognize the song being played, but it just doesn’t sound right. And we’d likely prefer not to listen to music that makes us uncomfortable. With this metaphor, it’s understandable where the Uncanny Valley drop-off might occur.

Let’s take it a step further. What if the LLM’s contribution came earlier in the process and played a supportive role? Many composers who make digital music today don’t have a clue how to tune a musical instrument. But that doesn’t stop them from crafting beautiful in-tune works of art with the assistance of computers. (Even still, it turns out that all contemporary music is [intentionally] slightly off-pitch.)

I have used LLMs to help me compose emails. Sometimes, the results have been spectacular. Just as often, I have been frustrated by how unhelpful their output has been. In these cases, I’ve thrown out the results and written those emails from scratch. I have been blown away by amazing images generated from single prompts. I have been equally blown away at the number of useless images that continue to be generated after dozens of prompts. Has this caused me to turn away in alarm? No. Instead, it has taught me that AI’s greatest strength is in a complementary role, one in which it guides—rather than replaces—me.

Perhaps this marks the most important distinction to be made. There is a big difference between using AI as a replacement and using AI as a tool. The Uncanny Valley is only a potential problem for engineers building the former—and less so for the latter. In fact, by embracing AI’s ability to reduce cold start problems and increase efficiency, the Uncanny Valley may be a feature, not a bug; it provides a first pass—intentionally non-human—to which we can then apply our human touch.

AI video generators replacing our actors and cinematographers? A long way off. AI video generators assisting digital effects departments with finishing touches on film? Already here.

And there may be opportunities in the gap as well. Might there be a new generation of companies that embrace AI’s non-humanness as that “feature, not a bug” I mentioned? Companies that openly identify AI’s limitations rather than mask them as replacements for human beings?

Navigating the Uncanny Valley

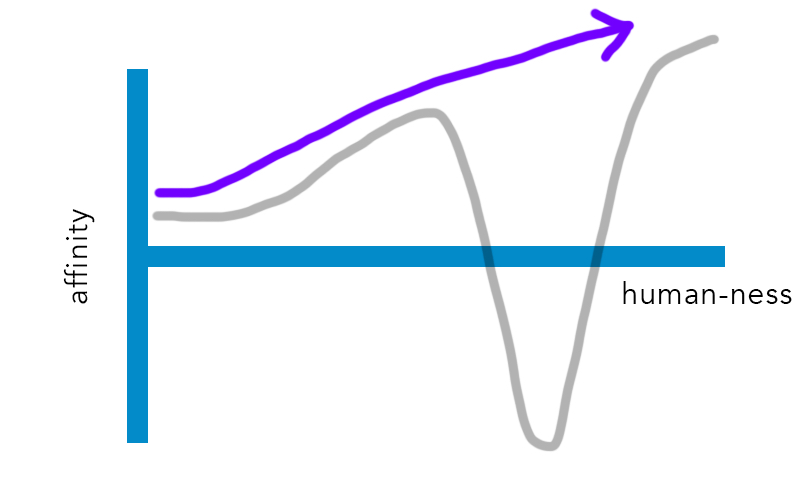

As AI improves—whether it is at the current exponential rates or not—one can imagine that any repulsion that prevents us from embracing it will begin to fade away. For instance, reducing the likelihood of both hallucination and artifacts may shrink how long it will take us to traverse the valley:

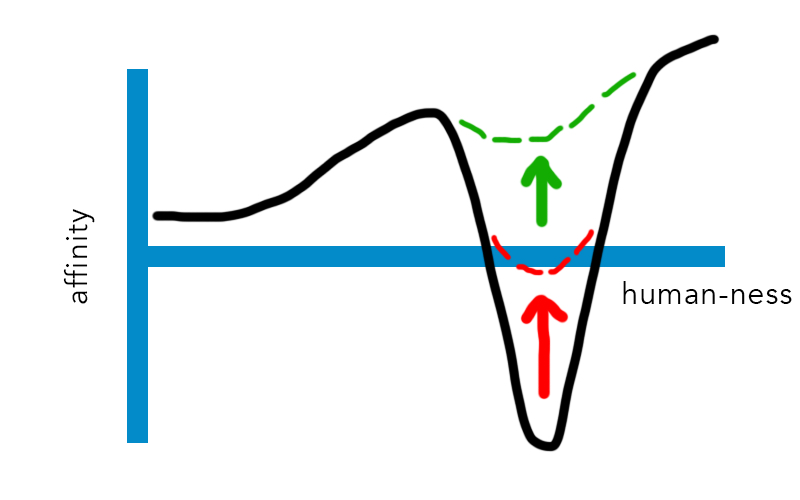

Or technological advancements may yield a shallowing of the valley, whereby the chasm is just as wide but not as deep, because the imperfections are less noticeable and our repulsion is therefore less intense:

Yet, despite the incredible progress made in AI image and video generation these past few years, it’s easy to see how challenging it is for the line to ever be close to flat (for the red line, reality, to reach the green line). The Pareto principle holds that, for many outcomes, 80 percent of the effort will go toward solving the last 20 percent of the challenges.

In the case of AI, I imagine that 80 percent of the effort will go toward solving the last 1 percent of missing verisimilitude. If 1 percent seems numerically insignificant, imagine you’re someone who believes in the Uncanny Valley. That 1 percent is all that matters to the human brain differentiating between “real” and “unreal”; there is no in-between. Almost perfect is not perfect.

Nir Zicherman is a writer and entrepreneur. He was the cofounder of Anchor and the vice president of audiobooks at Spotify. He also writes the free weekly newsletter Z-Axis.
