Technology changes how we see the world—and ourselves. If we want to save our conception of human creativity and intellect, we need to get clear on what LLMs can do and what humans are uniquely capable of. That’s what I’m attempting to do with this piece from the archives. If you’re interested, you can also watch it as a newly created video essay on YouTube, Spotify, or Apple Podcasts. —Dan Shipper

I remember when I first saw GPT-3 output writing: that line of letters hammered out one by one, rolling horizontally across the screen in its distinctive staccato. It struck both wonder and terror into my heart.

I felt ecstatic that computers could finally talk back to me. But I also felt a heavy sense of dread. I’m a writer—what would happen to me?

We’ve all had this experience with AI over the last year and a half. It is an emotional rollercoaster. It feels like it threatens our conception of ourselves.

We’ve long defined the difference between humans and animals by our ability to think. Aristotle wrote: “The life of the intellect is the best and pleasantest for man, for the intellect more than anything else is man; therefore, a life guided by intellect is the best for man.”

Two thousand years later, the playwright and short story author Anton Chekhov agreed in his novella, Ward No. 6: “Intellect draws a sharp line between the animals and man, [and] suggests the divinity of the latter.”

The primacy of thinking and the intellect as the feature by which we define ourselves has become even more salient as we’ve moved from an economy driven by industrial labor into one driven by knowledge. Indeed, if you’re reading this, you probably put a lot of stock into what you know. After all, that’s what knowledge work is all about. If we define ourselves and our value this way, it’s no wonder LLMs seem scary.

If AI can now write, and, worse, think, what’s left that makes humans unique?

I think LLMs will change knowledge work. In doing so, they’ll change how we think of ourselves, and which characteristics we deem uniquely human. But these days I’m not particularly scared. In fact, I’m mostly filled with excitement.

My sense of self has changed—and that’s a good thing. ChatGPT has made me see my intellect and role in the creative process differently than I did before. It doesn’t replace me; it just changes what I do. It’s possible to achieve this feeling of excitement by 1) getting a clearer conception of what LLMs actually do and don’t do, and 2) expanding your view of what you are and what you are capable of.

Let’s talk about what that looks like. To start, we have to understand what the intellect is.

What is the intellect?

For the purposes of this article, the intellect is the thing that humans uniquely have that animals don’t. This is a fuzzy definition, by design. It reflects what feels threatened by AI: that which makes us human.

In reality, the intellect is a gigantic combination of brain processes that look like thinking. Thinking, the intellect, the mind—these are all different processes that we lump under a single heading. That’s why it’s easier to define it via negativa, by what it is not—it’s whatever animals don’t do. (We now know that animals do have thinking processes that look a lot like what we might call intellect, but that hasn’t filtered into our popular conceptions of self. Read Frans de Waal’s classic book, Are We Smart Enough to Know How Smart Animals Are?, for an excellent overview of animal intelligence.)

Our fuzzy definition of the intellect is why our first encounter with ChatGPT and its ilk can be so terrifying. It touches a lightning rod within us. For millennia we’ve set ourselves apart by a strange, amorphous, many-dimensional thing called intelligence, and suddenly there is something encroaching on our turf. Because it can do some of the things we associate with the intellect, we feel both excited, because we’re no longer alone, and threatened, because we might be replaceable.

In order to regain our sense of self and place in the world, we need to redefine what we mean by “intellect.” We need to create a new sense of separation between what humans do and what AI can do. We need to redefine “intellect” so as to make it work in an AI-driven world.

Fortunately, we’ve done this before, and technology can help.

Psychology is a branch of science full of concepts that are fuzzy in the same way the “intellect” is. Take schizophrenia and bipolar disorder. Neither can be detected by a blood test, and neither has a fully consistent set of symptoms. Instead, they’re characterized as syndromes: sets of often-associated symptoms that can vary from case to case.

The problem is that schizophrenia and bipolar disorder overlap significantly: both can cause psychosis and hallucinations. As late as the 1960s, schizophrenia and bipolar disorder were lumped together under a single heading and understood to have a similar underlying cause. But it turns out that technology, specifically the discovery of the drug lithium, was the key ingredient that we needed to pull them apart.

It all started with guinea pigs.

Pharmacological dissection of mental illness

In the late 1940s, a doctor named J.F. Cade, who apparently had entirely too much time on his hands, discovered that the urine of manic patients was toxic to guinea pigs. (I can’t believe I just typed that sentence, but, like, we all have our kinks, I guess.)

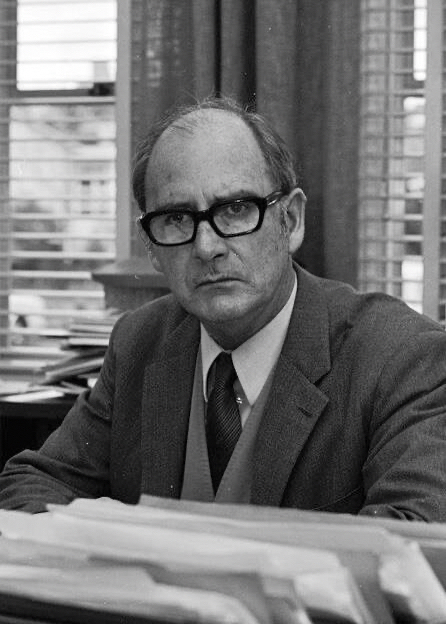

Do not let this man around your guinea pigs. Source: Wikipedia.
