Sponsored By: rabbit

Get rabbit’s first AI device, r1: your pocket companion

Discover the r1, rabbit's first AI device, a pocket companion designed to revolutionize your digital life. With r1, speaking is the new doing – it understands and executes your commands effortlessly.

Experience a streamlined digital life with rabbit's AI-powered OS, learning and adapting to your unique way of interacting with apps.

Ready to transform how you interact with technology? Join the waitlist for the r1's launch on January 9th.

2023 was the year AI went mainstream. The big question in 2024 is: how far can this generation of AI technology go? Can it take us all the way to superintelligence—an AI that exceeds human cognitive ability, beyond what we can understand or control? Or will it plateau?

This is a tricky question to answer. It requires a deep understanding of machine learning, a builder’s sense of optimism, and a realist’s level of skepticism. That’s why we invited Alice Albrecht to write about it for us. Alice is a machine learning researcher with almost a decade of experience and a Ph.D. in cognitive neuroscience from Yale. She is also the founder of re:collect, which aims to augment human intelligence with AI.

Her thesis is that this generation of AI will peter out performance-wise fairly quickly. In order to get something closer to superintelligence, we need a different approach: the augmentation, rather than replacement, of human intelligence. In other words, we need cyborgs.

I found this piece illuminating and well-argued. I hope you do, too. —Dan

In the year-plus since the world was introduced to ChatGPT, even your Luddite friends and relatives have started talking about the power of artificial intelligence (AI). Almost overnight, the question of whether we will soon have an AI beyond what we can understand or control (a superintelligence, or SI, that exceeds human cognitive ability across many domains) has become top of mind for the tech industry, public policy, and geopolitics.

I’ve spent much of my career straddling human cognition, cognitive neuroscience, and machine learning. In my Ph.D. and postdoctoral work, I studied how our minds compute the vast amounts of information we process on a second-by-second basis to navigate our environments. As a data scientist, machine learning researcher, and founder of re:collect, I’ve focused on what we can learn about humans from the data they generate while using technology, as well as how we can use that knowledge to augment their abilities—or, at the very least, make their interactions with technology more bearable.

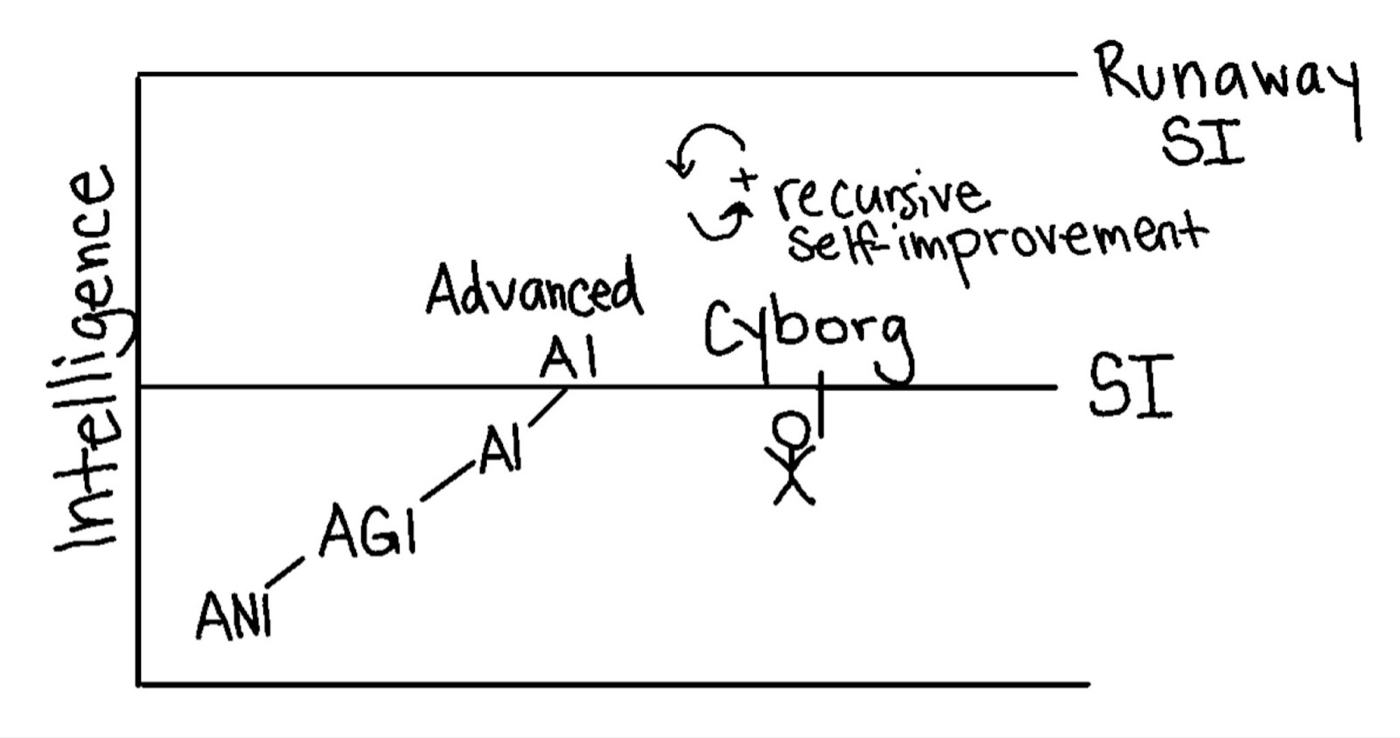

Even with this experience, I know that it can be daunting to understand all the terms people throw around about AI. Here’s a simple explainer. Generally speaking, the goal of AI is to create an artificial intelligence that matches human-level intelligence, while SI is an intelligence beyond what even the most intelligent human is capable of. SI is sometimes imagined as a machine that outpaces human intelligence. But it can also be a human who has been augmented, or even a group of humans working collectively, like a team at a startup.

The fear that SI may become a “runaway intelligence,” like the Singularity Ray Kurzweil has written so much about, comes from the theory that these intelligent systems might discover how to create even more intelligent systems—which could lead to machines that we can’t control, and that may not share our cultural or moral norms.

Different pathways to superintelligence. Source: the author.
