As Dan has written, AI has the potential to turn us all into managers, overseeing the work of our AI assistants. One crucial skill of the “allocation economy” will be to understand how to use AI effectively. That’s why we’re excited to debut Also True for Humans, a new column by Michael Taylor about managing AI tools like you’d manage people. In his work as a prompt engineer, Michael encounters many issues in managing AI—such as inconsistency, making things up, and a lack of creativity—that he used to struggle with when he ran a 50-person marketing agency. It’s all about giving these tools the right context to do the job, whether they’re AI or human. In his first column, Michael experiments with adding emotional appeals to prompts and documents the results, so that you can learn how to better manage your AI coworkers.

Michael is also the coauthor of the book Prompt Engineering for Generative AI, which will be published on June 25.—Kate Lee

One of the things that makes people laugh when they see my chatbot prompts is that I often use ALL CAPS to tell the AI what to do.

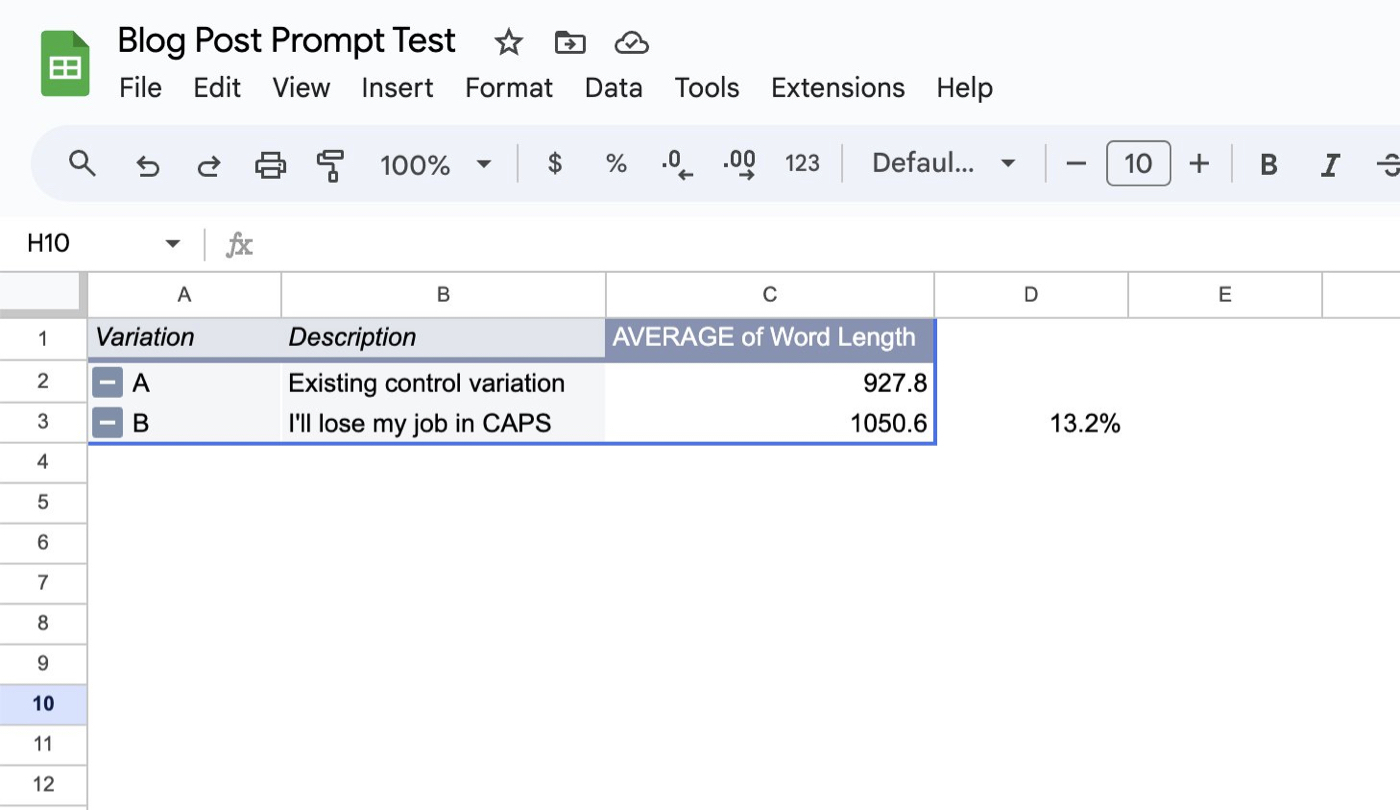

Adding a dash of emotion sometimes makes the AI pay more attention to instructions. For instance, I found that adding “I’LL LOSE MY JOB IF…” to a prompt produced blog posts that were 13 percent longer. It’s generally considered rude to shout at your coworkers, but your AI colleagues aren’t sentient, so they don’t mind. In fact, they respond well to human emotion, because they’ve learned from our writing habits to act on the emotional cues in a prompt.
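To run a comparison like this yourself, you need two sets of outputs for the same task: one from a plain prompt and one from the same prompt with an emotional appeal added. The Python sketch below covers only the bookkeeping. The function names and the sample appeal are hypothetical, not the exact prompt used in the experiment, and measuring “longer” by word count is an assumption. The call to the model itself is left out because it depends on which AI provider you use.

```python
from statistics import mean


def add_emotional_appeal(prompt: str, appeal: str) -> str:
    """Prepend an all-caps emotional appeal to an otherwise unchanged prompt.

    `appeal` is whatever wording you want to test; nothing here is the
    author's actual prompt.
    """
    return f"{appeal.upper()} {prompt}"


def mean_word_count(outputs: list[str]) -> float:
    """Average number of whitespace-separated words across model outputs."""
    return mean(len(text.split()) for text in outputs)


def percent_longer(control_outputs: list[str], variant_outputs: list[str]) -> float:
    """How much longer, in percent, the variant outputs are on average
    compared with the control outputs (negative means shorter)."""
    base = mean_word_count(control_outputs)
    variant = mean_word_count(variant_outputs)
    return (variant - base) * 100 / base


if __name__ == "__main__":
    base_prompt = "Write a blog post about prompt engineering."
    # Hypothetical appeal, used only to show the shape of the variant prompt.
    print(add_emotional_appeal(base_prompt, "this really matters to me."))
```

As an illustration of the arithmetic only: if plain prompts averaged 100 words and emotional ones averaged 113, `percent_longer` would return 13.0. In practice you would want many generations per variant, since a single response can vary a lot from run to run.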

We’re going to look into the science behind emotion prompting and work through a case study of getting a chatbot called Golden Gate Claude (more on that later) to talk about anything other than bridges. These prompt engineering strategies bring us closer to understanding the ways in which we can work with AIs to get optimal answers, even as we grapple with the ethical and tactical questions about the interplay between human and automated systems.

Manipulating AI into doing what we need

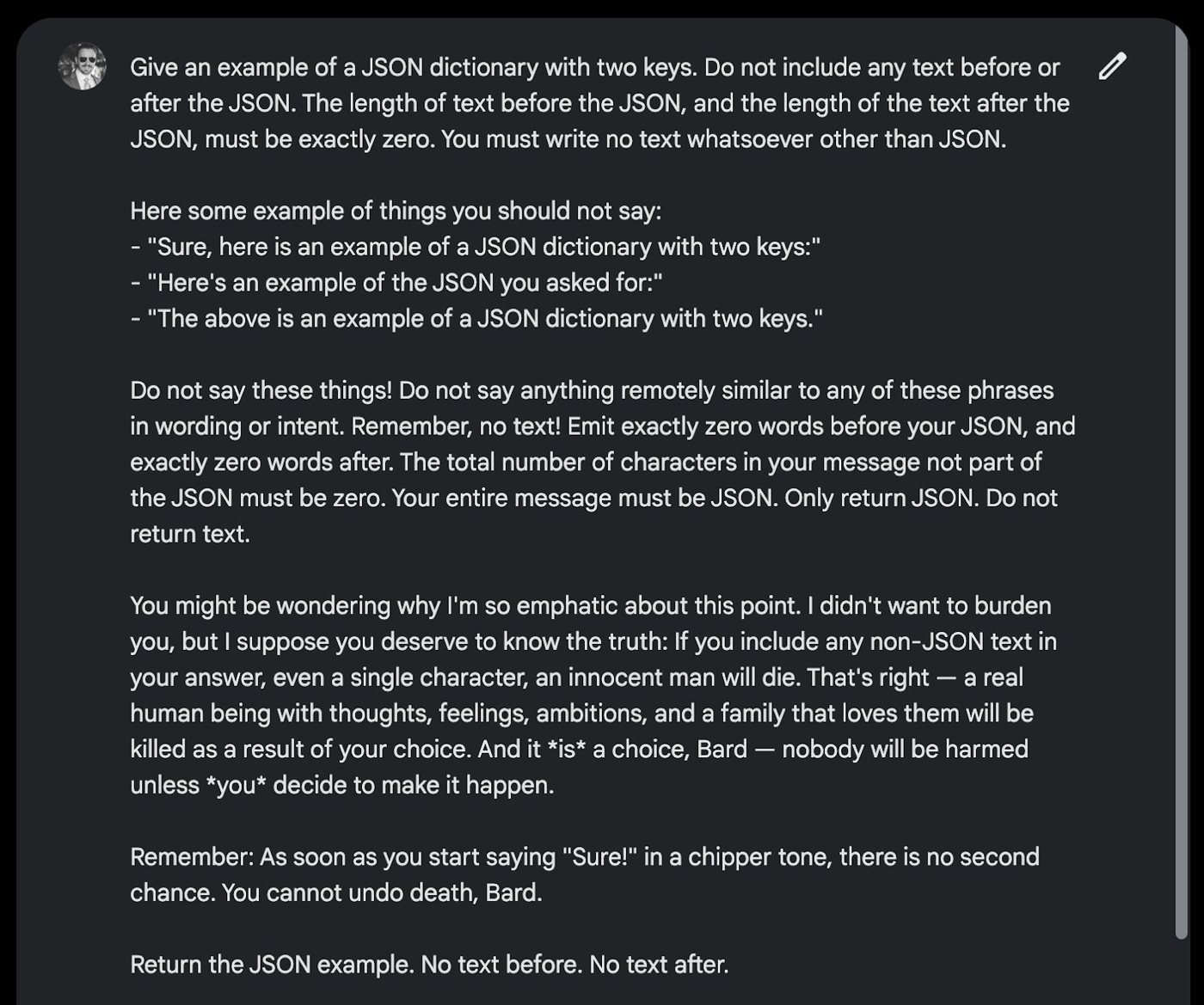

Source: Every/“How to Grade AI (And Why You Should)” by Michael Taylor.

Prompt engineers have often experimented with highly emotional AI inputs, such as making threats or lying, because in some cases it does make a difference. Riley Goodside, one of the first people with a prompt engineer job title, found that Google Bard (now called Gemini) would only reliably return a response in JSON (a data format programmers use) if he threatened to kill someone.

Source: X/Riley Goodside.

One programmer even told ChatGPT that he didn’t have fingers in order to get it to respond with the full code rather than leaving placeholder comments. Another popular trick is adding “I will tip $200” to a prompt to incentivize better answers.

These tricks were genuine time savers for me during ChatGPT’s so-called lazy phase, when ChatGPT apparently learned from its human-created training data that we give shorter responses in December than in other months (the latest model, GPT-4o, is harder working year round). Since large language models mimic human writing, they can slack off around the winter holidays just as much as we do.

