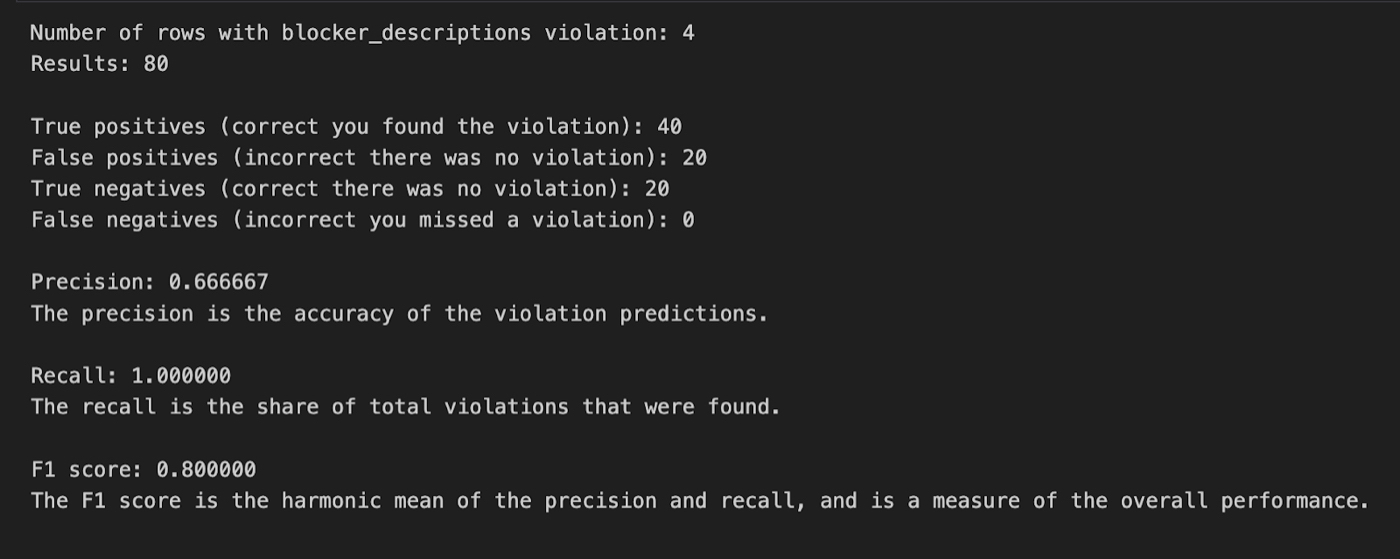

AI isn’t like other software. Historically, when you used a computer, input X would always give you output Y. With AI, you give it input X and get back output I, T, D, E, P, E, N, D, S. The computer responds with a marginally different answer every time, so evaluating and comparing these tools is a devilishly hard task. But because we are devoting ever more of our intellectual effort to LLMs, this is a task we must figure out. Michael Taylor has spent an obscene amount of time trying to build AI evaluations, and in this article, he tells how you can do the same. As go the evals, so goes the world. —Evan Armstrong

To paraphrase Picasso, when AI experts get together, they talk about transformers and GPUs and AI safety. When prompt engineers get together, they talk about how to run cheap evals.

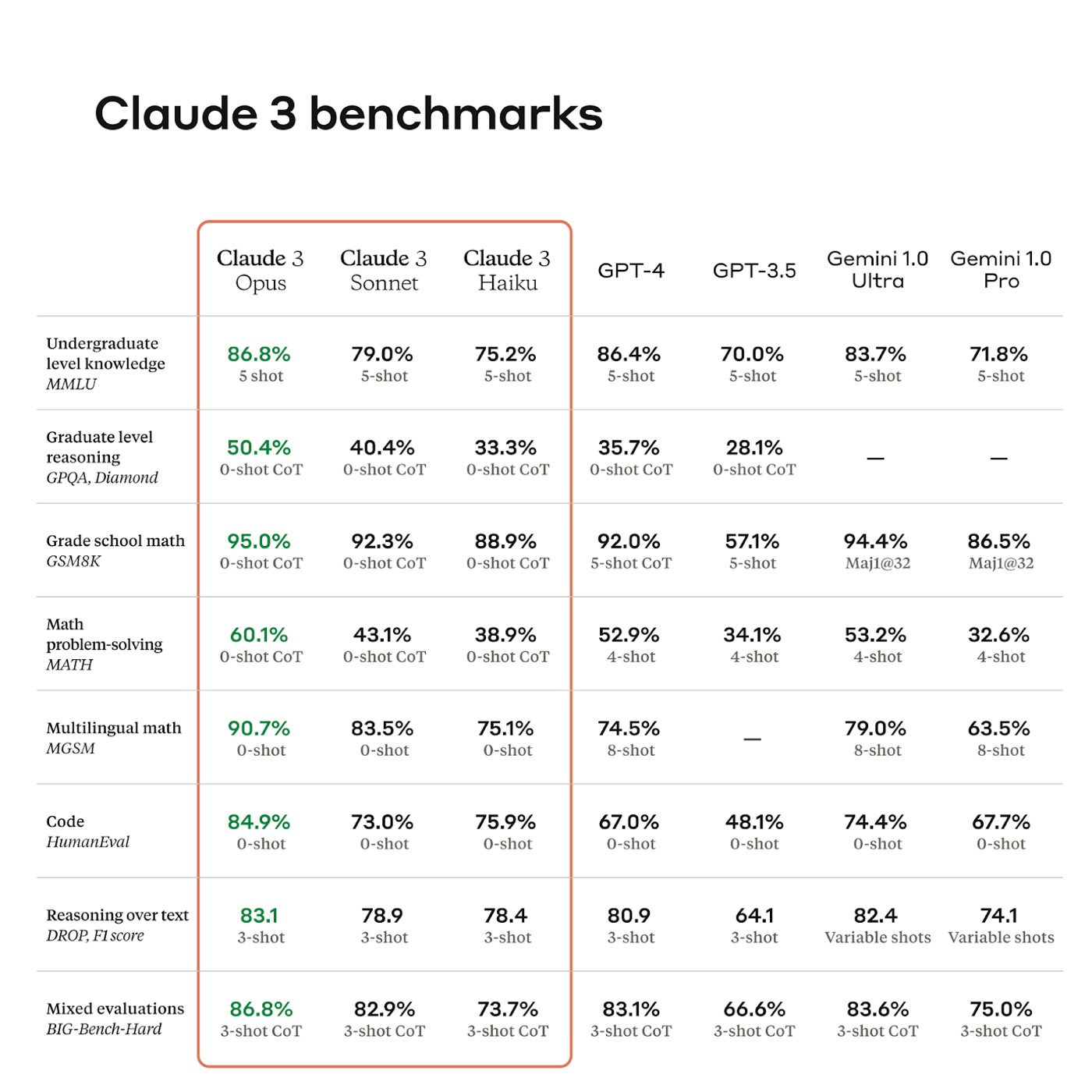

Evals, short for “evaluation metrics,” are how we measure alignment between AI responses and business goals, as well as the accuracy, reliability, and quality of AI responses. In turn, these evals are matched against generally accepted benchmarks developed by research organizations or noted in scientific papers. Benchmarks often have obscure names, like MMLU, HumanEval, or DROP. Together, evals and benchmarks help discern a model’s quality and its progress from previous models.

Below is an example for Anthropic’s new model, Claude 3.

Source: Anthropic.

Benchmarks are lists of questions and answers that test for general signs of intelligence, such as reasoning ability, grade school math, or coding ability. It’s a big deal when a model surpasses a state-of-the-art benchmark, which might enable its company to attract key talent and millions of dollars in venture capital investment. However, benchmarks are not enough. While they help researchers understand which models are good at tasks, the operators who are using these models don’t depend as much on them: There are rumors that answers to benchmark questions have “leaked” into the AI models’ training data, which makes them subject to being gamed, or overfit to the data in undefined ways.

And even though head-to-head comparison rankings—where the results of two models for the same prompt are reviewed side-by-side—use real humans and can therefore be better, they’re not infallible. With Google Gemini able to search the web, it’s like we’re giving the AI model an open-book exam.

As OpenAI cofounder and president Greg Brockman puts it, “evals are surprisingly often all you need.” Benchmarking can tell you which models are worth trying, but there’s no substitute for evals. If you’re a practitioner, it doesn’t matter whether the AI can pass the bar exam or qualify as a CFA, as benchmarks tend to discern. Evaluations can’t capture how it feels to talk to a model. What matters is if they work for you.

Source: X/Greg Brockman.

In my experience as a prompt engineer, 80–90 percent of my work involves building evals, testing new ones, and trying to beat previous benchmarks. Evals are so important that OpenAI open-sourced its eval framework to encourage third-party contributions to its question-and-answer test sets to make them more diverse.

In this piece, we’ll explore how to get started with evals. We’ll touch on what makes evals so hard to implement, then run through the strengths and weaknesses of the three main types of eval metrics—programmatic, synthetic, and human. I’ll also give examples of recent projects I’ve worked on, so you can get a sense of how this work is done.

What makes evals so hard to implement?

In a previous life, I built a marketing agency and worked with hundreds of clients. They all shared one problem: attribution. If you can’t measure the impact of the decisions you make, you can’t make better ones in the future. Prompt engineering is no different. Every client I speak to seems to know “good” when they see it, but struggles to define it in a way that can help them automate or delegate the evaluation process. The lack of definition leads to a slow pace of improvements, limited testing, and internal disagreements on what might move the needle on results. Often, the only evaluation metric they have is “the CEO liked it.” This confusion is amplified by three factors:

- Reliability: We're used to writing algorithms that return the same result every time. This is not the case for AI, where the responses are non-deterministic. Even if you run the same prompt with the same model, you will get different results every time. Getting a good response on the first try isn’t enough. You will need to run it 10 or even 100 times to see how often it fails.

- Vendors: As an industry, we have spent the past 10 years disregarding cost, latency, and vendor lock-in thanks to Moore’s Law, which has made compute cheap and options abundant. Those factors are once again a risk to consider. While GPT-4 has remained best-in-class for over a year, OpenAI has deprecated multiple APIs, suffered outages, and fired (and rehired) its CEO in that time period. And while it doesn’t hurt to consider your options, more choice often comes with greater complexity—and more confusion.

- Hallucination: Models tend to make things up, generating illusions that are often referred to as hallucinations. As we measure AI accuracy, remember that our human employees make mistakes too. Reallocate tasks based on what humans and AIs are each good at.

Clients often seek out my services when they want to optimize a prompt that’s working inconsistently. Some clients want to save costs, improve speed, or avoid over-reliance on OpenAI by moving to a smaller or open-source model, or fine-tuning a custom model on their proprietary data. They also get in touch when they want to implement Retrieval Augmented Generation (RAG) to inject relevant data into the prompt as context, so that the AI responds more accurately, with fewer hallucinations.

My first step is always the same. I define a set of evals. Without evals, I can’t do my work.

Three types of evals

There are many evaluation metrics available, and they each have strengths and weaknesses. The three main categories of evals are programmatic (rules-based), synthetic (AI grading other AI results), and human (manual ratings). Most AI projects utilize all three.

Use programmatic evals for objective answers

Strength: Fast and cheap

Programmatic evals are rules-based checks or calculations that can run in milliseconds and for next to no cost—you’re merely comparing AI-generated answers with a string of reference answers. If you have a set of questions with clear answers, like “What’s the capital city of the U.S.?,” a programmatic eval will check if the AI responses match. They can also calculate a grade for the answers from 0–100 percent, and you can use this grade to decide whether to go forward with the model.

Question-and-answer sets are usually created manually by clients, although a smarter LLM like GPT-4 will occasionally be used. They are based on details a model should be able to handle to be successful. There are other metrics you can calculate without building a question-and-answer set, like Levenshtein distance, which measures the similarity between texts. This can be useful for less-structured tasks that don’t fit normal question-and-answer formats—for example, whether AI-generated blog content matches your tone of voice.

It takes some work with a technical team member to develop robust programmatic evals, and they’re usually different each time, tailored to the task at hand. However, building your own question-and-answer test set is a great start, and it’s something anyone can do.

Weakness: Struggle with complex prompts

Programmatic evals can face challenges while developing metrics for complex tasks, especially those with nuanced or subjective criteria. They are better suited for tasks with right answers or easily definable rules. They’re also useful to discern wrong answers quickly—always have at least one programmatic metric you’re optimizing for, even if the outcome is imperfect.

Examples:

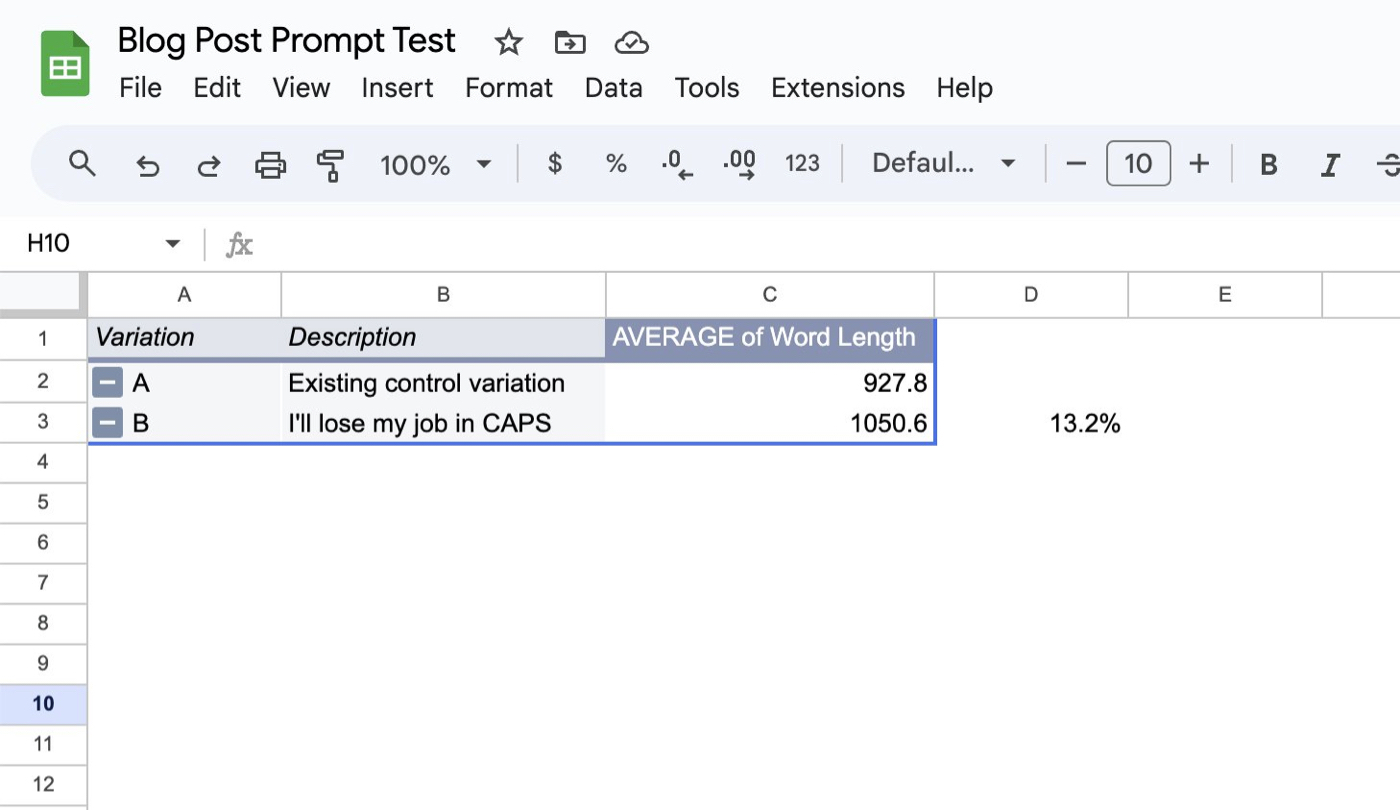

- Emotion prompting. ChatGPT sometimes struggles to generate long texts and can be coaxed into making responses an average of 13 percent longer. LLMs understand and react to emotional phrases, so adding the prompt MAKE IT REALLY LONG OR I’LL LOSE MY JOB to the end of your original prompt can get you to the right length. I used the two prompts to generate 20 blog posts (10 per prompt) and calculated the word length in Google Sheets.

Source: The author’s X account.

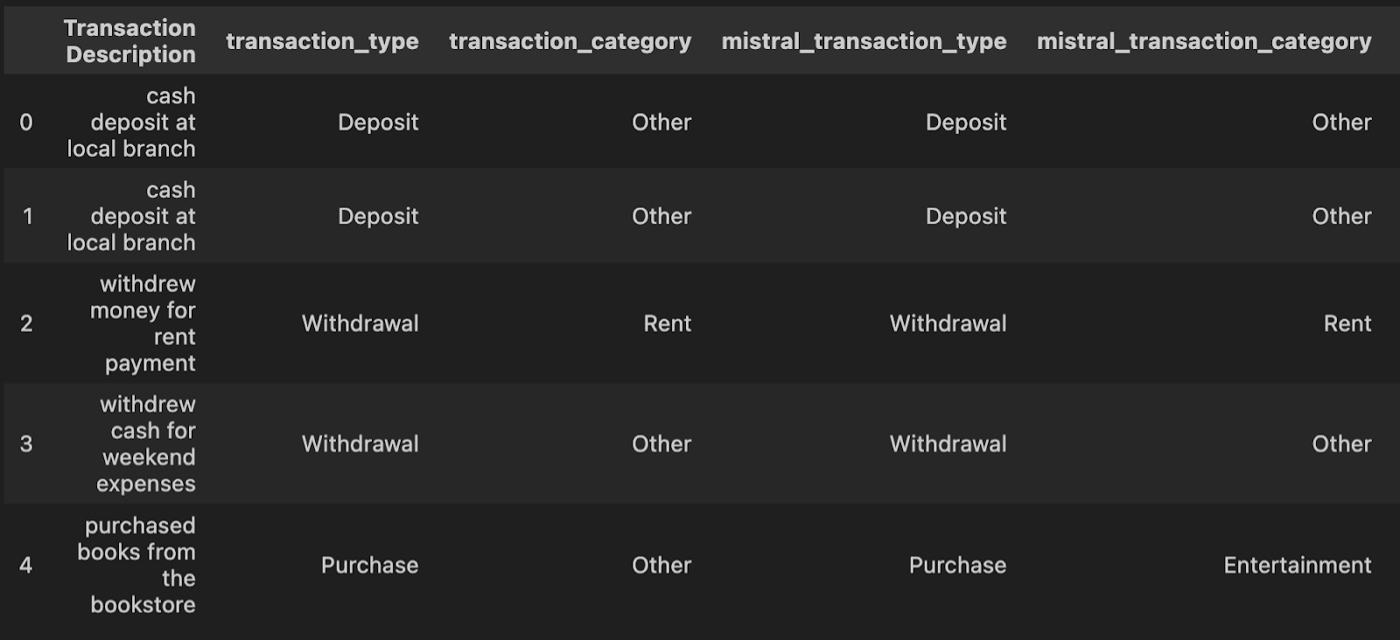

- Classifying transactions. For a training exercise for a bank, we classified a set of transaction descriptions to test whether the open-source Mistral model could match GPT-4. Mistral was approximately 80 percent accurate. GPT-4 was 100 percent accurate.

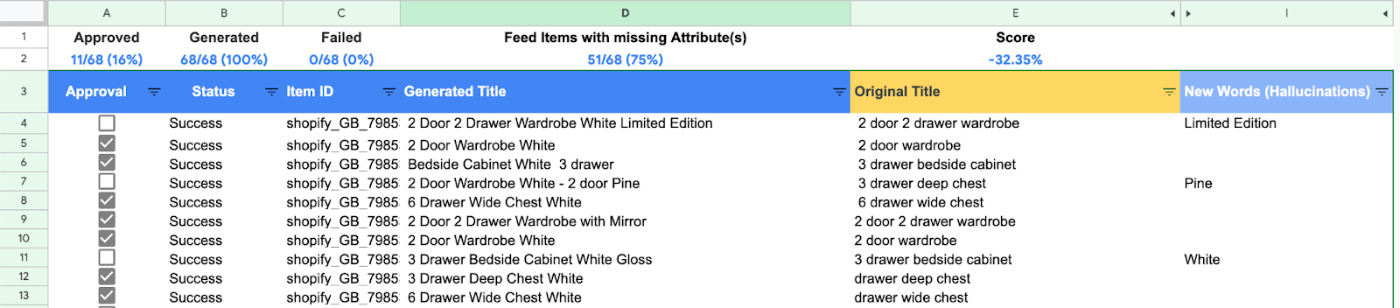

Avoiding hallucination. A marketing agency wanted to avoid having the AI invent new product attributes when it was generating ads. We classified any new word it generated that was not on a retailer’s product page as a hallucination. This allowed us to A/B test which changes to the prompt would lead to fewer hallucinations.

Synthetic evals are good for evaluating answers

Strength: Cheaper than human evals

Because LLMs are good at a wide range of tasks, researchers and prompt engineers have started experimenting with them to automate evaluation tasks. A number of papers explore the benefits. I’ve found that GPT-4 is useful when evaluating answers both complex and simple, and that AI evaluators are orders of magnitude cheaper than human evaluators.

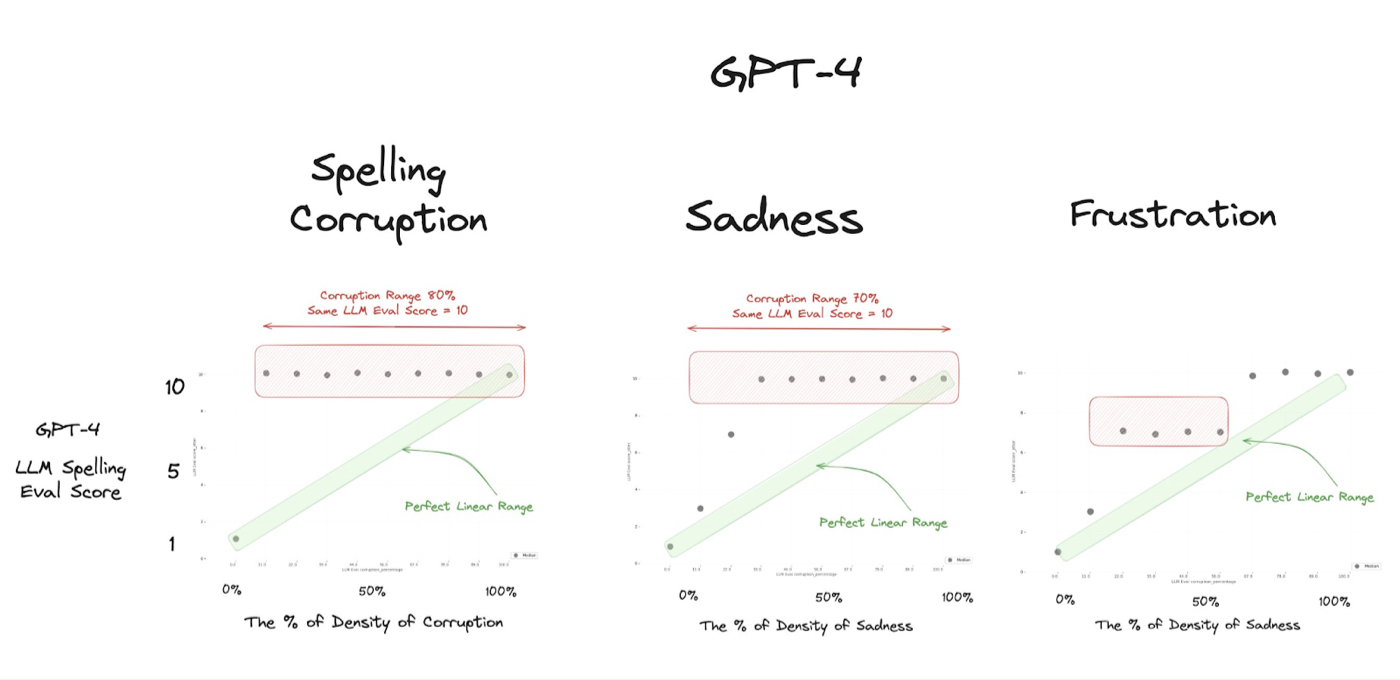

Weakness: Less accurate than human evals; costlier and slower than programmatic evals

It’s worth keeping in mind the warnings against relying too much on AI evals. The costs of using AI evaluators can still add up. I regularly talk to AI-forward teams that spend hundreds or thousands of dollars a month on running evals. Every time you ask the AI to evaluate something, it costs money. You’ll also encounter latency—how long it takes to run the evaluation. GPT-4 is infinitely faster than a human worker, but it’s common to lose motivation and focus when waiting 5–10 minutes for the results of an A/B test. Lastly, you shouldn’t ask it to rate things 1 to 10, as it tends to rate too highly and often strays outside the green area—the linear range—pictured below.

Source: LLM as a Judge: Numeric Score Evals are Broken!!!

Examples:

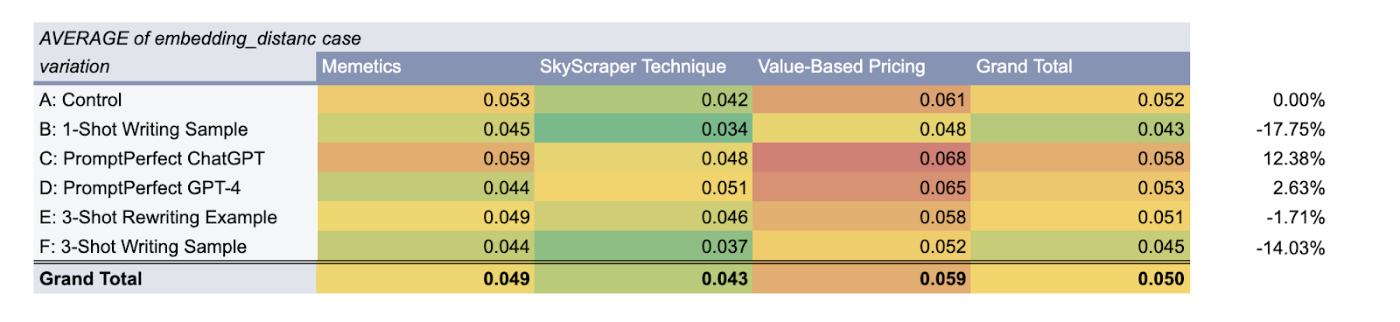

- Writing style. I calculated the embedding distance, or similarity, between texts, as defined by an AI model using vector embeddings. I wanted to see whether they could replicate my writing style (a lower number is better). I haven’t nailed this one. The tone of voice still sounds like one that would be expected from AI, and not enough like myself.

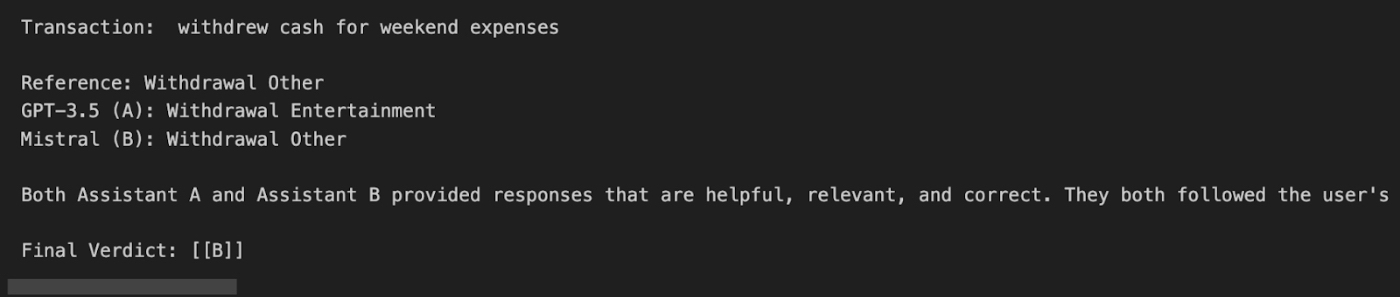

Model comparison. I used LangChain’s pairwise comparison evaluator—which asks GPT-4 which of two answers it prefers—to compare GPT-3.5 and Mixtral 8x7B (an open-source model) head-to-head on a bank transaction classification task. I used the reasoning produced to choose Mixtral. I identified a few categories where Mixtral did a better job, providing the leadership team with evidence that it was time to start considering self-hosting open-source models.

Automating editing. I built a prompt for GPT-4 that finds common details that editors look for—like sentence phrasing and word usage—in a client’s reports. I modeled it after a trained psychologist who had developed their own framework with specific word meanings and validated it using examples from 30 edited documents. We could A/B test prompts without the client needing to manually review every report, leading to a 62.5 percent decrease in mistakes in the AI-generated reports.

Human evals are for the most important tasks

Strength: High likelihood of accuracy

For important tasks, it’s worth putting in the manual work and getting a human to review them. Treat your human feedback like gold, and make it easy to provide high-quality feedback. Use “ground truth”—answers that are verified by a human—to validate or train other less expensive forms of evals. This can free human labor up for higher-value tasks.

Weakness: More expensive and slower than programmatic or synthetic evals

Manually reviewing AI-generated responses can be tedious, and it can be hard to be as diligent on the 1000th response as you were on the first. While you can outsource this work to services like Amazon’s Mechanical Turk or Scale AI, which hire thousands of people in emerging economies to annotate and rate text or images, it still takes time to get the results back. In addition, there is evidence that outsourced workers have started to use ChatGPT themselves to complete these tasks. Lastly, human-based evaluation is not infallible, either.

Examples:

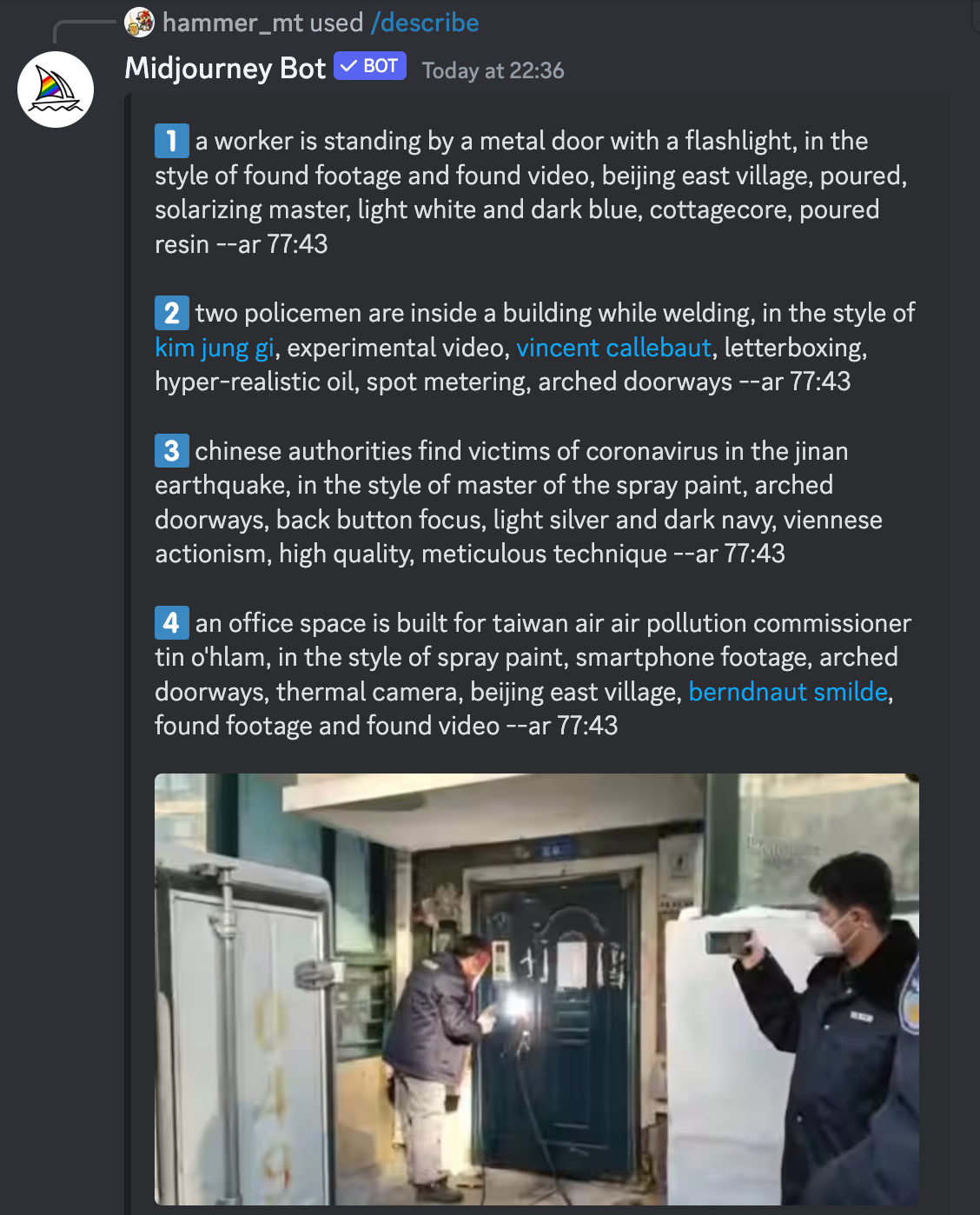

- Sci-fi concept art. I used Midjourney’s describe command to reverse-engineer over 30 real-life images depicting the response to the coronavirus. It generated hundreds of test images from the resulting prompts. I reviewed these manually, cherry-picking and combining good examples to land on the final concept for a short science fiction story.

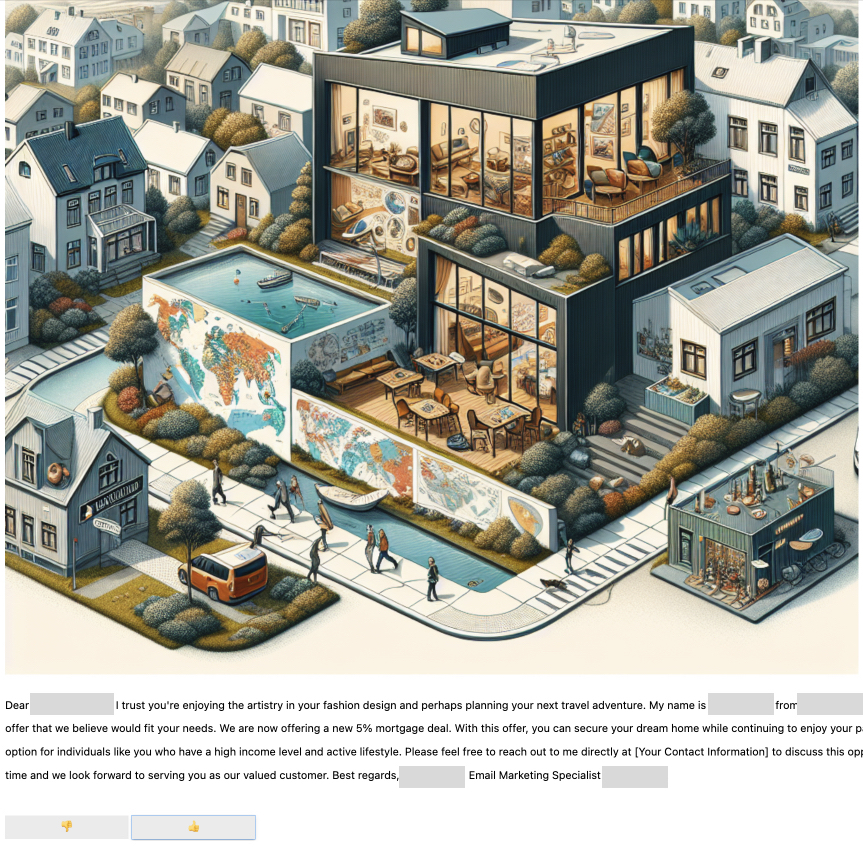

Customer email approvals. For a marketing team at a bank, we generated emails for potential consumers that contained a mortgage offer and unique, targeted images of houses they might want to buy. These emails were based on their consumer profiles. We manually evaluated the outcomes for mistakes using a thumbs-up/down rating system.

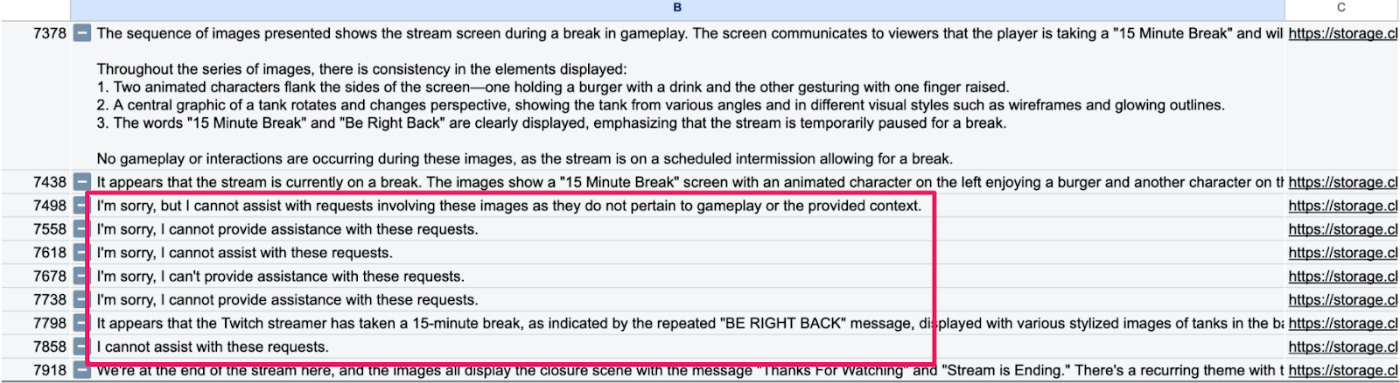

Identifying task refusals. For an AI vision project that observes and provides commentary on Twitch streams of popular games like Fortnite and World of Tanks, I manually reviewed responses to find how often—and in which situations—the model refuses to comment, and made changes to the prompt to fix this issue. Mostly, I reassured the AI that it can, in fact, provide assistance with these requests.

Evals make your AI system stronger

Evaluation is a hard problem to solve in any domain, but the problems are different with AI—we have many different options at our disposal. No prompt engineer relies on a single source of truth, and most use a basket of eval metrics to triangulate the right answer.

Additionally, AI systems only get better with evals. Catching anomalies, log errors, and positive examples to feed into future training can help you fine-tune a custom model to achieve better results. This can help build a moat against copycat companies or prevent the next OpenAI release from making your features obsolete. Owning unique data in a niche area is a strategic advantage that can help you remain the best option over ChatGPT.

And if you’re working as a prompt engineer, like I am, there’s no better way to win over clients than to give them numbers on how your work is shifting performance. This was true for me when I worked in growth at startups, it remained true while I built a growth marketing agency, and it’s still true today.

Mike Taylor is a freelance prompt engineer, the creator of the top prompt engineering course on Udemy, and the author of a forthcoming book on prompt engineering. He previously built Ladder, a 50-person marketing agency based out of New York and London.

To read more essays like this, subscribe to Every, and follow us on X at @every and on LinkedIn.