Hi all! Today we are trying something new. I’m calling it an “idea review.” The goal is to examine a book for its hypothesis and conclusions, and leave the examination of the book’s writing craft to more capable hands. Please leave any feedback in the comments below.

If you are new here, this is Napkin Math, a data-driven newsletter with 17k+ readers that examines the fundamentals of technology and business. Today we are looking at the complexity that is Facebook and whether the company can be fixed.

What would you be willing to pay to stop your imminent death?

For most people, the answer is simple and brief. “Anything.” Money is trivial in comparison with experiencing one more sunrise, another kiss from your spouse, a great big bite of your favorite meal. It is clear that no number in a bank account is worth saving when compared to the things that actually matter.

Next, allow me to ask: how much are you willing to pay for a total stranger not to die? This answer is decidedly more complicated. My Mormon readers may cite their scripture that “the worth of souls is great in the sight of God” and say they would be willing to pay anything. My more actuarially inclined readers would cite the U.S. government’s official estimate of about $10M for a statistical life. The most honest of readers (atheist, religious, or otherwise) would say they wouldn’t spend more than two and a half grand to save a life, the average amount given to charity per household in America.

The balancing act between what we spend and the harm we prevent is ever-present in our individual decisions. What we are willing to pay to fix problems is a question that each of us has to answer individually.

This complicated calculus is the core tension of Facebook. Facebook spent $3.7B in 2019 to rid the platform of misinformation that has life and death consequences, but many of us have the sense that the net impact of Facebook on society is still negative. Perhaps they need to spend more to save more lives. But how much?

It is a platform that undeniably does an incredible amount of good for its 2.89B users. Throughout COVID it allowed the planet to stay connected. During the Black Lives Matter protests last year, Facebook’s apps were all prominently used to share stories that built empathy for the cause. Facebook messaging apps are used to organize little girls’ birthday parties, dog adoptions, and a whole menagerie of the cutest little events you can think of. Even on the commercial side, Facebook’s targeted ad tools allow mom-and-pop businesses to compete (and win!) against the powerful ad teams at major corporations. The entire direct-to-consumer industry owes a large portion of its existence to the company. These examples of good could go on for dozens of pages.

For investors, it is also a net good! Facebook is one of the greatest money-making machines of all time. It recently hit a staggering one trillion dollar valuation, posted a $10.3B Net Income last quarter, and is one of the best-performing stocks of the last 10 years.

And yet.

The company has a cost.

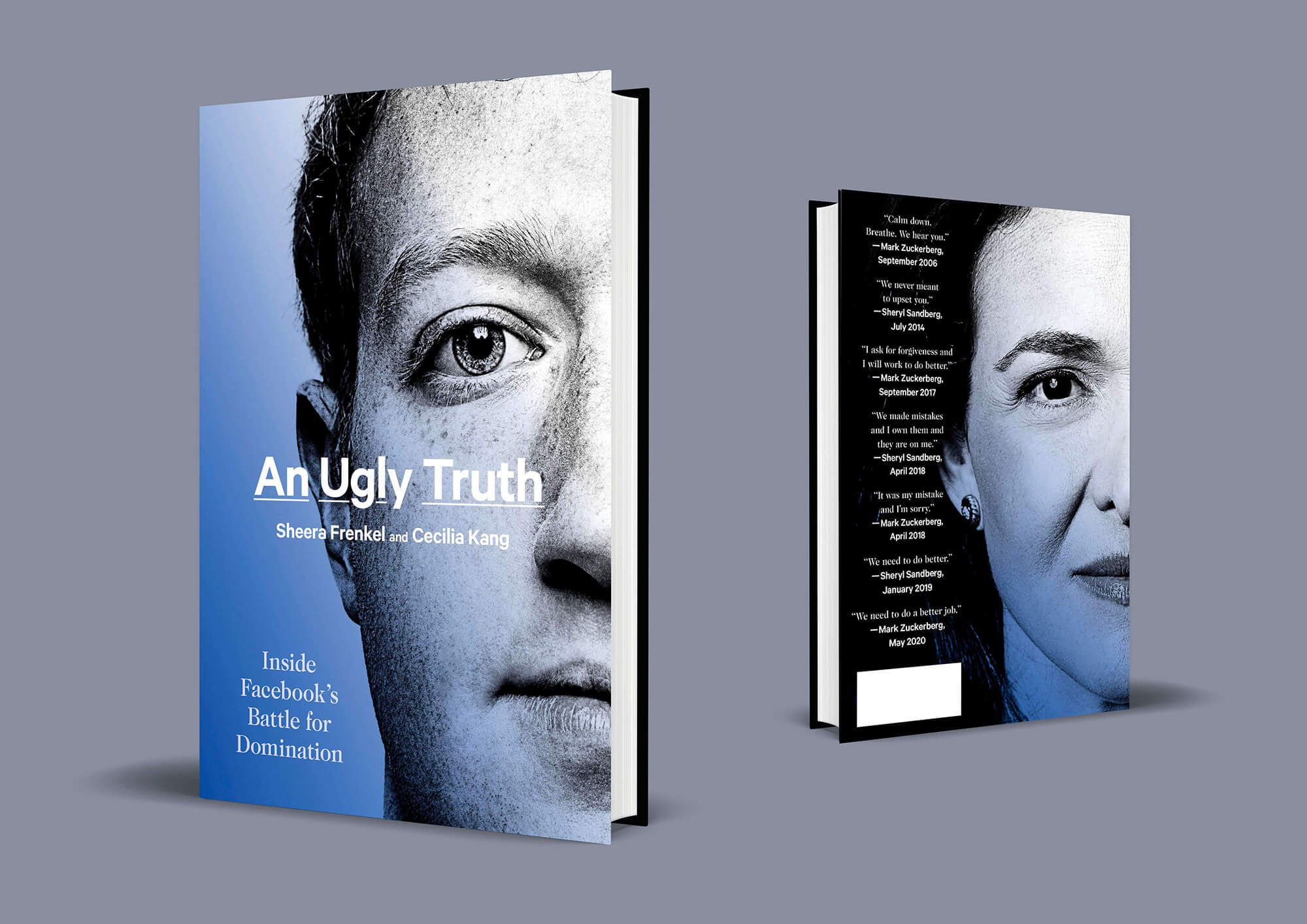

The great balancing act between good and harm on Facebook is the focus of the new book An Ugly Truth: Inside Facebook’s Battle for Domination. The two authors, Sheera Frenkel and Cecilia Kang, both of the New York Times, interviewed more than 400 sources to inform the book. They review the major scandals of the last 4 years, giving never-before-heard details on how the company behaved. It is a stunning achievement of reporting. The breadth of their access, coupled with sources at the very highest levels, allows them to paint a fairly damning picture of the company's actions in some of the scandals referenced above.

For example, they reveal the level of failure that took place in Myanmar. Four years before the genocide in 2017, multiple experts met with Facebook to warn of rising racial tensions. The local populace, which so strongly associated the internet with Facebook that the words were used interchangeably, was hooked on the app. Much of what was being shared was recipes, photos of family, ya know, the cute stuff. It also included posts like, “One second, one minute, one hour feels like a world for people who are facing the danger of Muslim dogs.”

This content wasn’t being caught by the moderators. Shockingly, when the company expanded it had only 5 content moderators, all of whom spoke only Burmese, for a country of 54 million. There are over 100 languages spoken by the local populace and more dialects on top of that. To make moderation more efficient, the company translated its community guidelines into Burmese (they weren’t in the local language before then) and gave users stickers they could place on content to help moderators find the bad stuff. The stickers didn’t quite work:

“The stickers were having an unintended consequence: Facebook’s algorithms counted them as one more way people were enjoying a post. Instead of diminishing the number of people who saw a piece of hate speech, the stickers had the opposite effect of making the posts more popular.”

The situation continued to escalate, resulting in 25K deaths and 700K refugees fleeing the country. The company apologized and never faced any consequences for its role in this calamity.

But Myanmar wasn’t the only failure. Here’s a lowlight list from the last few years:

- The January 6th riots in Washington were partially driven by discussion that took place on Facebook (according to leaked company documents).

- Russian interference in U.S. elections was initially denied, then slowly, painfully acknowledged.

- The company blocked political dissidents in Turkey at the request of its authoritarian government.

- Its automated ad system sold ads for illicit drugs and fake coronavirus vaccines.

- In one of many breaches, the company had 533 million users’ phone numbers stolen and put on the dark web.

- Facebook groups were used by human smugglers to convince desperate migrants to cross borders.

To be clear—this isn’t an exhaustive list. There are many, many more of these stories.

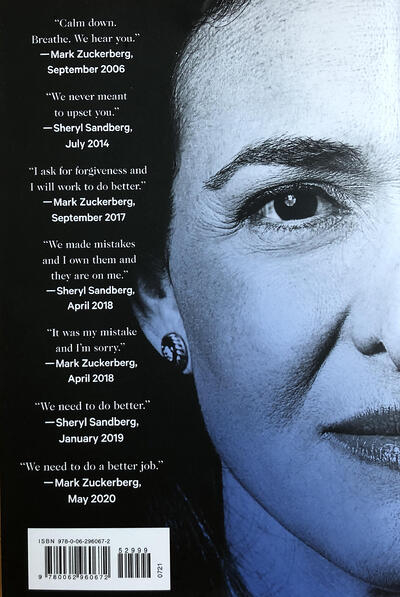

This tale plays out again and again. The company expands quickly, acquires users, and is then shocked by how people use its technology to cause harm. It apologizes and maybe gets a fine. Then it goes on to report record-breaking growth and the business doesn’t miss a step. One of the most damning features of the book is the back cover.

Apology. Apology. Apology again.

Frenkel and Kang’s central argument is that the profit orientation of the organization is at the heart of these problems. Others have argued (prominently Casey Newton of Platformer) that even if you made Facebook a non-profit organization, none of these problems would be solved, because they are inherent to the social web. I think both of these positions are a bit too extreme. There are more complicated forces at play. If Facebook is to be fixed, we first must be honest about the origins of its problems.

The Napkin Math of Content Moderation

The scale of the task facing Facebook is difficult for the mind to comprehend. To illustrate, let's do some fantasy calculations. Facebook has roughly 2.9B monthly active users. That’s about 2 Chinas, 9 Americas, or 414 Paraguays’ worth of people. Let’s make the rough assumption that each user posts four times a month on average. That leaves Facebook hosting ~139B posts to analyze each year. That is a lot of happy birthday wishes.

If you conscripted the entire 356K population of Iceland to content moderator prison, they would each need to personally review roughly 390K pieces of content per year. Their winters are long, they have nothing to do. And they’ll need the time! If each post takes 10 seconds to review, they would simply need to work nonstop for about 45 days straight to review their allotted content.

This situation is farcical but let’s keep it going! Not all the content justifies review by a moderator. The banal posts just slip blissfully into the newsfeed, untouched by human hands. However, by my estimate every human being is at least 1% naughty and our posts probably reflect that. So assuming 1% of content posted violates Facebook’s rules, that leaves only 1.39B pieces of content to be reviewed, or ~4,000 per individual. Not bad, but still too much for our very frosty, island-residing conscripts. We must turn to technology.
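For anyone who wants to check the napkin math, here is a minimal Python sketch of the calculation. Every constant is one of the rough assumptions stated above (2.9B users, four posts a month, 356K Icelanders, 10 seconds per review, a 1% violation rate); the function and variable names are purely illustrative.

```python
# Napkin-math sketch: every constant is a rough assumption from the text,
# not a measured Facebook figure.
MONTHLY_ACTIVE_USERS = 2.9e9
POSTS_PER_USER_PER_MONTH = 4
ICELAND_POPULATION = 356_000
SECONDS_PER_REVIEW = 10
VIOLATION_RATE = 0.01  # "every human being is at least 1% naughty"

SECONDS_PER_DAY = 24 * 60 * 60


def posts_per_year(users: float, posts_per_month: float) -> float:
    """Total posts created in a year under the stated assumptions."""
    return users * posts_per_month * 12


def nonstop_review_days(items: float, seconds_per_item: float) -> float:
    """Days of round-the-clock work needed to review `items` posts."""
    return items * seconds_per_item / SECONDS_PER_DAY


total_posts = posts_per_year(MONTHLY_ACTIVE_USERS, POSTS_PER_USER_PER_MONTH)
posts_per_reviewer = total_posts / ICELAND_POPULATION
flagged_total = total_posts * VIOLATION_RATE
flagged_per_reviewer = flagged_total / ICELAND_POPULATION

print(f"Posts per year:            {total_posts / 1e9:.1f}B")
print(f"Posts per conscript:       {posts_per_reviewer:,.0f}")
print(f"Nonstop days per conscript: "
      f"{nonstop_review_days(posts_per_reviewer, SECONDS_PER_REVIEW):.1f}")
print(f"Rule-breaking posts:       {flagged_total / 1e9:.2f}B")
print(f"Rule-breaking per conscript: {flagged_per_reviewer:,.0f}")
```

Change any one constant (say, eight posts a month instead of four) and the whole chain rescales linearly, which is the real point: the answer is dominated by the size of the user base, not by the exact guesses.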

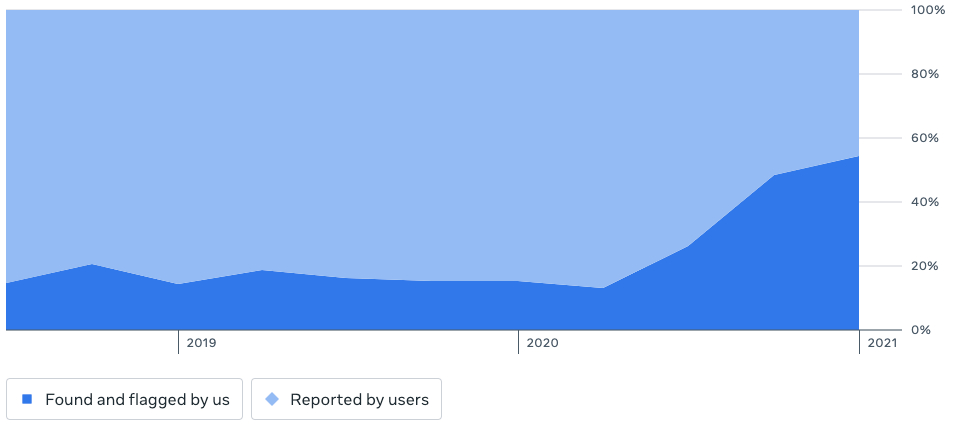

Facebook has AI systems, which it brags about all the time, to help solve this volume problem. And wouldn’t ya know it, the press release from the company says they are doing a great job. (Weird how all press releases say that.) As a sample of their improvement, this is the capture rate of their AI systems on bullying/harassment before people reported it.

All kidding aside, this is a miracle of technology. Facebook is available in 101 languages, allows videos, gifs, pictures, text, and emojis galore, and is still able to capture this much of something as difficult to define as “bullying.”

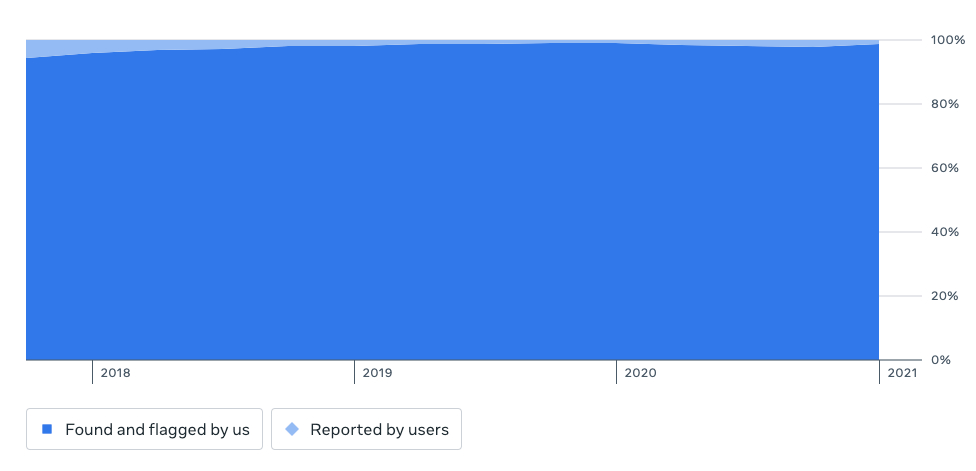

The capture rate on the more obvious categories of adult nudity and sexual activity is even higher than bullying.

I don’t say this as the ultimate defense of Facebook; there is still far too much garbage getting posted on the site. Note: The NY Times just posted an article about the experience of social media for black soccer players in England... it ain’t great. My point is to illustrate just how large the scale of the problem is. In my discussions with health and safety experts, they were universally of the opinion that AI could never completely solve content moderation. That is where the human moderator comes in.

Facebook utilizes over 15K contractors to sift through the stuff that AI doesn’t catch. These contractors will spend hours every day watching beheadings, child pornography, hate speech, and all the worst humanity has to offer. They are the intellectual sewage plant of the internet, catching all the crap so the rest of us don’t drink poisoned water. It is a terrible job. Rotten, horrible, traumatizing. In the U.S. these moderators earned about $30K per year to do this. On average they process 500+ posts a day.
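To put those two figures side by side, here is a small Python sketch of what that workforce can get through. The contractor count and posts-per-day are the floor values quoted above; the 8-hour shift is my own assumption for illustration, not a number from the book or from Facebook.

```python
# Moderator throughput sketch. CONTRACTORS and POSTS_PER_MODERATOR_PER_DAY
# are the floor figures quoted in the text; SHIFT_HOURS is an assumption.
CONTRACTORS = 15_000
POSTS_PER_MODERATOR_PER_DAY = 500
SHIFT_HOURS = 8  # hypothetical shift length, not from the source


def daily_throughput(moderators: int, posts_each: int) -> int:
    """Posts the whole workforce can review in one day."""
    return moderators * posts_each


def seconds_per_post(shift_hours: float, posts_each: int) -> float:
    """Average time a moderator can spend on each post in one shift."""
    return shift_hours * 3600 / posts_each


print(f"Posts reviewed per day: "
      f"{daily_throughput(CONTRACTORS, POSTS_PER_MODERATOR_PER_DAY):,}")
print(f"Seconds available per post: "
      f"{seconds_per_post(SHIFT_HOURS, POSTS_PER_MODERATOR_PER_DAY):.1f}")
```

Under that assumed shift length, each decision gets less than a minute, breaks not included.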

Note: I really wish I had time to write a few thousand words about why big tech companies are so reliant on contractors doing work for them. It is egregious and a violation of the spirit of labor laws, but alas we do not have time for it today. Just know that Facebook does not use this type of labor because it is good for the worker.

It is super, duper important to note that this isn’t just a Facebook problem. Any website that hosts user-generated content will have it. The QAnon conspiracy, which was a stupidity-laced, rage-filled catalyst for much of the Jan 6th riots, was absolutely talked about on Facebook. However, it was also amplified on Twitter! Hosted on 8chan. Discussed on YouTube. And perhaps most hilariously, rampant on Peloton spin bikes.

Much of what makes Facebook “bad” is more about what is bad about human nature and the internet. Each of the companies that host user-submitted content, from Reddit to Pornhub, will have to make choices about user content. There isn’t a fix for human ugliness. Churches have been trying to find one for longer than Facebook has. It doesn’t matter whether Facebook was built by a government, a non-profit, an anonymous crypto community, or anything else; these problems would still exist.

Profit’s Problem

Just because these problems are inherent to the social internet doesn’t mean we should give Facebook a complete pass. This is a company that could do more for content moderation than any other social giant. It posted $10B in net income in a single quarter. Zuckerberg holds the voting majority of shares and can effectively do anything he wants. If there were ever a company that could commit to the path of 20% decreased margins because of a ramped-up investment in security, Facebook would be it.

When you read An Ugly Truth, the biggest surprise is the extent of bureaucratic malarkey present in the organization. VPs stab their underlings in the back, and power struggles between Sales and Product consistently turn bloody. Sandberg, who is usually portrayed as Zuckerberg’s foil, comes off as a Dwight to Zuckerberg’s Michael Scott. She holds only the power that Zuck gives her and rarely takes a stand with significant risk.

When you have a machine as profitable as Facebook, it is difficult to stop the dance. Perhaps the thing that shocked me most was an experiment that Facebook had run after the election of Donald Trump. This section is a little long, but trust me, you need to read this.

“For the past year, the company’s data scientists had been quietly running experiments that tested how Facebook users responded when shown content that fell into one of two categories: good for the world or bad for the world. The experiments, which were posted on Facebook under the subject line “P (Bad for the world),” had reduced the visibility of posts that people considered “bad for the world.” But while they had successfully demoted them in the News Feed, therefore prompting users to see more posts that were “good for the world” when they logged into Facebook, the data scientists found that users opened Facebook far less after the changes were made.

The sessions metric [a data point that showed time spent] remained the holy grail for Zuckerberg and the engineering teams. The group was asked to go back and tweak the experiment. They were less aggressive about demoting posts that were bad for the world, and slowly, the number of sessions stabilized. The team was told that Zuckerberg would approve a moderate form of the changes, but only after confirming that the new version did not lead to reduced user engagement.

‘The bottom line was that we couldn’t hurt our bottom line,’ observed a Facebook data scientist who worked on the changes. ‘Mark still wanted people using Facebook as much as possible, as often as possible.’”

Facebook is as addicted to profit as its users are to its apps. The relationship isn’t healthy for either party.

Note: The Napkin Math team does think there is an additional layer of nuance here. I doubt Zuckerberg's main goal is profit in the next few years. He likely has a much longer time horizon and is much more worried about weakening the network effect of Facebook. Each user's engagement with these kinds of platforms tends to cluster into two buckets: quite often or hardly ever. It is an incredibly dangerous slippery slope to allow yourself to slide down, even a little bit. Facebook likely perceives small losses in engagement as potentially existential threats.

The root of all evil is TBD

Even after reading the book and being shocked by the headlines, I honestly can’t decide whether Facebook is net good or net evil for the world. The internet is dirty and gross sometimes, so I do not exclusively blame them for their moderation issues. Simultaneously, they have consistently made choices to underinvest in moderation and prioritize profits over user good.

When An Ugly Truth was published, there were emails traded among Facebook employees. Managers told underlings to ignore the “misinformation” (and thus ensured that they would all buy it).

Ultimately, that is where I land. Facebook has become the social web’s boogeyman. Society’s ills usually get laid squarely at its feet. Maybe it deserves that, maybe it doesn’t. Until the company gives qualified researchers independent and universal access, all we have to go off of are books like this. As such, this is the finest piece of reporting we have, one that raises compelling questions about what Facebook could be.

If you enjoyed this piece, please subscribe to receive weekly emails thoughtfully examining technology businesses.

Comments

Judging by the arguments you've highlighted, it seems to me the authors of this book were looking for a fancy way to compel Facebook into further full-scale censorship of what people post.

You can't throw the baby out with the bathwater.

When you moderate people's posts, you're essentially taking their freedom of speech away. You're knowingly or unknowingly creating a centralised system where stories, opinions, and information that doesn't suit the narrative of those who pay the moderators won't see the light of day.

We've seen this already.

On one end are practicing doctors, sharing their experiences and recommendations for fighting what they consider regular symptoms 'declared as a pandemic.' And on the other hand are 'experts,' exalted scientists, and business men who think what they say should be taken hook, line, and sinker (without question) because they are whatever they claim to be.

Today, one group is censored. The other can say whatever they like, even when they are not necessarily doctors. Even if what they recommended lacks any atom of common sense, let alone evidence-based science.

All possible because of moderation.

But all of these only touch on the symptoms and not the root causes. If I were these authors, I'd worry more about the root causes.

Per my observation, here are just some of them.

The root cause is the death of personal responsibility - people can post or say what they like, accepting or rejecting what they say or post is your responsibility, not theirs.

It is the gradual death of individuality and conservatism in favor of group think - people have traded their dignity to identify with groups they have no idea of their underlying agenda.

It is because more than ever before, people are now outsourcing their common sense and their God-given thinking ability to 'experts' and 'celebrities' - just because someone with more money or followers says it or approves it doesn't mean it's right.

It is because we're happy to applaud grandiose exclamations without inquiring about the long-term costs.

I could go on and on.

As you rightly said, not even churches or the most religious institutions could fix human beings' behaviours.

So how do these authors expect Facebook to do that? And to think they're subtly pushing for them to do this via more moderation is the equivalent of asking for something grandiose without examining the long-term costs.

Imagine there were moderators for this book. And those moderators were all under Facebook's payroll.

Do you think they would've approved its publication?

Questions!!!
