
If you’ve spent enough time in startups, you’ve likely heard of the concept of the killer app: an application so good that people will buy new, unproven hardware just for the chance to use the software. It is a foundational idea for technology investors and operators that drives a whole host of investing and building activity. Everyone is on the hunt for the one use case so magical, so powerful that it makes buying the device worth it.

People are hunting with particular fervor right now as two simultaneous technology revolutions—AI and the Apple Vision Pro—are underway. If a killer app emerges, it’ll determine who gains power in these platform shifts. And because our world is defined by tech companies, it will also determine who is going to have power in our society in the next decade.

But here’s the weird thing: The killer app, when it’s defined as a single app that drives new hardware adoption, is kinda, maybe, bullshit. In my research, there doesn’t appear to be a persistent pattern of this phenomenon. I’ve learned, instead, that whether a device will sell is as much a question of hardware and developer ecosystem as it is of any killer application.

If we want to build, invest in, and analyze the winner of the next computing paradigm, we’ll have to reframe our thinking around something bigger than the killer app—something I call the killer utility theory. But to know where we need to go, we need to know where to start.

Where did the killer app come from?

Analysts have been wrestling with the killer app for nearly 40 years. In 1988, PC Week magazine coined the term “killer application” when it said, “Everybody has only one killer application. The secretary has a word processor. The manager has a spreadsheet.” One year later, writer John C. Dvorak proposed in PC Magazine that you couldn’t have a killer app without a fundamental improvement in the hardware: “All great new applications and their offspring derive from advances in the hardware technology of microcomputers, and nothing else. If there is no true advance in hardware technology, then no new applications emerge.”

This idea—in which the killer app is tightly coupled with new hardware capabilities—has been true in many instances:

- VisiCalc was a spreadsheet program that was compatible with the Apple II. When it was released in 1979, it was a smashing success, with customers spending $400 in 2022 dollars on the software and the equivalent of $8,000–$40,000 on the Apple II. Imagine software so good you would spend at least 10x its price on the device just to use it.

- Bloomberg Terminals and Bloomberg Professional Services are specialized computers and data services for finance professionals. A license costs $30,000, and the division covering these products pulls in around $10 billion a year.

- Gaming examples abound, ranging from Halo driving Microsoft Xbox sales to The Legend of Zelda: Breath of the Wild moving units of the Nintendo Switch. Every console generation has a so-called “system seller”: a game so good that people will buy the hardware just to play it.

So this type of killer app—software that drives hardware adoption—does exist, and it has emerged frequently enough to be labeled a consistent phenomenon. However, if you are going to bet your career or capital on this idea, it is important to understand its weaknesses. There is one crucial device that violates this rule—the most important consumer electronic device ever invented. How do we explain the iPhone?

How does Apple violate the killer app theory?

When Steve Jobs launched the iPhone in 2007, he pitched it as “the best iPod we’ve ever made.” He also argued (incorrectly) that “the killer app is the phone.” Internet connectivity, the heart of the iPhone’s long-term success, was only mentioned about 30 minutes into the speech! And when the iPhone first came to market, it didn’t even allow third-party apps. Jobs was sure that Apple could make better software than outside developers could. (Wrong.)

Even when he was finally convinced that the App Store should exist, it launched one year later with only 500 apps. By comparison, the Apple Vision Pro already has 1,000-plus native apps, just a few weeks after its release.

To figure out why people bought the iPhone, I read through old Reddit posts, blogs, and user forums. I tried to find an instance of someone identifying the iPhone’s killer app at launch. The answers were wide-ranging, from gaming apps to productivity use cases to even a flashlight app, but there wasn’t one consensus pick.

So how did the iPhone go on to dominate when it didn’t have any must-have apps to begin with? One argument is that it was the App Store itself that was the killer app. By making it easy to download and build apps, Apple made the iPhone a winner. But Apple itself contradicts this theory with its own devices.

When the Apple Watch was released in 2015, it had 3,500 apps. Not one of them was a hit, even though the device launched with more apps than the iPhone did, with an App Store, and with many existing apps ported over from the iPhone ecosystem. It wasn’t until around the watch’s third generation that Apple was able to figure out that its primary use case was health and wellness, neither of which is really app-reliant. They’re more a function of sensors and design.

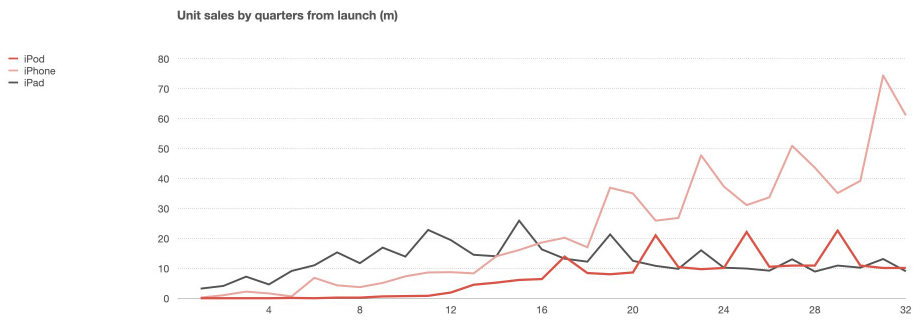

Shoot, pretty much all Apple devices start slow relative to the scale they eventually reach. It usually takes them 2–3 generations before sales start to soar.

Source: Benedict Evans.

Despite a total lack of singular killer apps, the iPhone and iPad have achieved enormous profits and scale. Even beyond Apple and the iPhone, this exception applies to many forms of personal computing, from desktops to laptops. Every year millions of laptops, cellphones, and tablets are sold without a killer app, even without the benefit of Apple’s App Store. How can this be?

Killer utility theory

To answer this question, I propose a new theory on the relationship between hardware and software. I call it the killer utility theory.


Comments


As much as I want to disagree, but it kinda make sense.

The intense competition among big companies prevents them from thriving and doesn't allow for the sharing of any kind of ecosystem