
For the past 24 hours, I’ve felt like Robin Williams fresh out of the jungle in Jumanji, screaming, “What year is it?”

In the 1990s, Microsoft became a personal computer giant by using someone else's technology to build its Windows operating system and by partnering with cutting-edge chipmaker Intel to power the PCs that ran it.

In the 2020s, Microsoft is getting even bigger by using the transformer architecture invented by Google and by partnering with OpenAI for its large language models.

Over the course of two days, Microsoft announced an incredible number of product updates at its Build developer conference. The company’s press team sent Every a list of the new products and features that was about 100 pages long. In all of that, two things mattered:

- A new line of personal computers that have AI integrated at the operating system level

- A new AI agent studio that lets not only individuals but entire teams automate tasks

While OpenAI had the Scarlett Johansson Her voice (oops!) and Google had a man in a bathrobe running around the stage at their events, Microsoft’s presentation (which Dan attended) was fairly drab, consisting of software executives droning through PowerPoint slides. But don’t let this flatness fool you. Microsoft has the scale and power to make AI a reality for the 1.4 billion personal computers running Windows (whether their customers want it or not).

Local scale, local chips, local automation

The big questions whenever a company says its products “do AI” are where the compute happens and how the model gets data to execute its tasks. For example, ChatGPT gets its data from the prompts users enter, files they upload, and its built-in memory of previous instructions. It crunches everything together on a cloud-based GPU and then—presto!—spits out the answer.

Being able to do stuff with powerful chips built into your device makes AI applications run faster and your devices far more private (sorry, FBI). As a result, developers who build AI in their applications can make experiences that feel natural and fast. You won’t experience that awkward pause as the AI sends a request to a nearby data center and waits for the answer to bounce back. Right now, most of your generative AI applications are reliant on a server farm in Wisconsin.

Microsoft’s new personal computers are a big deal because they incorporate new types of compute and new places for models to get their data. The company calls these computers “Copilot+ PCs.”

To accomplish this, Microsoft announced that these machines run on an Arm architecture using Qualcomm Snapdragon X series chips. (If that is word salad to you, don’t worry: it basically means they have new chips that make the computer go fast.) Apple has used Arm architecture for a few years in its own M line of chips, which is partly why MacBooks have been so much faster than Windows PCs. Now Microsoft is bragging that Surface laptops with this setup are 58 percent faster than the M3-powered MacBook Air.

In most computers, local AI computing power comes from a GPU, often one made by Nvidia. Copilot+ PCs also have an NPU (neural processing unit) dedicated to AI tasks. Microsoft says the NPU “uses less power and is far more efficient at AI tasks than a CPU or GPU,” so you get the benefits of local compute at a lower power cost.

That local power allows Microsoft to perform AI workflows with local data that would be challenging to do with a cloud-based system. The most significant launch at Build was software called “Recall” that is only available on Copilot+ PCs. Your computer takes regular snapshots of everything that happens on your screen and uses AI for semantic search across all of that data. Rather than relying solely on keywords or image search, Recall can understand the logic and intent behind what you are asking for. No more lost files or forgotten conversations: Recall can find all of it. (Much of the discourse on Twitter was that this feature is “an invasion of privacy.” News flash: Windows already knows what you are doing on your computer! That’s how it works. Now it just remembers your actions and has a search bar that works.)
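Microsoft hasn't spelled out Recall's internals, but the general idea behind semantic search is easy to sketch. Instead of matching words, each item is turned into a list of numbers (an "embedding"), and results are ranked by how closely each item's vector points in the same direction as the query's. This is illustrative only: the snapshot titles and the three-number vectors are made up, standing in for a real embedding model, and none of it is Microsoft's code.

```python
import math

# Hypothetical "screen snapshots" with hand-picked toy vectors.
# A real system would get these from a trained embedding model,
# with hundreds of dimensions instead of three.
SNAPSHOTS = {
    "Teams chat about the Q3 budget": [0.9, 0.1, 0.0],
    "Photo of a beach vacation": [0.0, 0.2, 0.9],
    "Spreadsheet of quarterly spending": [0.8, 0.3, 0.1],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def keyword_search(query, docs):
    """Return the docs that share at least one lowercase word with the query."""
    words = set(query.lower().split())
    return [d for d in docs if words & set(d.lower().split())]


def semantic_search(query_vec, docs):
    """Rank all docs by how similar their vectors are to the query vector."""
    return sorted(docs, key=lambda d: cosine(query_vec, docs[d]), reverse=True)


query = "money discussions"
query_vec = [0.85, 0.2, 0.05]  # hypothetical embedding of the query

print(keyword_search(query, SNAPSHOTS))  # [] because no words overlap
# The two money-related snapshots rank above the beach photo:
print(semantic_search(query_vec, SNAPSHOTS)[:2])
```

Keyword search comes up empty because the query shares no words with any snapshot, while the vector ranking still surfaces the budget chat and the spending spreadsheet. That gap is the difference between a search bar that matches text and one that matches meaning.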

Last week, after the OpenAI and Google presentations, I argued that the end state of AI software would be a “meta-layer” over all workflow software. AI will automate the tedious tasks, making all the annoying clicking, dragging, and dropping go poof. OpenAI’s attempt to create this meta-layer is horizontal software, with an ever-present desktop and mobile app. Google’s attempt is with the Chrome browser and the Android mobile operating system. The Copilot+ PC is the Microsoft version of this strategy: It will try to build the software meta-layer into its own operating system.

While only these companies’ newer devices have the GPUs and NPUs necessary for this version of AI software, it’s reasonable to assume that, as the models improve, these capabilities will come standard. As I mentioned earlier, AI models work because of data and computational power, and the operating system is ideally positioned to access both. Factor in that Microsoft already has the Bing search engine and the Edge web browser, and the pieces come together for a compelling AI personal computing stack that only Google could potentially match. But because Google lacks a comparable PC install base, its reach would be mostly restricted to mobile devices.

Recall and Copilot+ are the first steps on that journey. As Dan discussed yesterday, GPT-5 is coming within a year, and early indications are that it will be a genuine leap forward. This week Microsoft came across as confident that scaling laws would hold. With that in mind, it doesn’t feel unreasonable for Recall to transform into an agent platform that automates your tasks.

This is not a sci-fi future or a wildly speculative blog post. This is a reasonable forecast, with incremental product steps already understood, of what should be available in the next three years or so.

But what made Build so impressive is that AI wasn’t limited to personal computing. It extended to the enterprise.

Connecting the enterprise dots

Copilot+ PCs allow for local memory and compute, but for knowledge work, you need data that is held in disparate SaaS applications, in the cloud, and on other people’s computers. The good news is that Microsoft has API connections to SaaS applications, sells database cloud services with Azure, and does device and identity management with its product Entra ID.

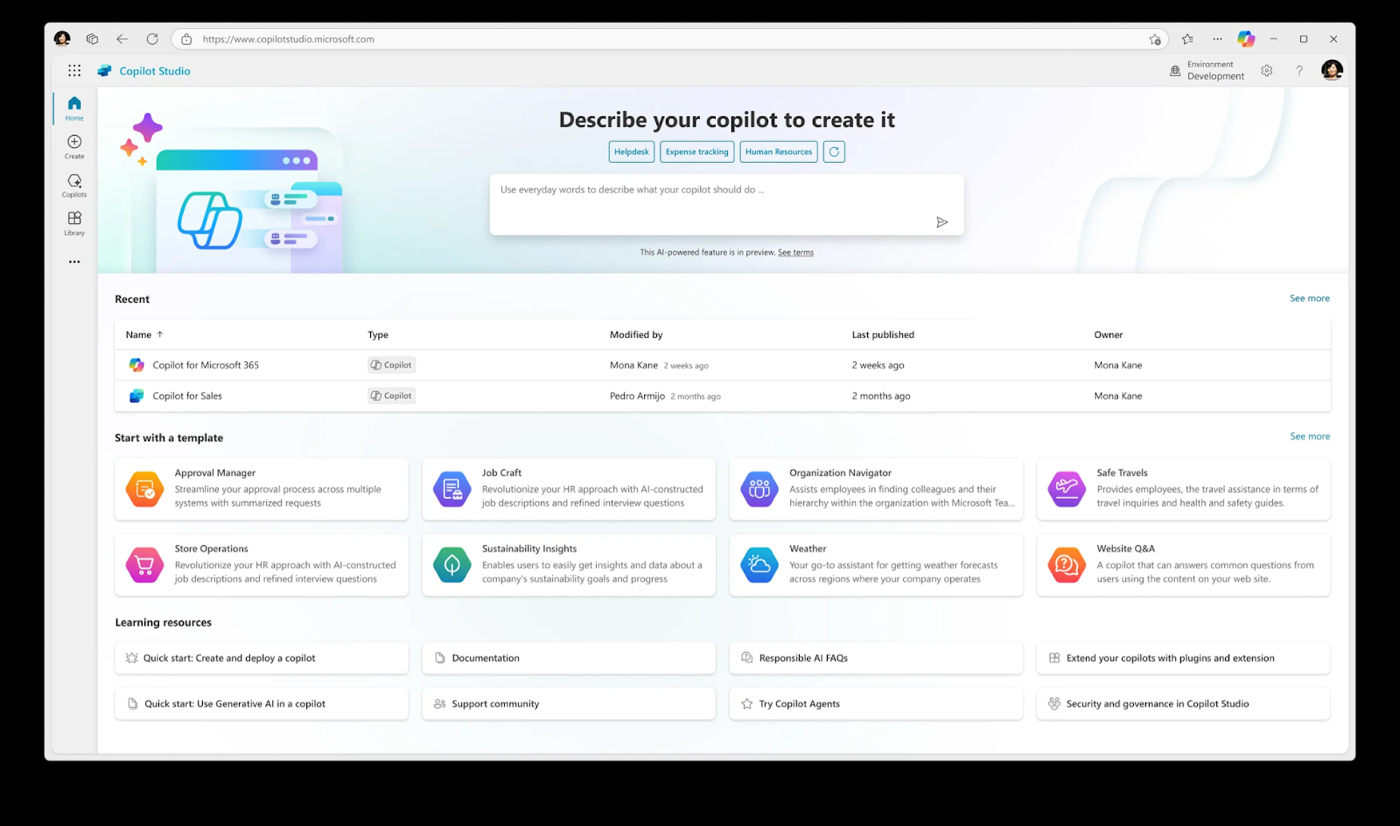

At Build, Microsoft announced the first step toward building AI to connect all these sources of data. Copilot Studio, as it’s named, allows for knowledge workers to automate repetitive tasks (such as transferring data from one source to another) without having to write code. You describe what you want the AI to do in natural language, click a few buttons to connect it to the necessary data sources, and voilà, you have automation. Previously that automation had to be triggered by opening up a chat window and prompting it with natural text queries, but now it can happen autonomously in the background.

Source: Microsoft.Copilot Studio is housed within the Microsoft Power Platform, a suite of software focused on business process automation. As of July 2023, this suite of software was at over a $2 billion revenue run rate, grew 72 percent year over year, and had over 30 million users. That Copilot is being housed there is an explicit endorsement of the use case I’m describing.

To be fair, this type of automation software has existed for a while. Robotic process automation (RPA) company UiPath did $1.3 billion in revenue last year selling similar levels of automation. The difference is that Microsoft offers Azure databases, connectivity to all your apps, and, with these LLMs, a way to use it all with natural language. It is an existential threat to UiPath, which is hedging its bets by participating in a $220 million seed round for “H,” a French foundation model company that competes against OpenAI.

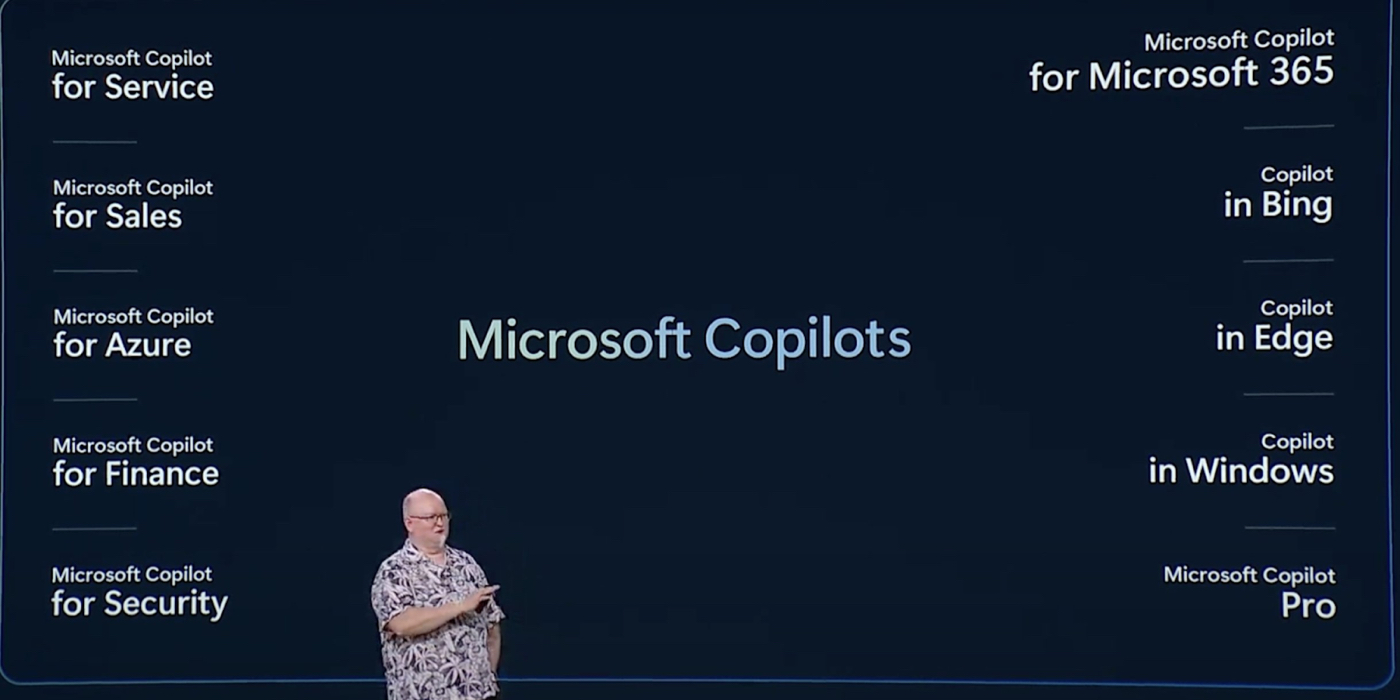

Microsoft also embedded Copilots across all over its applications, resulting in this absolute cacophony of branding.

Source: YouTube.There are a few software interfaces where these apps all connect (mostly Microsoft Teams and Copilot Studio), but just like with personal computers, the vision is clear. Over time, there will be fewer individual Copilots for individual apps and one overarching Copilot, acting as a meta-layer across an enterprise’s data and its workflows.

Models are sexy, but insufficient

The OpenAI and Microsoft teams are confident that they can significantly improve their models’ reasoning and agentic capabilities. Anthropic is also training a model that uses four times as much compute as its current largest model. These headlines are both scary and exciting, representing a genuine innovation in human-computer relationships.

They are also little more than science projects.

Models are powerful, but they only matter when they can be easily used. Without data, sufficient compute, or integration with applications, they don’t matter. Microsoft’s presentation stood out to me for its tedium. The humdrum work of APIs and databases is the labor that will bring AI to billions of people around the world. This week, Microsoft made a concrete, tangible step toward that future by embracing boring.

Evan Armstrong is the lead writer for Every, where he writes the Napkin Math column. You can follow him on X at @itsurboyevan and on LinkedIn, and Every on X at @every and on LinkedIn.
