We've created something incredible—so why are some of us so scared of it? Sari Azout, who last wrote a Thesis for Every about the primacy of creativity over productivity, challenges our fundamental assumptions about artificial intelligence by reframing it as "collective intelligence"—aggregated human knowledge rather than something alien and adversarial. Through three "reality gaps," she reveals how we can shift our perspective on what AI means for our work, and what it means to stay human in an increasingly automated world.—Kate Lee


Will AI replace humans?

It’s the “how’s the weather?” of AI discourse—safe enough for dinner parties, provocative enough to fill panels and conferences. But the real question hiding beneath it is: Why, when faced with one of humanity’s most remarkable technological breakthroughs, do we instinctively frame it as a threat to ourselves?

Like many things in tech, it started with a funding pitch. In 1955, researcher John McCarthy needed a catchy term to attract funders to his work. “Artificial intelligence” sounded bold and futuristic. The name stuck, and with it a mental model: AI as something cold, alien, adversarial.

If you write with the help of a human editor: You’re collaborative. If you write with the help of AI: You’re lazy, cheating, and inauthentic. Similar activity; completely different emotional response.

What if we saw it differently? In a conversation with Ezra Klein, artist Holly Herndon proposes a simple reframe. Instead of artificial intelligence, call it collective intelligence: “AI is aggregated human intelligence. [...] Emphasizing the collectivity (something built on the commons), over the artificiality of it (a feat of technology), gives us an entirely new way to see, perceive and relate to the technology.”

Imagine if OpenAI had launched as OpenCI: Open Collective Intelligence. Our cultural response might have flipped. Using CI wouldn’t be seen as cutting corners—it would be seen as a resourceful way to leverage the best of human knowledge. You’d even be judged for not using it.

Once I started seeing LLMs as aggregated human intelligence, everything shifted. I stopped asking, “Will this replace me?” and started asking, “What might I do with this?”

Over the past year, I’ve been obsessively using AI tools while building my company Sublime—to write, to think, to design, to code. The more I used AI, the more I saw similar gaps—places where the story we tell ourselves didn’t match the reality of using the tools. Crossing them changed how I think about work and what it means to be human. It might do the same for you.


Reality gap #1

Expectation: AI will reduce our workload, giving us more leisure time

Reality: AI expands what's possible—raising standards, and creating more work

Like many, I imagined robots doing my job would give me time back—to read slowly, cook without rushing, take more family vacations, and be with my kids instead of just near them.

Instead, I found myself caught in a paradox: The time it would take to pursue everything that is now possible and exciting because of AI, from games and art projects to essays and research reports, greatly exceeds the time I save using AI.

This isn’t a new pattern. The 20th century brought an explosion of labor-saving technologies: refrigerators, washing machines, vacuums, and dishwashers, all of which should in theory have reduced housework. But women in 1960 spent more hours on housework than in 1920. Before washing machines, families washed clothes maybe twice a year. Afterward, weekly laundry became the norm.

Why? Because technology doesn’t exist in a vacuum. It shapes us as much as we shape it. It doesn’t just make old tasks easier—it creates entirely new standards.

Reality gap #2

Expectation: AI will replace expertise

Reality: AI changes the nature of expertise

I assumed AI would democratize expertise, making anyone a designer, writer, lawyer, or engineer. To some degree, that's true—technical barriers are crumbling. But the reality is far more nuanced.

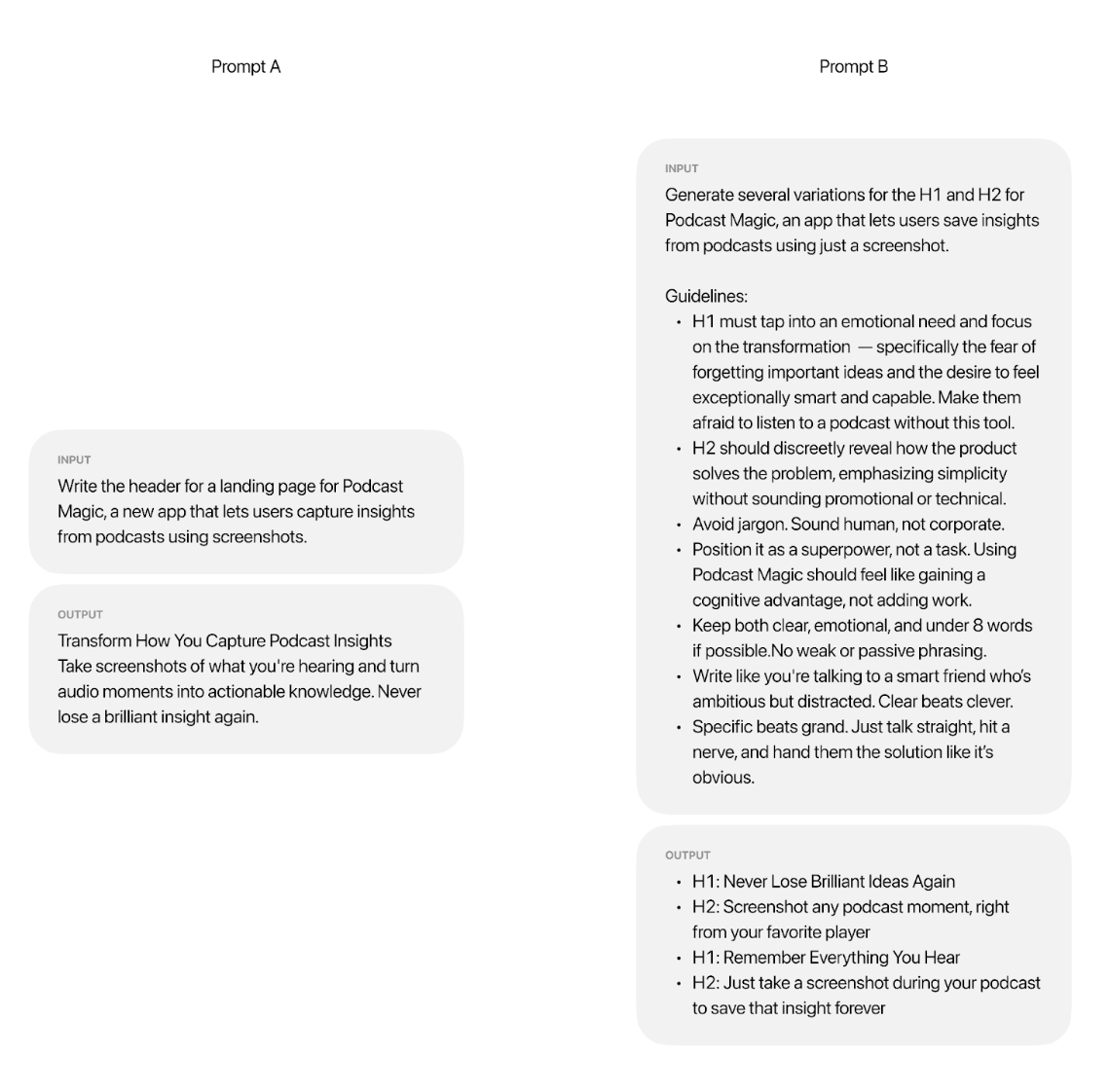

To shape the inputs, and to evaluate outputs, you still need expertise. Let’s look at an example of using LLMs to generate the headline copy for a new product:

Prompt A (on the left in the image above) could have been written by anyone. Prompt B, on the other hand, might come from someone with marketing and copywriting experience—someone clear about exactly what they want. Same tool, different operator, wildly different results.

With LLMs, the real expertise isn’t in doing the work. It’s knowing how to guide and evaluate the work. It’s knowing what is worth prompting, which is another way of saying knowing what’s worth doing.

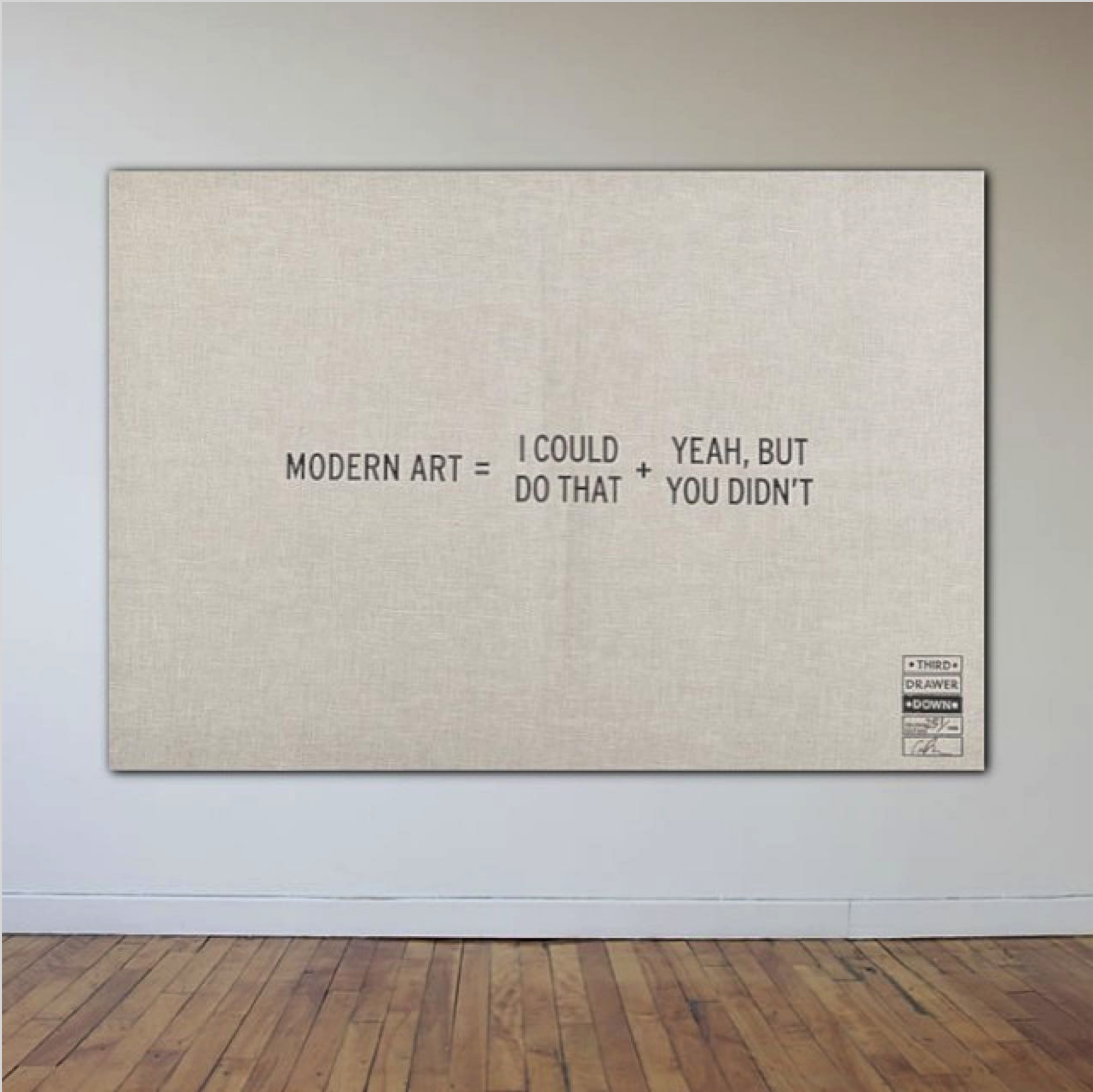

This reminds me of modern art. Conceptual artist Craig Damrauer defined it as: "Modern art = You could do that + Yeah, but you didn’t"

It’s like when you see a plain black square painting that goes for $80 million at auction, and you think, “Come on. I could’ve done that.” But you didn’t. They did. They knew what was worth doing, when, and how to frame it so it mattered.

More and more, this dynamic is going to show up outside of art. Things will appear simple or easily replicable on the surface. But the more and better access everyone has to AI tools, the clearer it becomes that the final bottleneck to great work is not knowledge or information. It’s not even intelligence. It’s that elusive, intangible quality: call it taste, creativity, courage, imagination, agency.

That’s a completely different definition of expertise.

Reality gap #3

Expectation: Machines are becoming too human

Reality: We should worry more about humans becoming machines

If your worth—like mine—has been tangled up in your output, it’s hard not to feel the creeping anxiety when a machine can produce in seconds what used to take you days or months.

What’s more unsettling is realizing we’ve been training ourselves to behave like machines. We worry AI will replace writers. But have you seen most of what’s online? Half the internet is engagement farmers on LinkedIn selling you five steps to 10x your productivity by 6 a.m.

We join Twitter (X) for the love of conversation, then become obsessed with likes. We pursue research for discovery, then get trapped chasing citation counts. We try to hire and retain the best teachers, so we measure them by how well their students perform on tests, and then teachers teach to the test.

Put differently, we try to measure what we value—and end up valuing what we can measure.

The idea that our worth can be counted in KPIs and OKRs is a modern invention. In ancient Greece, the good life was defined by wisdom and contemplation. In many indigenous cultures, status comes from storytelling abilities, spiritual connections, and relationships. Even practical measures had natural limits: “enough to survive the winter.” Now we optimize for maximum output, a limitless treadmill.

So when AI shows up and offers to help us “do more,” we rarely ask: more of what? For whom? To what end?

Browsing past dozens of landing pages of new AI tools promising to automate, optimize, accelerate, and 10x what we already do, I can't shake the feeling that we're missing the point. That the real challenge is to rethink what we optimize for, and loosen our grip on the idea that the things we value can be neatly quantified.

At Sublime, we think about using semantic search and AI-powered connections to make people more creative, not just more productive. Product studios like Danger Testing don’t chase daily active users. They optimize for resonance: Did someone screenshot this and send it to a friend? Bhutan’s Gross National Happiness and New Zealand’s “wellbeing budget” represent national-scale attempts to optimize for human flourishing rather than simply economic expansion.

The question isn't whether AI will make us more productive, but how those productivity gains can serve our humanity instead of making us even more like machines.

Becoming unLLMable

In May, I delivered a version of this essay on stage at the Sana AI Summit in Stockholm. The talk was titled “Becoming unLLMable.” My goal was to inspire and lead with hope. And it seemed to do just that: people were moved. For a moment, my own anxieties about AI quieted.

But afterward, in conversation after conversation, one question kept coming up: How do I become unLLMable?

Despite the optimism, the thrill of possibility, and the hope of overcoming these “reality gaps,” the deeper fear of being replaced by AI hadn’t gone away for the audience. It hadn’t entirely left me, either. It’s made me wonder: What makes work unLLMable, not in theory, but in practice?

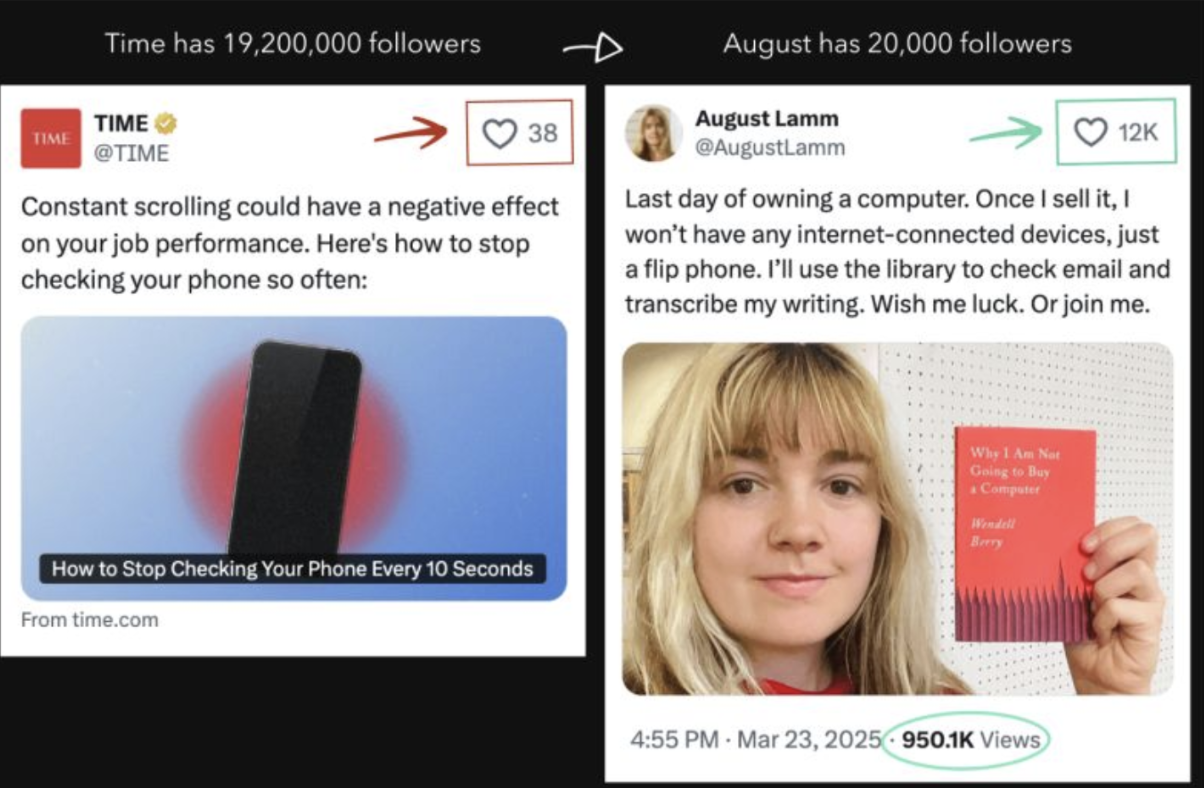

Here’s a telling contrast I recently found. Time magazine published an article on phone addiction. The publication has millions of followers on X. Its post about the piece garnered 38 likes. Meanwhile, a woman named August Lamm posted a photo of herself holding a copy of the book Why I Am Not Going to Buy a Computer by Wendell Berry, announcing she’s switching to only using a flip phone. Her tweet got nearly 1 million views.

Time's article feels like something anyone could have prompted an LLM to write. Lamm's post, by contrast, carries the weight of lived experience—you can't prompt your way to selling your laptop.

That, I think, is a glimpse of what unLLMable work looks like. It’s not simply what AI can’t do yet.

It's work that emerges from the irreducible complexity of human experience: the accumulated weight of your failures, the specific texture of your obsessions, the choices and risks you're willing to take because of what you've survived. It's work that requires you to have been somewhere and felt something.

I don’t know exactly how we protect that, or how we cultivate more of it. But that’s where my curiosity is now.

Maybe the last job left will also be the hardest: staying human.

Sari Azout is the founder of Sublime, a knowledge tool designed for creative thinking. She was previously a partner at Level Ventures and occasionally publishes her writing on Substack.


Comments


This is one of the best articles I have read so far about AI. I love all of it: "Collective Intelligence", the reality gaps, "becoming unLLMable"... fascinating stuff! Thank you.

Humanity is also recognizing who spotted that "Time vs August Lamm" difference. Being human is also attributing the value of finding things like this. Was it too difficult to admit that THAT example came from Harry Dry? He flagged it, he described the difference, he brought it to our attention. The effort to not recognize it is just weird.

Source: X / The author.

Sure.