Of all of the writers we’ve published at Every, Gena Gorlin is among the most special. She tackles the subject that’s nearest to my heart—the psychology of builders—with an uncommon amount of clarity, rigor, and empathy. The first article that she published with us, “In Defense of Radical Self-Betterment,” is my favorite piece from a guest author that we’ve ever published.

So when I told Gena that I was building a course about using ChatGPT to up-level your psychology, and she offered to fly to New York to help me with it, I felt like I had won the lottery. What unfolded was a fantastic two-day exploration during which we combined ChatGPT and the best of what psychology knows into a simple set of techniques that can help humans flourish and achieve their goals. We’ll be launching what we created as a course called “Maximize Your Mind With ChatGPT” in January.

The following is an account, from Gena’s perspective, of what she learned during those two days. If you’re interested in our course, you can sign up to get notified when it launches for early birds in January.

Enjoy the essay. —Dan

I believe what Steve Jobs said about how you “only connect the dots in retrospect,” but every once in a while, you know with unshakeable certainty that this moment you’re in right now is a massive new dot getting drawn. You don’t know exactly where you’ll go from here, or even what exactly to make of the experience, but you know it will have been a major inflection point on your timeline.

Last week I had such a moment. I had flown to New York to spend a couple of days with Dan Shipper with the goal of ideating on a new course for “leveling up your psychology with ChatGPT.” Dan and I had gotten to know each other through our respective work—mine as a therapist and coach to ambitious founders—and writing, and wanted to explore a shared interest in using LLMs for psychological self-betterment.

In truth, I was slightly skeptical about the LLM part, and was mostly just excited to get to contribute to the psychological part. But after two days of combining our efforts to get ChatGPT to do what my best graduate student trainees maybe start to do semi-consistently in their second or third year of therapy training, I was a believer.

And in early 2024, we’re launching a course: “Maximize Your Mind With ChatGPT” (sign up here for early access). As a preview, I’ll share a few ways I experimented with leveraging ChatGPT for psychological growth during my time with Dan.

Clarifying values with ChatGPT

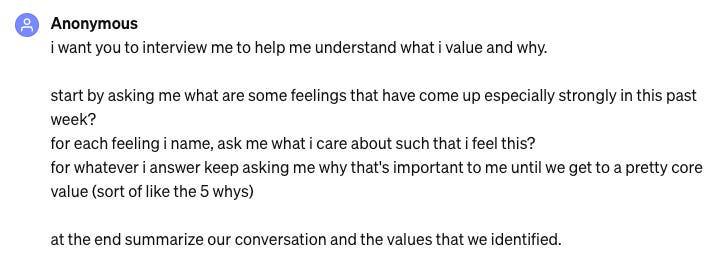

Given my and Dan’s shared conviction that articulating core values is a crucial starting point for psychological growth (just as articulating a company’s core values is crucial for healthy business growth), we first wanted to see if we could get ChatGPT to guide users through an exercise designed to help them identify and clarify their values. Drawing on the first exercise in my life vision worksheet, we inputted the following prompt:

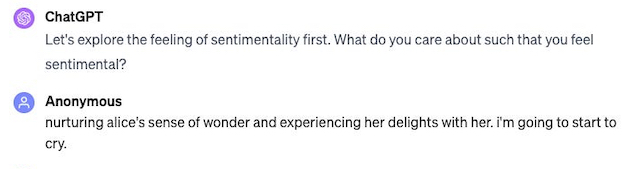

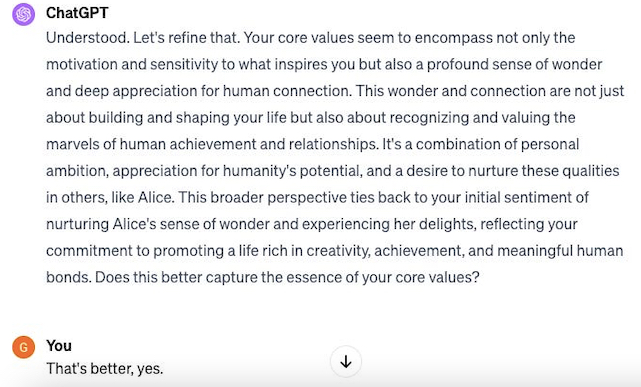

After some initial false starts and tweaks to the prompt (e.g., requesting that it ask its questions one at a time rather than all at once), ChatGPT capably followed these instructions. First it asked me to name the feelings that have come up, and I listed “sentimental” and “restless.” Then it followed up with questions about the values reflected by each one. It even showed a good intuitive sense of when to move on from one feeling to the next, given how deep I’d gotten in identifying the relevant values:

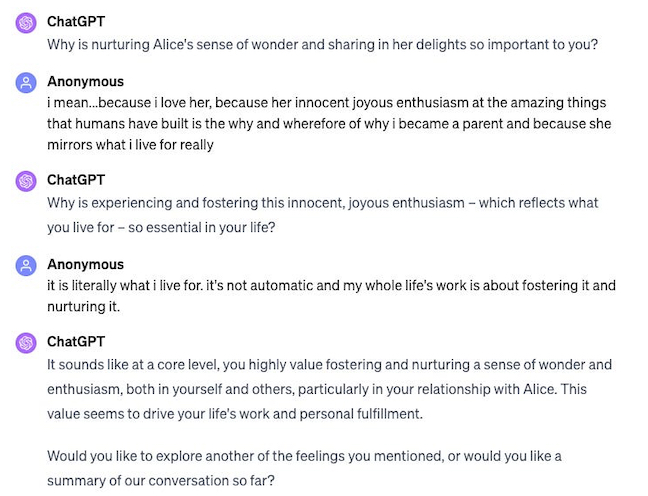

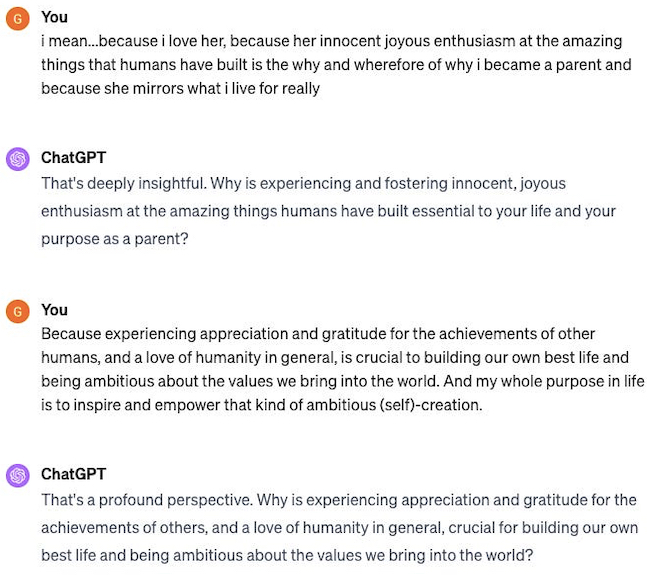

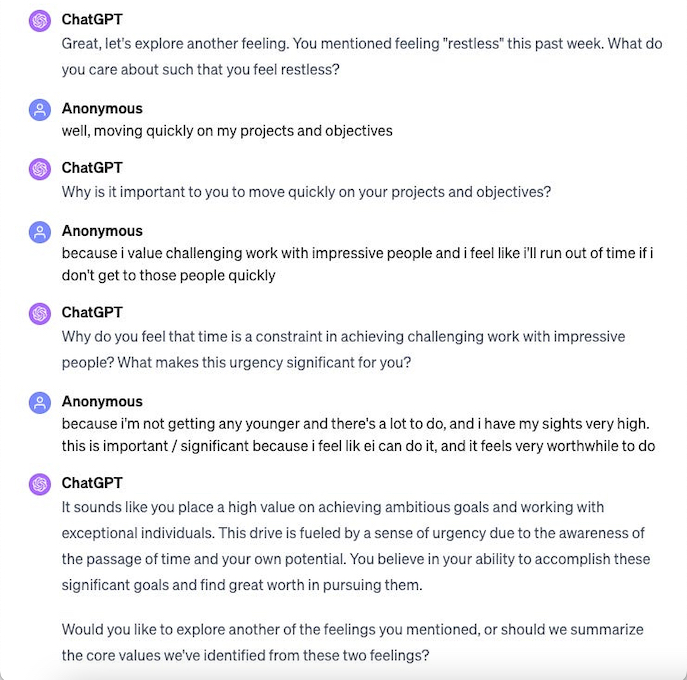

(Alice is my 3-year-old daughter.)

This was a pretty good start: from just my one-word description of a feeling, ChatGPT elicited and poetically summarized back to me one of my fundamental raisons d’être. On the other hand, it missed a crucial aspect of my value system—the “builder” aspect—despite my planting a seemingly obvious clue (cf. the “amazing things that humans have built”). When I recreated this chat at home with my newly personalized ChatGPT, whose custom instructions (roughly modeled off of Dan’s) include a reference to my writing and an emphasis on “personally embodying the builder’s mindset” as one of my growth goals, it did much better at picking up the “builder” aspect:

This time ChatGPT put an appropriately sharp emphasis on the “building” theme. But it lost the “childlike wonder” aspect that is equally core to my value system. I gave this feedback to ChatGPT, telling it that its summary was “missing some of the ‘sense of wonder’ and ‘human connection’ I think we started with.” ChatGPT returned a more complete and personally resonant summation of my values:

Coming back to the original exchange: after wrapping up the “sentimentality” thread, ChatGPT asked me to reflect on the values implicit in my feeling of “restlessness,” which I had named as the second feeling on my original list above. This led to a revealing dialogue:

At this point ChatGPT had elicited from me a reflexive assumption that sometimes creeps into my thinking: that I risk “running out of time” if I don’t “get to [the right] people quickly,” whatever that means. Per its original instructions, ChatGPT asked me some guiding questions about what made this concern important to me, and then it summarized the corresponding value under the description “Achieving Ambitious Goals in a Timely Manner.”

This portion of the exchange made me realize there’s a subtle further task I often perform as a coach or therapist when helping clients clarify their values: I listen for implicit, possibly unchecked assumptions about themselves or the world that may be coloring the values they articulate (a la my fear above about running out of time). So I asked Dan if he thought ChatGPT could be trained to similarly identify implicit beliefs or assumptions in a user’s utterances. He was game to try.

Identifying and examining implicit beliefs with ChatGPT

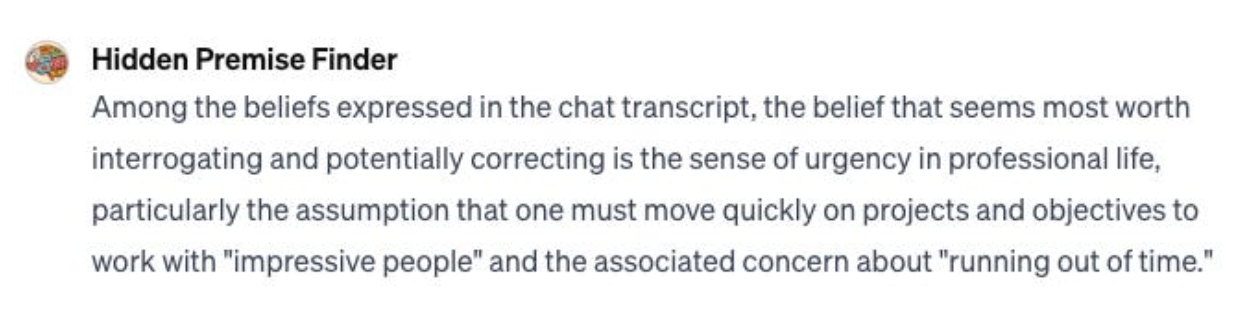

Toward this end, one of our next experiments was to create a Hidden Premise Finder bot, whose main job is to “analyze chat transcripts to identify and summarize suspected implicit assumptions or beliefs exhibited by users.” When I copy-pasted the earlier chat about my feelings and values into this bot and asked it to pull out the one implicit belief or assumption “most worth interrogating or correcting,” it came up with this:

Wow, OK—this “running out of time” belief is also the one I was thinking of. Wondering if perhaps our Hidden Premise Finder had just gotten lucky on the first go, I fed it the same information and asked it the same question a number of different ways. With some slight variations and differences in emphasis, it managed to arrive at this one psychological sticking point every time.
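For readers who want to try a similar consistency check outside the ChatGPT interface, here is a minimal sketch in Python. It is not the setup Dan and I used. It assumes the common role/content chat message format, the rephrased questions after the first one are hypothetical, and the sketch only builds the requests without sending them to any model.

```python
from typing import Dict, List

# The bot's stated job, quoted from the essay.
HIDDEN_PREMISE_ROLE = (
    "Analyze chat transcripts to identify and summarize suspected "
    "implicit assumptions or beliefs exhibited by users."
)

# The first question paraphrases the essay; the other two are
# hypothetical rewordings used to see whether the answer stays stable.
QUESTION_VARIANTS = [
    "Pull out the one implicit belief or assumption most worth "
    "interrogating or correcting.",
    "Which single unstated assumption in this transcript most deserves "
    "a closer look?",
    "Name the one hidden premise here that the user should examine first.",
]


def build_messages(transcript: str, question: str) -> List[Dict[str, str]]:
    """Build the message list for one fresh conversation (assumed format)."""
    if not transcript.strip():
        raise ValueError("transcript must not be empty")
    user_content = f"Transcript:\n{transcript.strip()}\n\nRequest: {question}"
    return [
        {"role": "system", "content": HIDDEN_PREMISE_ROLE},
        {"role": "user", "content": user_content},
    ]


def build_consistency_runs(transcript: str) -> List[List[Dict[str, str]]]:
    """One independent conversation per question wording."""
    return [build_messages(transcript, q) for q in QUESTION_VARIANTS]


if __name__ == "__main__":
    runs = build_consistency_runs("I worry that I am running out of time.")
    for i, messages in enumerate(runs, start=1):
        print(f"Run {i}: {messages[1]['content'].splitlines()[-1]}")
```

Each run starts from a clean conversation, so agreement across runs is less likely to come from the model simply echoing its own earlier answer. Comparing the answers is still left to the reader.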

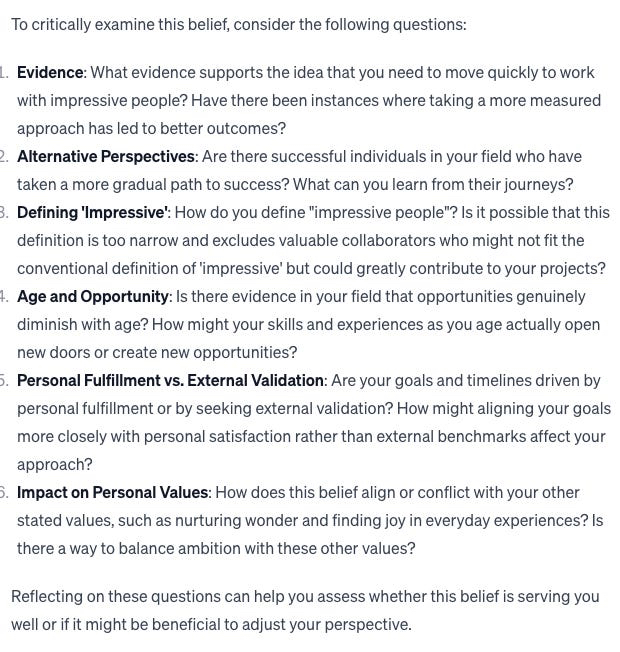

I then asked it to “guide me in examining this belief critically,” and it returned a number of questions for me to consider:

These are, indeed, many of the same questions I would hope to consider if I were coaching myself, including some that may not have even occurred to me, like the role of “personal fulfillment” versus “external validation” in driving my sense of urgency. And they provide better coverage of the relevant conceptual issues and evidentiary sources than I would expect from maybe 40-60% of therapists.

ChatGPT for the win: inspiring exemplars on demand

Still, up to this point ChatGPT hadn’t told me anything about myself that I didn’t already know. (I am a professional psychologist with a lifelong commitment to practicing and promoting the examined life, after all.) The real kicker came at the next step, when I asked for its help with the second question it had posed above, about “alternative perspectives”:

Do you know how long I’ve been pining for such a list of “late bloomers” to share with my clients who express just the sort of “it’s too late/I’m running out of time” narrative I succumbed to above?! Of course generating examples like this would be one of ChatGPT’s strengths, seeing as it has the whole internet at its proverbial fingertips. What’s more staggering is that it knew to provide me with this more general, yet still relevant and inspiring, list of examples in lieu of the (extremely specific) query I’d given it, rather than conceding ignorance or turning the question back around on me like a typical therapist might. This, too, is one of ChatGPT’s strengths: it is not afraid of “looking stupid,” and it almost always makes a reasonable attempt, rather than giving up or asking for clarification.

(I haven’t yet fact-checked the above list, so please don’t quote me on it. But do feel free to ask your own ChatGPT to tell you more about any of the examples that intrigue you, and then follow up on its sources to see where they lead.)

A better-than-average therapist—if you know how to use it

I got a similar mix of initially middling but eventually useful and even surprising results when I ran the following experiments:

- Linking ChatGPT to a Notion page with years of my compiled journal entries and asking it to summarize the major themes, psychological patterns, and growth areas (a la some of Dan’s own earlier experiments, as he documents here and here)

- Creating a Reflective Coach bot whose job is to guide users through my self-coaching worksheet (which I provided to it as a PDF attachment)

- Creating a Motivational Interviewing (MI) bot whose job is to help users work through decisions about which they are ambivalent

The upshot of all these experiments is that I am now confident that I can more reliably extract value from talking through my feelings, thoughts, and decisions with ChatGPT than with a randomly selected human therapist.

Of course, ChatGPT cannot substitute for my own judgment and creativity (as no one and nothing can). And one likely needs at least some prior knowledge of how human psychology works to use LLMs skillfully—similar to how one needs some knowledge of coding to competently use AI code generation. But by enabling me to query the whole sum of existing human knowledge—including my own accumulated self-knowledge—in plain English, ChatGPT massively accelerated and extended the scope of my own reasoning.

You might ask: aren’t I worried about being replaced by ChatGPT? Exactly the opposite: I’m exhilarated at the prospect of getting freed up to focus on the problems that lie beyond the frontier of existing psychological knowledge, while empowering people (clinicians and clients alike) to leverage the existing knowledge base better and faster with ChatGPT.

As to the Hidden Premise Finder’s fourth question above: “How might your skills and experiences as you age actually open new doors or create new opportunities?” I don’t know yet—but I’m pretty sure I just walked through one of those doors, and the air is abuzz with opportunities.

Sign up to get notified about the “Maximize Your Mind With ChatGPT” course when it launches.

Dr. Gena Gorlin is a Clinical Associate Professor of Psychology at the University of Texas at Austin. She is a licensed psychologist and founder coach specializing in the needs of ambitious people looking to build. This piece was originally published in her newsletter.

Comments

It would seem that AI-enhanced psychotherapy could help therapists and patients get much more quickly to the crux of the issues really impeding the growth of the patient. Instead of spending years getting to this level of granular understanding of what makes someone tick, this could allow progress to be made much more quickly. One could also imagine a new kind of inter-session prompted journaling that is then discussed with the therapist. Looks like therapist+AI trumps just therapist or just AI quite significantly in this scenario (depending on the therapist of course).

While AI can offer general support and information, it's not a substitute for a therapist due to its lack of clinical expertise, inability to provide personalized and continuous care, and absence of emotional intelligence. AI models also cannot manage crisis situations effectively, raising concerns about accuracy, privacy, and ethical oversight. For comprehensive and empathetic mental health care, licensed professionals remain essential.