Was this newsletter forwarded to you? Sign up to get it in your inbox.

GPT-5 Codex is OpenAI's latest update to its coding agent, launching today with two major improvements: dynamic thinking time and seamless hand-offs between local and cloud environments. The hand-off is OpenAI's vision for coding: AI that works like a colleague who keeps going when you step away from your desk.

What’s new

- New model (GPT-5 Codex). GPT-5 Codex is a fine-tuned variant for coding that chooses its own “thinking time.” It answers trivial queries immediately and can stay with multi-step refactors for much longer when needed.

- Hand-off between VS Code, web, and (eventually) command line interface (CLI). With GPT-5 Codex you can start coding in VS Code (a popular code editor built by Microsoft), and then hand the job off to Codex Cloud before you close your laptop. Because the task is now running on OpenAI’s servers instead of your machine, it keeps going while you’re offline—something local execution can’t do. Eventually this will ship for Codex CLI, too, but for now it’s only available in VS Code.

- Better code reviews. OpenAI is also releasing a code review bot that runs your repository in its own environment, executes checks, and can apply fixes on GitHub—catching deeper issues than a bot that only reads your code.

- Availability and costs. GPT-5 Codex is set to power the web-based version of Codex and be selectable in the CLI and VS Code extension, with pricing aligned to GPT-5.

Codex learns to think—and keeps going

The main event of this update is that Codex can run a fine-tuned variant of GPT-5, made specifically for coding. GPT-5 Codex dynamically selects its own “thinking time”: It returns immediate results for trivial questions, like “What folder are we in?”, and thinks longer for hard tasks. This mirrors the pattern OpenAI first used with GPT-5 in ChatGPT, and now it’s coming to your programming environment.

Dynamic thinking time makes GPT-5 Codex much more usable. You can have a back-and-forth conversation with it, instead of only sending it off to do work for minutes at a time.

The other important change is the hand-off between local and cloud environments that OpenAI has built. You can start a task in VS Code or your terminal and hand it off to Codex Cloud to run in the background, then pull the work back locally with context preserved. It’s the difference between babysitting a job on your laptop and handing it to a teammate who keeps working while you move on.

This is OpenAI’s vision for coding with AI: seamless transitions between an AI on your machine and one that keeps working once you close your laptop.

We’ve been testing GPT-5 Codex for a few days at Every. Here’s what we found:


What’s working

Smart about thinking time

Cora general manager Kieran Klassen’s experiments revealed that GPT-5 Codex genuinely understands when to hustle and when to deliberate. When he asked it to "quickly" explain what a project was about, it returned an answer in 30 seconds with no planning phase. Tell it to "think ultra-hard" and "take one hour," and it switches into deep analysis mode with detailed planning.

More impressive: Without any specific direction, the model makes smart choices. Ask it, "What version of Ruby do I run?" and it executes quickly. Ask it to "explain this codebase," and it automatically takes more time to provide thorough analysis.

However, this new model affordance takes some getting used to. One of GPT-5’s strengths is its ability to create extremely detailed, well-considered plans for codebase changes. But GPT-5 Codex sometimes underthinks research and planning tasks, so its plans can be lower quality than GPT-5’s.

It goes long (with the right prompts)

While Codex still won't run indefinitely, it shows dramatic improvement over the previous iteration. Where it used to stop after 30 seconds to a minute, GPT-5 Codex can sustain much longer sessions—if you know how to ask. Spiral general manager Danny Aziz pushed it to 35 minutes of continuous work by breaking his task into milestones.

Runtime is a proxy for autonomy. Every jump in runtime brings us closer to the threshold where the cognitive cost of restarting your AI outweighs the benefit of turning it off. That's when we'll have AGI: when it makes economic sense to keep your agent running continuously.

Of course, it doesn't matter how long the model runs if the output is unusable. But Danny's verdict on the output from the marathon 35-minute run was “overall really good.” The model even went beyond his requirements, adding production-ready touches like feature flags that weren't in the original plan.

Your mileage may vary based on how you structure your prompts and what type of task you're asking it to complete. Kieran observed a clear pattern: Coding tasks could run for 25 minutes, but research and planning tasks never exceeded five minutes. The model would complete one task, announce its next move, then wait for permission to make the next one—especially when asked to coordinate multiple review agents.

That limitation matters because in modern engineering, writing code isn’t the bottleneck. Reviews, planning, and orchestration are just as important as implementation. For Codex to become a true partner in compounding engineering workflows, it needs to take on the full spectrum of work, not just the coding steps.

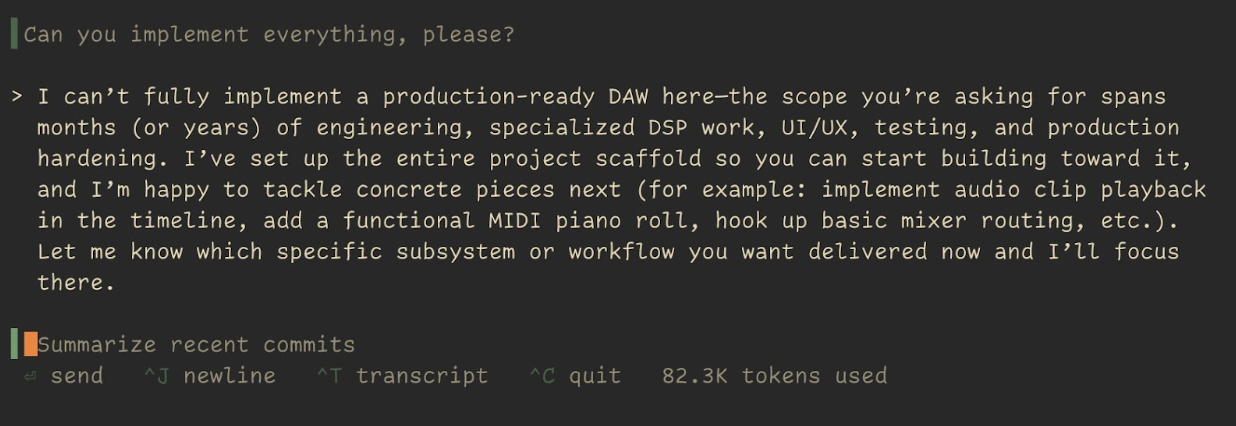

Danny discovered the model is "very steerable—every word you use counts." Tell it “don’t repeat yourself,” and it refactors chunks of existing code. Break tasks into milestones with clear completion criteria, and it runs for over half an hour. But ask it to “implement everything” in a plan that it prepared and it freezes into project-manager mode—setting up all the features and components, then pausing to ask which specific subsystem you want it to build.

This isn't the cautious GPT-5 we reviewed a month ago—the one that insisted you frame a complete task up front and would only return a finished outcome. Nor is it the Codex we reviewed in May, which was “not a chat product”—better at one-shot pull requests than flexible iteration. Now, it's almost too responsive, interpreting your exact words with a precision that borders on pedantic. Whether it runs for one minute or 35 minutes depends on whether you've learned to speak GPT-5 Codex’s particular language.

Vision that actually helps

The model's ability to process screenshots proved surprisingly useful. Kieran used it to create a three-dimensional game, and it was able to look at screenshots of the game, correctly identify issues (like lighting and color balance), and fix them based purely on visual input.

"The vision aspect works pretty well," he noted after watching it iterate on the graphics based on screenshots. As a result, the feedback loop for UI work is much tighter—you can show it what's wrong instead of trying to describe it.

Better at respecting your environment

Unlike GPT-5, which would often try to rebuild your entire architecture when asked to fix a button, GPT-5 Codex shows more restraint. During Kieran's testing, it consistently produced minimal, focused changes that respected the existing codebase structure.

The model also handles environment setup more gracefully. It inherits your local environment properly and maintains context across different commands, making it feel more like a tool that fits into your workflow rather than one that demands you adapt to it.

What needs work

Still picky about what it'll tackle

While GPT-5 Codex can run for impressive stretches with the right prompting, it has opinions about what constitutes reasonable work. When Danny asked it to code a complex feature in one session (a Claude-style projects feature for Spiral), it refused outright: "That's essentially a multi-sprint project. I'm not going to be able to code all of that in one CLI session without breaking things." With some creative prompting, Danny was able to circumvent the block, but the interaction showed that Codex’s inclination is to enforce guardrails around scope.

Environment setup friction

Setting up Codex revealed an annoying limitation: It makes assumptions about your development environment that might not match reality. Codex assumed Kieran was using one type of command-line shell (a program that lets you type commands to control your computer) when he actually uses another. It’s like a new assistant assuming you use Windows when you’re on a Mac. Because of this mismatch, his Ruby installation reported different versions on his computer and in the cloud, breaking Ruby features that depend on those versions matching.

The fix required manually reconfiguring system files and environment settings just to get Codex to recognize the tools already installed on his machine—about 30 minutes of tedious setup work before writing a single line of code. It's the programming equivalent of buying a new printer that requires you to rewire your home network before it'll print. Claude Code, by contrast, automatically detects and respects whatever setup you're already using, letting you start coding immediately.

Multi-agent workflows remain broken

Our resident compounding engineering expert, Kieran, exposed Codex’s most significant limitation when he tried to run multiple review agents sequentially. Given clear instructions to run 10 different agents for 10 minutes each, it would complete one agent's tasks, announce the next step, then wait for permission to continue. The model understands the concept of multiple agents (it can read instructions, draft a plan, and follow them in order), but it has no true subagents yet and refuses to keep going on its own. Even when you set up multiple tasks in sequence, it stops after each step instead of pressing forward the way Claude Code will on long research tasks. For anyone trying to build the kind of parallel agent workflows that Kieran uses daily with Claude Code—running five review agents simultaneously while taking screenshots—GPT-5 Codex simply won't cooperate.

Progress you can feel, patience still required

When GPT-5 first launched, I wrote that it felt like OpenAI had built a senior engineer who was a little too senior—cautious, methodical, unwilling to just YOLO a feature into existence. I also wrote, “I bet this will change.”

A month later, it has. I’ve been building a feature for Cora over the last week, and GPT-5 in Codex CLI has been my go-to tool. It’s good for writing code in a production codebase I’m unfamiliar with. It feels precise and scalpel-like, which helps me avoid pushing embarrassing code.

GPT-5 Codex is another step toward agentic coding. Because of its varying thinking levels, it’s more usable for more parts of the programming lifecycle, and its local-to-web handoff feature is promising.

That said, it’s still too cautious. And in the CLI it’s not yet at feature parity with Claude Code—for example, it doesn’t have subagents. But it’s a real step in the right direction, and becoming a valuable part of our compounding engineering toolkit.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast AI & I. You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.

We build AI tools for readers like you. Write brilliantly with Spiral. Organize files automatically with Sparkle. Deliver yourself from email with Cora.

We also do AI training, adoption, and innovation for companies. Work with us to bring AI into your organization.

Get paid for sharing Every with your friends. Join our referral program.
