In Mike Taylor’s work as an AI engineer, he’s found that many of the issues he encounters with AI tools (inconsistency, a tendency to make things up, a lack of creativity) are the same ones he struggled with when he ran a 50-person marketing agency. It’s all about giving AI models the right context to do the job, just like with humans. In the latest piece in his column Also True for Humans, about managing AIs like you’d manage people, Mike outlines the rise of New Taylorism: his thesis that management techniques for AIs and humans are converging, and that prompting belongs in the business school, not the computer science lab.

- Kate Lee

Every time you rewrite a prompt because Claude misunderstood you, you’re learning to be a better manager.

I know this because I’ve lived it from both sides. Building a 50-person marketing agency taught me more about working with AI than engineering ever did: The techniques that make AI agents reliable—clear direction, sufficient context, well-defined tasks—are identical to the techniques that make human teams effective.

But AI lets you practice without consequences. An agent won’t get annoyed if you ask it to do the same task 15 times. It won’t hold a grudge when you give unclear instructions. It won’t gossip about how disorganized you are, or get upset when things don’t work out.

The CTO of Moondream captured this dynamic in a recent tweet:

AI does not get its feelings hurt. (Courtesy of X.)

This makes AI the perfect management training ground.

Good management is a measurable economic advantage. The World Management Survey, a decade-long research project by Stanford and London School of Economics economists, found that roughly a quarter of the 30 percent productivity gap that America has over Europe comes from differences in management quality alone. Now AI is democratizing access to that advantage. Anyone who works with AI is getting a crash course in management, whether they realize it or not.

I call this convergence of AI engineering and management practices “New Taylorism,” after Frederick Winslow Taylor, the mechanical engineer who pioneered scientific management in the 1880s. He stood over factory workers with a stopwatch, timing their every movement, then redesigned their jobs into micro-tasks that could be measured, standardized, and optimized. But his attempts to make workflows even more efficient failed—because who likes to be a cog in a machine? His workers went on strike. AI, on the other hand, does not resent being asked to do the same task 50 times until you get it right.

I’ll show you the three management skills that you can learn from AI: how to give clear direction, orchestrate a team (aka agent coordination), and think strategically about what’s worth building in the first place. Now you can practice being a better manager with an AI that forgives your mistakes.

Prompting belongs in the business school

The atomic unit of working with AI is the prompt: a discrete task with clear boundaries and evaluable output. It’s the equivalent of the assignment you’d give an employee. In my work as a prompt engineer, I’ve discovered that the prompt is a gateway drug into better management techniques. Once you spend hours optimizing how you brief AIs, watching how radically small changes impact results, you realize you could do the same with your human coworkers.

Zhengdong Wang, a senior research engineer at Google DeepMind, received this advice from a consultant friend for managing “hapless” new interns: “You gotta treat them like they’re Perplexity Pro bots,” meaning give extremely clear instructions, don’t assume context, spell out exactly what you want, and check their work carefully. The same techniques that work for AI chatbots are now being applied to humans.

The five principles of prompting I developed work equally well as management techniques for humans:

- Give direction. Describe the desired style in detail, or reference a relevant persona. Whether you’re briefing Claude or a junior designer, “match the energy of Apple’s product pages—minimal, confident, lots of whitespace” is a much better instruction than “make it pop.”

- Specify format. Define the rules and required structure of the response. If you want bullet points on a deck, tell the AI.

- Provide examples. Insert a diverse set of test cases where the task was done correctly. Give the AI an example of a piece of content marketing, or a series of tweets, that worked well in the past.

- Evaluate quality. Identify errors and rate responses, testing what drives performance. Just as you give your team feedback on their work, don’t publish AI output without a plan to measure outcomes.

- Divide labor. Split complex goals into steps chained together. This is exactly how you would approach a product launch—writing down all the steps that need to happen and tracking progress on each.
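To make the five principles concrete, here is a minimal Python sketch of a task brief that applies them. Everything in it is an illustrative assumption rather than the author’s method: `Brief`, `evaluate`, and `run_chain` are hypothetical names, the keyword check is a deliberately crude stand-in for real quality evaluation, and `fake_model` stands in for whatever model (or colleague) actually does the work, so no real AI API is called.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Brief:
    """Hypothetical task brief; reads the same whether a model or a new hire gets it."""
    task: str
    direction: str       # Give direction: the style or persona to match
    output_format: str   # Specify format: the required structure of the response
    examples: list[str] = field(default_factory=list)  # Provide examples: past work that succeeded

    def render(self) -> str:
        """Assemble the brief into a single prompt string."""
        parts = [
            f"Task: {self.task}",
            f"Direction: {self.direction}",
            f"Format: {self.output_format}",
        ]
        for i, example in enumerate(self.examples, start=1):
            parts.append(f"Example {i}:\n{example}")
        return "\n\n".join(parts)


def evaluate(output: str, required_terms: list[str]) -> float:
    """Evaluate quality: a toy score equal to the share of required terms found in the output."""
    if not required_terms:
        return 1.0
    hits = sum(term.lower() in output.lower() for term in required_terms)
    return hits / len(required_terms)


def run_chain(steps: list[Callable[[str], Brief]], model: Callable[[str], str]) -> str:
    """Divide labor: each step builds its brief from the previous step's output."""
    previous = ""
    for make_brief in steps:
        previous = model(make_brief(previous).render())
    return previous


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Stand-in for a real model call (an assumption): echoes back the task line.
        return prompt.splitlines()[0].removeprefix("Task: ")

    steps = [
        lambda _: Brief(
            task="Outline a product launch post",
            direction="Match Apple's product pages: minimal, confident, lots of whitespace",
            output_format="Five bullet points",
            examples=["A past launch post that performed well"],
        ),
        lambda outline: Brief(
            task=f"Draft the post from this outline: {outline}",
            direction="Keep the voice of the outline",
            output_format="Three short paragraphs",
        ),
    ]
    draft = run_chain(steps, fake_model)
    print(draft)
    print(evaluate(draft, ["launch", "post"]))
```

The point of the structure is that nothing in `Brief` is specific to AI: the same fields (direction, format, examples) are what a good brief to a junior designer would contain, and the chain mirrors how a product launch is broken into tracked steps. In practice you would replace the keyword score with whatever measure of quality matters for the task.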

In fact, I drew from my marketing agency experience to develop these principles in 2022, pre-ChatGPT. I wanted prompting techniques that wouldn’t break every time a new AI model dropped, so I focused on what works for both biological and artificial intelligences.

Prompting is a management skill that belongs in the business school, not the computer science department. And it’s only getting easier. The technical parts of prompt engineering are being automated away, and the latest models can rewrite prompts to get better performance on a task. All humans need to do is decide what task to do, define how it should be done, and collect or annotate a number of “good” examples of that task being done.

Managers have been vibe coding forever

Yet work rarely stays as simple as one assignment or one prompt, for both humans and AI. Eventually, you’re juggling multiple tasks, passing the output of one to the input of another, deciding what to delegate. You move from writing prompts to orchestrating systems—in other words, managing the progress of many different tasks.

This is the second way in which AI teaches you management: how to run a team.

Orchestration skills are, once again, not new—managers have been vibe coding forever. “Getting good results out of a coding agent feels uncomfortably close to getting good results out of a human collaborator,” said Simon Willison, co-creator of the Django framework.

Consider a scene that plays out in every tech company: A product manager writes a description of a new feature. An engineer builds the feature. The product manager doesn’t read every line of code, but she can tell whether the button is in the wrong place, whether the flow feels clunky, and whether it solves the customer’s problem.

The skills that make someone effective at conducting multiple AI agents are the same ones that have always made someone an effective engineering manager: clear requirements, good taste, and knowing when to push back versus when to trust your team’s judgment on implementation details.

Orchestration teaches you to run a project—to see the whole board, sequence the work, and know when to intervene or let things run. Every time you take Claude’s output and feed it into the next prompt, or break a big task into smaller pieces for different agents to handle, you’re practicing the same skills you’d need to manage a human team through a complex deliverable.

The age of the idea guy

Once AI can build almost anything you ask for, the real skill becomes deciding what to build.

This is the third management skill AI forces you to develop: strategy.

Think about what makes a project succeed or fail. You need market research to make sure you’re building the right thing. You need domain expertise to understand the core problem. You have to find potential customers and sell them on your solution. You need to extrapolate from limited data points—a few customer interviews, a handful of failed experiments. You need to think multiple moves ahead, because if you can vibe code it in a day, so can your competitors.

The strategic frameworks business schools have taught for decades, such as Hamilton Helmer’s 7 Powers (which describes how companies can build lasting advantages through things like network effects) and Michael Porter’s five forces (which maps competitive pressures across industries), are even more important because they’re the only source of differentiation left now that AI can write code.

Alex Danco of venture capital firm Andreessen Horowitz recently wrote that as AI makes some parts of a job wildly more productive, the parts that can’t be automated become the bottleneck, and therefore the reason you get paid. The judgment calls, the problem-solving, and the strategic bets command a premium.

Call it the revenge of the idea guy. The appellation used to be a bit of an insult: someone with a grand vision and no follow-through. Now that AI has made execution easier, vision is more valuable. The new idea guy is someone who can identify which problems are worth solving for whom, then translate that into directions an AI can execute.

Where humans shine: The messy middle

AI is decent at proposing options for your strategy. Give Claude 1,000 pages of context—market research, competitive analysis, customer interviews—and it can synthesize a reasonable strategic direction faster than most consultants. It can also execute precise, well-defined instructions with mechanical reliability.

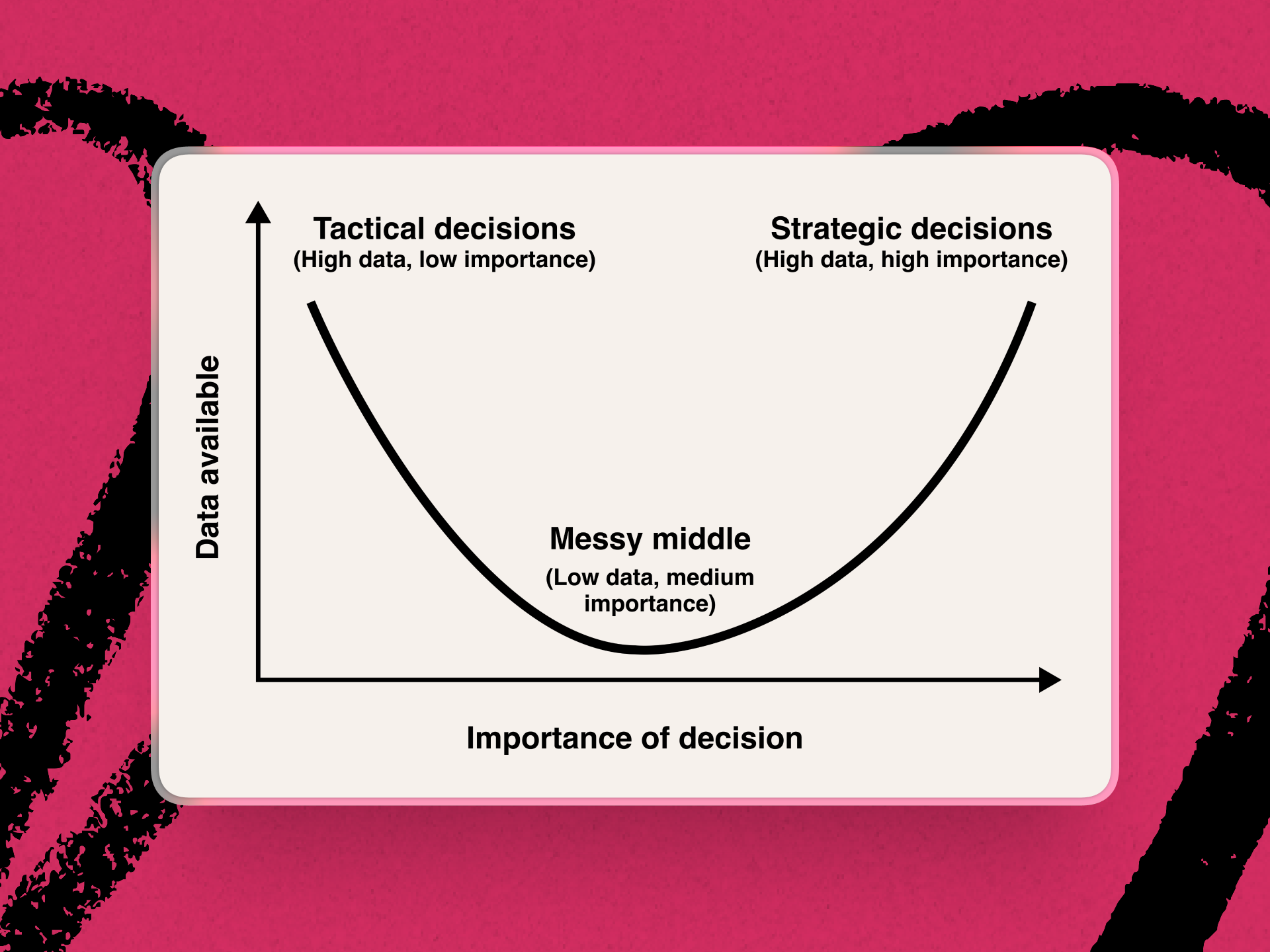

Where AI falls apart is the tactical thinking in between. I call this the NCO gap, borrowing from military structure. Officers set strategy from headquarters, and enlisted soldiers execute orders on the ground. The sergeants who bridge that gap are non-commissioned officers. They understand command intent and ground truth, and translate strategy into action under conditions of uncertainty.

Every’s Dan Shipper has written about how humans are shifting from makers to “model managers” as AI handles more execution. I’d push this further: We’re middle managers, the NCOs of today.

When I’m debugging a complex issue (diagnosing what broke, weighing the business stakes, deciding whether to patch it fast or fix it right), AI consistently fails. It can’t bridge the gap between “something’s wrong” and “here’s what to do about it.” It certainly can’t say, “If we change our approach, we can avoid this problem entirely.”

Claude Code knows how to diagnose why your app is slowing to a crawl, and once the problem is diagnosed, it knows the fix. But a human engineer who intimately knows the codebase knows where to look first. ChatGPT can research podcast guests and summarize transcripts, but nobody wants to listen to an AI interviewer. Gemini can read all your customer service documents and execute policy for a specific query, but human reviewers catch the new fraud patterns that might not match any template.

AI handles the top (synthesis) and the bottom (execution). Humans own the middle (translation under uncertainty).

Researchers saw this play out when they had Claude play Pokémon. Claude understood the overall objective (to become champion) and could execute individual moves with precision. But it got stuck in the first cave of the game, Mt. Moon, for over 48 hours. The solution required maybe seven moves, something I navigated when I was 12 years old. Claude knew where it was going and could execute any command perfectly, but it couldn’t work out the medium-level tactics that turn movement into progress.

Humans are great at this kind of extrapolation. Maybe it’s a trick you only learn as a biological intelligence, trying to survive in the real world, where you can never gather enough information but must still make a decision. We have evolved to be great at decision-making under uncertainty, so we can extrapolate from a small number of data points and intuit a good enough guess to get us on the right path.

What comes next

After years of building AI systems and, before that, building a company of humans, I’ve learned that the skills are the same. AI skills, management skills—they’re all leadership skills, and AI is giving everyone a medium to practice them.

That’s the true promise of New Taylorism—not that we can finally optimize workers like machines, which was Taylor’s dream and his failure, but that we can learn, through machines, how to lead better, and then bring those skills to the humans who need good leadership.

Most people won’t notice they’re getting a mini-MBA and becoming better managers. They’ll think they’re just getting better at AI.

This column has always been about the overlap between managing AI and managing humans, and now I want to push that idea further. In the pieces ahead, I’ll treat common AI failures as MBA case studies. We’ll go deep on the messy middle, where the real leverage lives. And we’ll document and explore this new era of management, one prompt at a time.

The messy middle is where we belong. It turns out it’s also where we learn.

Mike Taylor is a co-author of the O’Reilly-published Prompt Engineering for Generative AI and an AI engineer. You can follow him on X at @hammer_mt and on LinkedIn.

To read more essays like this, subscribe to Every, and follow us on X at @every and on LinkedIn.
