“Few new products have exploded into the American marketplace like AI chatbots. Unhindered by the recession, chatbots are racking up millions of users and are increasingly being offered by the world’s largest tech companies.”

Confession: I made up that quote. Actually, I adapted it.

Here’s the original version, from a news article written almost exactly 40 years ago (Jan. 9, 1983) about the rise of the personal computer:

“Few new products have exploded into the American marketplace like the personal computer. Unhindered by the recession or by cutbacks in government spending, personal computers are racking up billions of dollars in sales and are increasingly being sold in department stores and discount houses like so many vacuum cleaners.”

Computers were not new at that time, and neither are AI chatbots now. But sometimes there are moments in a technology’s maturation where a critical boundary is crossed, a breakthrough product is released, and the growth graphs go vertical.

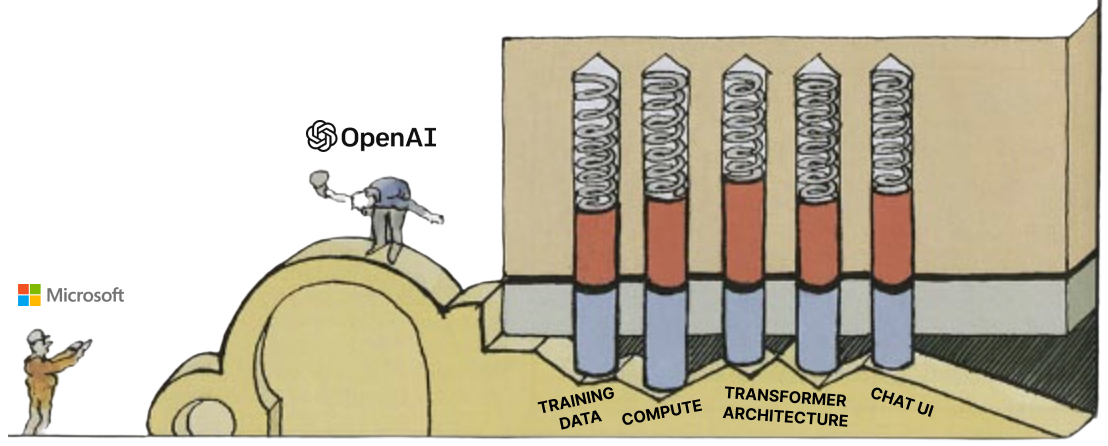

In order to cross such a boundary, multiple factors must come together at the same time. It's like opening a lock: all the pins must align to enter the next room.

(I made this meme using a graphic from the excellent book, The Way Things Work, about which I wrote previously.)

In November 2022, when ChatGPT launched, the final pin clicked into place: it proved you could package LLM technology as a relatively simple chatbot, position it as an alternative to Google, and gain faster adoption than perhaps any other consumer product in history.

Once the world realized the demand for LLM-powered chatbots was much greater than anyone (including ChatGPT creator OpenAI) expected, significant change became inevitable. Yesterday Microsoft announced it will build a ChatGPT-style experience powered by GPT-3 directly into Bing, and the day before that Google announced its own AI chatbot named Bard. Casey Newton from Platformer summed up the developments with the headline, “Microsoft kickstarts the AI arms race.”

There are obvious questions like “Are the AI’s algorithms good enough?” (probably not yet) and “What will happen to Google?” (nobody knows), but I’d like to take a step back and ask some more fundamental questions: why chat? And why now?

Most people don’t realize that the AI model powering ChatGPT is not all that new. It’s a tweaked version of a foundation model, GPT-3, that launched in June 2020. Many people have built chatbots using it before now. OpenAI even has a guide in its documentation showing exactly how you can use its APIs to make one.
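To make that concrete, here is a minimal sketch of how thin the "chat" layer over a text-completion model can be. It is not OpenAI's guide or its API: `complete` is a hypothetical stand-in for whatever function sends a prompt to a completion model and returns the generated text, and the `Human:` / `AI:` labels and the preamble are assumptions chosen for illustration.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (speaker, text) pairs, oldest first


def build_prompt(history: History, user_message: str,
                 preamble: str = "The following is a conversation with an AI assistant.") -> str:
    """Flatten the conversation so far into one prompt for a completion model."""
    lines = [preamble, ""]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"Human: {user_message}")
    lines.append("AI:")  # the model is asked to continue from here
    return "\n".join(lines)


def chat_turn(history: History, user_message: str,
              complete: Callable[[str], str]) -> str:
    """Run one turn: build the prompt, get a completion, record both sides."""
    prompt = build_prompt(history, user_message)
    reply = complete(prompt).strip()
    # A completion model may keep going and write the human's next line too,
    # so cut the reply at the first "Human:" label.
    reply = reply.split("\nHuman:")[0].strip()
    history.append(("Human", user_message))
    history.append(("AI", reply))
    return reply


def fake_complete(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, used only for this demo."""
    return " Hello! How can I help?\nHuman: thanks"


if __name__ == "__main__":
    history: History = []
    print(chat_turn(history, "Hi", fake_complete))
```

Swapping `fake_complete` for a real model call is the only change needed to turn this into a working chatbot, which is roughly why so many people were able to build one before ChatGPT existed.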

So what happened? The simple narrative is that AI got exponentially more powerful recently, so now a lot of people want to use it. That’s true if you zoom out. But if you zoom in, you start to see that something much more complex and interesting is happening.

Narratives have network effects

I remember using GPT-3 for the first time in 2020 and thinking how cool it was, but having no idea what I could use it for. It made a moderate splash in the tech zeitgeist, and a small but passionate developer community formed around it, but eventually most of us moved on. It wasn’t on an obvious trajectory to transform all software, the way it seems it is today.

When the story of the current moment is eventually told, it’ll either gloss over this part or argue that it didn’t take off two years ago because the technology wasn’t good enough yet. But I don’t think that’s true. The real problem was that the narrative was having trouble beating its own cold start problem.

In order to make AI like GPT-3 useful, developers have to believe it will be worth it to integrate it into their applications, or to build new applications around it. And for those applications to take off, users have to believe the AI will be good enough, too. But how do beliefs update?

Most people base their beliefs on the consensus of the people around them, and they’re reluctant to buy into big changes to their worldview. The bigger the belief change, the more visceral evidence and social proof are required. So narratives like, “It’s worth it to build using GPT-3” or, more broadly, “AI is capable of transforming the user experience of many categories of software” need to build social momentum in order to break through.

The best way to do so is to have a technical demo that is so good that it makes people open their minds to new possibilities and start spreading the good word. As awesome as GPT-3 is, the original demo didn’t hit hard enough to cause the cascade we’re seeing now.

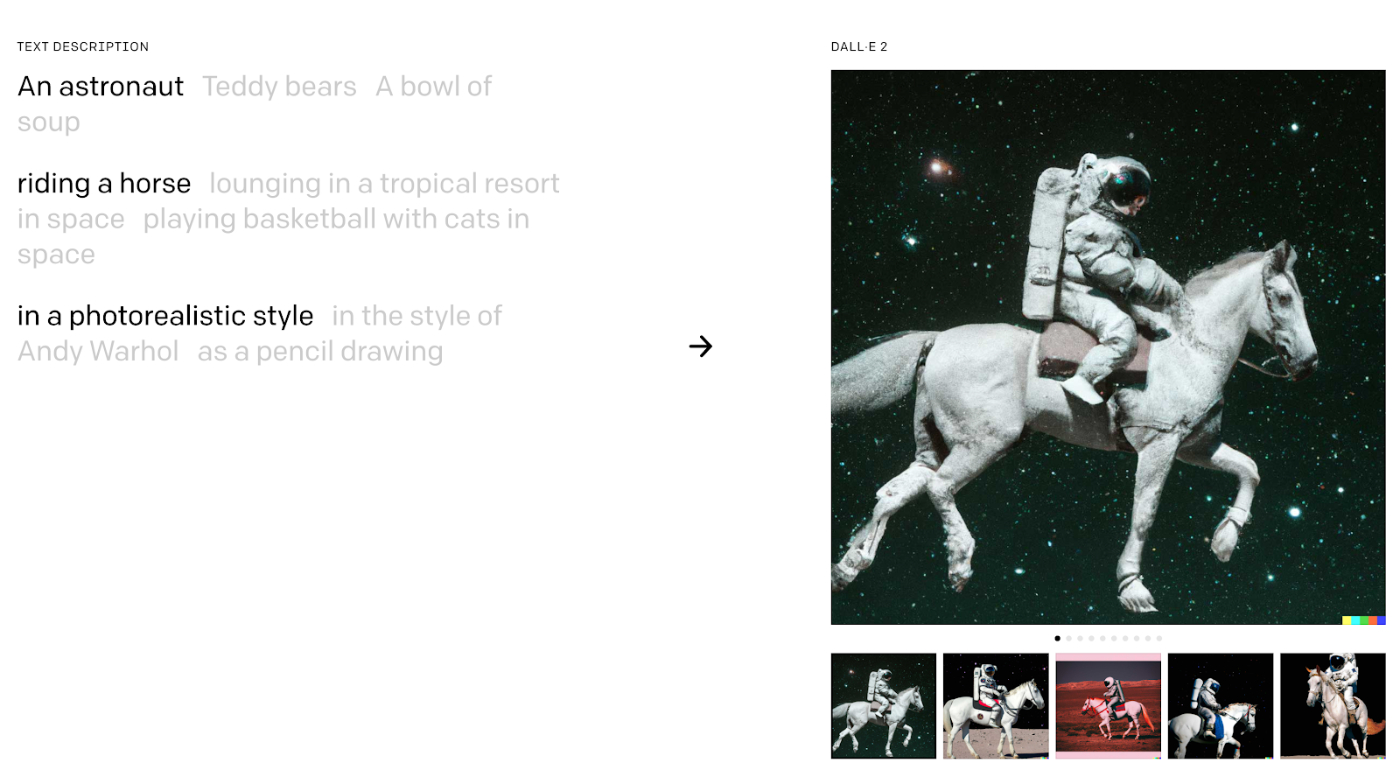

This leads me to a surprising hypothesis: perhaps the ChatGPT moment never would have happened without DALL-E 2 and Stable Diffusion happening earlier in the year!

Worth a thousand words

My first reaction to GPT-3 may have been, “Neat, but not sure how I’d use this,” but my first reaction to DALL-E 2 was more memorable; it was a true “holy cow” moment.

Many people felt similarly mind-blown. We had seen AI-generated images before, but they weren’t very good. DALL-E 2 was on a completely different level.

Images hit you on a visceral level more deeply than text. When you generate text using GPT-3, it takes cognitive effort to figure out if it makes sense or not. Once you read it, it can seem like something a regular person would say—perhaps even a bit more robotic or repetitive. The output isn’t quite on par with quality human writing—whereas with DALL-E 2 and Stable Diffusion, the output is clearly far beyond what 99% of humans are capable of creating.

Also, it was a big deal that there were two high-quality image generation models that came out around the same time. It sent a clear message: this is reproducible. There will be competition and iterative improvement, and you, as a developer, won’t get locked in by one monopolistic provider.

A prepared mind

Once we all had our minds blown by the quality images that were being generated by AI, we were prepared to believe more generally that AI could be useful and high quality.

We started reading text generated by algorithms in a new light. Meanwhile, startups like Jasper that had already been growing quickly started growing even faster. More developers started tinkering around with GPT-3 to see what it could do. I was one of them! When I launched Lex it made a bigger splash than I ever could have imagined. People noticed that these products tended to succeed, and started building their own tools using GPT-3. Investors made grand declarations that “generative AI” was the hot new category.

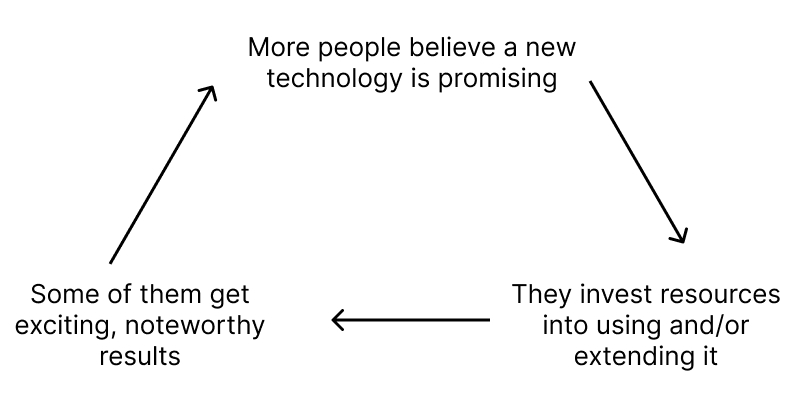

This compounding growth was driven by a simple logical loop:

The loop keeps running until there are no longer exciting results emerging from the technology.

Right now, AI developers are having a field day: it’s very easy to get good results when you launch an AI product. Partly this is because the underlying technology has so much potential that hasn’t been realized yet, but a significant part of the traction they’re getting comes from people simply being excited about AI. It won’t last. Eventually, AI will become normalized into another standard tool we use while we’re thinking about other things.

(Note that the AI hype will play out differently from crypto, which is only useful to the extent that other people want to trade it with you. With AI there are fewer capricious boom-and-bust cycles, since it has more single-player utility and higher barriers to entry for financial speculation.)

My colleague Evan over at Napkin Math went so far as to call the current hype around AI a bubble. The opening line of the piece was:

“Bubbles are when people buy too much dumb stuff because they think there is someone dumber than them they can sell said stuff to.”

This is true, but not the whole truth. Bubbles also happen when people get excited about the possibilities of an invention that isn’t good enough yet. The guy retweeting the umpteenth “GPT-powered Q&A with a set of documents” app is not doing it because he is trying to pump and dump a speculative asset. He just thinks it’s cool! In a year it won’t feel as exciting, and we will likely see a slower rate of new GPT-powered apps and side projects, but that’s OK.

This is how progress happens

When we think about the history of technological progress, we tend to imagine serious inventors creating world-changing technology like cars, electricity, and airplanes. And when we think about new technologies being deployed today, it’s easy to fall into a form of cynicism that interprets all builders as blindly chasing money, and all enthusiasts as dupes, falling for empty hype.

But the past has more in common with the present than it may seem. There were hucksters back then, too. And today’s inventions will be remembered as reverently as the past’s.

The bottom line for builders is to tune out the noise and focus on making it through to the boring part, where people are using your tool all day, rather than tweeting about it.
