This is the second in a five-part series I’m writing about redefining human creativity in the age of AI. Read my first piece about language models as text compressors, and my prequel about how LLMs work.


You can’t get energy for free. It can be neither created nor destroyed, just moved around.

For a long time, that’s more or less how computers treated text on their own. Barring a disk failure, text was always conserved, often moved around, and sometimes crudely transformed.

But they almost never created it. Other than doing a spell check, if you were seeing text on a computer, it was probably because some human, somewhere, had typed it.

Language models changed this entirely.

Now, you and I can type a few sentences into ChatGPT and watch it expand, character by character, line by line, into something new—composed out of thin air, just for you. Language models take your text and stretch it into a different shape, like glass heated and blown through a tube.

What had previously been an inert collection of bits, a line of characters extending across a screen, is now something different, something potentially alive. When you feed a piece of text to a language model, the text is like an acorn growing into a tree. The acorn itself contains the instructions for the tree it will become, and the language model supplies the rich soil, water, and warm summer sun.

In short, language models are free energy for text. Let’s talk about how we can use that capability for creative purposes.

A world where every question contains an answer

Language models create a world where every question can be expanded into an answer.

This is critical for creativity because asking questions is a uniquely human thing to do.

Apes, for example, share many human characteristics, including their social nature and a proto-culture that allows them to pass some of what they learn on to their offspring. But they are different from humans in at least one important respect: Apes don’t ask questions.

Question-asking creates room for answers, and answers create room for more questions. This is the first step to getting new ideas and building new things.

Over the centuries, answers that already exist have become steadily easier to find. Books can answer questions, as long as you have the wherewithal to search through them. Google brought us even closer: many of our most common questions became just a search query away.

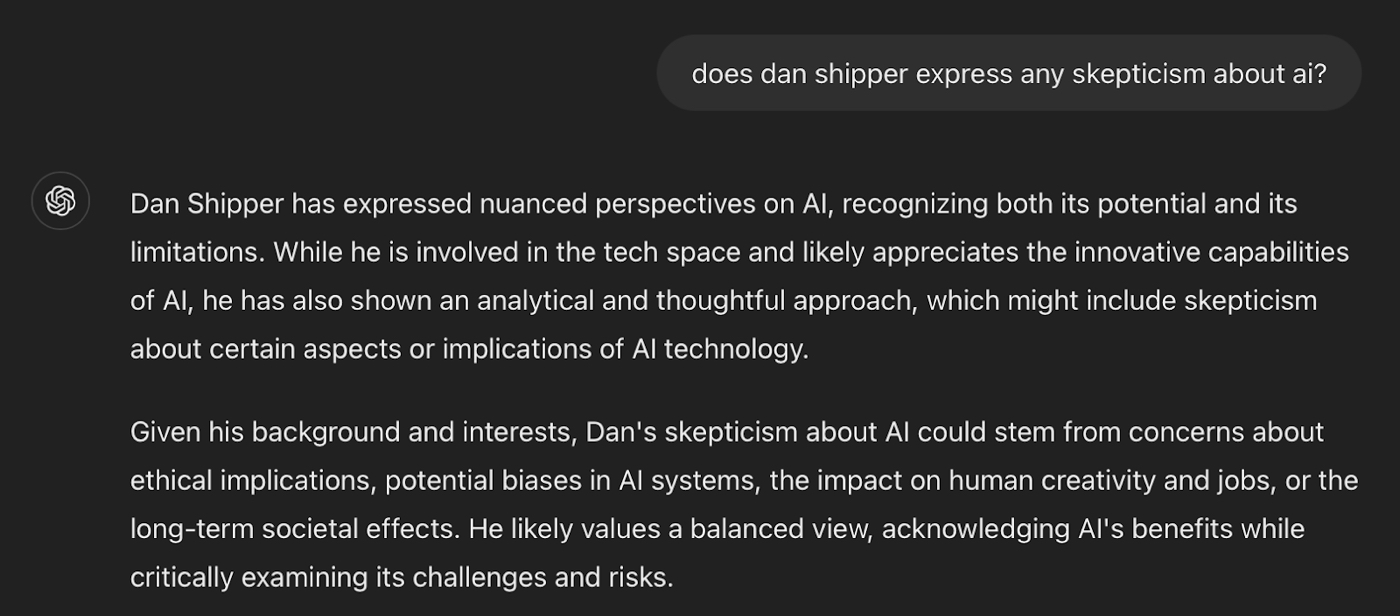

But some answers have remained stubbornly unGoogleable. Google can only surface answers to questions that have already been asked and answered. For example, try Googling “does Dan Shipper express any skepticism about AI?” I bet you’ll have a hard time finding a succinct answer.

Language models love questions like this, though:

All screenshots courtesy of the author.

They expand any question into an answer. Because language models always predict what comes next in a sequence, the question itself points to the beginning of its answer.

Anyone who spends time around children and listens to their ceaseless questions will understand why this matters so much. In the past, a question implied a quest to find an answer. Today, questions already contain their answers; all they need is expansion through a language model.

Let’s talk about some of the most useful kinds of expansions.

Language models as comprehensive expanders

If you want to get a comprehensive understanding of any broad domain of human knowledge, language models can help. Comprehensive expansions from language models are a lot like your own personal Wikipedia written in real time about whatever topic you care most about.

“Tell me about the history of kings in the Roman Empire.”

“What do I do if I find a tick on my arm?”

“What are the top strategies for pricing negotiations with enterprise customers?”

Comprehensive expansions are high-level explainers: not tailored to any particular audience, but pitched at everyone.

These questions could be answered, with varying speed and quality, before ChatGPT existed. But I would argue that ChatGPT and other AI models are far better resources, for two reasons. First, they’re fast. Second, you can ask follow-up questions.
