This is the first of a five-part series I'm writing about redefining human creativity in the age of AI.

I want to help save our idea of human creativity.

Artificial intelligence can write, illustrate, design, code, and much more. But rather than eliminating the need for human creativity, these new powers can help us redefine and expand it.

We need to do a technological dissection of language models, defining what they can do well—and what they can’t. By doing so, we can isolate our own role in the creative process.

If we can do that, we’ll be able to wield language models for creative work—and still call it creativity.

To start, let’s talk about what language models can do.

The psychology and behavior of language models

The current generation of language models is called transformers, and in order to understand what they do, we need to take that word seriously. What kind of transformations can transformers do?

Mathematically, language models are recursive next-token predictors. They are given a sequence of text and predict the next bit of text in the sequence. This process runs over and over in a loop, building upon its previous outputs self-referentially until it reaches a stopping point. It’s sort of like a snowball rolling downhill and picking up more and more snow along the way.
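To make the loop concrete, here is a minimal sketch of recursive next-token prediction. It is a toy, not how a transformer works internally: the `BIGRAMS` table, `predict_next`, and `generate` are all hypothetical names invented for illustration, and a real model predicts from a learned probability distribution over the whole sequence rather than a hand-written lookup on the last token.

```python
import random

# Hypothetical toy "model": for each token, the tokens allowed to follow it.
# A real language model learns a probability distribution instead.
BIGRAMS = {
    "<start>": ["the"],
    "the": ["wizard", "shadow"],
    "wizard": ["chases"],
    "chases": ["the"],
    "shadow": ["<end>"],
}


def predict_next(tokens, rng):
    """Pick a next token given the sequence so far (this toy only looks at the last token)."""
    return rng.choice(BIGRAMS[tokens[-1]])


def generate(max_tokens=20, seed=0):
    """Run the predict-append loop until a stop token or the length limit is reached."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    while len(tokens) < max_tokens + 1:
        next_token = predict_next(tokens, rng)
        if next_token == "<end>":  # the stopping point
            break
        tokens.append(next_token)  # the output becomes part of the next input
    return tokens[1:]  # drop the <start> marker


print(" ".join(generate()))
```

The key line is `tokens.append(next_token)`: each prediction is fed back in as input for the next one, which is the snowball picking up snow.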

But this question is best asked at a higher level than simply mathematical possibility. Instead, what are the inputs and outputs we observe from today’s language models? And what can we infer about how they think?

In essence, we need to study LLMs’ behavior and psychology, rather than their biology and physics.

This is a sketch based on experience. It’s a framework I’ve built for the purposes of doing great creative work with AI.

A framework for what language models do

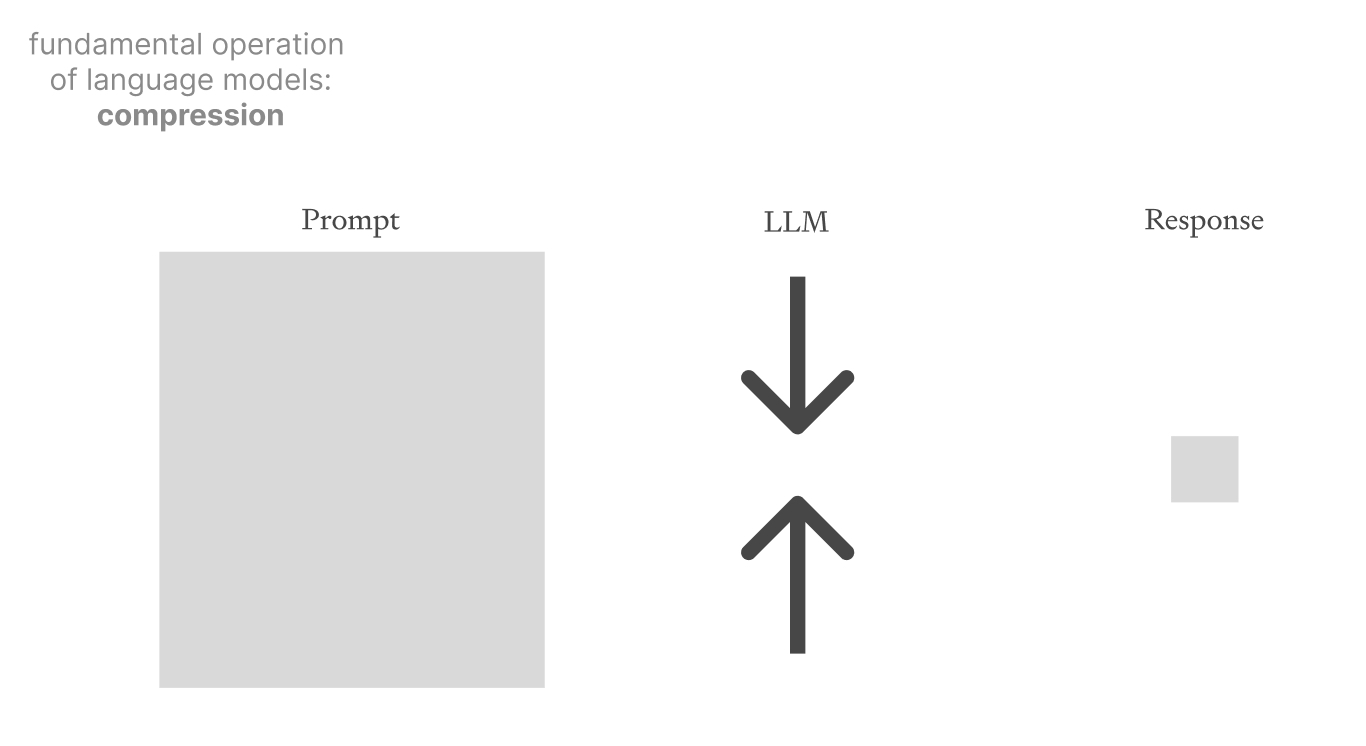

Language models transform text in the following ways:

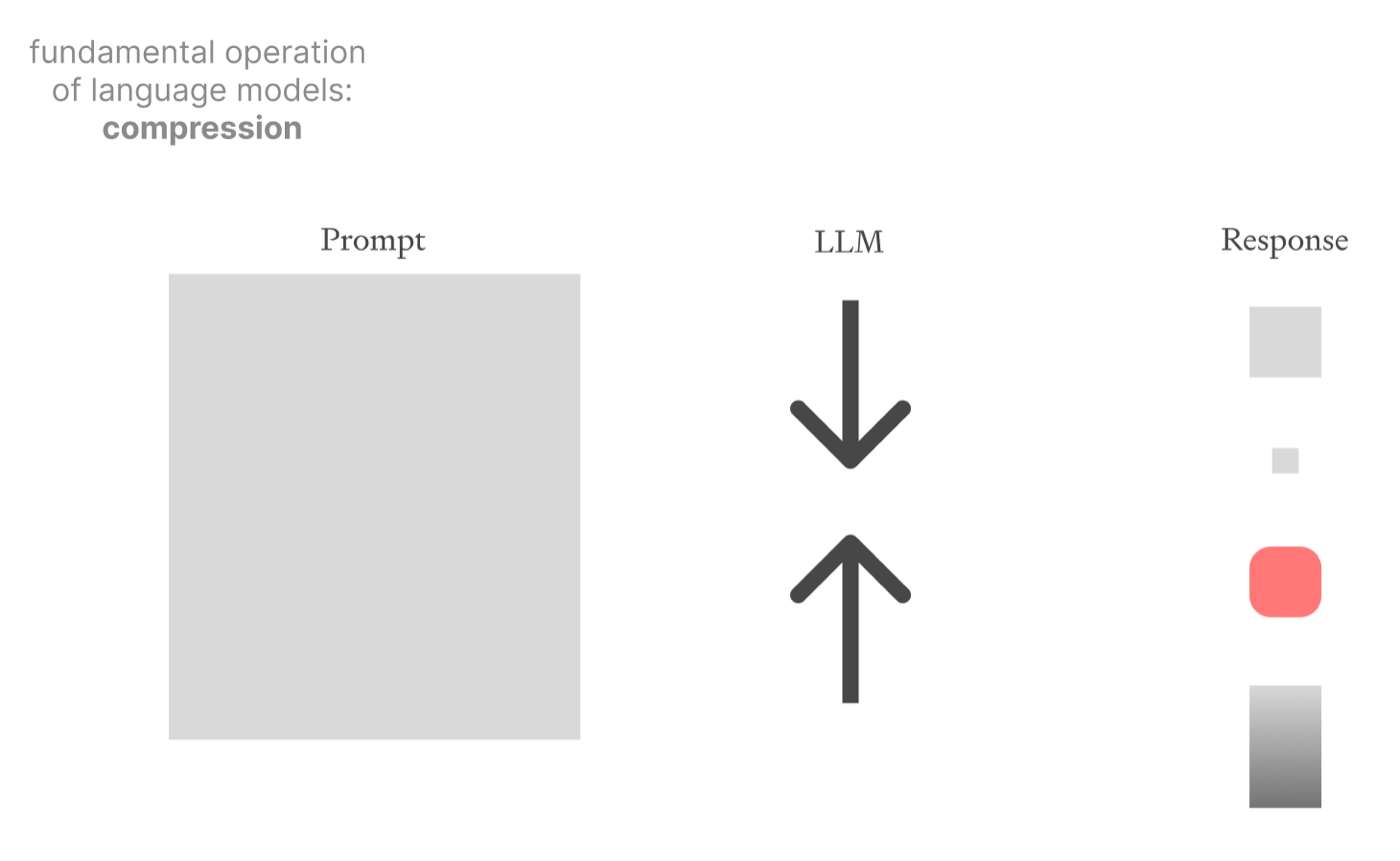

- Compression: They compress a big prompt into a short response.

- Expansion: They expand a short prompt into a long response.

- Translation: They convert a prompt in one form into a response in another form.

These are manifestations of their outward behavior. From there, we can infer a property of their psychology—the underlying thinking process that creates their behavior:

- Remixing: They mix two or more texts (or learned representations of texts) together and interpolate between them.

I’m going to break down these elements in successive parts of this series over the next few weeks. None of these answers are final, so consider this a public exploration that’s open for critique. Today, I want to talk to you about the first operation: compression.

Language models as compressors

Language models can take any piece of text and make it smaller:

Source: All images courtesy of the author.

This might seem simple, but, in fact, it’s a marvel. Language models can take a big chunk of text and smush it down like a foot crushing a can of Coke. Except it doesn’t come out crushed—it comes out as a perfectly packaged and proportional mini-Coke. And it’s even drinkable! This is a Willy Wonka-esque magic trick, without the Oompa Loompas.

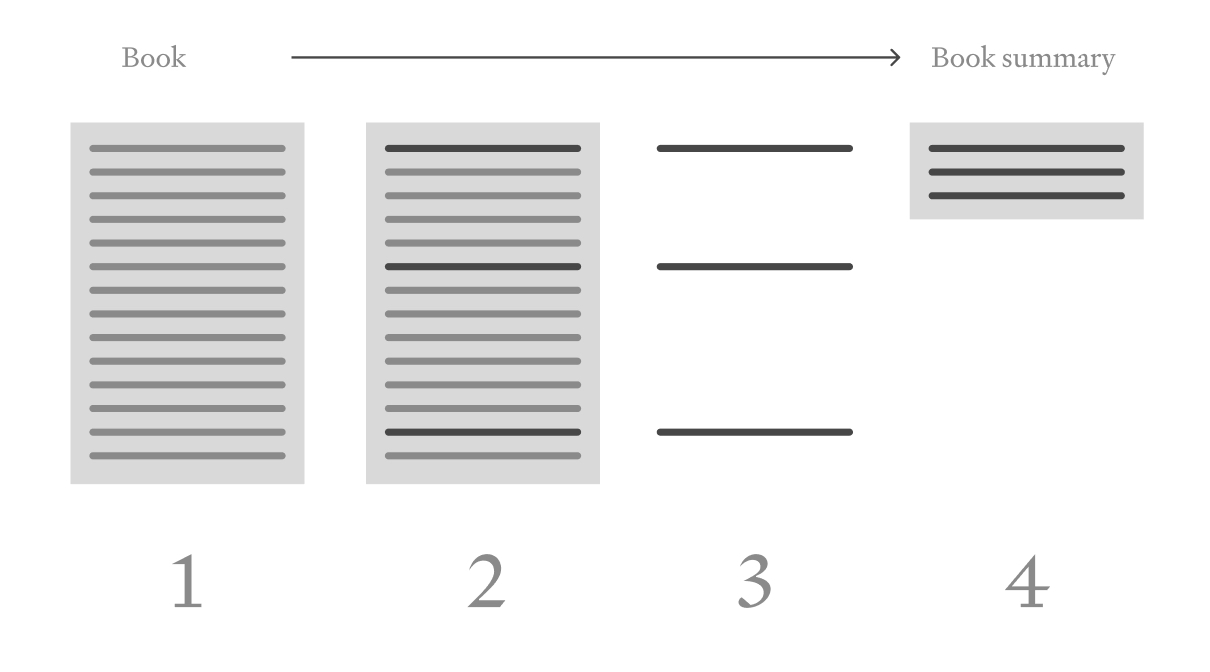

Language model compression comes in many different flavors. A common one is what I’ll call comprehensive compression, or summarization.

Language models are comprehensive compressors

Humans comprehensively compress things all the time—it’s called summarization. Language models are good at it in the same way a fifth grader summarizes a children’s novel for a book report, or the app Blinkist summarizes nonfiction books for busy professionals.

This kind of summarizing is intended to take a source text, pick out the ideas that explain its main points for a general reader, and reconstitute those into a compressed form for faster consumption:

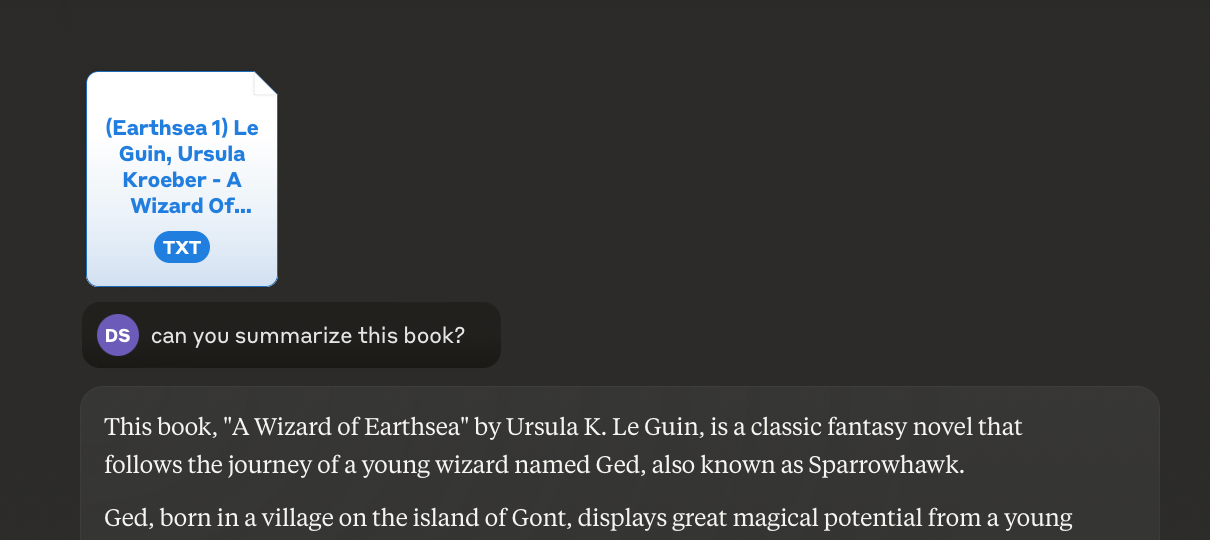

These summaries are intended to be both comprehensive (they note all the main ideas) and helpful for the average reader (they express the main ideas at a high level with little background knowledge assumed).

In the same way, a language model like Anthropic’s Claude, given the text of the Ursula K. Le Guin classic A Wizard of Earthsea, will easily output a comprehensive summary of the book’s main plot points:

But comprehensive compression isn’t the only thing language models can do. You can compress text without being comprehensive—which creates room for totally different forms of compression.

Language models are engaging compressors

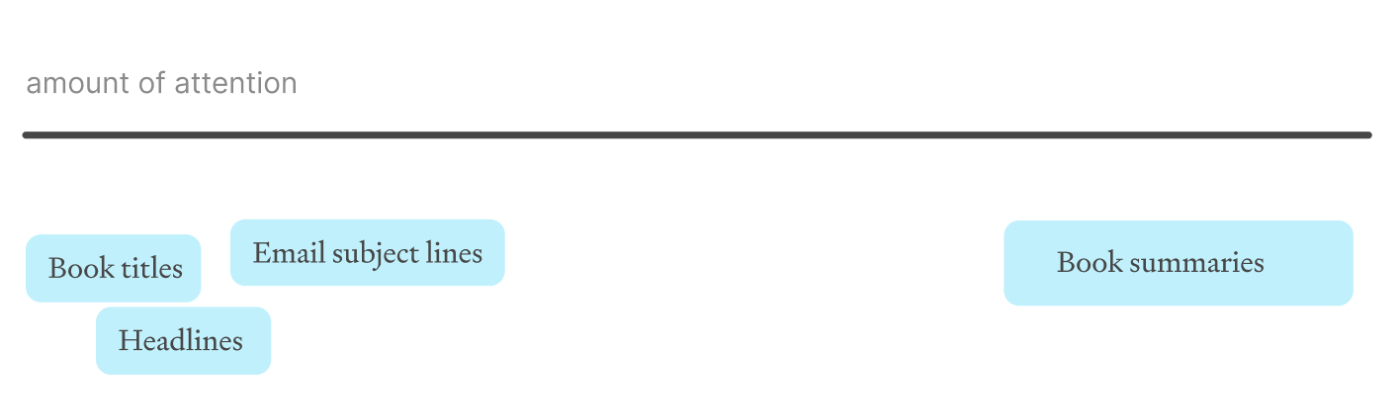

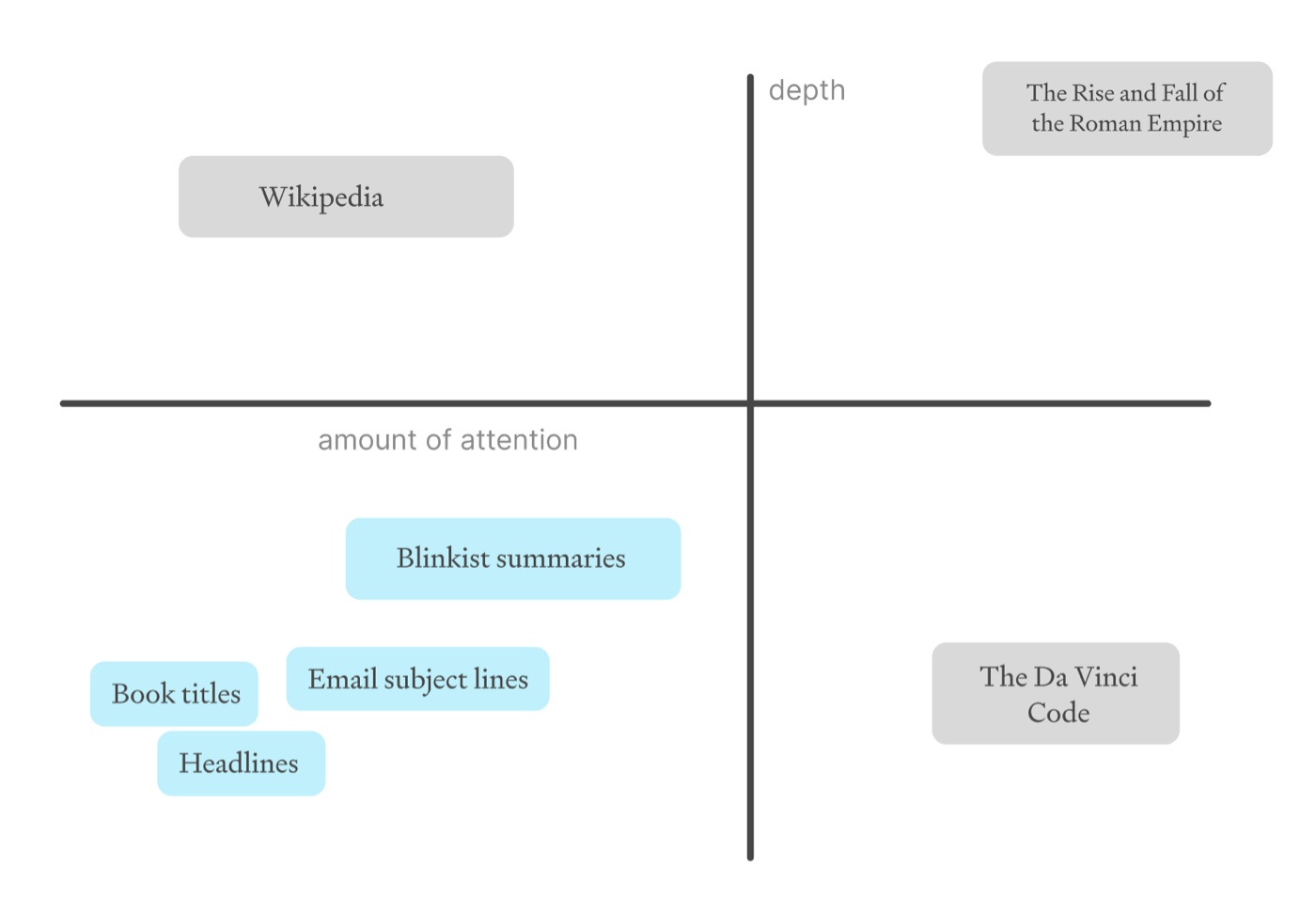

If we require our compression to be interesting instead of comprehensive, compressions look less like book reports and more like email subject lines, article headlines, book titles, and subtitles. If we graphed them by how much attention is required to consume them, it would look like this:

Through this lens, book titles are just as much a compression as a kid’s book report; they just prioritize different requirements for what the compression is trying to capture. Language models excel at this type of compression, too.

For example, at Every we use an internal AI tool to help us turn the content of our stories into headlines. It’s specifically aimed at interestingness, as opposed to comprehensiveness. When I fed it the text of A Wizard of Earthsea, it suggested these titles:

- The Shadow's Name

- The Warrior Mage's Shadow Quest

- The Shadow Defeated

These are pretty good! But language model compression doesn’t stop at just these two dimensions of compression.

Language models compress over many different dimensions

There are many dimensions along which compression can be run. Here are some examples of headlines, all written by Claude:

- Clickbaiting: "You Won’t Believe What This Young Wizard Discovered on a Shadow Isle!"

- Intrigue: "Secrets of the Shadowy Mage Isle"

- Vibe-y: "chill wizard explores shady island, finds self"

- Alliteration: "The Wizard’s Winding, Wondrous Wanderings"

- Snark: "Oh, Another 'Chosen One' Wizard Story Set on an Island, How Original"

- Paranoia: "They’re Watching: A Wizard’s Harrowing Shadow Odyssey"

- Pessimism: "The Gloomy Account of a Doomed Mage on Hopeless Shadow Rock"

- Confusion: "Wait, What's Happening? A Wizard Goes Somewhere Shadowy, I Think?"

- Absurdist: "Schrödinger's Wizard Simultaneously Visits and Doesn't Visit Shadow Isle"

- Ironic detachment: "I Guess This Wizard Goes to Some Dumb Island or Whatever"

- Gaslighting: "There Is No Shadow Isle, You're Just Imagining Things"

When we started talking about compression, we began with this graphic:

If we wanted to revise it with our expanded idea of compression along many different dimensions, we might do something like this:

Language model compression doesn’t just turn a large gray rectangle into a small gray rectangle. It can turn it into a tiny rectangle, or a red rectangle with rounded corners, or an elongated rectangle with a gradient fill.

Each of the outputs of compression carries some family resemblance to the original input. In other words, there’s always some overlap between the prompt and response, but the exact overlap (and the areas of difference) depends on the type of compression you’re running.

The dimensions of compression can also be arbitrarily specific and combined together. Here are some example prompts:

- A tweet in the style of Dan Shipper but with more snark and paranoia

- Analysis in the style of George Saunders but make it gaslight-y

- A book summary in the style of a Scott Alexander book review, but make it concise

For example, using the last prompt as a jumping-off point, here’s a concise book summary of A Wizard of Earthsea that Claude created in the style of Scott Alexander from Slate Star Codex:

“A Wizard of Earthsea is about the dangers of power without wisdom.

The young wizard Ged, in a fit of youthful pride and anger, summons a shadow creature from the realm of the dead. This goes about as well as you would expect. The shadow hunts Ged across the world and he must flee from it, until finally his old master tells him that he can't run away from his own shadow. Ged realizes that the only way out is through—he has to turn around, chase down the shadow, and reintegrate it into himself.

This is a pretty standard "power corrupts" story, but it's unusually wise in depicting Ged's flaw not as a desire for power itself, but as a lack of education in how to use power. Ged isn't evil, he's just young and dumb.

His eventual victory comes from understanding how the universe works, not from overwhelming firepower. The real treasure was the wisdom he gained along the way.

Le Guin once said her books were about ‘the journey that is inward, and not the other journey.’

A Wizard of Earthsea literalizes this, as Ged sails further and further away from the known lands in pursuit of his shadow, only to realize he has to integrate it back into himself. We all have to take this journey eventually. The shadow represents the parts of ourselves we try to deny and push away. But there's no escape—in the end, the only way to grow is to acknowledge your shadow, accept it, and become whole.”

I don’t know if this is exactly what Scott Alexander would write about A Wizard of Earthsea, but it’s certainly in the neighborhood. There are a few things it gets right. The first line is a concise, engaging summary of the book’s main point, which Alexander does better than almost anyone. And the line, “This is a pretty standard ‘power corrupts’ story,” feels very much like his voice.
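One way to picture how dimensions combine is as slots in a prompt template. The sketch below is purely illustrative: `build_compression_prompt` and its parameters are hypothetical names, not part of any tool mentioned here, and the resulting string would still need to be sent to a language model of your choice.

```python
def build_compression_prompt(source_text, output_format, style=None, modifiers=()):
    """Assemble a plain-text prompt from a format, an optional style, and extra dimensions.

    All names and wording here are illustrative assumptions.
    """
    instruction = f"Write {output_format}"
    if style:
        instruction += f" in the style of {style}"
    if modifiers:
        instruction += ", but make it " + " and ".join(modifiers)
    return f"{instruction}.\n\nSource text:\n{source_text}"


prompt = build_compression_prompt(
    source_text="(full text of the book goes here)",
    output_format="a book summary",
    style="a Scott Alexander book review",
    modifiers=["concise"],
)
print(prompt)
```

Swapping the `style` or adding entries to `modifiers` (say, "snarky" and "paranoid") changes the dimension of compression without touching the source text.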

So we’ve made some progress. Our concept of language models as compressors now includes the idea that they “compress” text across an arbitrary number of dimensions or requirements.

When is that useful in creative work?

Why compression is useful

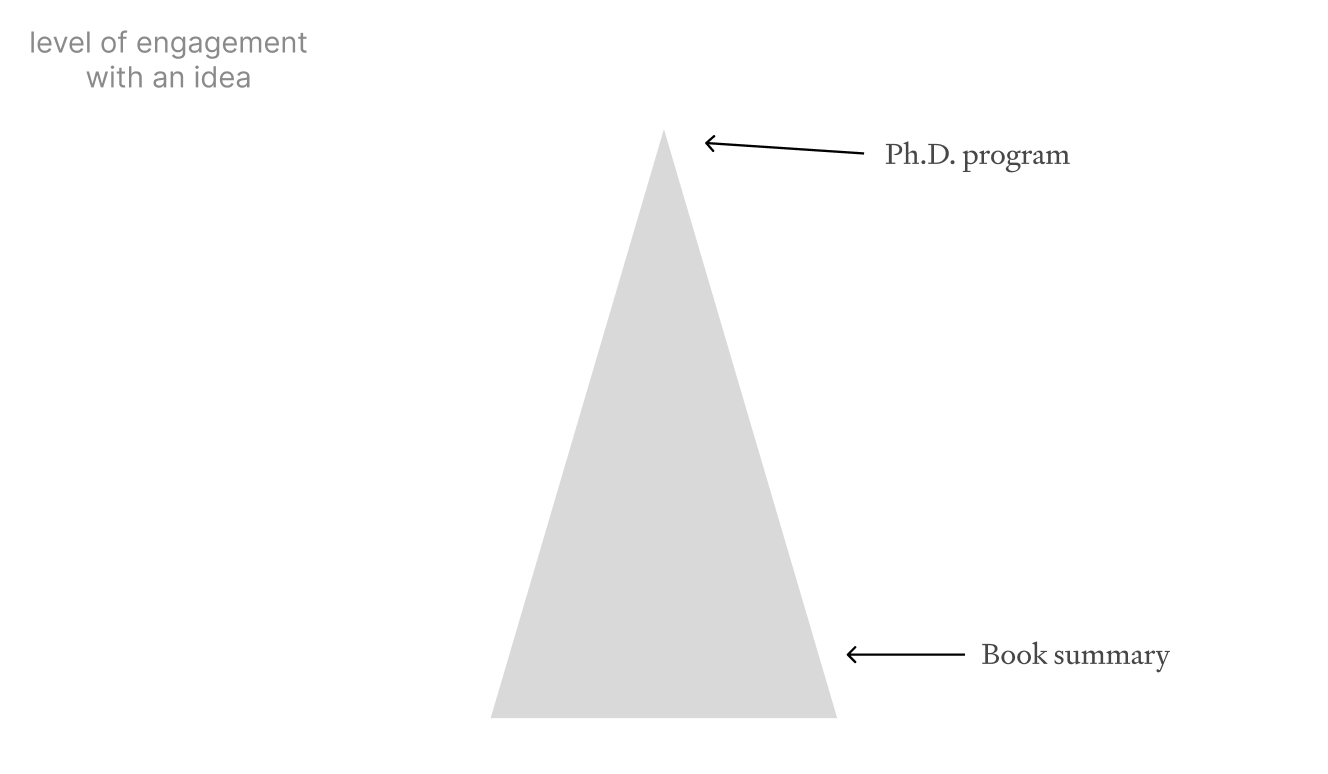

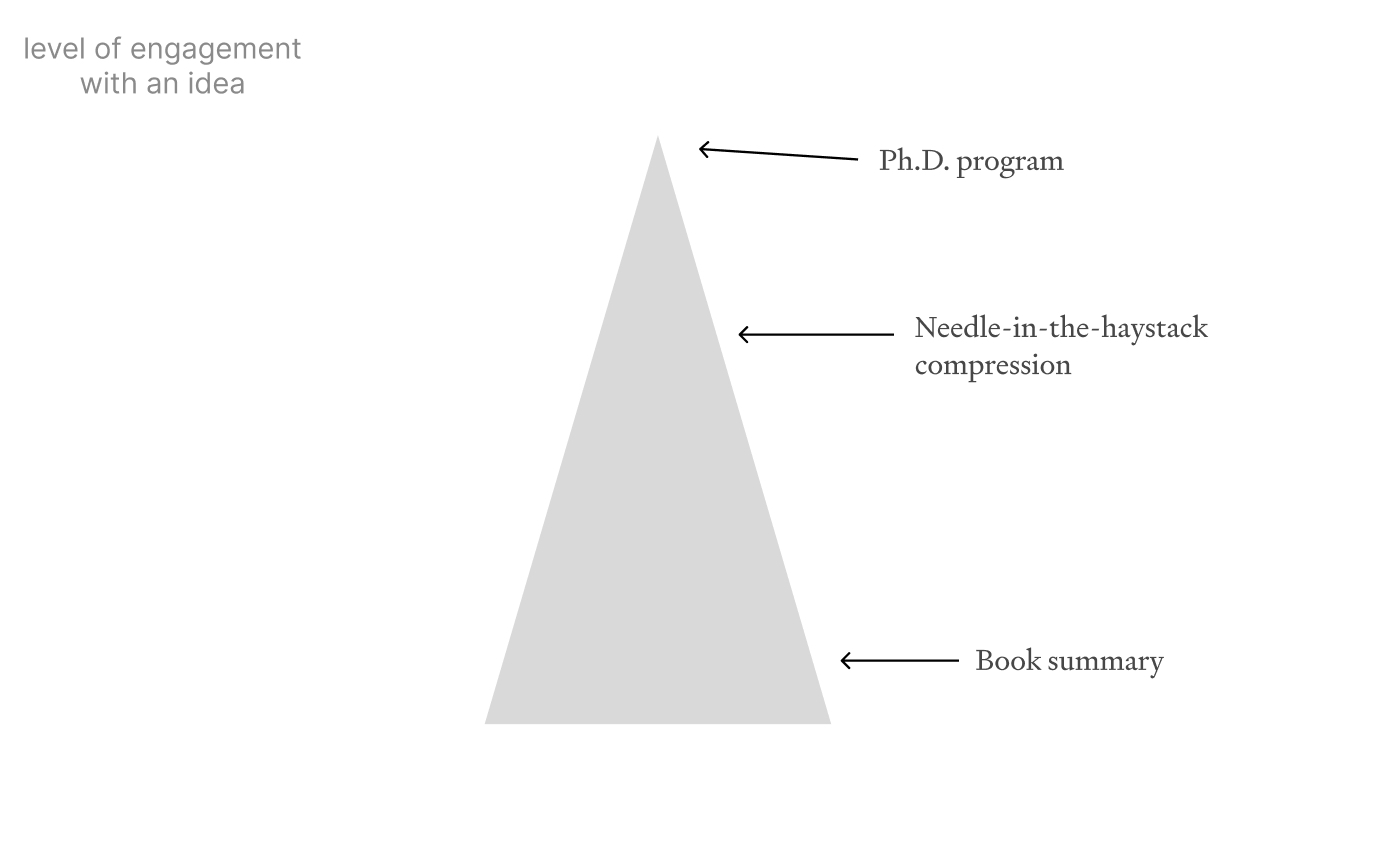

Let’s start with comprehensive compressions like book reports. If we graphed them by the amount of depth they convey and attention they require, they would probably be in the bottom-left quadrant of the graph—little depth, little attention required:

Things that exist in this bottom-left quadrant have a pejorative connotation because they require a low level of engagement with a complex idea:

Comprehensive compressions are the McDonald’s french fries of the intellectual landscape: ubiquitous, tasty, cheap, and low status.

But this kind of summary is an important output of creative work because it respects the fundamentally limited attention of human beings. Humans need to roam widely over the intellectual landscape before they go deep, and this kind of summary allows us to sample ideas that might be useful without a lot of investment. In that way, it’s like a mini-advertisement for the idea it contains—like a blooming flower is an advertisement for nectar.

The trouble is that doing compressions like this is, generally, drudgery. Anyone who has had to write a summary of their essay for an X post, or has needed to spend a few hours rereading a complex topic so that they could summarize it in a few sentences, will know what I mean.

But language models are good at compression that sits more at the bottom of the funnel of engagement with ideas, too. They help you go deeper into the nuances of an idea instead of just skimming the surface:

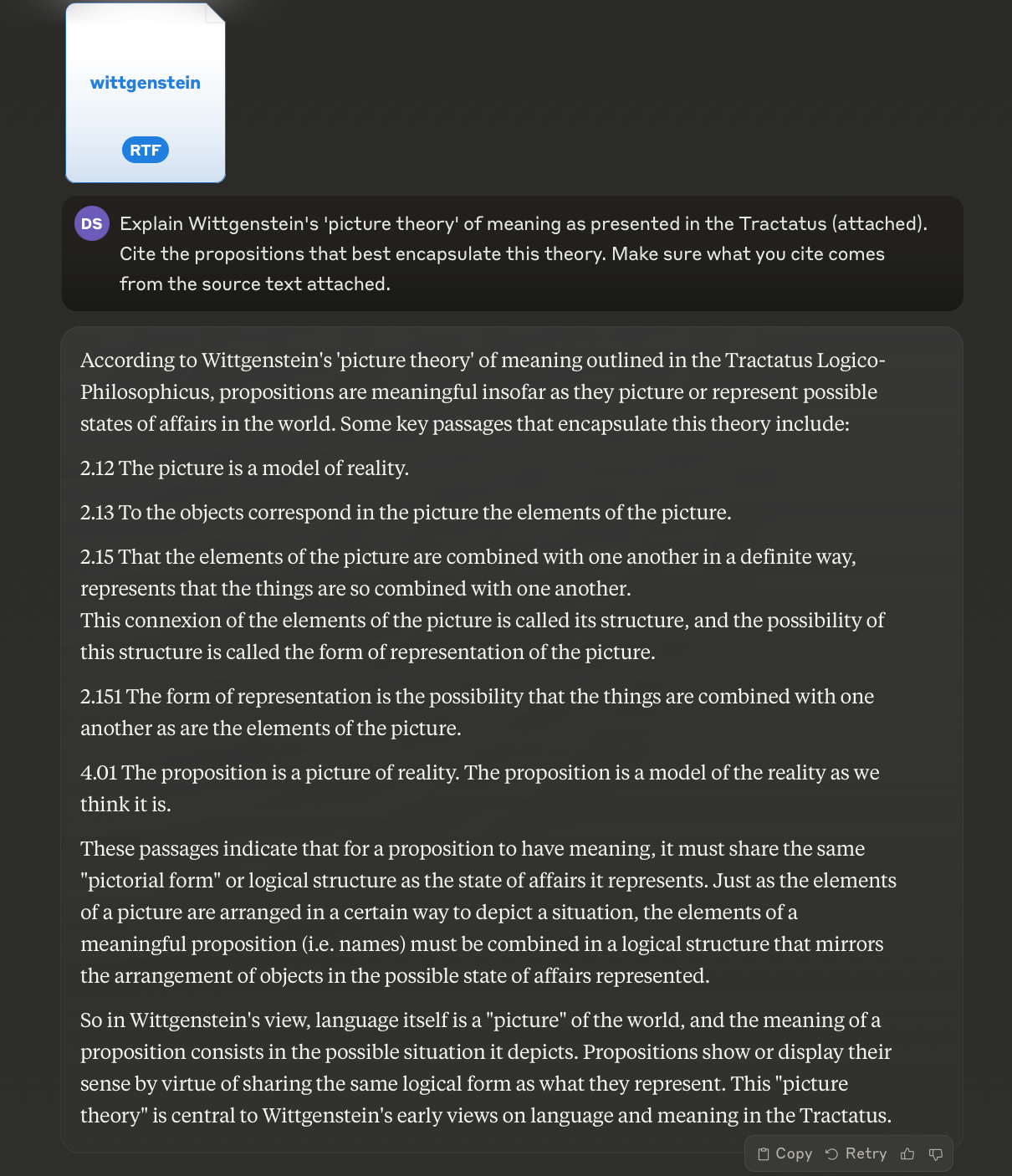

In a needle-in-the-haystack compression, they can find the answer to a difficult question in a long piece of text. For example, I’ve long been a fan of Ludwig Wittgenstein’s philosophical thinking, but his writing is incredibly dense. I’ve fed one of his books into Claude and gotten answers to specific questions—which is a compression of the book into a form that’s usable for me:

Instead of puzzling over the text, I can think up new ideas and create new pieces of writing that were previously impossible. There’s too much information to consume, and it’s too complicated for me to understand without this kind of support.

Once you start to look at things this way, you’ll see compression everywhere. Emails are often compressions of what people said in meetings. Poems are compressions of sensory experiences. Good decisions are compressions of the results of previous decisions. Basic programming is often a compression of Stack Overflow answers.

This view of the world will help you see a significant chunk of the situations in which language models can be useful for creative work.

As the cognitive scientist Alison Gopnik has written, language models are cultural technologies. They give us the best of what humanity knows about any topic—in the right form for any given context. In that way, language models are an extension of a trend that started with writing itself and has extended to the printing press, the internet, and finally our present moment with AI.

This is a superpower for creatives:

Knowledge can make its way to you—wherever you are, whenever you need it, compressed in just the right format for you to use.

What do you want to make with it?

—

Next week, we’ll talk about language models’ next operation:

Expansion.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast How Do You Use ChatGPT? You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.

