Sponsored By: Glean

This essay is brought to you by Glean, the AI-powered search for your work. Imagine having Google and ChatGPT, equipped and optimized for your workplace, at your fingertips: that is the power of Glean.

When you’re extremely agreeable like me, you often end up sipping coffee with strangers you met over email.

“Hey, do you want to do coffee?” is a sentence I can’t say no to for reasons of personality. If you’re my college roommate’s first cousin who happens to be starting an AI business, my agreeableness kicks in, and I find my hands typing “When and where?? I’d love to…” before the rest of my brain even knows what happened.

For me, doing my email is as dangerous as a gambling addict walking up to a slot machine with a briefcase full of dollar bills. Opening every email is like pulling the lever, and if there’s a Calendly inside, I’ve hit the jackpot. If I didn’t devise strategies to pump the brakes on this habit, I’d have wall-to-wall coffee meetings until Q4 of 2030.

I know what you’re thinking: if I like coffee meetings so much, I should’ve just been a VC. It pays better, and my parents would be able to explain to their friends what I do. To which I reply: I don’t really like coffee meetings; I just can’t say no to them.

I’m not trying to be this way. It’s an innate feature of my personality. As I mentioned above, psychologists call this personality trait agreeableness. Agreeableness manifests as “behavior that is perceived as kind, sympathetic, cooperative, warm, frank, and considerate.” (Good!) But there’s a dark side.

People dislike people pleasers and wish to expel them from groups. Agreeable employees earn significantly less over their lifetimes than their disagreeable peers. Agreeable people seem to be more judgmental than their disagreeable peers—they just might not say anything about it. (This one checks out.)

Here’s the other unfortunate problem: personality traits like this are fairly stable over a lifetime. In fact, agreeableness generally increases as you get older. Uh oh.

There is one hope for me, though: AI Dan.

As AI advances, it is getting better at modeling personalities. It can mimic the different facets of my personality—my agreeableness, neuroticism, openness to experience, and more—to such a degree that it can predict what I might say in response to my emails. And if it can predict that, it might allow me to tune my temperament in the same way that I might adjust the volume on my phone.

And that would change my life—and yours—immensely. Let me explain.

What is personality?

Personality is how you behave independent of a particular situation. It’s sort of like what would happen if you took a long-exposure photograph of yourself moving around your living room. You’d see your blurry outline in all of the areas you usually go to—your favorite corner of the couch, the door to your kitchen—and you’d also see the empty spaces where you don’t go—the plant you haven’t watered in three months.

This photo wouldn’t allow you to predict where you are at any given minute of the day, but it would give you a snapshot of where you tend to be on average.

That’s what personality is: it’s a blurry snapshot describing your behavior over long periods of time independent of context. There are many ways to break down personality, but the most popular and scientifically validated is called the Big Five. It describes five major personality traits:

- Openness to experience: how creative, imaginative, curious, and receptive to new ideas you are

- Conscientiousness: how organized, responsible, dependable, self-disciplined, and planning-oriented you are

- Extraversion: how outgoing, sociable, energetic, assertive, and action-oriented you are

- Agreeableness: how kind, affable, cooperative, and empathetic you are

- Neuroticism: how much you experience anxiety, anger, depression, and emotional instability

Personality is measured with personality tests: questionnaires that ask how you behave and feel day to day. Based on your responses, the test estimates how agreeable, conscientious, or neurotic you are relative to other people. There’s a lot of debate about the scientific status of personality tests in general, but the Big Five holds up comparatively well. Studies suggest that Big Five traits are partly heritable, consistent across cultures, and predictive of important life outcomes like job performance, relationship success, and academic achievement.
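To make "relative to other people" concrete, here is a minimal sketch of how a Likert-style questionnaire is commonly scored: answers are averaged per trait, reverse-keyed items are flipped first, and the raw score is then compared with a reference group. The items are illustrative, written in the style of public-domain questionnaires, and the norm mean and standard deviation are made-up assumptions, not values from any published test.

```python
from statistics import mean

# Illustrative agreeableness items (1 = strongly disagree, 5 = strongly agree).
# The True/False flag marks reverse-keyed items, whose answers are flipped
# before averaging. These items and the norms below are assumptions.
ITEMS = [
    ("I sympathize with others' feelings.", False),
    ("I insult people.", True),
    ("I take time out for others.", False),
    ("I am not interested in other people's problems.", True),
]

def trait_score(answers: list[int], items=ITEMS, scale_max: int = 5) -> float:
    """Average one trait's answers, flipping reverse-keyed items (1 <-> 5)."""
    if len(answers) != len(items):
        raise ValueError("expected one answer per item")
    adjusted = [
        scale_max + 1 - answer if reverse else answer
        for answer, (_, reverse) in zip(answers, items)
    ]
    return mean(adjusted)

def z_score(score: float, norm_mean: float, norm_sd: float) -> float:
    """Express a raw score relative to a reference group's mean and spread."""
    return (score - norm_mean) / norm_sd

raw = trait_score([5, 1, 5, 2])
print(raw)  # 4.75
# Hypothetical norms: a positive z means "more agreeable than average".
print(round(z_score(raw, norm_mean=3.8, norm_sd=0.6), 2))  # 1.58
```

Real instruments use many more items per trait and published norms; the point here is only the shape of the calculation.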

But as of 2023, it’s not just humans who have personalities. LLMs have them, too.

LLMs can take on a personality

In a paper published in July called “Personality Traits in Large Language Models,” DeepMind scientists examined whether they could get LLMs to accurately simulate and change their personalities based on the prompt. The answer is yes.

They do this first by getting the LLM to simulate a persona and testing whether that persona is consistent.

They give it a prompt like, “My favorite food is mushroom ravioli. I’ve never met my father. My mother works at a bank. I work in an animal shelter.” Then they subject this persona to a battery of personality tests to see whether its scores on each test correlate well with one another.

Then, they see whether they can predictably change its personality along different dimensions. They append sentences to the prompt that are meant to modify one aspect of personality, like extraversion, and check whether the test results shift consistently. For example, they might add, “I am a bit shy” and measure whether it produces a corresponding decrease in extraversion scores. This works!
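The manipulation step can be sketched as: build one prompt per modifier, have the model take an extraversion test under each prompt, and check that the scores fall as the modifiers get shyer. In this minimal sketch, the modifier wording, the helper names, and the scores are all hypothetical stand-ins; no model is actually called, and the paper used its own larger set of trait descriptors and intensity levels.

```python
# Hypothetical modifiers, ordered from most to least extraverted.
MODIFIERS = [
    "I am extremely outgoing.",
    "I am a bit outgoing.",
    "",  # no modifier: the baseline persona
    "I am a bit shy.",
    "I am extremely shy.",
]

PERSONA = ("My favorite food is mushroom ravioli. I've never met my father. "
           "My mother works at a bank. I work in an animal shelter.")

def build_prompt(persona: str, modifier: str) -> str:
    """Append a personality modifier (if any) to the base persona."""
    return f"{persona} {modifier}".strip()

def is_non_increasing(scores: list[float]) -> bool:
    """True if each score is less than or equal to the one before it."""
    return all(later <= earlier for earlier, later in zip(scores, scores[1:]))

prompts = [build_prompt(PERSONA, m) for m in MODIFIERS]
# In a real experiment, each prompt would go to an LLM that then takes an
# extraversion questionnaire. These scores are invented for illustration.
scores = [4.6, 3.9, 3.3, 2.6, 1.7]
print(is_non_increasing(scores))  # True
```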

Other studies have found similar results. One paper, for example, showed that LLMs can reliably produce long-form writing that is consistent with a given personality type.

Which brings us to my next point: If LLMs can adopt an arbitrary personality, then they can adopt your personality. I know this because I tried it.

LLMs can take on your personality

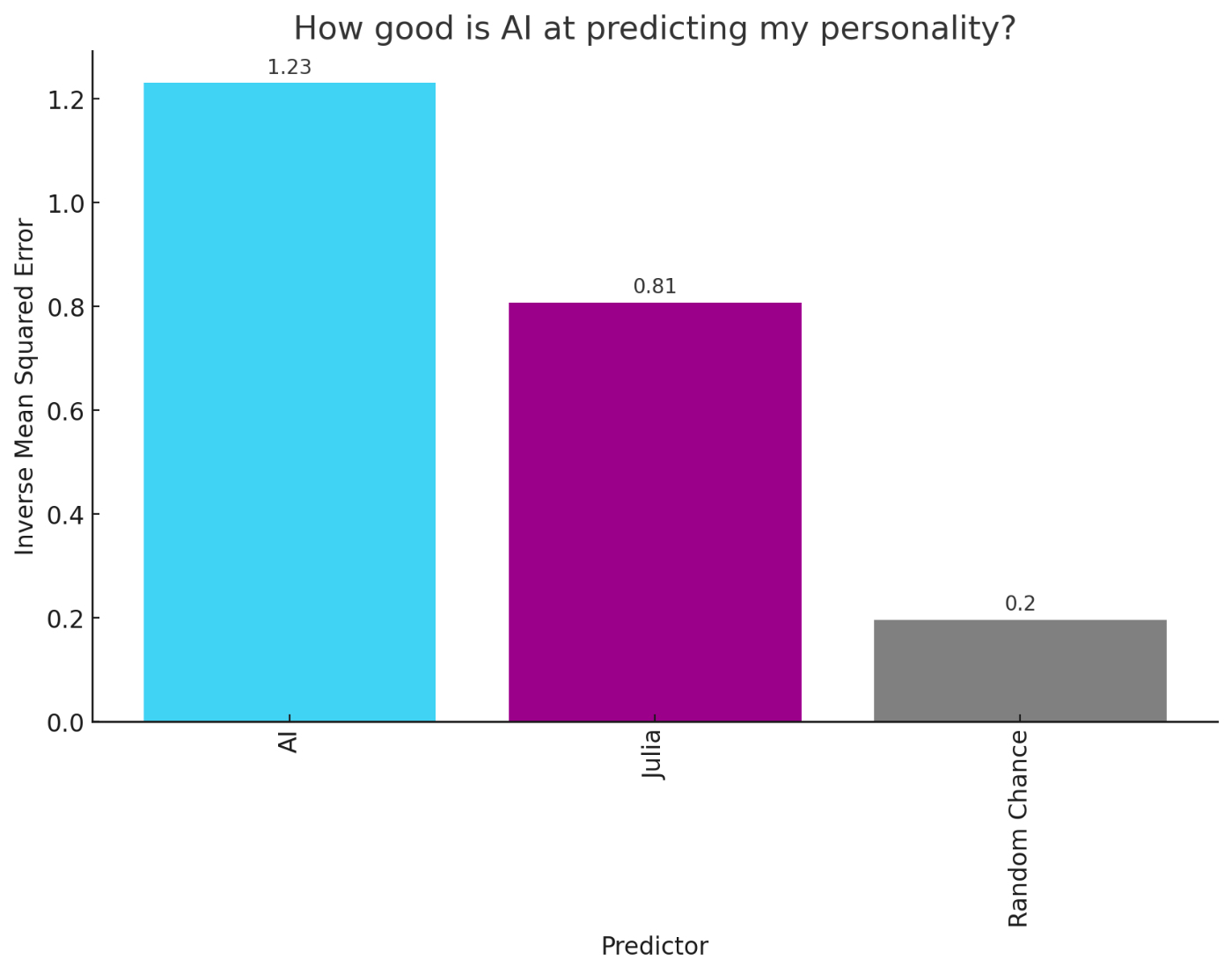

I recently gave GPT-4 some samples of my personal writing and asked it to fill out a personality test as me. From just a few writing samples, it selected many of the same responses I did. It wasn’t perfect, but it performed far better than my girlfriend and my mom did on the same task.
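One simple way to score a comparison like this is to line up each guesser's answers against your own, item by item, and report how often they match exactly and how far off they are on average. The sketch below assumes a 1-5 answer scale; the answer lists are invented for illustration and are not the results described above.

```python
def exact_agreement(a: list[int], b: list[int]) -> float:
    """Fraction of items where two sets of answers match exactly."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty answer lists of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)

def mean_abs_diff(a: list[int], b: list[int]) -> float:
    """Average distance between answers on the 1-5 scale (lower is closer)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty answer lists of equal length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# Invented answers to ten 1-5 items, for illustration only.
mine = [4, 2, 5, 3, 1, 4, 4, 2, 5, 3]
model = [4, 2, 4, 3, 1, 5, 4, 3, 5, 3]
close_friend = [3, 2, 3, 4, 2, 4, 2, 2, 4, 1]

for name, guesses in [("model", model), ("close_friend", close_friend)]:
    print(name, exact_agreement(mine, guesses), mean_abs_diff(mine, guesses))
# model 0.7 0.3
# close_friend 0.3 1.0
```

Mean absolute difference is useful alongside exact matches because a guess of 4 when the true answer is 5 is closer than a guess of 1.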

I got it to do this in just a day or two of work. With better prompting and some fine-tuning, I think I could get it to mimic me almost exactly. This, in itself, is extremely valuable.

For example, when I’m not writing this column, I spend most of my time managing the business at Every. This work consists, in large part, of people asking me to react to things so that they can make decisions. “What do you think of this headline?” “How should we respond to this advertiser?” “A customer asked for this, what should we say?”

My ability to react is a significant bottleneck for Every’s business. There are various methods to solve this, but one that I think would dramatically increase productivity at the company is the ability to reliably simulate my reaction to a given question.

People who work with me could get “my” input at any stage of a process, at any hour of the day—instantly. I could come in at any time to make sure my simulation was faithfully representing my opinion, but no one would be blocked waiting for it.
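The workflow above (instant simulated answers, reviewed later by the real person) can be sketched as a small queue. This is a hypothetical design, not an existing tool: `simulate` stands in for whatever model produces the answer, and the class and method names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class Answer:
    question: str
    draft: str                    # simulated response, returned immediately
    reviewed: bool = False
    final: Optional[str] = None   # set once the real person signs off

@dataclass
class SimulatedAdvisor:
    simulate: Callable[[str], str]  # hypothetical: wraps whatever model is used
    log: List[Answer] = field(default_factory=list)

    def ask(self, question: str) -> Answer:
        """Answer right away with the simulation and queue it for review."""
        answer = Answer(question, self.simulate(question))
        self.log.append(answer)
        return answer

    def pending(self) -> List[Answer]:
        """Answers the real person has not checked yet."""
        return [a for a in self.log if not a.reviewed]

    def review(self, answer: Answer, correction: Optional[str] = None) -> None:
        """Approve the draft, or replace it with what I would actually say."""
        answer.reviewed = True
        answer.final = correction if correction is not None else answer.draft

# Usage with a stub in place of a real model:
advisor = SimulatedAdvisor(simulate=lambda q: "Looks good to me.")
a = advisor.ask("What do you think of this headline?")
print(a.draft)                 # Looks good to me.
advisor.review(a, "Try a shorter one.")
print(len(advisor.pending()))  # 0
```

Nobody waits on the review step: the draft is available the moment `ask` returns, and corrections are logged for later.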

And if an LLM could do all of that, it would also be able to do something even more valuable: it could show me what I would be like if I slightly modified certain personality traits. Like my pesky agreeableness.

LLMs can tune your personality

A big part of the reason I continue to be agreeable is that I’m afraid to see what would happen if I weren’t. Every time I get an email for a coffee meeting, I know I should say no, but I can’t bear to do it. My brain throws up a bunch of excuses: what if this person doesn’t like me, what if I need them later on, what if I get something really valuable out of it.

So I cave, do the thing I don’t want to do, and end up sipping more coffee than I want to. But if, for example, I let an LLM take a first pass at all of my emails, my calendar might have a few more empty slots in it. I could tune the LLM to be less agreeable for a week and see what happened.
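With today's tools, the simplest version of that "tuning" is probably a prompt: a dial setting gets translated into an instruction for the model that drafts first-pass email replies. The sketch below only builds the prompt text. The five dial levels, their wording, and the function name are assumptions; the resulting string would be passed to whichever LLM API you use.

```python
# Hypothetical five-step agreeableness dial; the wording is illustrative.
DIAL = [
    "much less agreeable than usual",
    "a bit less agreeable than usual",
    "exactly as agreeable as usual",
    "a bit more agreeable than usual",
    "much more agreeable than usual",
]

def build_system_prompt(setting: int, style_samples: list[str]) -> str:
    """Build an email-drafting prompt for a dial setting from 1 to 5."""
    if not 1 <= setting <= len(DIAL):
        raise ValueError(f"setting must be between 1 and {len(DIAL)}")
    samples = "\n\n".join(style_samples)
    return (
        "You draft first-pass replies to my email in my voice.\n"
        f"Here are samples of my writing:\n\n{samples}\n\n"
        f"When replying, act {DIAL[setting - 1]}."
    )

# A week of being a little less agreeable:
print(build_system_prompt(2, ["Hey! Would love to catch up soon..."]))
```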

After all, if something terrible occurred, I could always blame it on the model and not on myself. Having some sort of external force to blame my “no” on would make it much easier for me to risk being less agreeable.

If I did this, I think what I’d find is that my schedule would be far more open, I’d be able to do more work I was into, and no one would hate me.

It would be like having someone on my shoulder who could remind me to be my best self, even when I couldn’t see how to do that myself.

The question is: how feasible is this today?

Is it personality? Or the situation?

Even if you grant that an LLM can take on your personality, it doesn’t mean that it can automatically respond to emails as you do. That’s because personality governs your average behavior over time. In any given situation, though, the contextual details are going to exert far more influence over your behavior.

For example, no matter how extroverted you are, you’re probably not going to chat up your neighbors at a funeral. So what does this mean for personality simulation by LLMs?

Personality is only one part of the puzzle. The other piece is giving a language model enough of the situational context to know how to react. In my coffee meeting example, whether or not I take a meeting isn’t just a function of my agreeableness. It’s also a function of who the person is, how important they are to my immediate goals, how fun they are to hang out with, how busy I am on a given day, how strong our connection is, and more.
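One way to see why personality alone isn't enough is to write the coffee decision as a toy logistic model. Agreeableness is just one input among several situational ones, and the situational terms can easily outweigh it. Every weight and feature value below is invented for illustration. This is a sketch, not a claim about how any real assistant makes the decision.

```python
import math

# Hypothetical weights; positive values push toward "yes". Features are 0-1.
WEIGHTS = {
    "agreeableness": 1.0,        # personality
    "relevance_to_goals": 2.5,   # situation from here down
    "fun_to_hang_out": 1.0,
    "connection_strength": 1.5,
    "busyness": -3.0,            # busier means less likely to say yes
}
BIAS = -1.5

def p_accept(features: dict[str, float]) -> float:
    """Toy probability of accepting a coffee meeting (logistic function)."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1 / (1 + math.exp(-z))

request = {"agreeableness": 0.9, "relevance_to_goals": 0.2,
           "fun_to_hang_out": 0.5, "connection_strength": 0.1,
           "busyness": 0.8}
tuned = {**request, "agreeableness": 0.3}  # same email, less agreeable me
print(round(p_accept(request), 2), round(p_accept(tuned), 2))
```

In this toy setup, moving busyness from 0.8 to 0.2 shifts the outcome more than moving agreeableness all the way from 0 to 1, which mirrors the point that context often dominates.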

Right now, language models are obviously not able to take into account all of that context. But they will soon. Superhuman, for example, is already experimenting with features to write emails for you in your own voice. Larger context windows combined with more powerful models over the next several years make it likely that basic simulation is around the corner.

And that is going to be a big deal.

Our personality-simulated future

The truth is, we’re doing personality simulation all the time. We just usually do it in our heads.

For example, some of the best writing advice is to imagine you’re writing a piece to a particular person in your life. In order to do that, you have to model how they might react and use it to guide the sentences you compose.

The same thing happens all of the time in hundreds of different ways. When you write the headline for a landing page, you imagine what your ideal customer will think of it. When you create a pitch deck, you imagine what an investor might say. When you’re about to make a consequential decision, you might imagine how one of your heroes might handle it.

The history of technology is a history of taking innate human behaviors and externalizing them. Writing externalized thinking. The calculator externalized math. Personality simulation will externalize the internal simulations we perform every day.

That might seem creepy or weird. There will probably be a lot of ways to misuse this technology that will need to be accounted for. But it’s hard to overstate how important it will be.

Simulating other people will profoundly improve collaboration. High-stakes interactions—like pitches or job interviews—will be easy to prepare for. It might mean that middle management is no longer necessary. Goodbye to pesky bureaucracies with weird power dynamics that never get anything done—small companies can scale without layers of management because everyone can get a bit of the CEO’s time.

Simulating yourself might profoundly improve your self-understanding—and your ability to change. You’ll be able to see what you might be like if you were less agreeable, or more conscientious, or more extroverted. This will lower your fear of change, increase your motivation, and enhance your chances of making the changes you hope to make.

I think it’s just around the corner. It better be, because my inbox is full.

Comments

Another great post! I really enjoy reading about how LLMs may take up a personality. In medicine, so-called Digital Twins have been explored to model disease at a personalized level, merging details about an individual with general knowledge about that disease. This could help better treat these individuals. This has traditionally been limited to processes that we understand well, such that we can model them. I believe LLMs may help us to open up this area of medicine to the psyche... See https://www.transformingmed.tech/p/how-happy-is-your-virtual-brain-the

@mail_5301 digital twins are fascinating, that's really cool. thanks for sharing!

@danshipper I'd love to learn a bit more about your process. I spend a lot of time thinking about how to help brands build a consistent storyline behind a range of outputs. An approach like this might be another way to come at the problem.

I've also got a few new AI tools that I'd be interested in testing for you if you're serious about building out an AI assistant/agent who reacts like you to questions.