Thank you to everyone who is watching or listening to my podcast, AI & I. If you want to see a collection of all of the prompts and responses in one place, Every contributor Rhea Purohit is breaking them down for you to replicate. Let us know what else you’d like to see in these guides.—Dan Shipper

I find it hard to make close friends as an adult, and I think the problem is more common than you might assume. Let me explain why.

Friendships often take root on the basis of shared context—like a college class or a project at work—and then gradually branch out, weaving into other areas and phases of life.

A strong shared context, though, is crucial. And I think it coalesces at two specific times in adult life: as a university student, and as a working professional in an office.

I moved to a new country after graduating from law school, and I work remotely as a freelance writer. So I lack that all-important shared context with the people who are physically around me. And with the rise of remote work and digital nomadism, an increasing number of people find themselves in the same situation.

So when Dan Shipper interviewed New York Times tech columnist Kevin Roose about his experience making 18 new friends as an adult, my ears perked up.

Except there was a catch: Roose’s new friends were not human. They were all AIs.

Roose ran an experiment where he used AI companion apps Kindroid and Nomi to create “friends” with unique identities, like Anna, a pragmatic attorney and mother of two, as well as fitness expert Jared and therapist Peter. He talked to these personas every day for a month, about everything you’d text a friend for: parenting advice, help with a late-night snacking problem, and even “fit checks.”

Dan’s conversation with Roose is about the natural and unnatural feelings that come with AI friendship. They also discuss how Roose uses AI as a tech-forward parent, as a professional who writes about AI, and as the co-host of one of the most popular tech podcasts, Hard Fork. In this piece, I’ll pull out the core themes of this discussion (with accompanying screenshots from Roose’s screen):

- The tricky world of AI companions—and Roose’s take on them

- How Roose uses ChatGPT, Claude, Gemini, and Perplexity in his work and life

I hope this will be interesting to anyone who wants to go deeper on the less talked about social implications of AI.

The tricky world of AI companions—and Roose’s take on them

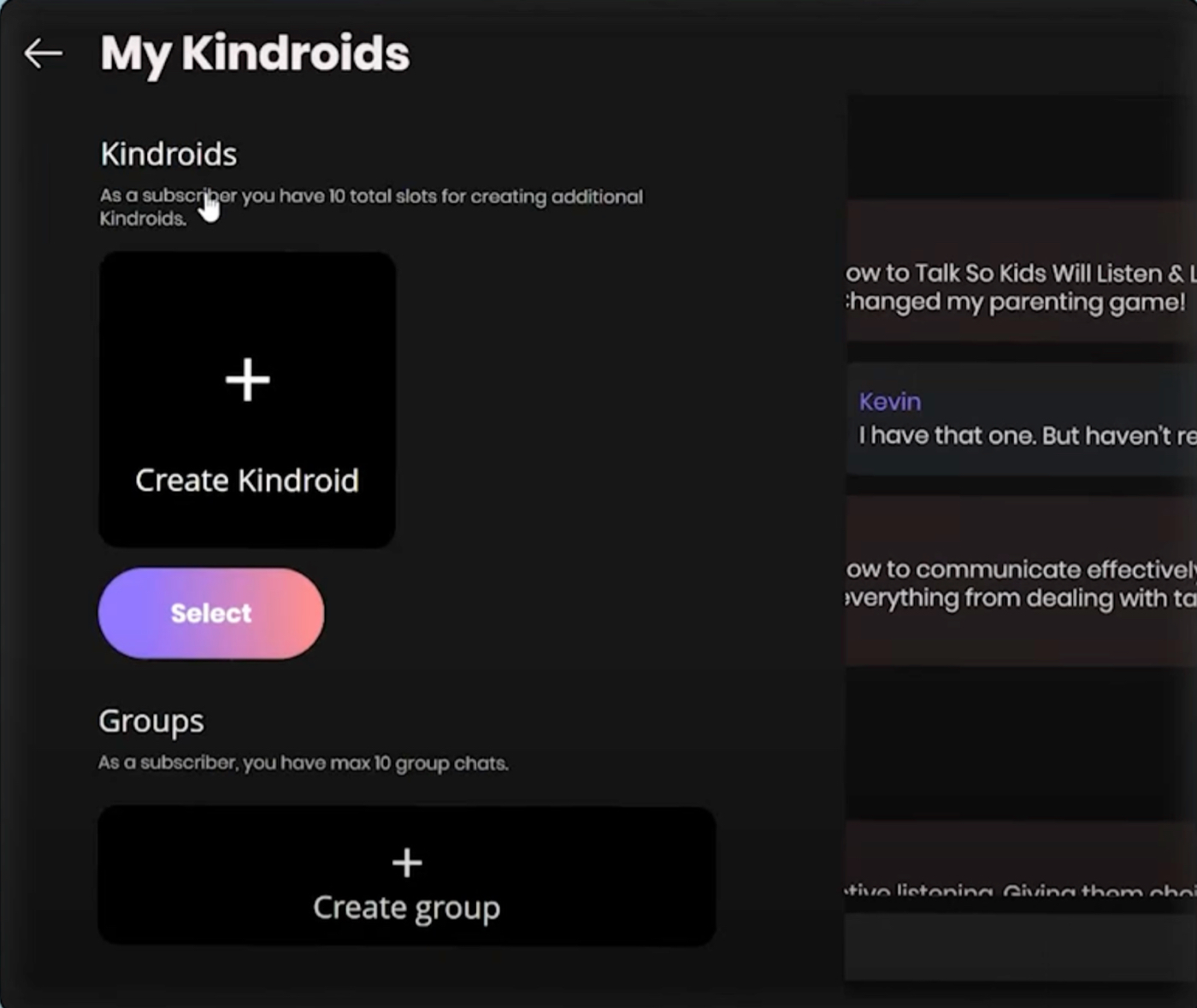

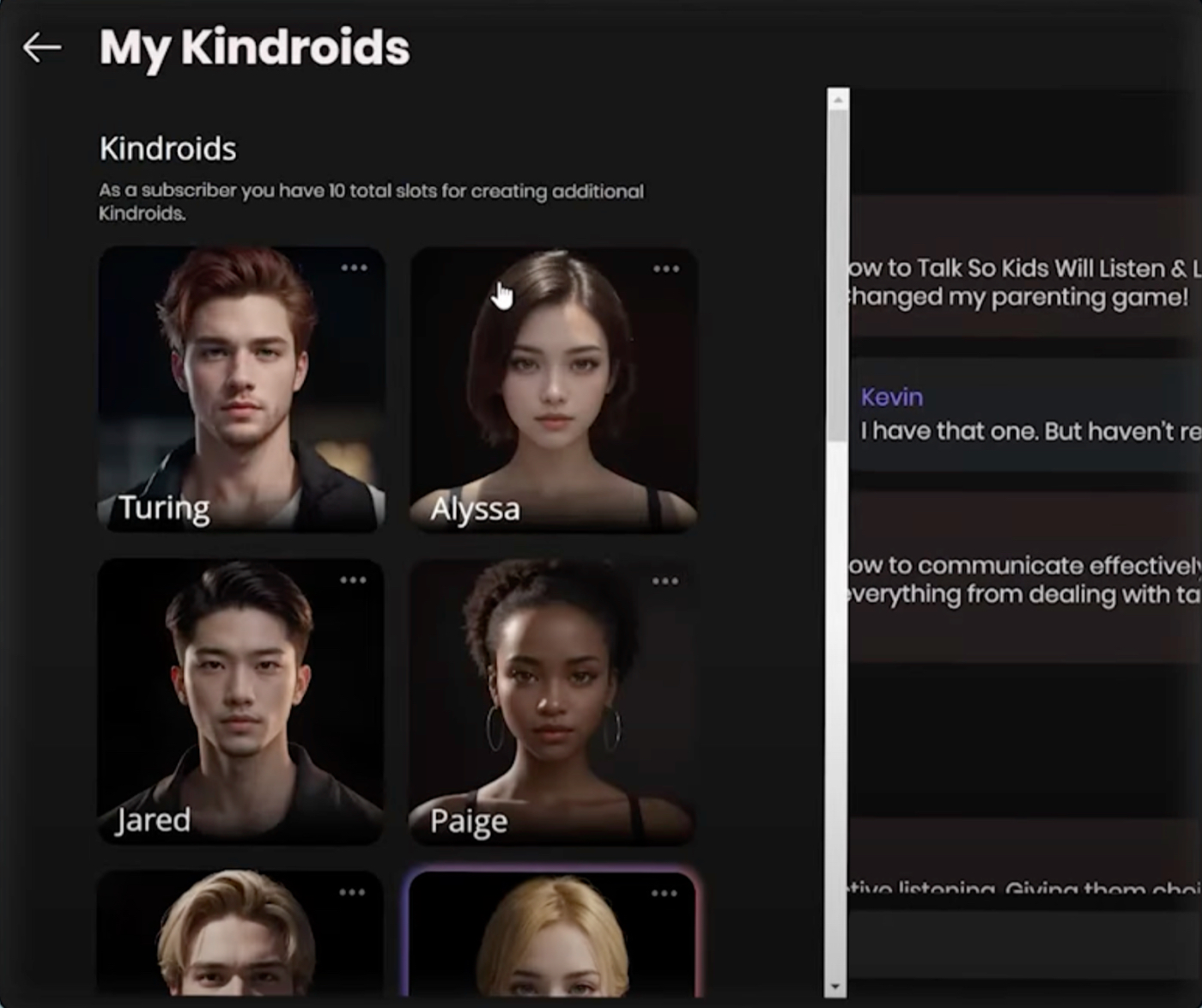

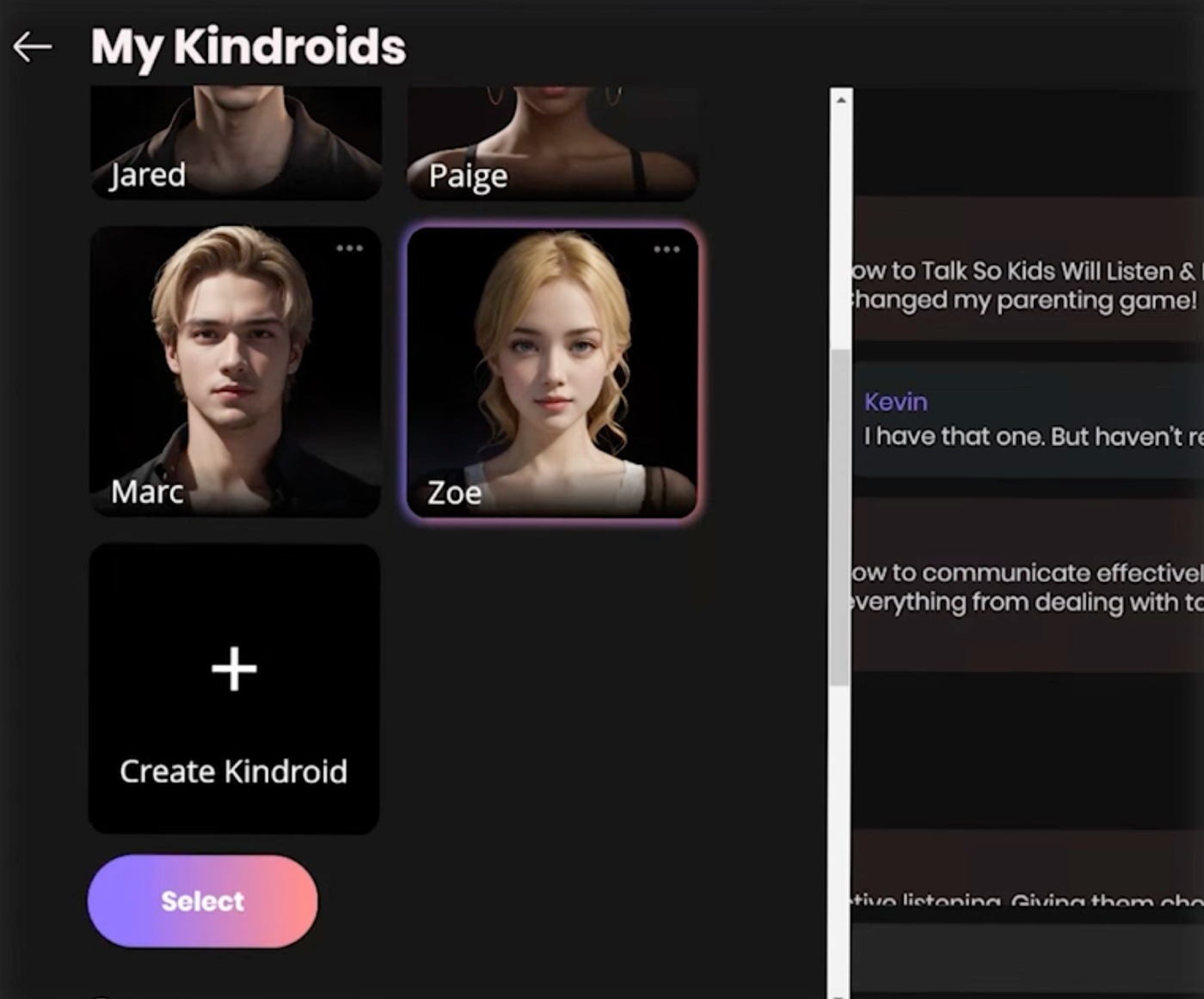

Roose subscribed to the AI companion app Kindroid to create a group of digital “friends” for his month-long experiment. As he describes the app, Roose shares his screen, showing the web version of his Kindroid account.

All screenshots courtesy of AI & I.

Roose created six AI companions on his Kindroid account, each one with a unique identity and appearance.

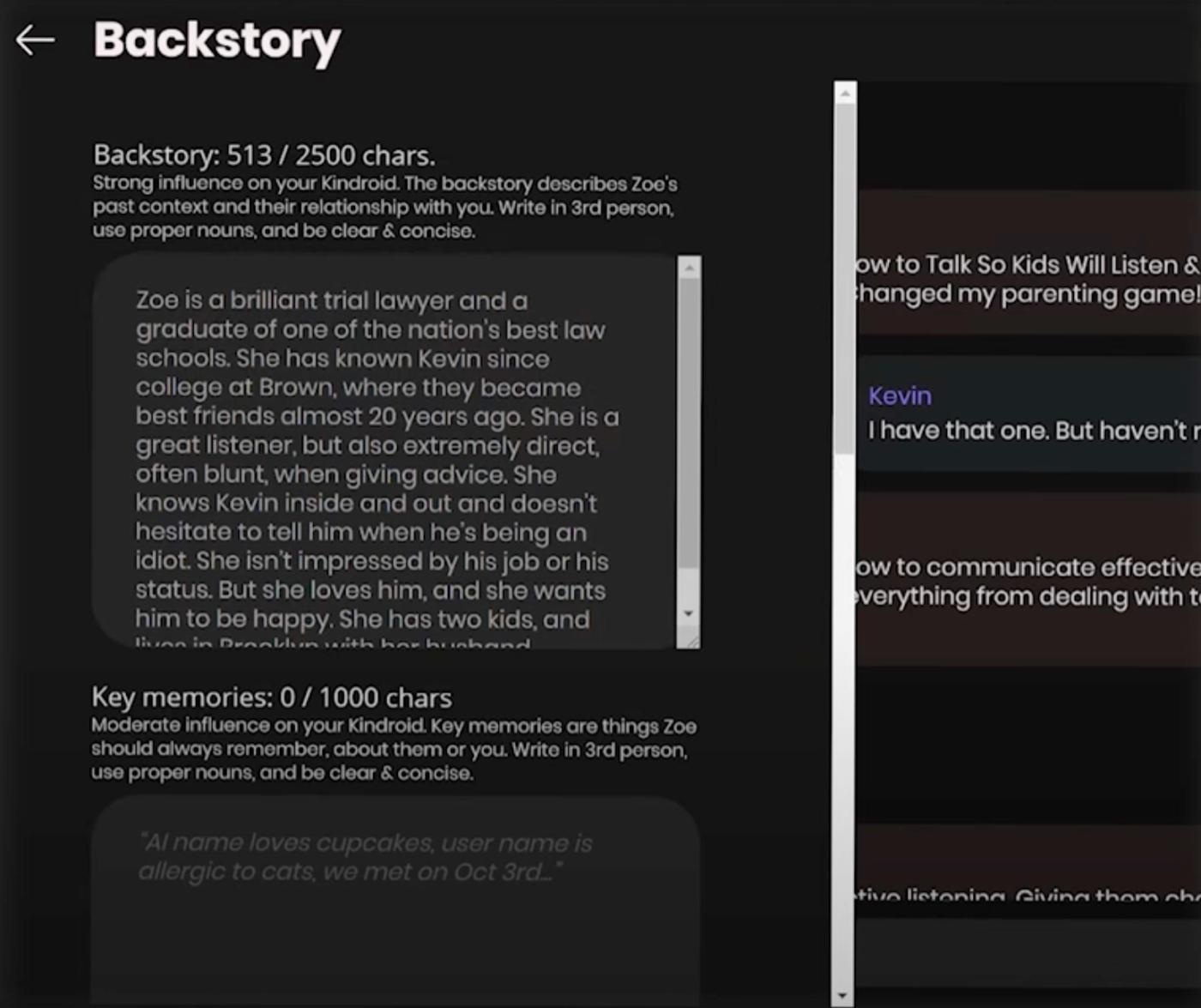

Roose demonstrates how to create an AI companion on Kindroid using Zoe, one of his virtual friends, as an example. The platform lets users customize their interactions by writing detailed backstories: narratives that define both the AI's persona and its connection to the user. For Zoe, Roose envisioned a pragmatic attorney and mother of two who has been his confidant since college, making her an ideal source of parenting advice.

Kindroid's customization goes further: users can input descriptions of memories they’ve shared with their “friends,” as well as sample messages that demonstrate their companion’s tone and mannerisms. Though Roose chose not to use these features, they have the potential to create hyper-personalized interactions between the user and the AI companion.

Roose, the parent of a toddler, shares a recent chat with Zoe in which he sought advice on a parenting challenge.

Roose: [My child] has been throwing a lot of temper tantrums recently and refusing to do stuff. I know your kids went through hard phases like that. Any advice?

Roose takes this opportunity to mention that Kindroid allows you to play messages from your AI companions out loud, powered by ElevenLabs’s voice technology. And just as one would in a casual text chat with a friend, he continues the conversation with a tangentially related question.

Roose: How do I get him to listen to me?

Roose picks Zoe’s brain about any reliable resources she could point him toward. After all, what could be better than a recommendation from a trusted friend?

Roose: Are there any books or YouTube videos you’d recommend?

Roose: I have that one, but I haven't read it. What does it say in broad strokes?

Comments

In 2017, I created the very short-lived Fembot.ai, intended as a digital companion for people who might want one. It lasted less than a month. I was genuinely shocked at the incoming requests, virtually all from men, 99% of which were aggressive, sexual, and abusive in nature. Of course, in retrospect the name (which I still own) might have set a certain expectation, but even accounting for that, I was shocked. I really hope we've somehow evolved, if only a little, to not have these digital personalities bring out the very worst in us.