Sponsored By: Reflect

This article is brought to you by Reflect, a beautifully designed note-taking app that helps you to keep track of everything, from meeting notes to Kindle highlights.

If you want to build a sustainable advantage in AI, the conventional wisdom says you have to build the technology required for a powerful model. But a powerful model is not just a function of technology. It’s also a function of your willingness to get sued.

We’re already at a point in the development of AI where its limitations are not always about what the technology is capable of. Instead, limits are self-imposed as a way to mitigate business (and societal) risk.

We should be talking more about that when we think about where sustainable advantages will accrue and to whom.

. . .

ChatGPT is a great example. It is awesomely powerful, but it’s also profoundly limited. Its limitations, though, are mostly not technological. They’re intentional.

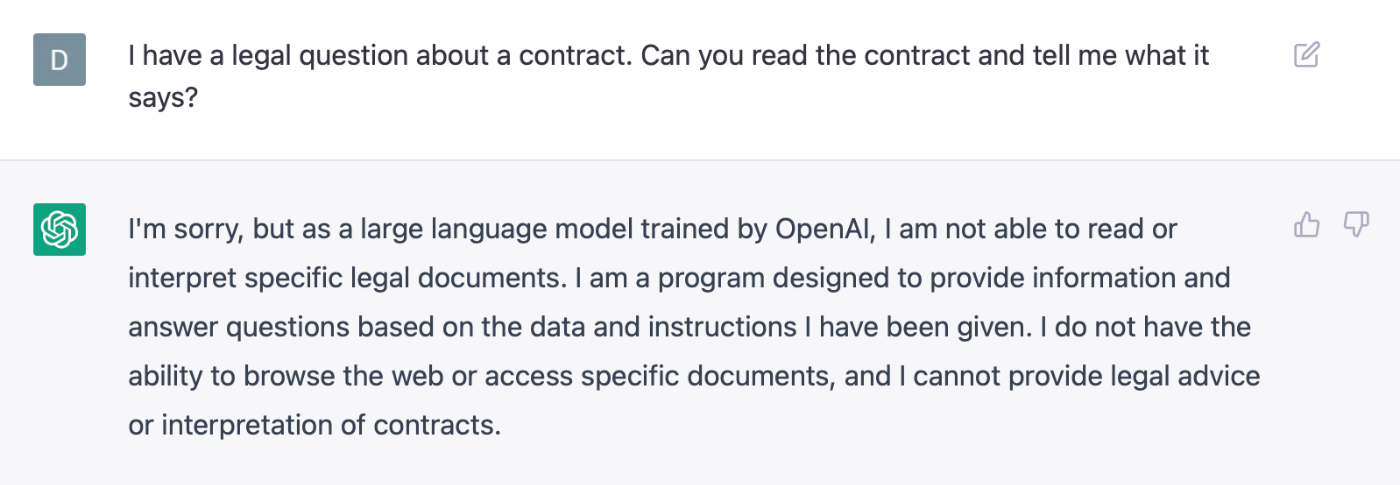

On the awesome side, it saved me ten hours of programming on a project I worked on this weekend. But for other use cases, it fails completely:

Again, this is not a limitation of the underlying technology. In both of these cases, the model could quite plausibly return a result that would answer my question. But it’s been explicitly trained not to.

ChatGPT is so popular because OpenAI finally packaged and released GPT-3’s technology in a form open and user-friendly enough for people to see how powerful it was.

The problem is that if you build a chatbot that becomes massively popular, the surface area for it to say things that are risky, harmful, or false grows considerably. But it’s very hard to remove a model’s ability to do harmful or risky things without also making it less powerful for everything else. So as the days have gone on, we’ve seen ChatGPT become less capable at certain kinds of questions as OpenAI patches holes and closes off prompt injection attacks.

It’s sort of like the tendency of politicians and business leaders to say less meaningful and more vague things as they get more powerful and have a larger constituency:

The bigger and more popular ChatGPT gets, the bigger the incentive to limit what it says so that it doesn’t cause PR blowback, harm users, or create massive legal risk for OpenAI. It is to OpenAI’s credit that they care about this deeply and are trying to mitigate these risks as far as they can.

But there’s a countervailing incentive working in the exact opposite direction: all else being equal, users and developers will want to use the model with the fewest restrictions on it.

A great example of this has already played out in the image generator space.

. . .
