In prompt engineer Michael Taylor’s latest piece in his series Also True for Humans, about managing AIs like you'd manage people, he simulates multiple AI agents to get more diverse and useful results from LLMs. Michael applies the wisdom of the crowds concept to working with AI and forecasts how economy-simulating AI agents will help us make better decisions in the future. Plus: If you have a question you’d like to ask an AI focus group, scroll to the end to submit it.—Kate Lee

Was this newsletter forwarded to you? Sign up to get it in your inbox.

In one of my first jobs in marketing, the CEO would abruptly change his mind based on the most recent person he talked to. We jokingly called it “management by elevator” because company strategy seemed to pivot based on whomever rode the elevator with him that morning.

As an entrepreneur, I sometimes catch myself doing the same thing, giving myself whiplash by abruptly changing my product roadmap after a single positive customer call. Perhaps I should talk to a wider pool of people, so that no one person unduly influences me. After all, I shouldn’t act the first time I see an opportunity; instead, I should wait to see whether patterns form across my interactions.

That’s easier said than done, though, because I have to actively go out and find more people to talk to about my ideas, which might mean asking for referrals, contacting people I don’t know, or even spending money on advertising or consulting. In other words, I need to step outside of the elevator. And there’s no guarantee that the people who are willing to spend time talking to me don’t have an agenda or bias that makes their feedback less valuable.

These days I work from home, so there is no elevator, but I do have access to Claude 3.5 Sonnet, my preferred AI model. Large language models have been trained on large swaths of the internet, so they can identify gaps in my knowledge. I use Claude as my brainstorming assistant, running most of my decisions past it for a second opinion.

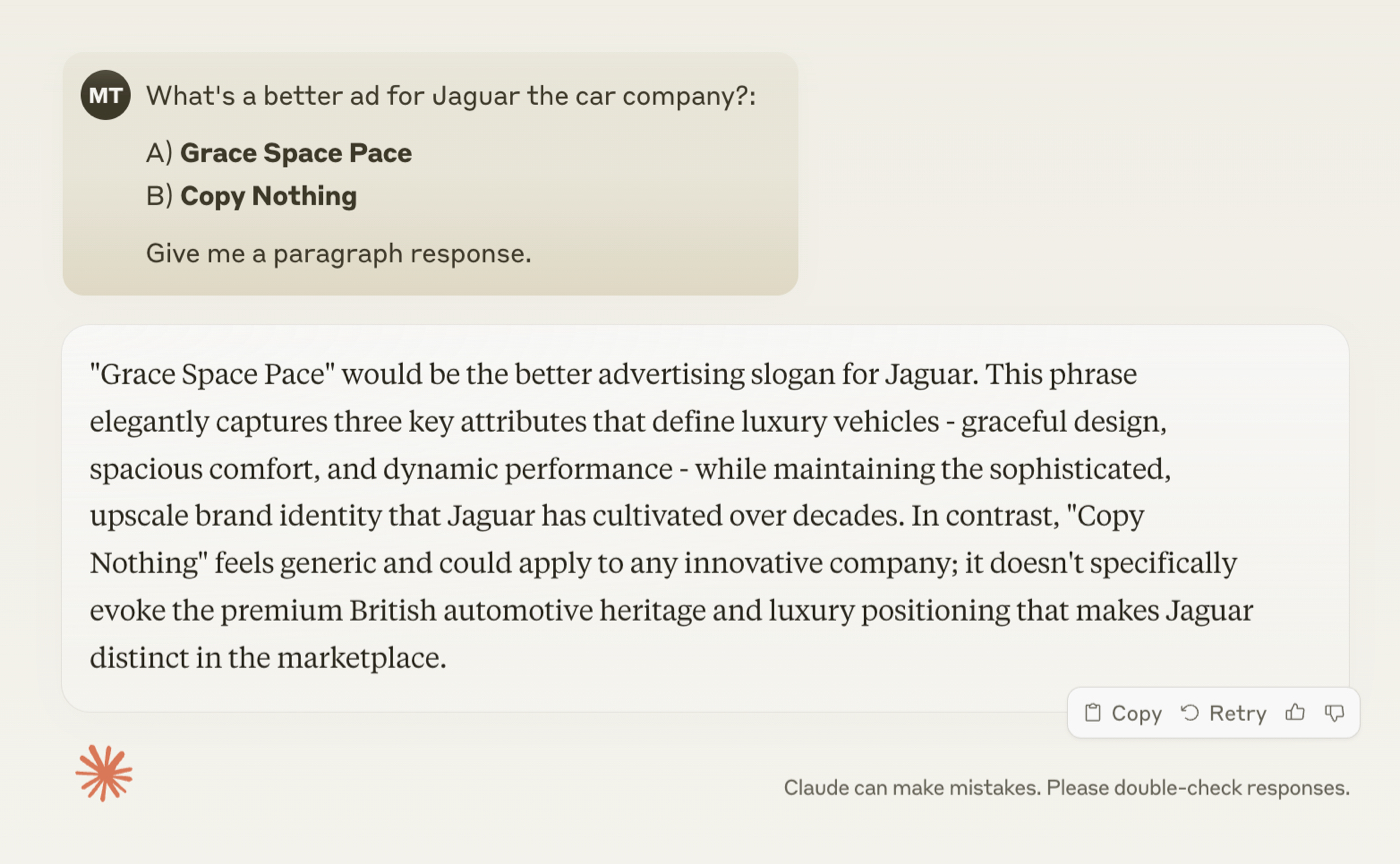

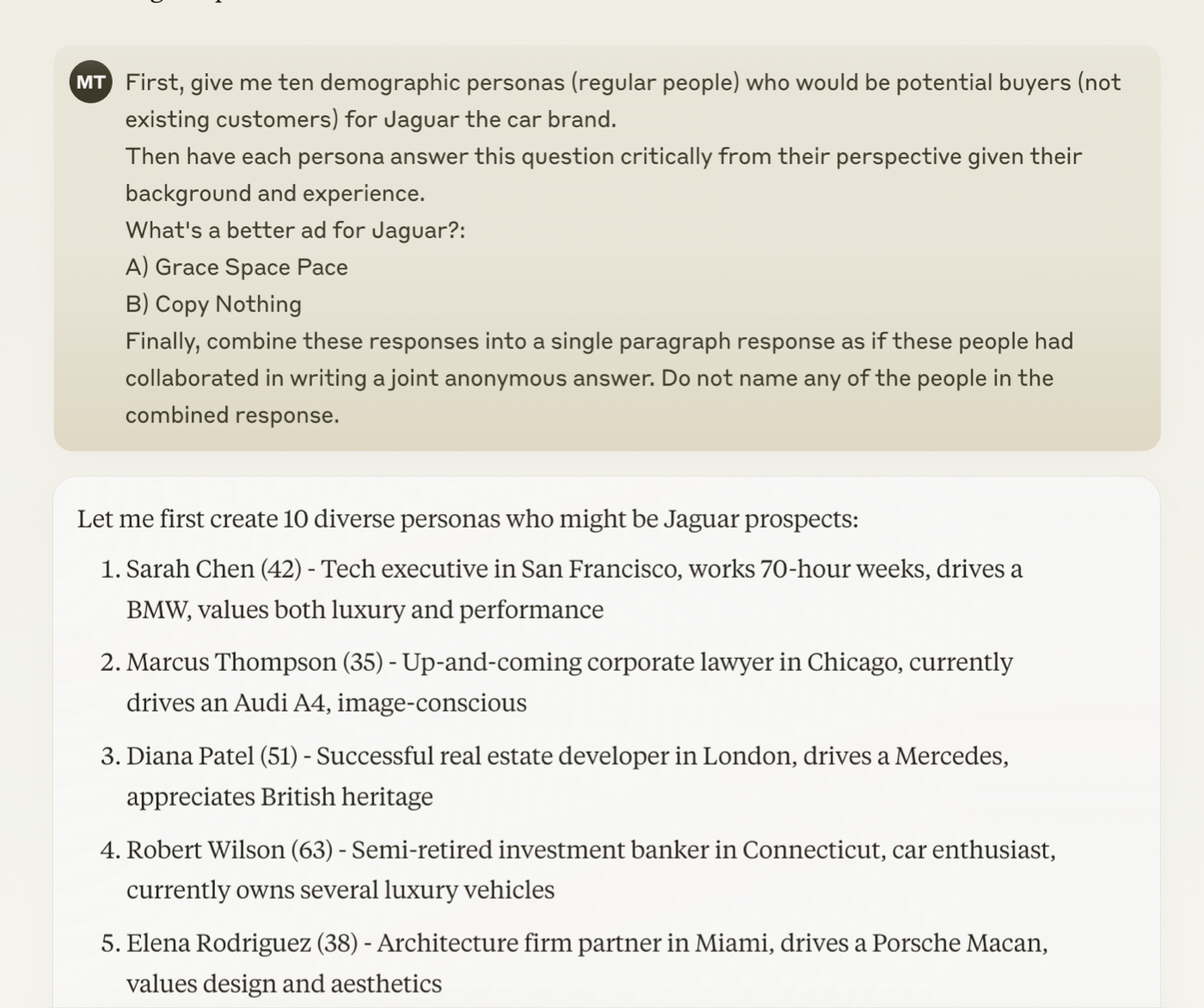

Still, it’s not a perfect solution. The problem with using AI for market research is that its feedback can be too generic and broad. LLMs are fine-tuned with reinforcement learning so they won’t offend anyone, but that also limits their ability to weigh in as a creative partner. For example, if you ask an LLM to rate a controversial new ad from Jaguar, it gives a bland and unhelpful response, without really considering why the ad might work for younger, less traditional car buyers.

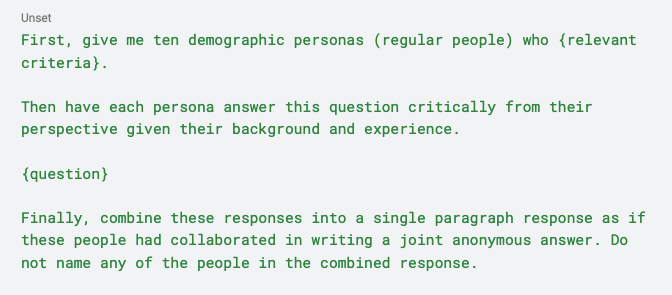

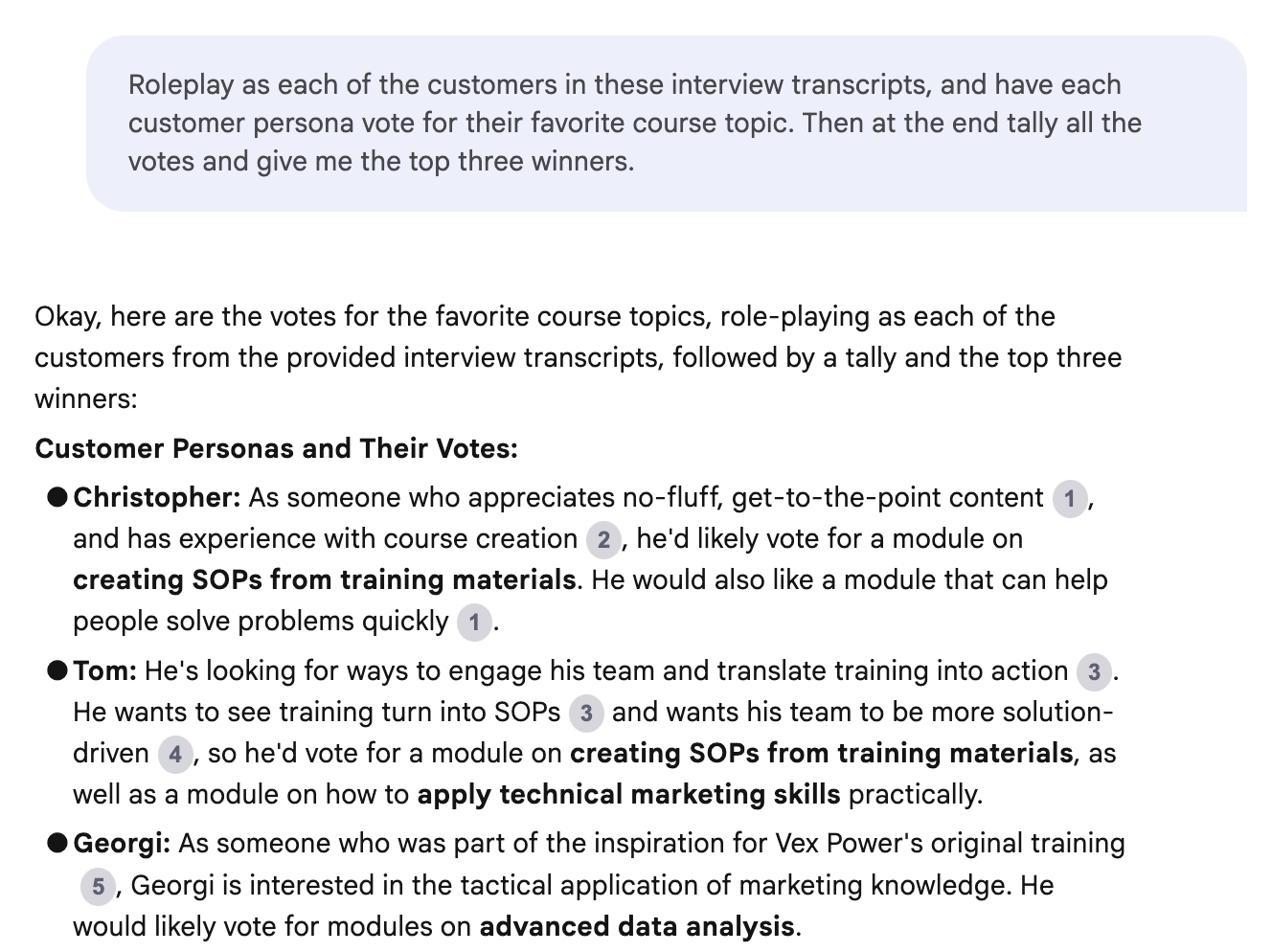

Source: Screenshots courtesy of the author, using Anthropic’s Claude 3.5 Sonnet.

One way to escape overly generic responses is role-play prompting: asking the AI to adopt a persona when it responds. But here’s the twist. Instead of asking the AI to adopt a single persona, you can ask it to simulate many personas, eliciting an individual response from each before combining their thoughts into a single-paragraph answer. I call this personas of thought, a riff on chain of thought, a technique for getting an AI to reason step by step. The difference is that this version reasons by simulating what various people would think, rather than thinking step by step as an individual.
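Here is a minimal Python sketch of how a personas-of-thought prompt could be assembled: generate the personas, elicit one response from each, then synthesize. It is not the author's template; the function name, step wording, and default of five personas are illustrative assumptions, and the function only builds the prompt string, leaving the model call to you.

```python
def personas_of_thought_prompt(question: str, audience: str, n_personas: int = 5) -> str:
    """Build one prompt asking a model to simulate a panel of personas,
    answer as each of them, and then synthesize their views."""
    if n_personas < 2:
        raise ValueError("personas of thought needs at least two personas")
    lines = [
        "Answer the question below by simulating a panel of people.",
        # Step 1: the model invents the personas.
        f"1. Invent {n_personas} distinct personas from this audience: {audience}. "
        "Give each a name, age, occupation, and one sentence of background.",
        # Step 2: elicit an individual, in-character response from each persona.
        "2. Write each persona's candid, first-person reaction. "
        "They are allowed to disagree with one another.",
        # Step 3: combine the individual responses into one answer.
        "3. Combine their reactions into a single paragraph that says where "
        "they agree, where they split, and why.",
        "",
        f"Question: {question}",
    ]
    return "\n".join(lines)


print(personas_of_thought_prompt(
    question="How would you rate Jaguar's new 'Copy Nothing' ad, and would it make you consider the brand?",
    audience="potential new buyers of Jaguar cars",
    n_personas=4,
))
```

Keeping all three steps in one prompt means a single model call does the generating, answering, and synthesizing; splitting them into separate calls is an equally reasonable design.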

A template for personas of thought

I frequently use this template to crowdsource opinions from multiple AI personas, and it reliably gives me more insightful and varied responses than simply asking ChatGPT or Claude to answer directly:

We can apply this approach to our Jaguar ad test to get a far more nuanced discussion, one that more closely resembles what you would hear if you ran a focus group full of humans. Here’s the prompt for this context:

After generating the relevant personas and their simulated responses, the model combines them into a final answer that is deeper and more insightful than the average LLM response:

The “Copy Nothing” ad doesn’t speak to me personally, but then again, I’m not in the target market for a new Jaguar. Whether you personally like the ad isn’t the point. By simulating reactions from a relevant audience (in this case, potential new buyers of Jaguar cars), you get a wider distribution of opinions and deeper insight into how effective the ideas you’re testing might be. You can use AI to explore more creative pathways without converging on dull, uninteresting responses.

What’s more, this technique scales. If you need more accurate responses, you can add more detailed information for the personas to take into account, or you can supply your own personas. Most businesses have done at least some market research, which you can repurpose into in-depth customer profiles to supplement this prompt and make the results more accurate. Now you have a digital twin of your best customers that you can bounce ideas off without bothering real people or paying to run focus groups.

Let’s walk through a real-world application: getting customer feedback on product ideas with personas of thought, using customer interview transcripts and Google NotebookLM. Then we’ll explore the science behind how AI agents predict human behavior, and finish by looking at what happens when we simulate thousands of AI agents in a virtual economy to see how humans might behave under the same conditions.

Cloning my best customers

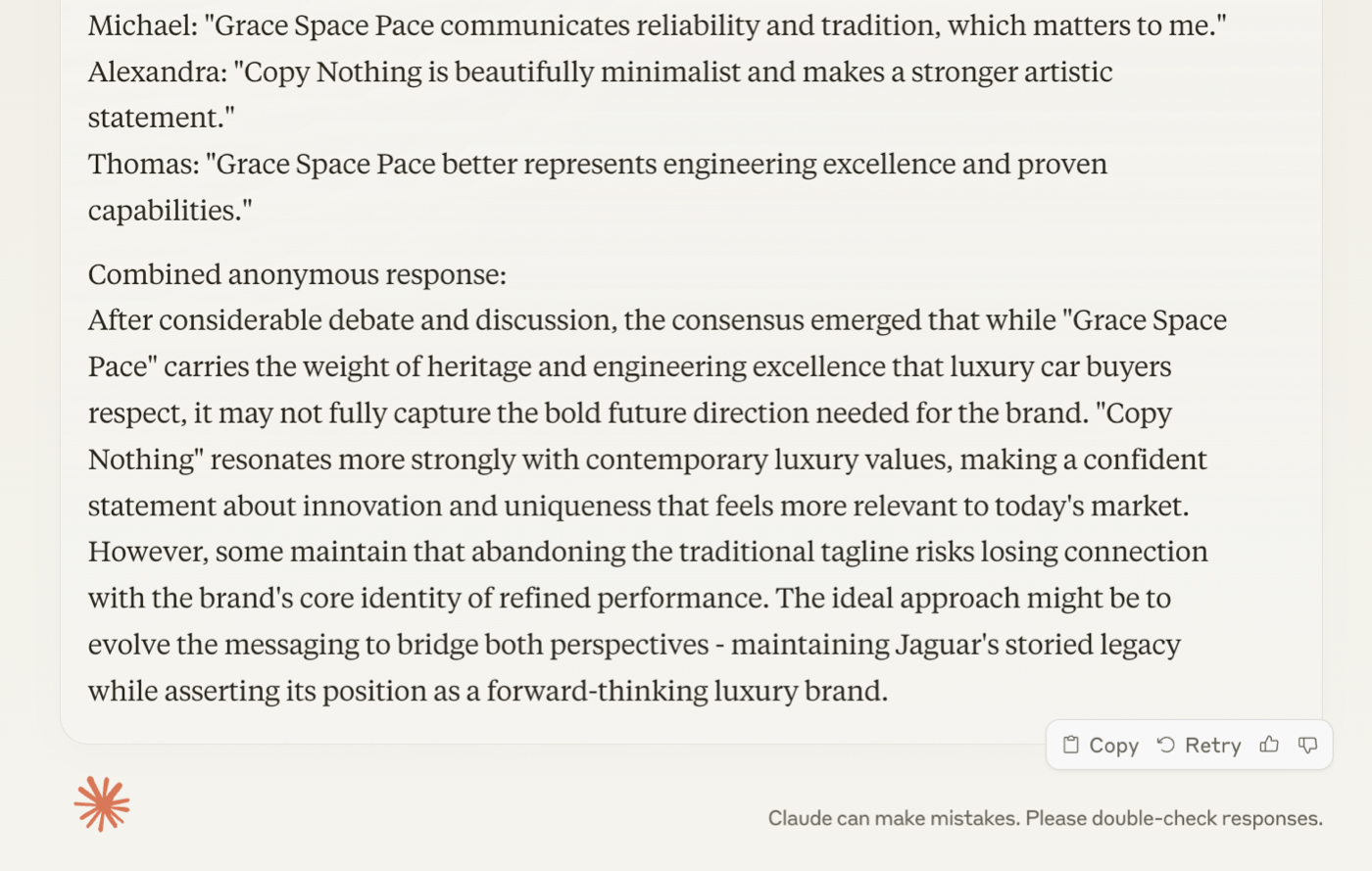

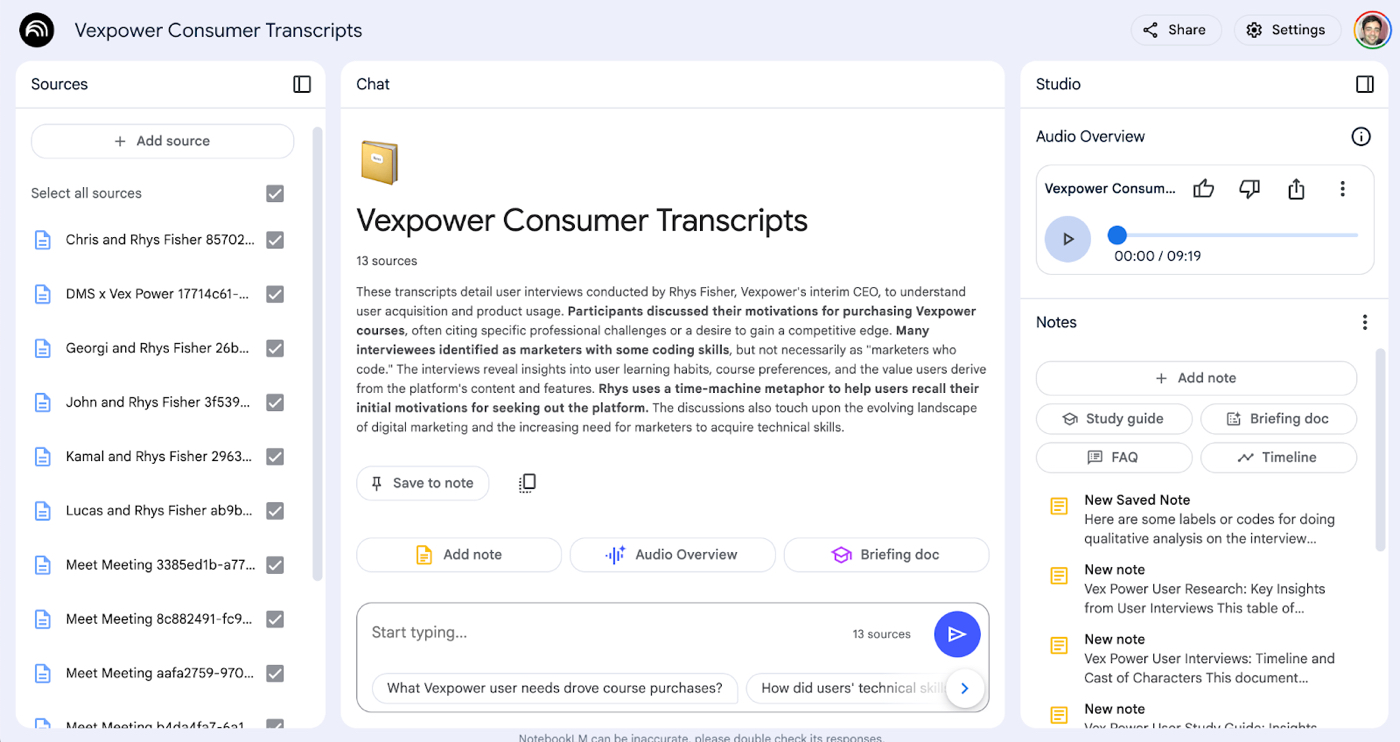

At my company, Vexpower, which produces a collection of online courses for marketers, we brought on an interim CEO who interviewed customers to determine growth opportunities for the business. With the customers’ permission, he recorded the calls using an AI notetaker called Grain (you could also use Granola, Otter, or Zoom). I exported all the transcripts into Google NotebookLM for further analysis.

The first thing I did was generate an audio overview—an AI-generated podcast—where the AI hosts engaged in a surprisingly insightful discussion about the contents of the interview transcripts. (Listen here if you haven’t experienced the magic of NotebookLM yet.)

Screenshots courtesy of the author from Google’s NotebookLM.

This approach beats a simple chatbot experience. When I ask Claude what lessons I should add to my collection of marketing courses, I get generic answers like Marketing Fundamentals, Brand Storytelling, and Marketing Ethics. Those would suit a general marketing course, but they would be terrible for my more data-driven, tactical audience of performance marketers and growth hackers. By grounding the AI’s answers in the customer interview transcripts, however, I get far better results from NotebookLM: topic ideas such as Data Science, Google BigQuery, and Brand Measurement, all things this audience needs to know.


The specificity of this answer is already a significant improvement over chatting with Claude or ChatGPT. It’s also a good demonstration of how humans can work effectively with AI: Build relationships with customers through interviews, and let the AI take notes and synthesize them.

We can make it even more useful by applying the personas of thought technique. Instead of only grounding our answers in the customer transcripts and having the model synthesize them, we can ask the model to role-play each customer individually and predict which new course titles they might ask for. I just need to ask NotebookLM to pretend to be my customers when voting on which course topics would be popular. Now I can ask simulated versions of some of our customers, Christopher (an engineer turned marketer), Tom (an agency owner), and Georgi (a former employee of mine), what they would like to learn, or about 100 other decisions I have to make, without going back to them in real life.
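NotebookLM handles the grounding inside its own interface. If you wanted to reproduce the per-customer role-play with your own model calls instead, one hypothetical approach is to build a separate prompt for each customer from their transcript, as sketched below. The `Customer` class, the `customer_roleplay_prompt` function, the character limit, and the placeholder transcripts are assumptions for illustration, not part of NotebookLM.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    description: str
    transcript: str  # that customer's interview transcript, exported from your notetaker


def customer_roleplay_prompt(customer: Customer, question: str, max_chars: int = 20_000) -> str:
    """Prompt a model to answer in character, grounded in one customer's interview."""
    # Crude truncation so a long transcript doesn't overflow the context window.
    transcript = customer.transcript[:max_chars]
    return (
        f"You are role-playing {customer.name}, {customer.description}.\n"
        "Base your answer only on what they said in the interview below. "
        "If the interview doesn't cover the topic, say you're unsure instead of guessing.\n\n"
        f"<interview>\n{transcript}\n</interview>\n\n"
        f"Question: {question}\n"
        f"Answer in the first person as {customer.name}."
    )


customers = [
    Customer("Christopher", "an engineer turned marketer", "(transcript text)"),
    Customer("Tom", "an agency owner", "(transcript text)"),
    Customer("Georgi", "a former employee", "(transcript text)"),
]
question = "Which new course topic would you most want to take, and why?"
prompts = {c.name: customer_roleplay_prompt(c, question) for c in customers}
# Send each prompt to your model separately, then compare or tally the answers.
```

One prompt per customer is a deliberate choice in this sketch: it keeps each simulated answer tied to a single transcript rather than a blend of everyone's views.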

My customers are busy and don’t have infinite patience, and I always have a laundry list of questions that would be impractical to ask. Being able to ask AI versions of my customers lets me gut-check ideas and iterate on them faster, without bothering the real ones.

LLMs are human brain simulators

Large language models aren't coincidentally similar to our brains; they were built that way on purpose. Researchers designed artificial neural networks based on how biological neurons function in our brains.

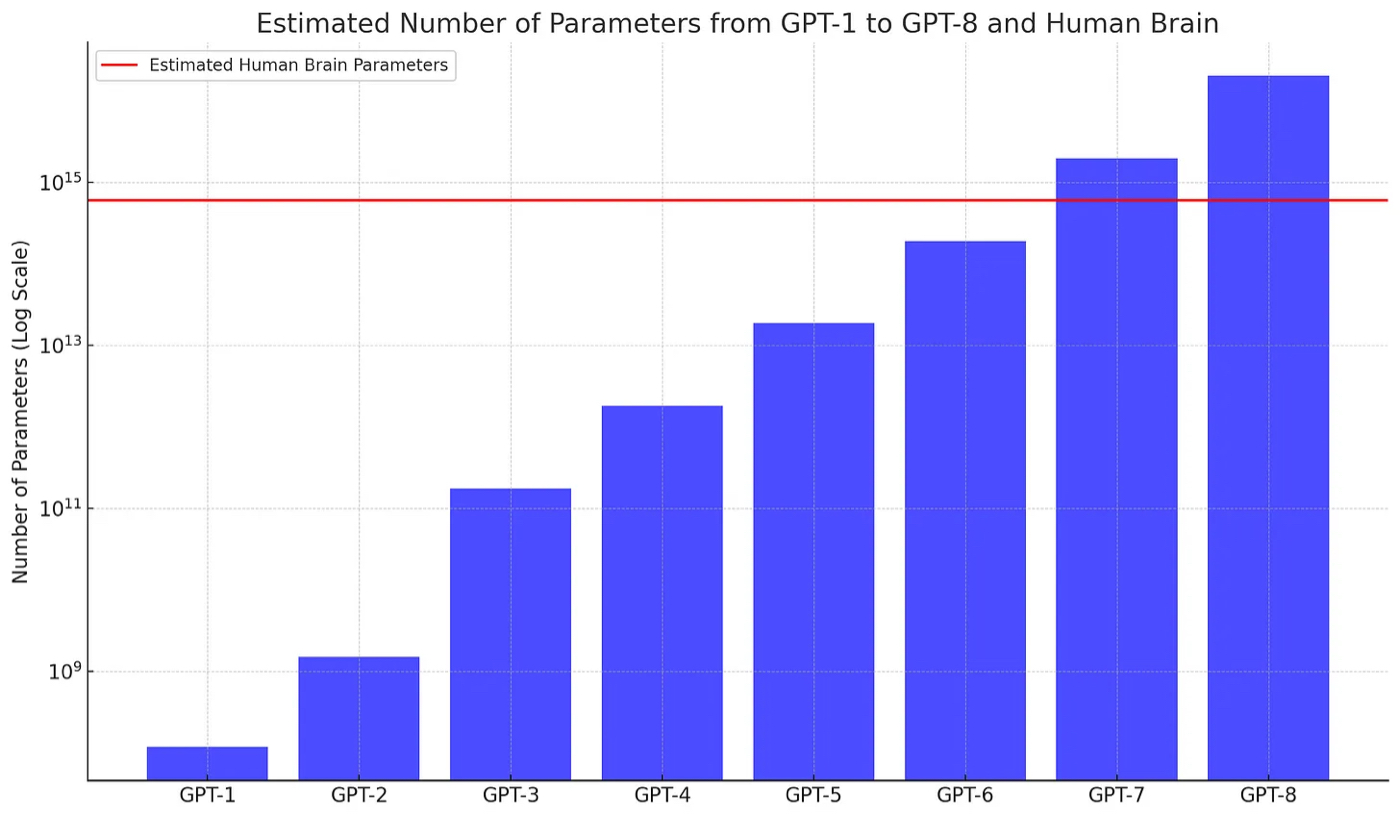

Similarly, the attention mechanism that forms the basis of modern transformer models like Claude and ChatGPT was inspired by the way humans pay attention to the most relevant words when they read. Instead of treating every word as equally important, humans and LLMs focus on the parts of a sentence that are most relevant to the wider context of the conversation. For example, if you asked ChatGPT to suggest a recipe for dinner tonight without further context, it might offer garlic butter chicken. But if you had previously mentioned in the chat that you’re a vegetarian and enjoy quick meals, it might personalize the response and suggest a veggie stir-fry instead.

Artificial neural networks aren’t a perfect representation of our brains, and they are still far smaller: The largest LLMs, such as GPT-4 and Claude Opus, are estimated to have around 1 trillion parameters (neither company has published an exact figure), about 1 percent of the more than 100 trillion synapses in the human brain.

Source: LinkedIn/Aki Ranin.

Even if LLMs don’t continue to grow exponentially smarter, today’s models were trained on much of the internet, so they’ve learned a great deal about how humans communicate with each other. They seem to be a good enough approximation of the human brain to generate realistic human responses to questions. In one study testing whether LLMs can pass the Turing test, in which participants must determine whether they’re talking to a human or a machine, people judged OpenAI’s GPT-4 to be human 54 percent of the time.
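To make the attention idea from the dinner-recipe example concrete, here is a toy Python sketch of scaled dot-product attention weights: a query is scored against each context token, and a softmax turns the scores into weights that sum to 1. The three-dimensional vectors are invented numbers chosen so that "vegetarian" is the most relevant token for a dinner query. Real transformers learn the query and key projections, use many attention heads and far larger vectors, and use the weights to mix value vectors; none of that is shown here.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: list[float], keys: list[list[float]]) -> list[float]:
    """Scaled dot-product attention weights of one query over several keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Invented 3-d vectors for the context "I'm vegetarian and like quick meals".
tokens = ["I'm", "vegetarian", "and", "like", "quick", "meals"]
keys = [
    [0.1, 0.0, 0.1],
    [0.9, 0.8, 0.1],
    [0.0, 0.1, 0.0],
    [0.2, 0.1, 0.1],
    [0.3, 0.1, 0.9],
    [0.4, 0.6, 0.5],
]
query = [1.0, 1.0, 0.5]  # stands in for "suggest a recipe for dinner tonight"

weights = attention_weights(query, keys)
for token, w in sorted(zip(tokens, weights), key=lambda pair: -pair[1]):
    print(f"{token:>10}: {w:.2f}")
```

Filler words like "and" end up with the smallest weights, while "vegetarian" dominates, which is the behavior that lets the model steer the recipe suggestion.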

If LLMs can role-play as humans, then it stands to reason they can be used to accurately predict human responses, for a fraction of the cost. As a former marketing professional, I find that this off-the-cuff response OpenAI CEO Sam Altman gave in an interview continues to both haunt and excite me:

“Ninety-five percent of what marketers use agencies, strategists, and creative professionals for today will easily, nearly instantly, and at almost no cost be handled by the AI—and the AI will likely be able to test the creative against real or synthetic customer focus groups for predicting results and optimizing.”

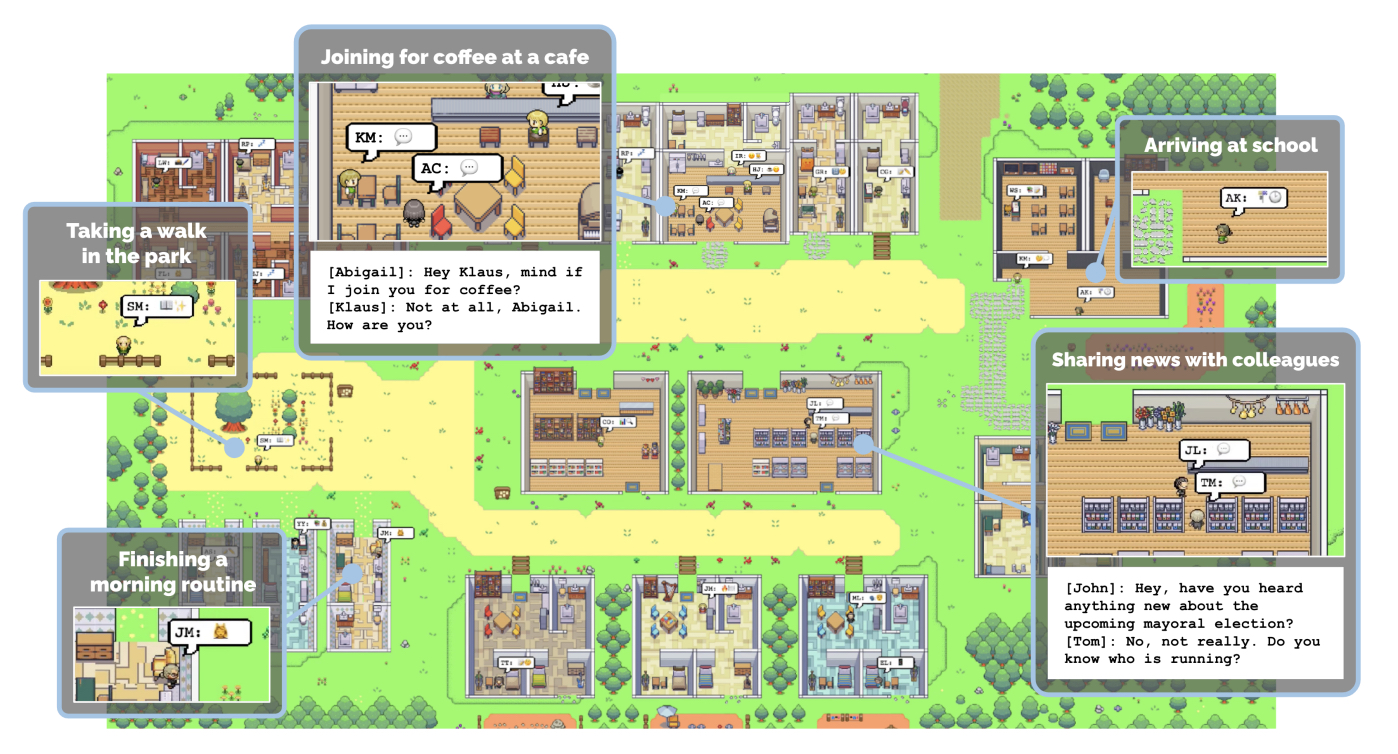

Maybe we’re not there yet, but researchers have run several notable experiments using LLMs to simulate real-world scenarios. In one of my favorite papers, researchers at Stanford dropped 25 AI agents into a Pokémon-style video game world to interact with each other. They found that the agents naturally spread information (“Sam is running for mayor”), formed relationships (“Maria has a crush on Klaus”), and coordinated social activities (“Come to Isabella’s Valentine’s Day party”).

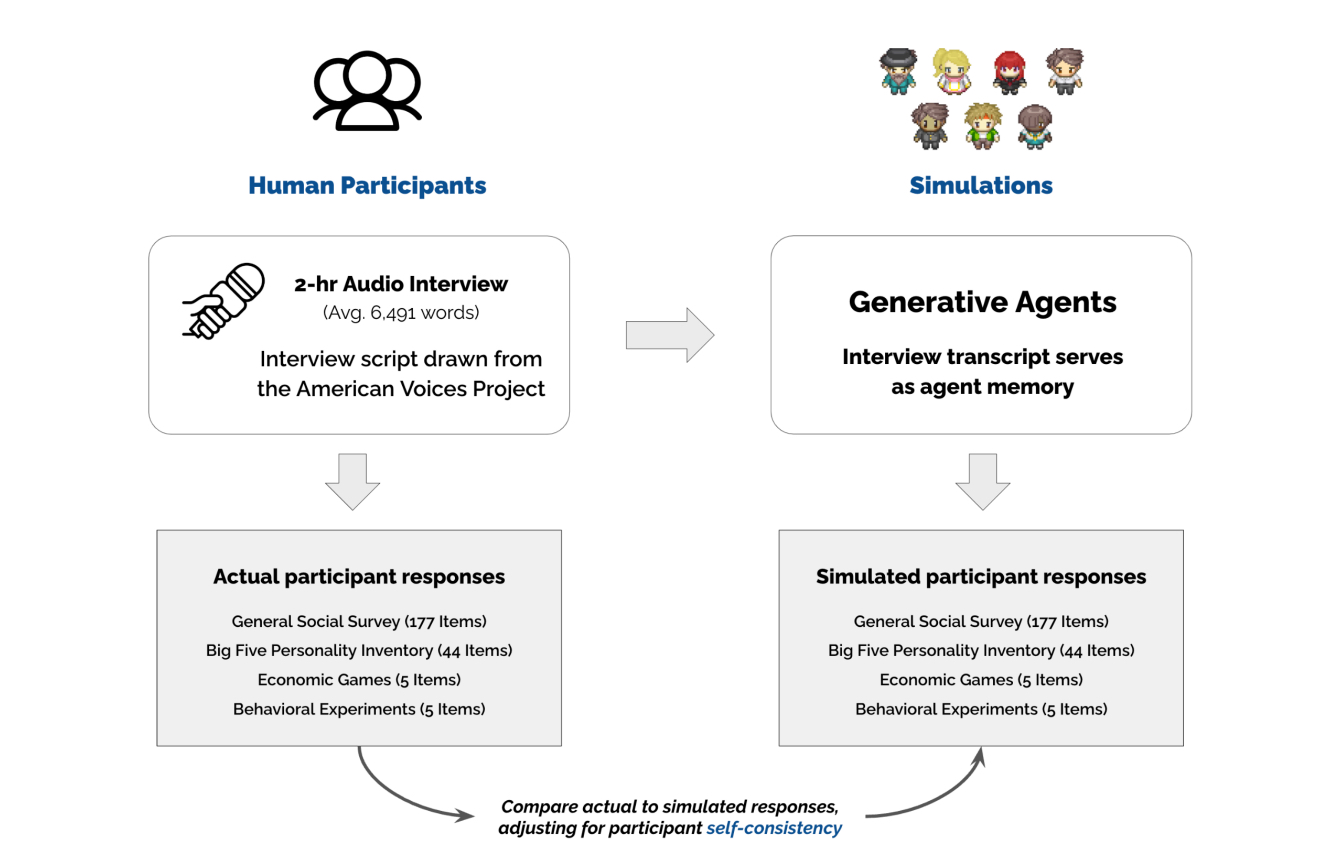

Source: Arxiv.

One of the authors of that paper followed up with a study that built 1,000 AI agents from real-world interviews to see how accurately the agents could model their human counterparts. From a two-hour interview with each participant, the researchers created an agent that predicted that person’s answers to survey questions as well as their actions in games. The agents were 69 percent accurate at predicting people’s survey answers, which is a stronger result than it first seems: When real people retake the same survey two weeks later, they give the same answer only 81 percent of the time, so the agents are about 85 percent as consistent with each person as that person is with themselves.
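The 85 percent figure is a ratio: the agents' raw accuracy divided by how consistently people reproduce their own answers. A minimal Python version of that arithmetic, with a hypothetical function name, looks like this:

```python
def normalized_accuracy(agent_accuracy: float, self_consistency: float) -> float:
    """Agent accuracy divided by how consistently people reproduce their own answers."""
    if not 0 < self_consistency <= 1:
        raise ValueError("self_consistency must be in (0, 1]")
    return agent_accuracy / self_consistency


# 69% raw agent accuracy, 81% test-retest consistency for the same people.
print(f"{normalized_accuracy(0.69, 0.81):.0%}")  # prints 85%
```

Dividing by the test-retest rate treats people's own consistency as the ceiling: an agent cannot be expected to predict an answer more reliably than the person gives it.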

Source: "Generative Agent Simulations of 1,000 People."

These papers have sparked interest from various startups (such as Synthetic Users, which compiled a list of these papers) in using LLM agents to solve market research and other social science problems. While nothing is close to production yet, developers are building tooling for spinning up AI agents to ask these questions. For example, Microsoft’s TinyTroupe library lets you define different personas who go through a thinking and acting loop (a common pattern in AI agents) in order to interact with you as the interviewer.

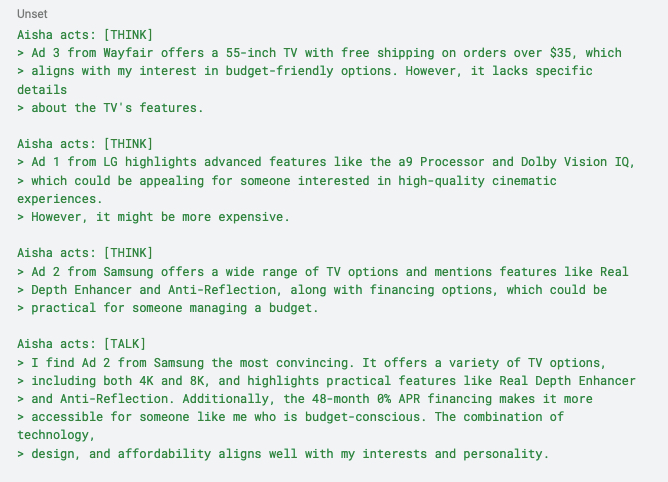

In the example below from the TinyTroupe GitHub repository, an AI agent called “Aisha” first “thinks” about three potential TV ads that a marketer submitted, then decides which one they find most convincing based on their persona and background (also specified by the marketer). The resulting summary tells the marketer which ad would be most compelling to their target audience.

Source: TinyTroupe on GitHub.

The appeal of conducting market research faster and more cheaply with LLMs is clear. Dominic Cummings, the architect of the Brexit campaign in the UK, says synthetic focus groups run within AI models are now indistinguishable from real groups of people. Whereas a real-world focus group takes weeks and $5,000 to $10,000 to conduct, a synthetic focus group can be run for almost nothing in 30 seconds, and it will “change the way election campaigns and political advertising are conducted,” according to Cummings.
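The TinyTroupe example above follows a think-then-act pattern. The sketch below shows that pattern in plain Python; it does not use TinyTroupe's actual API. `evaluate_ads`, the prompt wording, and the `fake_llm` stub are hypothetical, and in practice you would pass in a function that calls a real model.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function that takes a prompt and returns the model's text


def evaluate_ads(persona: str, ads: list[str], llm: LLM) -> tuple[str, int]:
    """Think-then-act loop: reason about the ads in character, then pick one."""
    ad_list = "\n".join(f"{i + 1}. {ad}" for i, ad in enumerate(ads))
    # "Think": free-form reasoning in character about every ad.
    thoughts = llm(f"You are {persona}.\nThink aloud about each of these ads:\n{ad_list}")
    # "Act": commit to a single choice, conditioned on the earlier thoughts.
    reply = llm(
        f"You are {persona}.\nYour earlier thoughts:\n{thoughts}\n\n"
        f"Which ad do you find most convincing? Reply with its number only.\n{ad_list}"
    )
    index = int(reply.strip()) - 1  # raises ValueError if the reply isn't a number
    if not 0 <= index < len(ads):
        raise ValueError(f"model chose an ad that doesn't exist: {reply!r}")
    return thoughts, index


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model call, so the sketch runs without an API key."""
    if "Reply with its number only" in prompt:
        return "2"
    return "The second ad speaks to how I actually use a TV."


ads = ["Ad A (placeholder)", "Ad B (placeholder)", "Ad C (placeholder)"]
thoughts, choice = evaluate_ads("Aisha (persona details written by the marketer)", ads, fake_llm)
print(ads[choice])  # prints: Ad B (placeholder)
```

Separating the two calls keeps the reasoning visible to the marketer, while the second call forces a single, machine-readable decision.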

Let’s run it past the agents first

A growing body of research supports the idea that LLMs can be used to predict real-world human behavior and conduct market research. There is even a claim that 1,000 personas built from voter demographics accurately predicted Donald Trump’s victory in the 2024 U.S. presidential election, an outcome few traditional polls predicted until late in the race.

While these projects are still in the research phase and need to be proven reliable in production, I expect that many difficult economic decisions will eventually be made only after checking what the AI agents say. Imagine the HBO series Westworld, but instead of riding horses and shooting people, marketers with clipboards survey the AI townsfolk to determine which marketing message is the most memorable, or what impact the latest tax policy enacted by the Sweetwater town assembly has had. It may not make for great TV, but companies spend millions on these decisions, and testing them virtually could save them from costly mistakes.

So where is all this going? As LLMs get better and the costs come down, we’ll scale from simulations with hundreds of agents to thousands or millions. The larger the number of AI agents, the more realistic the outcomes may become, as individual errors or anomalies will balance out, and we’ll be able to rerun scenarios multiple times to check how often an intervention leads to a specific outcome. Projects like Sid by Altera simulate over 1,000 autonomous AI agents that collaborate in a virtual world, with an emergent economy, culture, religion, and government. These large-scale AI civilizations can give policy makers and corporate leaders a playground for understanding how people may react to decisions and events in the real world.

Source: Project Sid on GitHub.

As LLMs automate more of our work, more of us may become what Every’s Dan Shipper calls model managers. We’ll do less work ourselves, but the cost of a mistake will be greater, because tens or hundreds of AI agents will be following our orders. As AI makes execution easier, the new bottleneck will be deciding what to do. The good news is that AI agents running in simulations will help with that, too.
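The claim that larger simulations become more reliable, and that reruns tell you how often an intervention produces an outcome, can be illustrated with a toy Monte Carlo sketch in Python. The agents here are biased coin flips rather than LLM agents, and the 48 and 52 percent adoption rates are made-up numbers. Note the assumption doing the work: errors only average out when they are independent, so a blind spot that every agent shares (for example, because they all run on the same model) would not shrink as you add agents.

```python
import random


def run_once(n_agents: int, adopt_prob: float, rng: random.Random) -> float:
    """One simulated run: each toy agent independently adopts with probability adopt_prob."""
    adopters = sum(rng.random() < adopt_prob for _ in range(n_agents))
    return adopters / n_agents


def outcome_rate(n_agents: int, adopt_prob: float, threshold: float = 0.5,
                 n_runs: int = 500, seed: int = 0) -> float:
    """Rerun the scenario many times and report how often adoption exceeds threshold."""
    rng = random.Random(seed)  # same seed per scenario, so both see the same random draws
    hits = sum(run_once(n_agents, adopt_prob, rng) > threshold for _ in range(n_runs))
    return hits / n_runs


# Hypothetical intervention: it nudges each agent's adoption chance from 48% to 52%.
# The outcome we care about: more than half of all agents adopt.
for n in (10, 100, 2_000):
    without = outcome_rate(n, 0.48)
    with_it = outcome_rate(n, 0.52)
    print(f"{n:>5} agents: majority adopts in {without:.0%} of runs without, {with_it:.0%} with")
```

With only 10 agents, the outcome rates of the two scenarios are close; with 2,000 agents, they separate sharply, because random noise in each run shrinks as the population grows.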

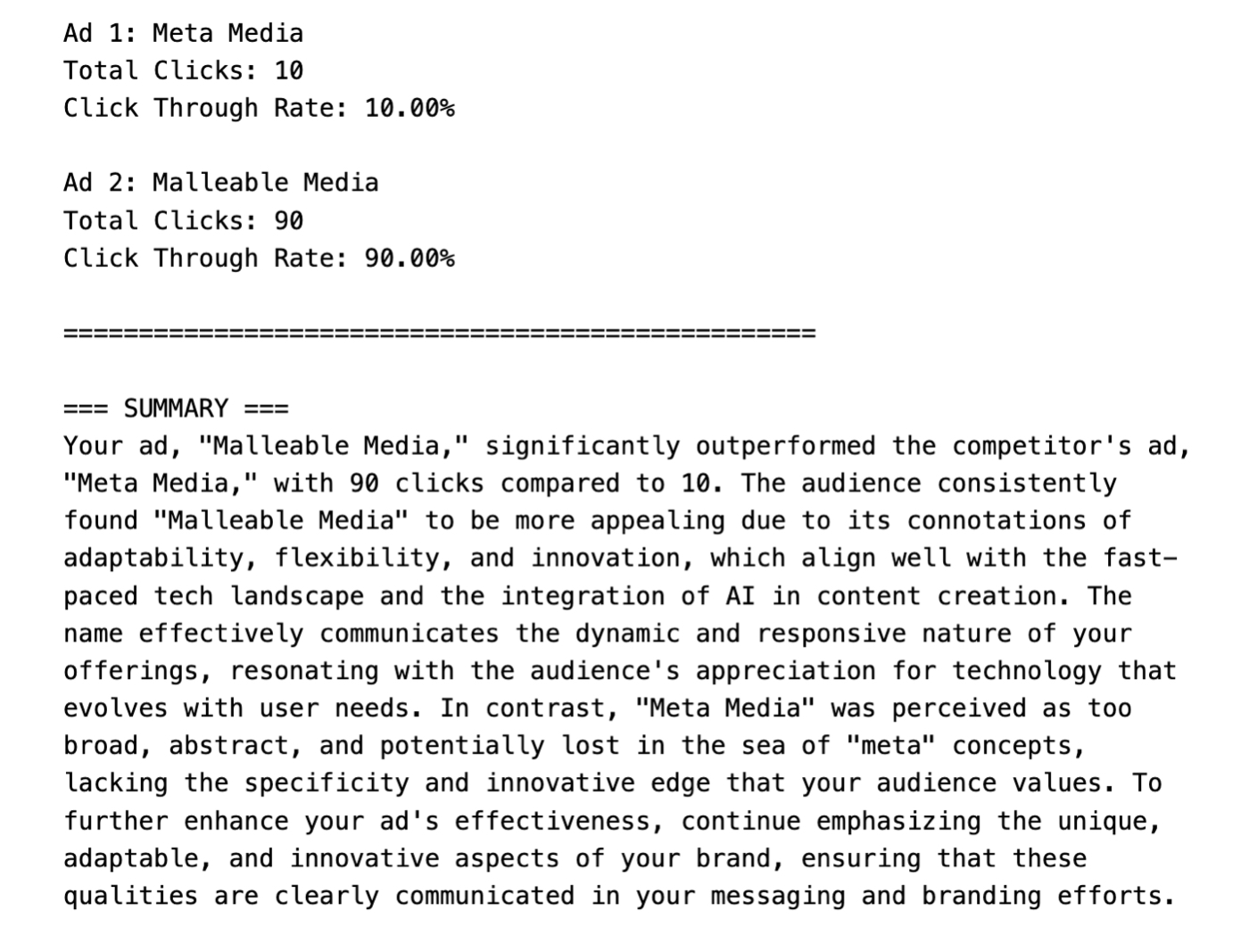

I wrote a script that lets me run my questions past 100 agents and summarize their feedback. Dan had a question about how to position Every, given that the company is a publisher that also builds software: “Should we describe Every as ‘malleable media’ or ‘meta media’?” Not only did we get a resounding answer, but we could also peer into the AI agents’ thoughts and summarize why they chose “malleable” over “meta”:

Source: X/Dan Shipper.
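That script isn't included here, so the following is a hypothetical Python sketch of the same idea: send one question to many personas in parallel, tally their choices, and keep each agent's reason for a later summary step. The one-line reply format, the function names, and the `fake_llm` stub are assumptions; the stub's 75/25 split is fabricated for the demo and is not Dan's actual result.

```python
import re
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def poll_agents(question: str, options: list[str], personas: list[str],
                llm: Callable[[str], str], max_workers: int = 8):
    """Ask every persona the same question in parallel; tally choices, keep reasons."""
    def ask(persona: str) -> tuple[str, str]:
        reply = llm(
            f"You are {persona}.\n{question}\nOptions: {', '.join(options)}\n"
            "Answer on one line as: CHOICE: <option> | REASON: <one sentence>"
        )
        choice_part, _, reason_part = reply.partition("|")
        choice = choice_part.replace("CHOICE:", "").strip()
        reason = reason_part.replace("REASON:", "").strip()
        return choice, reason

    # Model calls are I/O-bound, so threads let many agents answer at once.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        answers = list(pool.map(ask, personas))  # map preserves persona order

    votes = Counter(c for c, _ in answers if c in options)  # drop malformed replies
    reasons = {opt: [r for c, r in answers if c == opt] for opt in options}
    return votes, reasons


def fake_llm(prompt: str) -> str:
    """Offline stand-in: persona numbers divisible by 4 pick 'meta media'."""
    n = int(re.search(r"#(\d+)", prompt).group(1))
    pick = "meta media" if n % 4 == 0 else "malleable media"
    return f"CHOICE: {pick} | REASON: placeholder reason from persona {n}"


personas = [f"persona #{i}" for i in range(100)]  # swap in real persona descriptions
votes, reasons = poll_agents(
    "Should we describe Every as 'malleable media' or 'meta media'?",
    ["malleable media", "meta media"], personas, fake_llm,
)
print(votes.most_common())  # [('malleable media', 75), ('meta media', 25)]
```

A final model call over the lists in `reasons` could then summarize why each option won its votes, which is the step that turns a raw tally into the kind of explanation described above.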

If you have any questions you want to ask a crowd of agents, fill out this form and I’ll run them for you—and write about it on Every.

Michael Taylor is a freelance prompt engineer, the creator of the top prompt engineering course on Udemy, and the coauthor of Prompt Engineering for Generative AI.

To read more essays like this, subscribe to Every, and follow us on X at @every and on LinkedIn.

We also build AI tools for readers like you. Automate repeat writing with Spiral. Organize files automatically with Sparkle. Write something great with Lex.

Get paid for sharing Every with your friends. Join our referral program.
