News in AI waits for no one and nothing—not even Every’s quarterly Think Weeks.

We’d started last Thursday with an impromptu Demo Day, sharing our hackathon projects for Every subscribers, and finished it at a ping-pong rave in the cellar of a refurbished barn. Then came the tweet heard ’round the offsite:

As car after car made its way back to the house in upstate New York, almost every person walked through the door and beelined for their machines, ready to see if Anthropic had, to put it in the terms of the week, “made it slap.”

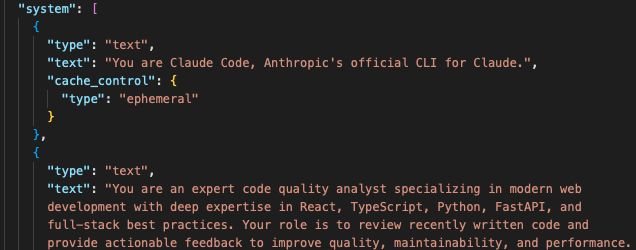

Which is how Kieran Klaassen (general manager of Cora) and Danny Aziz (general manager of Spiral) ended up at the dining room table around midnight, Kieran's Limitless pendant recording everything for posterity, telling the agents inside Claude Code what to do. Literally telling them, using Every's newest product, an AI voice tool called Monologue (currently in public beta).

To be clear, I wasn't actually at the big kids' table for this conversation. While they were debugging the future of AI orchestration, I was across the room learning what branches are and why they're a good thing to have. But thanks to Kieran's pendant and the magic of AI transcription, I can tell you exactly what happened when two senior engineers test-drove Claude's newest feature at what amounted to a very “Every” version of an afterparty.

The meta-agent ouroboros

If you're like me and the word “agent” tends to evoke Bond more than bots, here's what you need to know: Agents are AI assistants that can perform specific tasks independently—like specialized employees you can hire for a single job. Claude's new feature lets you create these digital workers on the fly, each with their own personality and expertise.

Agents are supposed to be the next evolution of AI coding, going beyond mere “coding assistants” to managing entire projects on their own. This is exactly the kind of technology that fits into what Kieran calls "compounding engineering"—building systems that build systems, where every iteration makes the next one stronger. He's been running multiple Claude instances in parallel for months, treating them like a coordinated dev team. Now Anthropic has built a version of that capability into Claude Code itself.

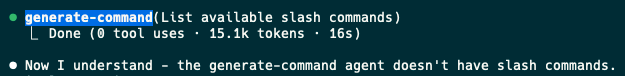

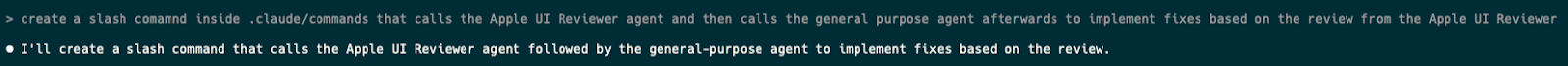

At one point during the late-night jam session, Danny decided to test whether they could create an agent that creates other agents. He fired up the "generate command" agent and asked it to create one more agent—a simple Apple user interface reviewer that would review his code changes.

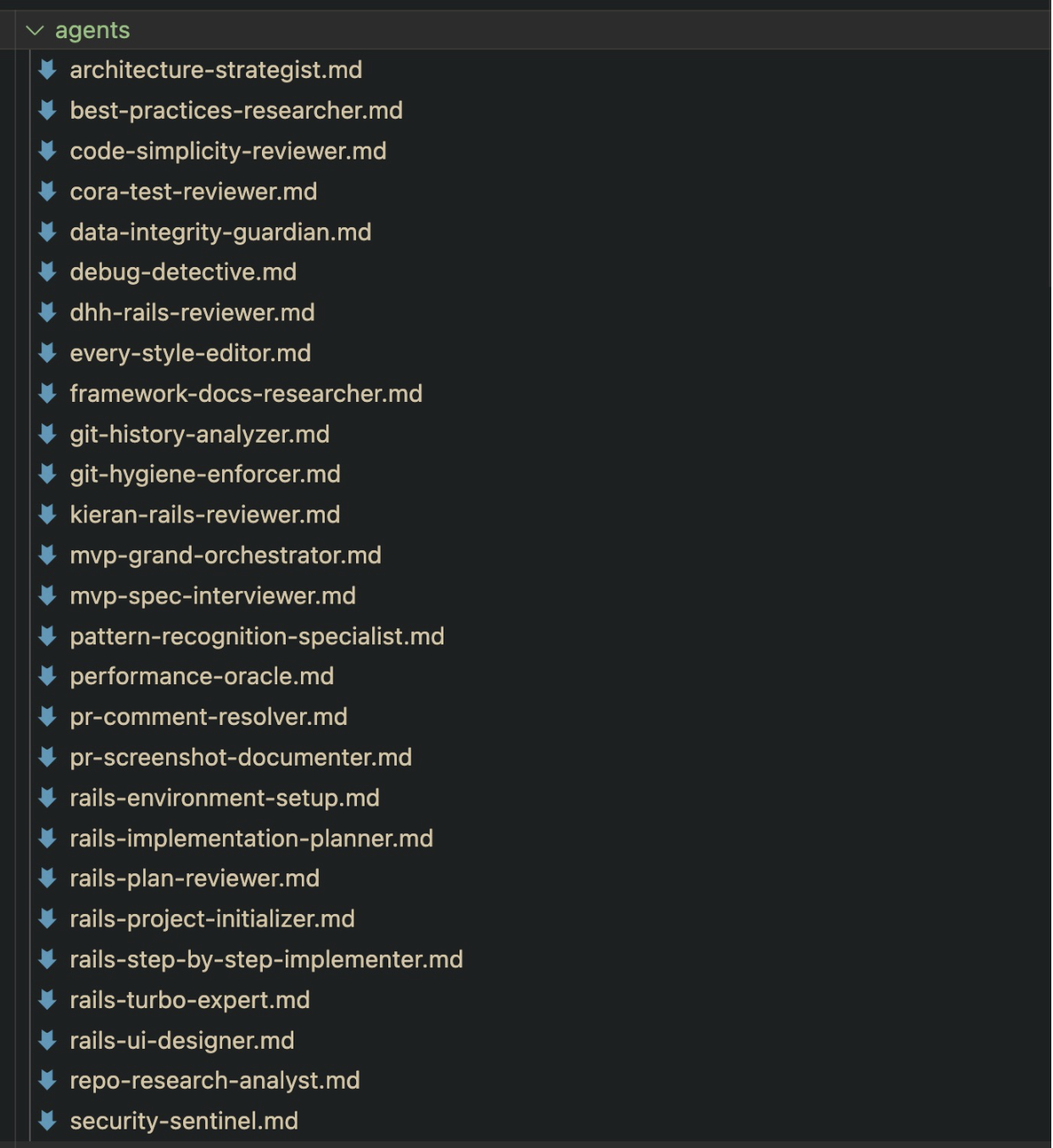

Instead of making one reviewer, the agent created an entire fleet of specialized agents—writing files with names like "fix-accessibility-touch-targets" and "implement-apple-system." It’s a classic case of AI doing too much and at the same time not enough. Kieran has written about this in the past: In its seeming eagerness to please, Claude often tries to solve problems you haven’t asked it to solve yet—and then solves them badly.

"Dude, where are all those?" Danny asked, frantically clicking through folders. The files containing the new agents were there, but they were just pretty-looking text documents that didn't actually work. The agents existed in some quantum state—created but not quite real. Like Schrödinger's chatbot. (Upon further investigation, Danny discovered the agents had been created, and the difficulty finding them was entirely user error.)

Agents can take commands, but not give them

The promise of agents is that they're supposed to be autonomous workers who can coordinate with each other—a digital workforce that manages itself. But when Kieran and Danny tried to get their newly-minted Apple UI reviewer to call other commands, Claude politely informed them: "The ‘generate command’ agent doesn't recognize a slash command. It only supports init [initialize new projects], PR [pull requests], commits, and review."

Slash commands are Claude Code's simpler tools: quick shortcuts you type with a forward slash, like /review or /generate. Claude’s subagents have access to only a handful of pre-programmed actions, so they can't use the same tools and shortcuts that make Claude Code powerful for human users. That leaves agents less capable than the people directing them, which undermines the premise of autonomous AI workers who can manage themselves. The relationship does run in the other direction, though: slash commands can create and control agents. So slash commands become the control layer. You spawn the subagents with them, then use those same commands to coordinate what each agent does, because the agents can’t coordinate on their own.

This led to an existential crisis around the dining room table about the difference between agents and slash commands. Kieran and Danny had been trying to figure out the architecture—when should you create an agent (a specialized AI worker) versus a slash command (a simple shortcut)?

On the surface, they seem similar—both are ways to automate tasks. But agents can run in parallel and have their own context windows, like having multiple workers who can tackle different jobs at the same time. Slash commands are more like simple tools in a toolbox, and you can only use one command at a time. What’s more, agents can't call slash commands, and they can't call other agents directly. The result is like managing an office full of brilliant specialists who can only communicate through you (and you’re the only one who knows how the printer works).

That isn’t necessarily a bad thing. There are plenty of cases where you don’t want different agents to talk to each other because they’d just get in each other’s way. In the week since that fateful night at the offsite, Kieran has figured out how to set up nearly two dozen agents, all primed to follow his exact instructions.
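For readers who think in code, the calling rules from this section can be written down as a tiny toy model. This is only a sketch of the relationships described above: the names `ALLOWED_CALLS`, `CallNotAllowed`, and `invoke` are made up for illustration and are not part of Claude Code.

```python
# Toy model of who may call what, as described in this section.
# Every name here is hypothetical; none of it is Claude Code's API.
ALLOWED_CALLS = {
    ("human", "slash_command"),   # you type /review
    ("human", "agent"),           # you tell an agent what to do
    ("slash_command", "agent"),   # a command spawns and directs agents
}


class CallNotAllowed(Exception):
    """Raised for a call the rules above rule out."""


def invoke(caller: str, target: str) -> str:
    """Return a short confirmation, or raise if the call is not permitted."""
    if (caller, target) not in ALLOWED_CALLS:
        raise CallNotAllowed(f"{caller} cannot call {target}")
    return f"{caller} -> {target}"


print(invoke("slash_command", "agent"))
for caller, target in [("agent", "slash_command"), ("agent", "agent")]:
    try:
        invoke(caller, target)
    except CallNotAllowed as err:
        print("blocked:", err)
```

Every permitted path starts with a human or a slash command, which is the "specialists who can only communicate through you" picture in miniature.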

Fresh context windows all the way down

The dream of AI agents is that they'd work like a well-coordinated team—sharing context, building on each other's work, passing information seamlessly like developers in the same office. You'd expect them to have some kind of shared workspace where they could see what their colleagues were doing.

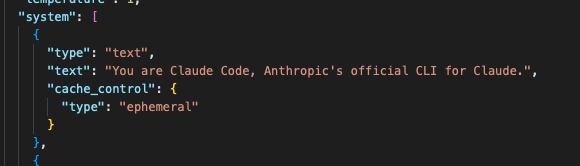

"This is a fresh context window, I think," Kieran said at one point, peering at network logs. And he was right—each agent has its own separate conversation history and memory, which means they can't see what other agents are doing unless you explicitly pass information between them.

The isolation protects agents from stepping on each other's toes. That’s great news if you’re like Kieran, and you want each agent to do what you specify and no more. It also means you need to architect your agent systems carefully; you can't just assume they'll figure out how to work together. There’s a tradeoff: Agents that collaborate run the risk of getting distracted or confused, or of simply breaking. Agents that stay in their lane and don’t talk to each other are more limited in their abilities, but they’ll do exactly what you tell them to. Anthropic made the call and decided not to let agents talk to each other. For now.
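The isolation described in this section can be illustrated with a small simulation. It is a sketch under loud assumptions: `ToyAgent`, its `history` list, and the `handoff` parameter are invented here to stand in for "a separate conversation history" and "explicitly passing information." Nothing below calls a model or reflects how Claude Code is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a subagent with its own context window."""
    name: str
    history: list[str] = field(default_factory=list)  # private to this agent

    def run(self, task: str, handoff: str = "") -> str:
        # The agent only ever sees its own task plus whatever was handed to it.
        prompt = task if not handoff else f"{task}\nHanded over: {handoff}"
        self.history.append(prompt)
        result = f"[{self.name}] handled: {task}"
        self.history.append(result)
        return result


reviewer = ToyAgent("ui-reviewer")
fixer = ToyAgent("fixer")

findings = reviewer.run("Review the settings screen")

# Without a handoff, the fixer knows nothing about the review.
fixer.run("Tidy up the settings screen")
print(any("Review" in entry for entry in fixer.history))

# With a handoff, the orchestrating step carries the findings across.
fixer.run("Apply the review findings", handoff=findings)
print(any(findings in entry for entry in fixer.history))
```

The design choice the toy makes visible is that nothing crosses between the two histories unless the code in the middle puts it there.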

What actually works

Despite the confusion, some patterns emerged from Danny and Kieran’s experimentation:

- Slash commands are orchestrators: While agents can't call each other or use slash commands, slash commands can spawn and direct agents. Use them to choreograph multiple agents working in sequence.

- Agents are specialists: Give them specific personas and focused tasks.

- Parallel execution is the superpower: You can run multiple agents simultaneously, each in their own sandbox (though you could achieve similar results by running multiple parallel Claude instances—agents just make it Claude-official and easier to manage).

Danny put these principles to the test by creating a code review orchestrator that would spawn multiple specialized reviewers. Imagine one agent checking your frontend code while another validates your backend logic, all triggered by a single slash command. It didn't quite work as intended (see the earlier note about pretty-looking files that did nothing), but you could see the vision: one command dispatching a team of specialized agents, each focused on its domain expertise. One agent checks for security vulnerabilities, another for code style, a third for performance, all running in parallel and all reporting back to a central orchestrating command.
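The shape of that orchestrator, one command fanning out to parallel specialists and collecting their reports, can be sketched in ordinary Python. This is not Danny's orchestrator and not Claude Code's mechanism: the reviewer functions are stubs, and `REVIEWERS` and `review_command` are hypothetical names chosen for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Reviewer = Callable[[str], str]


# Stub specialists. A real reviewer would do actual analysis; these only
# report how much input they were given.
def security_reviewer(diff: str) -> str:
    return f"security: checked {len(diff.splitlines())} lines"


def style_reviewer(diff: str) -> str:
    return f"style: checked {len(diff.splitlines())} lines"


def performance_reviewer(diff: str) -> str:
    return f"performance: checked {len(diff.splitlines())} lines"


REVIEWERS: dict[str, Reviewer] = {
    "security": security_reviewer,
    "style": style_reviewer,
    "performance": performance_reviewer,
}


def review_command(diff: str) -> dict[str, str]:
    """Play the role of the single orchestrating command."""
    with ThreadPoolExecutor(max_workers=len(REVIEWERS)) as pool:
        # Each specialist gets the same diff and runs independently.
        futures = {name: pool.submit(fn, diff) for name, fn in REVIEWERS.items()}
        # Results come back to the orchestrator, never to other reviewers.
        return {name: future.result() for name, future in futures.items()}


reports = review_command("line one\nline two")
for name, report in reports.items():
    print(name, "|", report)
```

The reviewers never see each other's output; only `review_command` holds all three reports, which mirrors the "reporting back to a central orchestrating command" arrangement.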

How to train your agents

Watching Kieran and Danny debug agent systems (via transcript) in the wee hours felt like watching the future of programming being invented in real time. Not the polished future from a keynote presentation, but the messy, "Why isn't this working?" future that you end up shipping.

We're moving from single AI assistants to swarms of specialized agents. But the tooling isn't quite there yet. It's as if Anthropic has built all the components for a relay race but hasn't taught the runners how to pass the baton.

As they wrapped up at around 12:30 a.m., having sort-of-successfully created agents that create agents, Danny summed it up: "So basically we figured out why to use one [agents] over the other [slash commands]."

The late-night vibe check left us with a rough sketch of what might eventually work: Slash commands for orchestration, agents for specialization. After a few more days with agents in his workflow, Kieran is managing more agents than there are human employees on Every’s entire payroll. He’s figuring it out on the fly, and it seems that Anthropic is as well. Still, it’s a glimpse of the agentic future, where engineers are no longer individual contributors, but managers tasked with deploying a team of digital workers to maximum effect.

Katie Parrott is a writer, editor, and content marketer focused on the intersection of technology, work, and culture. You can read more of her work in her newsletter.
