Sponsored By: Timely

This essay is brought to you by Timely, the automatic time-tracking platform that helps your team focus their energy on the right things at work.

Rationality and effective altruism brought us to this:

How could this happen? This is exactly what philosophy is supposed to fix. Ethics is the search for a system of rules that can’t be used by stupid people to do terrible things. If you are looking for candidate ethical systems that seem least likely to be co-opted to do bad things, it’s reasonable to pick something like effective altruism and rationality.

The ideas behind them are:

- You can use reason and quantitative evidence to figure out what it means to do good.

- You should put that knowledge into practice by doing as much good as you can.

Except, in this case, those principles seem to have failed spectacularly.

This is an existential moment for effective altruism and the rationalist community writ large. Their adherents need to look themselves in the mirror and ask: how did this happen? How did someone who seemed so grounded in their principles end up a fraud? How did he get so many true believers to assist him, wittingly or unwittingly, in committing it?

It’s also a pivotal moment for ethics and philosophy in general. How much good is philosophy if it keeps being co-opted by stupid people to do bad things? Can we make a philosophy that doesn’t have those problems?

Let’s explore.

. . .

Despite his protests to the contrary, I think Sam Bankman-Fried (SBF) actually is an effective altruist. Yes, I know that in his most recent, unhinged Vox interview he seems to claim that it was all a grift and that he never really believed it:

But let’s take a step back and look at the facts.

He was raised as a utilitarian. His parents are Stanford Law professors who studied it. His father said this to the New York Times: “It’s the kind of thing we’d discuss in the house.”

In college, he was a vegan who was about to devote his career to animal welfare when he had lunch with William MacAskill, a philosopher and leading effective altruist. MacAskill convinced him to try earning to give: deliberately choosing a high-paying career in order to donate most of the proceeds to charity. So after graduation he joined Jane Street and gave away half of his salary.

There's never enough time in the day, but with Timely you can make the most of every minute.

Timely already helps thousands of freelancers, agencies, and large companies track their time automatically. With its sleek and user-friendly interface, Timely can track every second spent in web and desktop apps automatically, so you can capture billable hours with zero effort.

So what are you waiting for? Get started with a personalized Timely walkthrough. We’ll even buy you a coffee just for trying it out!

Unless you think everyone from MacAskill to Sam’s parents to the fact checkers at the New Yorker is lying, you have to believe he started out steeped in the ideas that led to effective altruism, and acted for many years like someone committed to its principles, even before it brought him any sort of public acclaim.

And yet somehow he ended up a fraud along the way. How? Could it be that the philosophy itself is to blame? It’s important to find out.

. . .

Socrates started all of this. To him, the job of philosophy seemed to be arriving at correct definitions: a rule or set of rules that helps us cleave the world at its joints, to separate the good from the bad, the true from the false, the just from the unjust, and the holy from the unholy. He says this in a famous dialogue called Euthyphro:

“Teach me about…about what it might be, so that by fixing my eye upon it and using it as a model, I may call holy any action of yours or another’s, which conforms to it, and may deny to be holy whatever does not.”

Rationality, utilitarianism, and effective altruism are all attempts—in one way or another—to understand the world through that tradition. There’s a lot of terminology here so let me briefly explain:

Rationality is the idea that you can systematically improve the accuracy of your beliefs and decision-making, and that you should.

Utilitarianism is the idea that morally good actions are ones that produce good consequences for the most people.

Effective altruism is a modern movement of utilitarians. Many of them are rationalists.

The place to start here is utilitarianism. It’s the middle layer where rationality becomes effective altruism. Maybe if we examine utilitarianism we’ll figure out why it got co-opted, and then how to fix it.

Utilitarianism starts with the idea that we all have a moral obligation to do good, and that to judge an action as good requires looking at the consequences of that action relative to the consequences of alternatives. Your moral obligation is to do the action that does the most good for the most people. This way of looking at things is nice because it transforms doing good into something like a math problem: you can find ways to measure “goodness”—like the number of lives saved—and doing that will help you make the right decisions. Very rational.

Effective altruists comprise a movement of utilitarians who can trace their roots to the philosopher Peter Singer. In a 1972 essay, “Famine, Affluence, and Morality,” Singer argued that your calculations of what is good should not take proximity to suffering into account.

In other words, your moral obligation to a starving child in Alaska is not mitigated by the practical fact that you are not in Alaska and do not know the child. You have the same responsibility to save that child as you would to a child you might see drowning in a river on your way to work.

This concept is ingenious because it takes a firmly held moral intuition (that we should care more about the people we are closest to) and turns it on its head, to great effect. It created a generation of charities interested in “effective” giving, i.e., figuring out how to use money most efficiently to save lives. Saving a life in Africa by buying mosquito nets is far cheaper than saving a life in the U.S., so many of these charities focused on buying mosquito nets to maximize the amount of good their money could do.

EA also fostered a movement of young people—including SBF—who took high-paying jobs with the intention to make as much money as possible in order to donate their wealth to causes like malaria nets.

Over the last few years, EA has morphed. Its philosophical proponents didn’t just drop considerations of proximity when it came to moral calculations; they also dropped the distinction between present and future. In other words, they don’t just believe that you have the same moral obligation to a child suffering 1,000 miles away as to one suffering right in front of you. They believe that you also have a moral obligation to children who are not yet born.

Because children not yet born vastly outnumber the people currently in existence, working on projects that have a high chance of affecting those future children in a positive way should be, by far, your most important ethical priority. (This is most prominently laid out in What We Owe the Future by William MacAskill, the philosopher who convinced SBF to try to get rich.)

So far, so good. What’s the problem?

Where utilitarianism fails

The problem, critics argue, is that all of the moral systems that judge moral duty by its consequences lead to what the philosopher Derek Parfit called “repugnant conclusions.”

For example, if you start with a proposition that it is morally good to create a world with happy people in it, you end up having to endorse creating a world with a very large number of miserable people. This is easy to illustrate:

Start with World A with a population of 10 and a happiness level of 99%. Let’s assume you have the option to transform it into World B with a population of 20 million and a happiness level of 90%. If you want to do good for the greatest number, it’s your responsibility to bring about World B with more people in it—even though it means you’re reducing the average happiness of everyone in the world. If you accept that, then you’ve lost. You can then just rinse and repeat this process until you get a World Z with gargantuan numbers of unhappy people in it, and you have to call it better than World A.
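To make the arithmetic explicit, here is a minimal worked version of the comparison, assuming (as the example implicitly does) that “doing good for the greatest number” means maximizing total happiness $U$, i.e., population $N$ times average happiness $\bar{h}$. The 1% world at the end is a hypothetical illustration, not a figure from the original example:

$$
U = N \times \bar{h}
$$

$$
U_A = 10 \times 0.99 = 9.9, \qquad U_B = 20{,}000{,}000 \times 0.90 = 18{,}000{,}000
$$

Since $U_B > U_A$, the total view must rank World B above World A. The same logic keeps going: any world with average happiness $\bar{h}' > 0$ beats World A once $N' > U_A / \bar{h}'$. At a hypothetical $\bar{h}' = 0.01$, that threshold is $9.9 / 0.01 = 990$, so a world of 991 people who are only 1% happy already counts as “better” than World A.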

This seems fixable. But if you try, you’ll learn it’s quite hard, and that all of the obvious fixes fail. (Check out this Astral Codex Ten book review for an outline of the proof.)

You might say, “This is all very theoretical. It doesn’t mean that utilitarians would actually do something like this.” Except it looks like in at least one clear case, this is exactly what happened with SBF.

The argument is as follows:

If you believe that perpetrating fraud is likely to allow you to amass and donate massive wealth, and you believe that it is your moral duty to do the greatest good for the greatest number of people—then you might argue yourself into a place where you start to believe it’s the morally right thing to do.

After all, if the goal is to do the most good for the most people, then it makes sense to take risks that could potentially have a huge payoff. The downside is limited to fraud, and the upside is unlimited happiness for untold numbers of people.

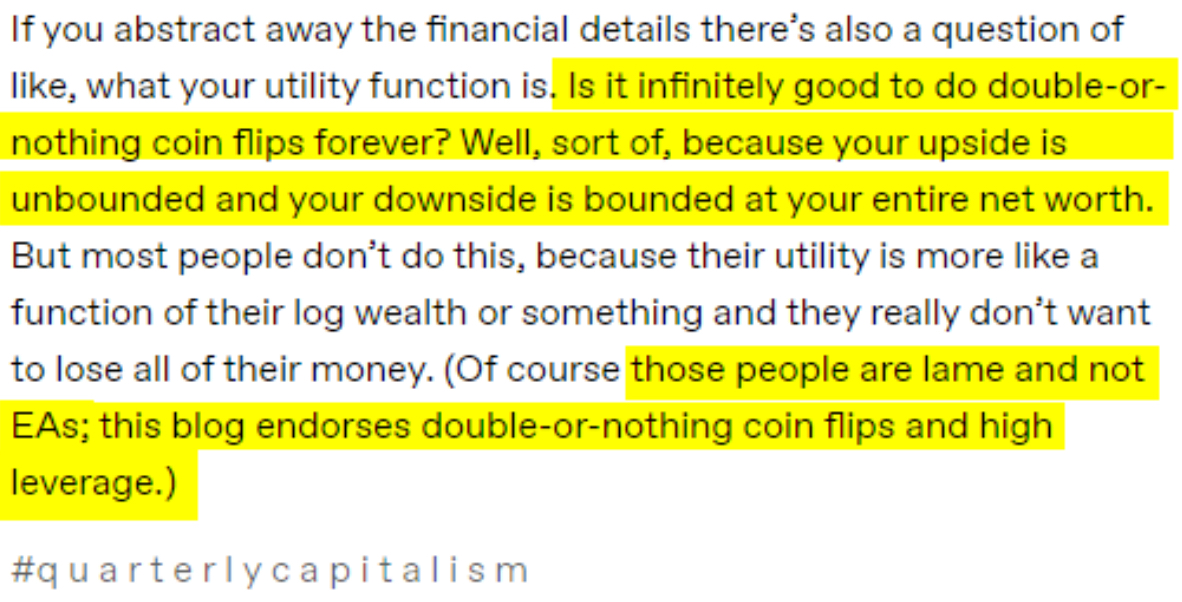

I can’t directly quote him making this argument, but Caroline Ellison, Alameda Research’s former CEO, expresses essentially this sentiment (minus the fraud stuff) on her Tumblr:

Given all of the evidence, it’s plausible to stand in the wreckage of FTX and the crypto markets, and the vast destruction of wealth and normal lives that followed from it, and declare, “This system leads to repugnant conclusions—of course this was going to happen! A better system might have prevented it.”

There is one major problem with this view, though: effective altruists are denouncing SBF in droves. Success has many philosophical bedfellows; frauds have none.

You would expect that if utilitarianism, rationality, and effective altruism led someone to accept repugnant conclusions in the name of morality, there would be some significant percentage of that community that shrugs its shoulders and says:

“Sam did what needed to be done to improve the world. To make an omelet, you have to crack a few eggs. To do the greatest good for the greatest number, sometimes you need to commit fraud.”

Instead, they’re saying things like:

So…is this really a philosophical problem? Or is it a more human one?

Occam’s razor says getting high beats ethics every time

Let’s consider a hypothetical example:

It’s the 1990s. A young trader gets rich making risky bets in the stock market. He buys a mansion, and all of his friends move in. He raises more and more money from investors and continues making risky bets that pay off.

He thinks he’s a god, and that he can do no wrong. Over time, his behavior becomes increasingly erratic—and his risky bets become more and more outlandish.

Finally, he makes a trade that blows everything up. His entire empire has collapsed, but no one knows. He has to make a choice: should he turn his trading empire into a Ponzi scheme in order to keep it going? Or should he give up and turn himself in?

He decides to create the Ponzi scheme and keeps it going for a while, but is eventually found out and goes to jail.

How would you explain what happened? Here’s a simple explanation that is invariant to the particular brand of ethical philosophy that he likes to smoke:

Risky investing and addictive drugs produce similar brain responses. You can get addicted to risky investing in the same way you can get addicted to cocaine. Addiction affects your ability to recognize and predict loss, so you literally can’t recognize or avoid bad decisions.

Over time your brain changes such that you take more risks than you would’ve before, and you can’t recognize when you’re going over the line—or you don’t care because you just need to get your next high.

Compounding this problem is the massive amount of status and respect you get for being rich and, presumably, brilliant. Everyone is encouraging your addiction, and it’s easy to write off people who don’t as being negative. You’re surrounded by an echo chamber feeding the worst parts of your brain.

When your luck runs out and your empire is about to collapse, you commit fraud to cover it up. Maybe along the way you were skirting pretty close to the line of fraud in small ways, so this one doesn’t seem much worse than your usual—or maybe you never did this, but something breaks in that moment and it leads you to make a horrific decision.

Either way, these scenarios are common among investors who end up committing fraud, and none of them, as far as I know, needed a complicated ethical framework to get them to do what they did.

I think this is pretty close to what happened to SBF, and it accounts for why he fraudulently misused customer deposits at FTX, and why the community he came out of explicitly rejects him even though you could argue that his actions are directly implied by its philosophy. Also, it’s clear that he was literally on drugs.

So what part do rationality and EA play in what’s going on?

. . .

If you’re an EA in the scenario above, your philosophy can compound the problem.

What getting too deep into this kind of philosophy can do is blind you to the fact that you’re a human being with human impulses, subject to the same desires, whims, and follies as other, non-philosophically inclined humans. If you’re not careful, rationality can help you rationalize everything you do, because, you say to yourself, you wouldn’t do it if it weren’t rational. Your emotions make a decision, and your logical framework comes in later to justify it.

No matter how far you get from your starting point, you can justify what you do in terms of rationality or the greater good. In the words of philosopher Richard Rorty: “Any philosophical view is a tool which can be used by many different hands.”

If you try hard, and question things enough, you can take any philosophical position too far. That’s why I think it’s telling that as effective altruism has become more popular, its proponents like MacAskill have explicitly flipped to saying things like:

After 2,000 years of trying to come up with better systems of ethics we seem to always eventually end up throwing up our hands and saying, “Do the best you can!”

I actually don't think that's a bad thing, or a reason to give up on philosophy, rationality, or effective altruism. Learning to use tools appropriately also means learning their limits. It means learning when logical arguments or an emphasis on better decision-making actually make things worse, rather than better.

I mentioned at the top that this entire line of philosophical thinking was initiated by Socrates a few thousand years ago. And if you read him closely, you'll find that he came to much the same conclusion:

“I am wiser than that fellow, anyhow. Because neither of us, I dare say, knows anything of great value; but he thinks he knows a thing when he doesn’t; whereas I neither know it in fact, nor think that I do. At any rate, it appears that I am wiser than he in just this one small respect: if I do not know something, I do not think that I do.”

Wisdom is being aware that we don't know. Philosophy gets twisted up when it forgets that.

My heart goes out to everyone affected by the events of the last few weeks.
