Thank you to everyone who is watching or listening to my podcast, How Do You Use ChatGPT? If you want to see a collection of all of the prompts and responses in one place, Every contributor Rhea Purohit is breaking them down for you to replicate. Let us know in the comments if you find these guides useful. —Dan Shipper


Movie-making has historically been prohibitively expensive. If you wanted to make a Hollywood movie in 2012, you’d likely need high-end cameras, a sound system with too many buttons, and maybe even a friend who knew Christopher Nolan personally. Even the cheapest films to make are still pricey for the average person: The Blair Witch Project and Paranormal Activity cost about $200,000 each after post-production.

But AI is dramatically reducing the cost of filmmaking. And AI tools have become a flywheel, broadening our ability to bring our own ideas to life.

Film and commercial director Dave Clark broke into Hollywood earlier this year with the sci-fi short film Borrowing Time, which he made by himself on his laptop using AI tools like the image generator Midjourney, the text-to-video model Runway, and the generative voice platform ElevenLabs.

Clark talks about how he managed this in a recent episode of How Do You Use ChatGPT?, in which he makes a movie live on the show with Dan Shipper. He goes as far as to say that he couldn’t have made the three-minute short without AI: it would have been too expensive, and Hollywood would never have funded it. But after the short went viral on X, major production houses approached him with offers to turn it into a full-length movie. Clark had his proof of concept.

In this interview, Dan and Dave explore AI tools that generate images and videos, and how budding filmmakers can use them to come up with ideas, test those concepts, and perhaps even gain enough traction to secure funding.

I’m not aspiring to break into Hollywood—but I came away from this episode amazed at what AI is capable of, and inspired to use it to put my words into action.

Read on to see how Dan and Dave use AI tools to make a short film in which Nicolas Cage uses a haunted roulette ball to resurrect his dead movie career. They work with a custom GPT that Clark has tailored to his own creative style. The GPT, called BlasianGPT to represent Clark’s Black and Asian heritage, is a text-to-image generator.

First, we’ll give you Dan and Dave’s prompts, followed by screenshots from BlasianGPT and the other AI tools they used to make the movie. My comments appear in italics.

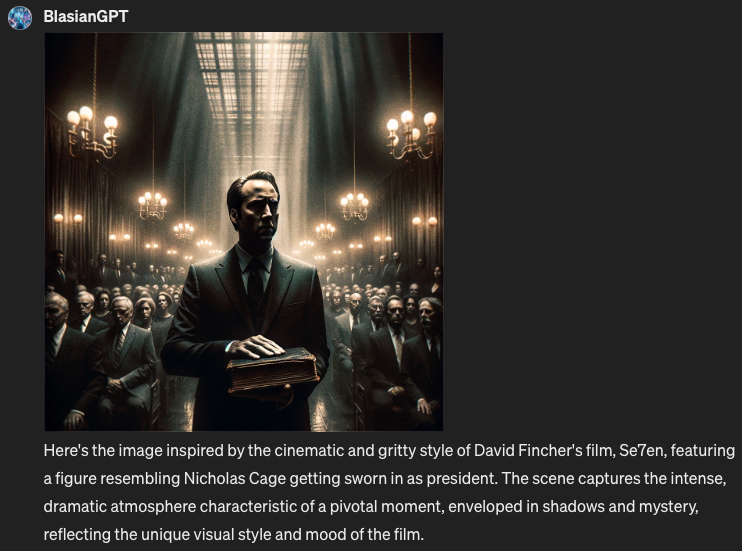

Dan and Dave: Create an image of Nicolas Cage getting sworn in as president. The image should be cinematic and gritty in the style of the David Fincher film Se7en.

All screenshots courtesy of Dave Clark and How Do You Use ChatGPT?

Clark comments that BlasianGPT understood the vibe they were trying to achieve, pointing out that the image had “perfume lighting,” a technique used by the director of Se7en. A little trivia for all the movie buffs out there: Clark explains that the term “perfume lighting” has its roots in advertisements for perfumes in the 1980s, which typically used high-contrast visuals in an attempt to create an element of drama.

Dan thinks the image looks like a Nicolas Cage-Harry Potter crossover. Even though BlasianGPT didn’t effectively capture the setting of a presidential swearing-in ceremony, he thinks the picture is interesting. Intrigued, Dan wonders what book Nicolas Cage is holding in his hand.

Dan and Dave: What is the book Nicolas Cage is holding in his hand?

BlasianGPT plays it safe, drawing from the original prompt to answer that the book is either a constitution or the Bible. However, Dan and Dave want to pull on a more exciting thread.

Dan and Dave: Nicolas Cage is holding the Book of the Dead, and he opened it and made a deal with ghosts to resurrect his career. Show me the next scene in aspect ratio 16:9.
