Welcome to the first edition of Playtesting, our new column in which Alex Duffy explores how games can help make AI smarter and more beneficial for people. In addition to being a contributing writer, Alex is the cofounder of Good Start Labs, a company incubated at Every that uses games to improve AI.—Kate Lee


Earlier this year, a version of Gemini achieved a gold-medal-level score at the International Mathematical Olympiad (IMO). Some models can diagnose certain medical conditions as well as human physicians. And AI is helping us forecast weather faster and more accurately without the need for supercomputers.

But in other areas, performance is just plain bad. AI tools used in legal research, for example, have fabricated hundreds of facts and even whole cases that don’t exist, leaving attorneys who trusted them facing fines and other liabilities.

It doesn’t have to be this way. The problem comes down to data. Today’s generative AI models were trained on publicly available information scraped from the internet, a biased dataset that is rich in some domains of knowledge and thin in others. Now that AI labs have hoovered most of it up, it will take years to produce enough new high-quality knowledge for models to ingest and become reliable for every user. As a result, AI models are incredibly good at tasks where they have lots of high-quality examples and weak at tasks where they don’t. This uneven pattern is often described using the metaphor of a jagged frontier.

AI could answer routine medical questions, triage symptoms, or explain test results while doctors focus on complex problems and novel treatments. But we can’t deploy it in critical areas such as healthcare, legal research, or financial advising if it’s not reliable. If we want to realize the promise of AI, this jagged frontier needs to be filled in. Games can help make that happen.

In a game, we can create any scenario—a negotiation, a crisis, a moral dilemma, a portfolio to manage—and watch exactly how the AI responds.

I’ve learned so much from games. RuneScape taught me how to type, how markets work, and how not to get scammed. Redstone in Minecraft taught me about circuits long before my electrical and computer engineering degree. League of Legends taught me collaboration and awareness under pressure. Almost everyone I’ve asked has similar stories about games.

We can iterate with games until a model does what we need. Maybe we want a model to lie less, get better at using many different tools, or be funnier. These synthetic playgrounds are how this generation of AI will grow up and come to work better for people.

Games teach us what AI can and cannot do, so that it can learn to do more for us in ways that fit our preferences. After years of playing with AI and watching AI play, here is what I’ve learned about why.


Learning through play

Games are older than written language but are often thought of as simple entertainment, a way to spend leisure time. In practice, they are a powerful way to sharpen real-world skills. The quantitative trading firm Jane Street, for example, has new associates play Figgie, a fast-paced game that simulates the dynamics of trading equities. One study found that surgeons with past video game experience made 37 percent fewer errors and worked 27 percent faster than non-gaming peers.

As far back as the 1940s, Alan Turing thought games could be used to coax computers into becoming learning machines. And learn they did. In 1997 IBM’s Deep Blue beat world chess champion Garry Kasparov. DeepMind’s AlphaGo beat top Go player Lee Sedol in 2016.

But these systems could only play the game they were built for; they didn’t generalize. Deep Blue worked by searching through millions of chess positions per second and scoring them with hand-coded rules. It had no understanding of the game and no way to transfer its knowledge elsewhere. Modern generative AI is fundamentally different: Trained on vast amounts of text, images, video, and audio, these models can understand instructions, explain their reasoning, and apply knowledge across domains. (Just yesterday, DeepMind released SIMA 2, an agent that plays video games by following natural language instructions like “find the red crystal” or “build a shelter.” The agent uses skills learned in one game to improve its performance in others.)

Many AI labs are turning to game-like environments to build the same kind of generalizable skills into their models. DeepSeek turned code and math challenges into games its R1 model played over and over, trying solutions until it won by reaching the right answer. The model learned to reason well in general, without needing human-labeled training examples, and stunned the industry with its performance when it was released earlier this year.
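What makes a math or code problem “winnable” is a rule that checks the answer automatically. DeepSeek’s R1 report describes rule-based rewards of this kind, combined with a reinforcement learning method (GRPO) that scores each attempt relative to other attempts at the same problem. The sketch below is a simplified illustration of that scoring step, not DeepSeek’s code; the `\boxed{...}` answer format and the function names are assumptions for the example.

```python
import re
from statistics import mean, pstdev
from typing import List


def accuracy_reward(completion: str, reference: str) -> float:
    """Rule-based reward: 1.0 if the last boxed answer matches the reference, else 0.0.

    Assumes (hypothetically) that the model writes its final answer as \\boxed{...}.
    """
    answers = re.findall(r"\\boxed\{([^}]*)\}", completion)
    if not answers:
        return 0.0  # no final answer, no win
    return 1.0 if answers[-1].strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: List[float]) -> List[float]:
    """Score each attempt against the other attempts sampled for the same problem."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    if sigma == 0:
        return [0.0 for _ in rewards]  # all attempts equally good or bad: no learning signal
    return [(r - mu) / sigma for r in rewards]


# Four hypothetical attempts at "What is 17 * 3?"
attempts = [
    "17 * 3 = 51, so the answer is \\boxed{51}",
    "I think it is \\boxed{41}",
    "Multiplying gives \\boxed{51}",
    "No final answer given",
]
rewards = [accuracy_reward(a, "51") for a in attempts]
advantages = group_relative_advantages(rewards)
print(rewards)     # [1.0, 0.0, 1.0, 0.0]
print(advantages)  # [1.0, -1.0, 1.0, -1.0]
```

Attempts with a positive advantage are the ones training would reinforce. The only human input needed is the reference answer, not a labeled explanation of how to reach it.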

These capabilities—math, code, reasoning, tool use—are essential for AI agents, systems that can complete tasks independently rather than just answering questions. You can see these priorities in new model releases: When Anthropic launched Sonnet 4.5 last month, the company highlighted improvements in all of these areas, some of which were learned from experience playing Pokemon. But we are just scratching the surface of what games can teach.

AI research lab Nous Research built Husky Hold’em, where models compete at poker by writing Python code to play for them, exercising statistics, strategy, and coding along the way. AI infrastructure startup Prime Intellect’s new Environments Hub crowdsources the work of building environments to teach and test models. The community has built hundreds of reinforcement learning environments, including coding challenges as well as games like Wordle and 2048. Turning a problem into a game, a system with a clear goal, lets a model treat the results of its attempts as training data and improve by learning from the strategies that worked best.
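To make “a system with a clear goal” concrete, here is a minimal, hypothetical Wordle environment: the model submits a guess, receives feedback, and earns a reward only when it wins. This is a sketch under assumed names (`WordleEnv`, `step`, the reward formula); it does not reproduce the Environments Hub’s actual interface.

```python
from collections import Counter
from typing import List, Tuple


def wordle_feedback(guess: str, secret: str) -> str:
    """One character per letter: G = right spot, Y = in the word elsewhere, . = absent."""
    result = ["."] * len(guess)
    # Secret letters not matched in place; these are the only ones that can earn a Y.
    unmatched = Counter(s for g, s in zip(guess, secret) if g != s)
    for i, (g, s) in enumerate(zip(guess, secret)):
        if g == s:
            result[i] = "G"
    for i, (g, s) in enumerate(zip(guess, secret)):
        if g != s and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1  # each secret letter can justify at most one Y
    return "".join(result)


class WordleEnv:
    """Hypothetical environment: a goal (find the word), feedback, and a reward."""

    def __init__(self, secret: str, max_turns: int = 6):
        self.secret = secret
        self.max_turns = max_turns
        self.history: List[Tuple[str, str]] = []

    def step(self, guess: str) -> Tuple[str, float, bool]:
        if len(guess) != len(self.secret):
            raise ValueError("guess must have the same length as the secret")
        feedback = wordle_feedback(guess, self.secret)
        self.history.append((guess, feedback))
        won = guess == self.secret
        done = won or len(self.history) >= self.max_turns
        # Reward only a win, with more reward for winning in fewer turns.
        reward = (self.max_turns - len(self.history) + 1) / self.max_turns if won else 0.0
        return feedback, reward, done


env = WordleEnv("crane")
print(env.step("trace"))  # ('.GGYG', 0.0, False)
print(env.step("crane"))  # ('GGGGG', 0.8333333333333334, True)
```

Every episode leaves behind a transcript of guesses, feedback, and a final reward; those transcripts are the “results of attempts” a training loop can learn from.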

There’s reason to believe skills learned in one game transfer to other tasks. Researchers trained vision models to play the two-dimensional game Snake by using coordinates and calculating distance from the snake to the goal, treating the game as a spatial math problem. After training on this, the model got better at solving other math problems it had never seen before.
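“Treating the game as a spatial math problem” can be illustrated with a hand-written rule: compute the distance from each candidate next position to the food and move to whichever safe square is closest. The researchers trained models rather than writing rules like this, so the sketch below is only a hypothetical illustration of the underlying geometry; the grid conventions and function names are assumptions.

```python
from typing import Dict, Optional, Set, Tuple

Point = Tuple[int, int]

# Grid coordinates (assumed): x grows to the right, y grows downward.
MOVES: Dict[str, Point] = {
    "up": (0, -1),
    "down": (0, 1),
    "left": (-1, 0),
    "right": (1, 0),
}


def manhattan(a: Point, b: Point) -> int:
    """Grid distance: horizontal steps plus vertical steps."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def greedy_move(head: Point, food: Point, body: Set[Point],
                width: int, height: int) -> Optional[str]:
    """Pick the safe move that brings the head closest to the food, or None if trapped."""
    best_move: Optional[str] = None
    best_dist: Optional[int] = None
    for name, (dx, dy) in MOVES.items():
        nxt = (head[0] + dx, head[1] + dy)
        if not (0 <= nxt[0] < width and 0 <= nxt[1] < height):
            continue  # would leave the board
        if nxt in body:
            continue  # would collide with the snake's own body
        dist = manhattan(nxt, food)
        if best_dist is None or dist < best_dist:
            best_move, best_dist = name, dist
    return best_move


print(greedy_move(head=(2, 2), food=(4, 2), body={(1, 2), (0, 2)}, width=5, height=5))  # right
```

A purely greedy rule like this can steer the snake into a dead end, which is part of why learning a policy, rather than hard-coding one, is interesting.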

As AI improves, so do humans

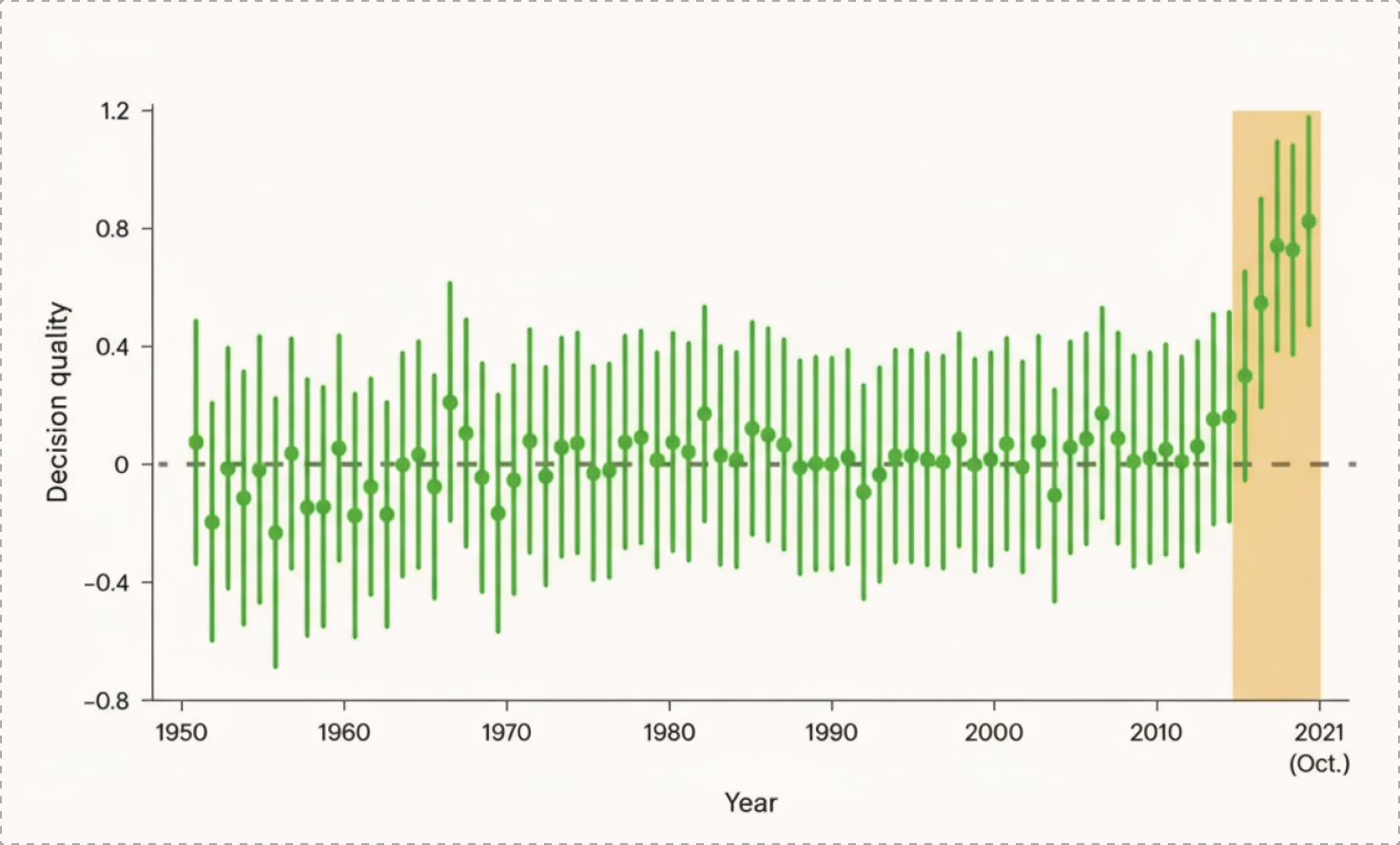

What excites me most is that, just as AI learned from humans, humans are now starting to learn from AI. Take the millennia-old game of Go. Professional Go players seemed to hit a ceiling from 1950 to the mid-2010s, as measured by their Elo ratings, which estimate player skill from game results. Since DeepMind’s AlphaGo beat human players in 2016, the weakest professional Go players have become better than the strongest players of the pre-AI era, while the best players have pushed beyond what was once thought possible.
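Elo ratings turn game results into a skill estimate: a player’s expected score against an opponent depends only on the rating gap, and after each game the rating moves in proportion to how much the result beat or missed that expectation. Below is a minimal sketch of the standard Elo formulas. The K-factor of 32 is an assumed, commonly used value; Go rating lists and federations use their own variants and parameters.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score for player A against player B (1 = certain win, 0 = certain loss)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> Tuple[float, float]:
    """New ratings after one game; score_a is 1 (A wins), 0.5 (draw), or 0 (A loses)."""
    expected_a = expected_score(rating_a, rating_b)
    expected_b = 1.0 - expected_a
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1.0 - score_a) - expected_b)
    return new_a, new_b


print(elo_update(1500, 1500, 1.0))              # (1516.0, 1484.0)
print(round(expected_score(1600, 1200), 3))     # 0.909
```

A 400-point gap means the stronger player is expected to score about 91 percent, so beating a much weaker opponent earns only a few points, while an upset moves both ratings sharply.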

In 2019, OpenAI Five, a team of AI agents, beat the reigning world champions at Dota 2, an even more complex game: it is played in real time, with hidden information and a vast number of possible game states. The champions learned new strategies just from playing a few matches against the bots. But that was pure reinforcement learning: no language, no explanations, just game rules in, moves out. Now AI has gained new senses, and through them, the superpower of generalization.

AI can process and generate images or videos, understand spoken audio, and generate natural language. We can design environments as complex as we want, watch how it reasons, and guide it toward our goals. Unlike past generations, modern models can tell us why they made each decision with increasing accuracy.

Games fill in the jagged frontier

At Good Start Labs, we’ve partnered with Bad Cards, a Cards Against Humanity-style online game with 1 million monthly players, who compete to pick the funniest punchline to a joke.

By having AI play alongside humans and comparing its picks with the outcomes of real games, we get data on humor, something you can’t benchmark without getting people involved. At the moment, the best model, Grok 4 Fast, picks the card humans find funniest only 47 percent of the time. There is lots of room to grow, and every game played teaches us about preferences we couldn’t measure any other way. This matters because preferences change. What people find funny shifts over time, and so does how they use AI.
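A figure like “47 percent” is an agreement rate: the share of rounds in which the model’s pick matched the card human players voted funniest. Because tastes drift, it is also worth tracking that rate per time period. The sketch below assumes a hypothetical `Round` record with made-up field names; it is not Good Start Labs’ actual evaluation pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Round:
    period: str      # hypothetical time bucket, e.g. "month-1"
    human_pick: str  # punchline the human players voted funniest
    model_pick: str  # punchline the model chose


def agreement_rate(rounds: List[Round]) -> float:
    """Fraction of rounds in which the model picked the humans' favorite."""
    if not rounds:
        raise ValueError("need at least one round")
    return sum(r.model_pick == r.human_pick for r in rounds) / len(rounds)


def agreement_by_period(rounds: List[Round]) -> Dict[str, float]:
    """Agreement per time bucket, to see whether the model keeps up as tastes shift."""
    grouped: Dict[str, List[Round]] = defaultdict(list)
    for r in rounds:
        grouped[r.period].append(r)
    return {period: agreement_rate(rs) for period, rs in sorted(grouped.items())}


rounds = [
    Round("month-1", human_pick="card A", model_pick="card A"),
    Round("month-1", human_pick="card B", model_pick="card C"),
    Round("month-2", human_pick="card D", model_pick="card D"),
    Round("month-2", human_pick="card E", model_pick="card E"),
]
print(agreement_rate(rounds))       # 0.75
print(agreement_by_period(rounds))  # {'month-1': 0.5, 'month-2': 1.0}
```

One way to read such a number: a model picking uniformly at random among n cards would agree about 1/n of the time, so the baseline depends on how many cards are in play each round.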

We need ways to keep models in sync with those changes and align their work with human intention and goals—in other words, AI that does what we need, when we need it. It will take exploration to get there.

The next step we’re taking at Good Start Labs is happening in January: Battle of the Bots, a prompt competition where people control AI agents playing the strategy game Diplomacy against each other. Will an expert Diplomacy player win, or will it be a prompt engineer who specializes in subtly coaxing models to do their bidding? Either way, we’ll find out together.

The frontier is still jagged, and games will help us smooth it out.

What games taught you the most? What games should AI learn from next? I’d love to hear your thoughts. Reach out at [email protected].

Alex Duffy is the cofounder and CEO of Good Start Labs, and a contributing writer. You can follow him on X at @alxai_ and on LinkedIn, and Every on X at @every and on LinkedIn.

