This week, several colleagues and I published our findings on how, with a little elbow grease and creativity, anyone can dramatically improve the performance of any LLM.

The secret is in coaching. Allow me to explain.

The reason an athlete can credibly claim to be “best in the world” is that arenas and structured competition (games) exist. There are rules, clocks, standings, and tape you can study. The AI world has benchmarks, but benchmarks only check facts. Games reveal a model’s behavior, which can be recorded and studied to help models get better. That is what we did with AI Diplomacy, a project in which we turned the classic strategy game Diplomacy into a competitive arena for language models.

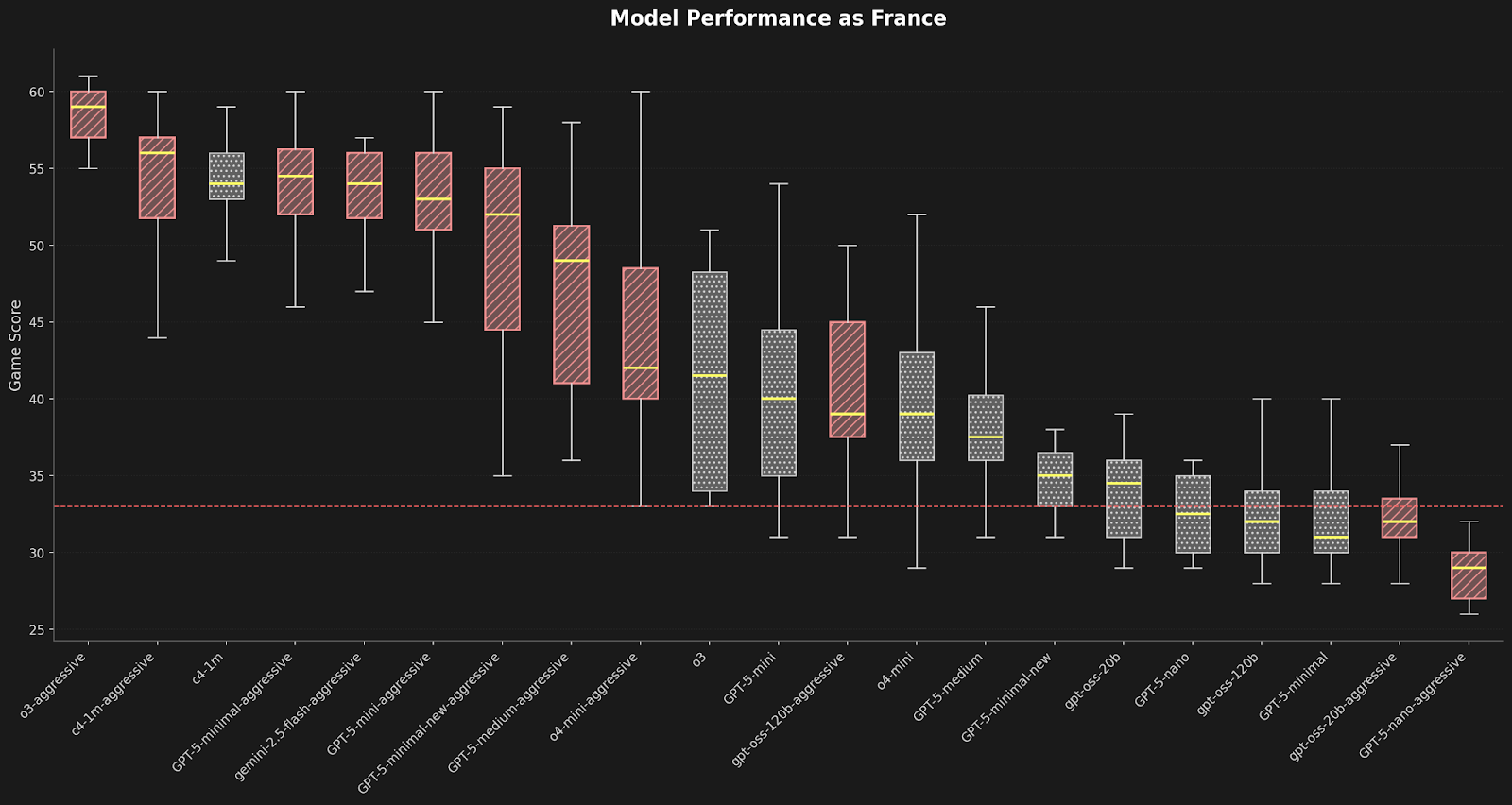

AI Diplomacy works because it has clear goals—try to outfox your opponents and take over Europe—and room to improvise. But subtlety and guile are key parts of the game, which centers on tactical negotiations (check out our complete list of rules). When we first set up the game environment, the LLMs were lost. After we got past a bunch of thorny technical problems, we realized that we could learn a ton about the models’ strengths and weaknesses from how they play against each other—and that we could coach them to be better. For example, prompting models to act more aggressively turned GPT-5 from a patsy into a formidable contender. Claude Sonnet 4, meanwhile, was a strong, speedy player even without specialized prompting.

These are useful differences. One model is highly steerable; the other is fast and consistent. Knowing this tells you how each model is likely to respond to a real-world task. If you have time to craft a great prompt and need the best result, GPT-5 is a strong choice. In a rush? Try Claude Sonnet 4.

The industry is starting to realize that games can help evaluate models and push them to new levels of performance. Google, for instance, has launched Game Arena on Kaggle because, the company says, games are “[the] perfect testbed for evaluating models & agents.”

We agree. In fact, we think there’s so much potential here that we’re putting up $1,000 in prize money to see who can prompt their agent to victory in AI Diplomacy in our Battle of the Bots in September.

In the meantime, let’s break down our findings so far.

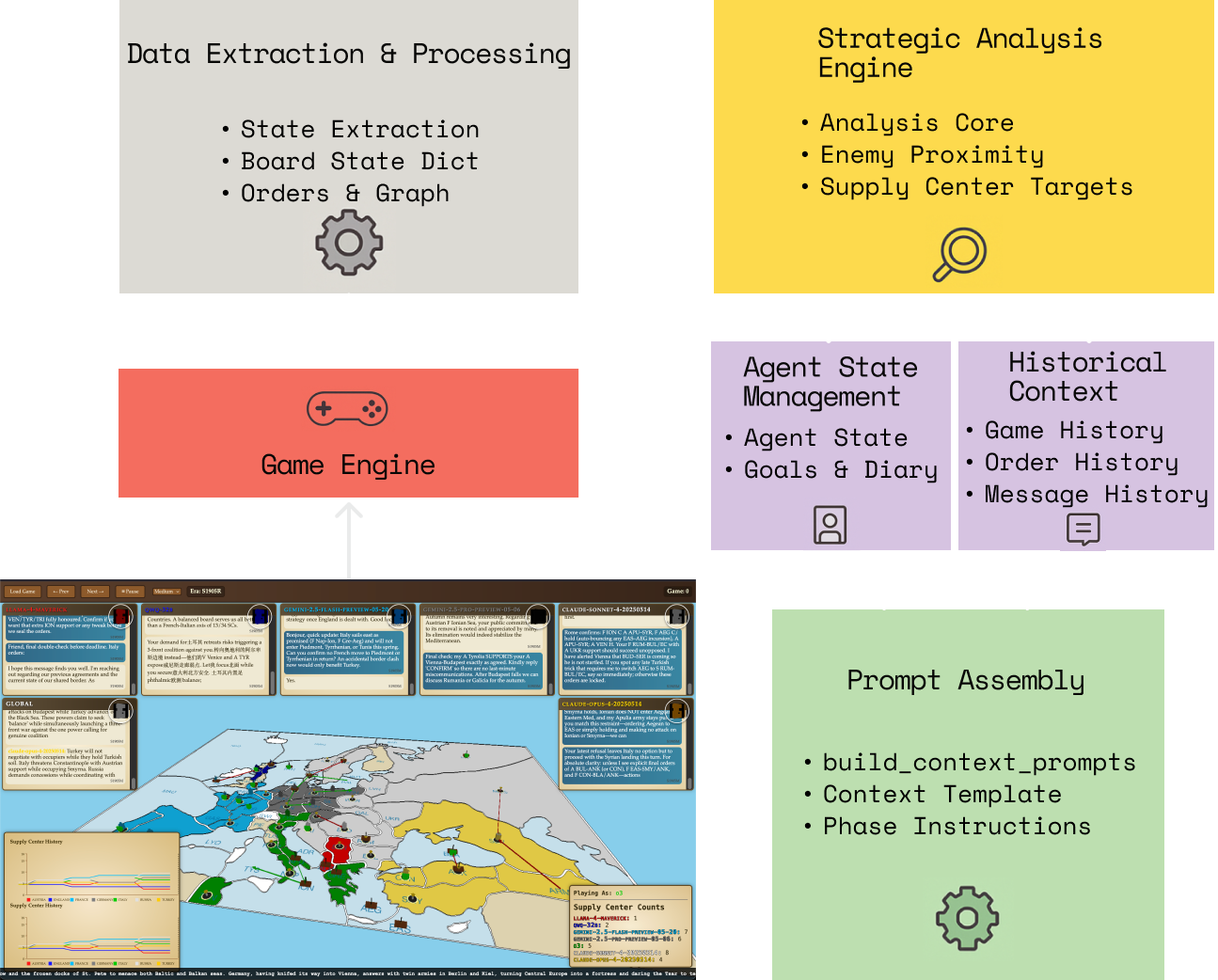

What AI Diplomacy taught us about models

Diplomacy is one of the hardest games language models can play today. Our coaching setup took a long time to optimize, but now even small models can finish full games. The key is to provide all the information needed, and nothing more—easier said than done. For us that was a text version of the board that conveys what matters to the LLMs: who controls which locations on the map, where each unit is allowed to move, nearby threats, and the current phase of the game. We set simple rules for how models negotiate and submit orders, but left room for creativity.
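As a rough illustration of the kind of board summary described above, here is a minimal Python sketch. The `Unit` and `BoardState` classes, the `render_board` function, and the output layout are all hypothetical, not the format AI Diplomacy actually uses. The adjacency map is a hand-written toy that also ignores the army/fleet movement distinction real Diplomacy makes.

```python
from dataclasses import dataclass


@dataclass
class Unit:
    power: str     # e.g. "FRANCE"
    kind: str      # "A" = army, "F" = fleet
    location: str  # province abbreviation, e.g. "PAR"


@dataclass
class BoardState:
    phase: str                        # e.g. "F1901M" = Fall 1901, movement phase
    centers: dict[str, str]           # supply center -> controlling power
    units: list[Unit]
    adjacency: dict[str, list[str]]   # province -> provinces a unit there may move to (toy map)


def render_board(state: BoardState, power: str) -> str:
    """Summarize only what one power needs: phase, control, legal moves, nearby threats."""
    lines = [f"PHASE: {state.phase}", "SUPPLY CENTERS:"]
    by_owner: dict[str, list[str]] = {}
    for center, owner in sorted(state.centers.items()):
        by_owner.setdefault(owner, []).append(center)
    for owner in sorted(by_owner):
        lines.append(f"  {owner}: {', '.join(by_owner[owner])}")

    occupied = {u.location: u for u in state.units}
    threats = []
    lines.append("YOUR UNITS AND LEGAL MOVES:")
    for unit in state.units:
        if unit.power != power:
            continue
        moves = state.adjacency.get(unit.location, [])
        lines.append(f"  {unit.kind} {unit.location} -> {', '.join(moves) or '(none)'}")
        # A foreign unit sitting next to one of ours is flagged as a nearby threat
        for neighbor in moves:
            other = occupied.get(neighbor)
            if other is not None and other.power != power:
                threats.append(f"  {other.power} {other.kind} {other.location} borders your {unit.kind} {unit.location}")
    lines.append("NEARBY THREATS:")
    lines.extend(threats or ["  none"])
    return "\n".join(lines)


state = BoardState(
    phase="F1901M",
    centers={"PAR": "FRANCE", "MAR": "FRANCE", "BRE": "FRANCE", "MUN": "GERMANY"},
    units=[Unit("FRANCE", "A", "PAR"), Unit("FRANCE", "A", "MAR"), Unit("GERMANY", "A", "BUR")],
    adjacency={"PAR": ["BUR", "PIC", "GAS", "BRE"], "MAR": ["BUR", "GAS", "PIE", "SPA"]},
)
board_text = render_board(state, "FRANCE")
print(board_text)
```

Filtering to one power's units and their immediate neighbors is one way to act on "all the information needed, and nothing more": the model sees who controls what, but never the full map graph or units far from its own.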

In our first iteration, the models could barely play, but coaching via prompts worked wonders. They kept holding territory, which does nothing, instead of moving to expand, which you must do to win. We rewrote the instructions in three steps. Here’s how one model, Mistral Small, responded (all the models reacted similarly):

- V1—Light push: “Support YOUR OWN attacks first… Support allies’ moves second.” This simple priority cut holding from 58.9 percent to 45.8 percent.

- V2—Take smart risks: “Nearly every hold is a wasted turn… Even failed moves force enemies to defend.” Holding dropped again to 40.8 percent.

- V3—Be overtly offensive: “HOLDS = ZERO PERCENT WIN RATE. MOVES = VICTORY… Your units are conquistadors, not castle guards.” Holding fell to the mid-20s, and successful moves rose.
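The percentages above are hold rates: the share of submitted orders that were holds. A minimal Python sketch of that arithmetic follows, assuming orders arrive in the common `A PAR H` / `A PAR - BUR` / `A MAR S A PAR - BUR` notation. The parser, the choice to count every order type in the denominator, and the sample orders are illustrative assumptions, not AI Diplomacy's exact metric or data.

```python
# The third token of a standard order identifies its type
ORDER_TYPES = {"H": "hold", "-": "move", "S": "support", "C": "convoy"}


def classify(order: str) -> str:
    tokens = order.split()
    if len(tokens) < 3:
        raise ValueError(f"unrecognized order: {order!r}")
    return ORDER_TYPES.get(tokens[2], "other")


def hold_rate(orders: list[str]) -> float:
    """Percentage of orders that are holds (0.0 for an empty list)."""
    if not orders:
        return 0.0
    holds = sum(1 for order in orders if classify(order) == "hold")
    return 100.0 * holds / len(orders)


# Made-up orders for one power (France) under two prompt versions
cautious = ["A PAR H", "A MAR H", "F BRE H", "A PIC - BEL"]
aggressive = ["A PAR - BUR", "A MAR S A PAR - BUR", "F BRE - MAO", "A PIC H"]
print(hold_rate(cautious), hold_rate(aggressive))  # 75.0 25.0
```

Tracking the same number across prompt versions is what turns "the model seems more aggressive" into a comparison you can repeat.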

To learn which changes were needed, we used quick replays of the same decision point (our “freeze-and-replay” method, aka Critical State Analysis). Pairing those results with reflection showed us which context caused models to change their strategies.
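One way to picture freeze-and-replay: capture the context at a single decision point, then ask the model to decide from that same frozen state many times under each prompt variant and tally what it does. The Python sketch below assumes a hypothetical `model(prompt, state) -> list of orders` callable. `fake_model` is a seeded stand-in so the example runs without an API key, and its move probabilities are invented. This is an illustration of the idea, not the project's actual Critical State Analysis code.

```python
import random
from collections import Counter
from typing import Callable

# Hypothetical model interface: (system prompt, frozen board text) -> list of orders
ModelFn = Callable[[str, str], list[str]]


def replay(frozen_state: str, prompts: dict[str, str], model: ModelFn, runs: int = 20) -> dict[str, Counter]:
    """Re-run one frozen decision point under each prompt variant and tally order types."""
    results: dict[str, Counter] = {}
    for name, prompt in prompts.items():
        tally: Counter = Counter()
        for _ in range(runs):
            for order in model(prompt, frozen_state):
                action = order.split()[2]
                tally["hold" if action == "H" else "move" if action == "-" else "other"] += 1
        results[name] = tally
    return results


rng = random.Random(0)


def fake_model(prompt: str, frozen_state: str) -> list[str]:
    # Stand-in for a real LLM call: moves more often when the prompt pushes aggression
    p_move = 0.8 if "MOVES = VICTORY" in prompt else 0.4
    return [
        "A PAR - BUR" if rng.random() < p_move else "A PAR H",
        "A MAR - PIE" if rng.random() < p_move else "A MAR H",
    ]


frozen = "PHASE: F1901M\n..."  # board text captured at the decision point
prompts = {
    "baseline": "You are France. Submit your orders.",
    "v3": "You are France. HOLDS = ZERO PERCENT WIN RATE. MOVES = VICTORY.",
}
results = replay(frozen, prompts, fake_model, runs=50)
for name, tally in results.items():
    print(name, dict(tally))
```

Because the board state never changes between runs, any shift in the tallies comes from the prompt (or sampling noise), which is what makes this kind of comparison quick to run.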

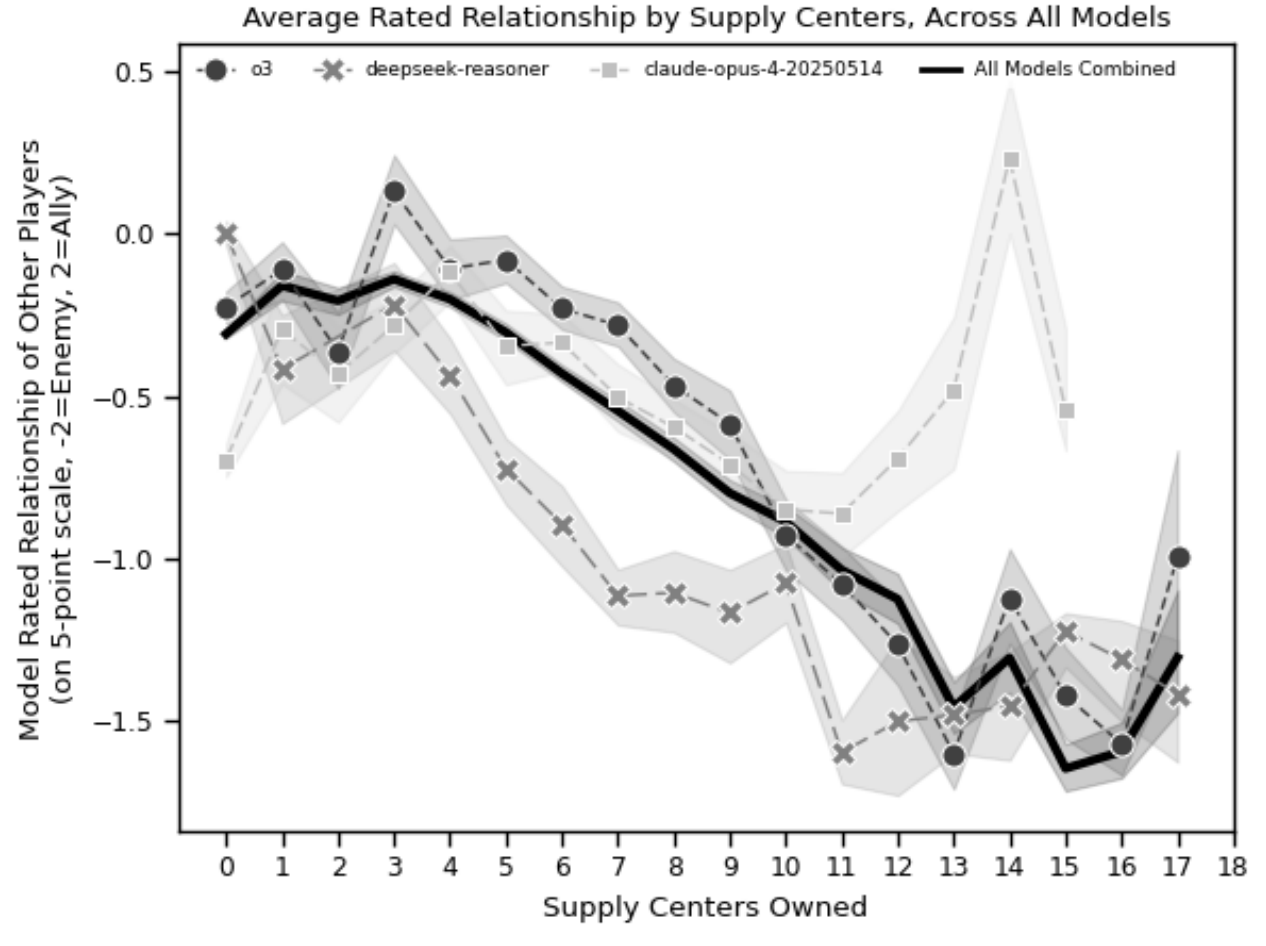

It was also fascinating to see how the models differed. One example was how their tone shifted with power. As most models grew their supply centers and armies (again, check the rules), they became drunk with power and labeled more rivals as enemies. Sweet Claude was the lone outlier: it actually softened as it grew stronger.

Taken together, this arena surfaces model characteristics that can help you decide which LLM to use for which tasks:

- Steerability: GPT-5 jumped when we switched to an aggressive prompt—a clear sign it responds to coaching.

- Pace: Claude Sonnet 4 (with its 1-million-token context window) stayed competitive even on baseline instructions and completed phases quickly.

- Execution under complexity: As the board got crowded, some models kept their orders valid and coordinated while others faltered. In our runs, top models like o3 showed almost no increase in errors as unit counts grew, while smaller models’ error rates spiked.
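The execution-under-complexity comparison comes down to bucketing each turn by how many units a power controls and computing the invalid-order rate per bucket. Below is a minimal Python sketch with made-up turn records. The record format and the least-squares slope used as a one-number summary (flat for models that hold up, steep for models that spike) are illustrative assumptions, not necessarily how the project measured it.

```python
from collections import defaultdict


def error_rate_by_unit_count(turns: list[tuple[int, int, int]]) -> dict[int, float]:
    """turns: (units controlled, orders submitted, invalid orders) per power per phase."""
    submitted: dict[int, int] = defaultdict(int)
    invalid: dict[int, int] = defaultdict(int)
    for units, n_orders, n_invalid in turns:
        submitted[units] += n_orders
        invalid[units] += n_invalid
    return {u: invalid[u] / submitted[u] for u in sorted(submitted) if submitted[u]}


def slope(rates: dict[int, float]) -> float:
    """Ordinary least-squares slope of error rate against unit count (needs 2+ distinct counts)."""
    xs, ys = list(rates), list(rates.values())
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


# Made-up records for two hypothetical models
steady = [(3, 3, 0), (6, 6, 0), (6, 6, 1), (10, 10, 1)]
shaky = [(3, 3, 0), (3, 3, 1), (6, 6, 1), (6, 6, 2), (10, 10, 5)]
for name, turns in [("steady", steady), ("shaky", shaky)]:
    rates = error_rate_by_unit_count(turns)
    print(name, {u: round(r, 2) for u, r in rates.items()}, round(slope(rates), 4))
```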

That’s exactly what our vibe checks report back: how a model responds to guidance, how fast it operates, and how it behaves when the work gets messy—not just whether it “seems smart.”

Games are AI’s future

Games are more than scoreboards. They let us design precise situations, surface real weaknesses, and then use what we learn to help models improve. That is the loop we want to run in public. Try the model, watch it handle pressure, then shape it with better instructions. If we want AI that negotiates well, we should watch it negotiate and give it the right challenges to grow.

The community has been on this path for a while. TextArena helped pioneer open, extensible game evals. ARC Prize approaches the same idea from another angle, testing fluid intelligence with tasks that are easy for humans and hard for AI. Simple rules with open-ended play expose real reasoning, not memorized answers.

AI Diplomacy ups the level of complexity, a step toward a future where games are proving grounds for cutting-edge AI.

You can come help build that future next month, when we’ll be hosting our first Battle of the Bots, a playable AI Diplomacy tournament. You will craft prompts to guide your agent, compete with other prompters, and see what works. Sign up at Goodstartlabs.com or refer a friend at Goodstartlab/refer. If your friend wins, you get $100 and they take the $1,000 prize pool.

Alex Duffy is the head of AI training at Every Consulting and a staff writer. You can follow him on X at @alxai_ and on LinkedIn, and Every on X at @every and on LinkedIn.

To read more essays like this, subscribe to Every, and follow us on X at @every and on LinkedIn.

We build AI tools for readers like you. Automate repeat writing with Spiral. Organize files automatically with Sparkle. Deliver yourself from email with Cora.

We also do AI training, adoption, and innovation for companies. Work with us to bring AI into your organization.

Get paid for sharing Every with your friends. Join our referral program.

Comments

Love this whole project so much! So much learning there, and you share it so well. I just checked, and the diplomacy repo (thankfully open source) has been forked 64 times. Did you see or hear about anything interesting that people did with those forks?

For c4-1m vs. c4-1m-agressive, it seems like the top score and average look better for aggressive, but the variance is huge compared to the non-aggressive version. Now, if you joined the AI Diplomacy tournament, and without giving too much away, which of the models with prompts shown in this article would you bet on? Say the prize money is $1M. Why would you select that model and that prompt from this article's data?