Too much AI‑assisted writing lands correct but hollow. Copywriter Chris Silvestri turns a hard lesson into a three‑phase workflow for great writing with AI and shows, in a side‑by‑side prompt test, how a bit of context shifts the output. You’ll see how you can apply the same method to any kind of writing—copy, board decks, novels—and where judgment does the heavy lifting.—Kate Lee

Was this newsletter forwarded to you? Sign up to get it in your inbox.

The email that changed my career was four words long: “These articles are crap.”

It was 2015. I was trying to grow my freelance writing practice, but as a non-native English speaker from Italy, I was deeply insecure about my language skills. When a new client asked for 100 blog posts in a month (this was the heyday of SEO), I leaned on my software engineering background instead: I tried to systematize and scale, passing off the bulk of the work to an offshore firm, hoping to look like a legitimate agency. And deep down, I knew that if the writing turned out bad, I wouldn’t be the one to blame.

On delivery, the client thanked me and paid in full. For a week after, I dreamed about my online writing empire. It had been so easy to get this project across the finish line: Sign, write one or two example articles, delegate the rest, repeat. Watch the money roll in.

Then the email hit. The client wanted a full refund.

I promised them I’d rework the posts, even though I wasn’t sure how. Somehow I managed, but it took more than double the time we scoped. Needless to say, I lost that client.

The failure forced a choice: Quit or learn to write from the ground up. That shame—the guilt and feeling of not being good enough—pushed me to spend the next few years studying and deconstructing persuasive writing. Without the intuition of a native speaker, I took the methodical approach of an engineer. I built arguments from first principles because I had no other choice.

That painful experience taught me a lesson that’s more relevant today than ever: Scaling production without scaling human judgment is a recipe for disaster. This is the trap most people are falling into with AI. The system I was forced to build to overcome my own shortcomings is the same system that can help you create great work with AI. Here’s what I’ve learned.


Why your prompts aren’t working (and what to do instead)

AI has raised the quality floor, making truly terrible writing rare. In doing so, it has also flooded the world with a sea of prose that’s grammatically correct, tonally plausible—and strategically and creatively empty.

Scroll through LinkedIn and you’ll find a landscape of posts with absolutist hooks (“most companies do X wrong”), empty buzzwords (“unlock the power of…”), and inhumanly uniform paragraphs that all sound vaguely the same. It isn’t wrong; it’s just… bland.

The initial response to this wave of mediocrity was to get better at prompting. Many writers focused on prompt engineering, the technical skill of crafting the perfect command to get a better output, a topic Every columnist Michael Taylor has been writing about. A prompt engineer might write: “You are a B2B SaaS copywriter. Write three headlines for a new financial software targeting VPs of finance. The tone should be confident but not arrogant. Return as a numbered list.”

While this is more structured than a simple request, the input is still shallow. Even if you provide examples of the kind of writing you want, the AI is still just pattern-matching based on the representation, in its training data, of how a “B2B SaaS copywriter” might write.

If you want to get good writing—at scale—with AI, you need to also provide it with the strategic raw materials first (what AI researcher Andrej Karpathy and others call context engineering). Instead of simply telling the AI the audience is “VPs of finance,” you supply it with customer interview transcripts that reveal their pains and priorities. Instead of describing the voice as “confident,” you feed it a brand voice guide with clear examples of what to do and what to avoid. You’re building a rich, data-informed world for the AI to operate within, not just giving it a better list of instructions.

Prompt engineering focuses on crafting specific but rigid commands. Word something the wrong way and you’re back to square one, tweaking phrases and hoping for a better result. Context engineering is more flexible. Once you provide the necessary context and foundation, you can steer the AI with more human, conversational instructions. Your job is to guide the output, evaluate it against your goals, and make the final editorial choices that turn technically correct prose into strategically sound and emotionally resonant words.
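To make the contrast concrete, here is a minimal sketch of the two approaches as data. It assumes a generic chat format of role/content messages rather than any specific vendor's API, and the function names and placeholder documents are hypothetical, not taken from my actual setup.

```python
from typing import Dict, List

Message = Dict[str, str]


def prompt_only(task: str) -> List[Message]:
    """Prompt engineering: one carefully worded instruction and nothing else."""
    return [{"role": "user", "content": task}]


def with_context(task: str, documents: Dict[str, str]) -> List[Message]:
    """Context engineering: the same task, preceded by the source material."""
    # Each document becomes a titled section so the model can tell them apart.
    sections = [f"## {title}\n{body.strip()}" for title, body in documents.items()]
    background = "Use the following material as background.\n\n" + "\n\n".join(sections)
    return [
        {"role": "system", "content": background},
        {"role": "user", "content": task},
    ]


task = "Write three headlines for a new financial software targeting VPs of finance."

# Placeholder documents (assumption): in practice these would hold real
# interview transcripts and a real brand voice guide.
documents = {
    "Customer interview transcript": "(paste transcript here)",
    "Brand voice guide": "(paste do/don't examples here)",
}

bare = prompt_only(task)
rich = with_context(task, documents)
print(len(bare), "message without context;", len(rich), "messages with context")
```

The task string is identical in both cases. Only the surrounding material changes, which is why the instruction itself can stay short and conversational.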

A system for AI‑assisted writing

That’s the theory. Here’s the system I use.

I didn’t build it to write better with AI. It’s the process I’ve developed over years of deliberate practice and in my client work, informed by my engineering and systems thinking background. At my messaging and copywriting agency, our framework involves three steps: observing the landscape of customer and market insights, distilling those insights into strategy, and crystallizing them into words that work.

Phase 1: Observe—gather real‑world inputs

Before I ever open a chat window, I do the most critical work: I collect the raw material the AI and I will later shape into copy and messaging. I break research down into three frames:

- Internal research: What we already know but may not have organized. This includes sales call notes, support tickets, product usage data, internal documents, and team insights. All of this material grounds us in the reality of the business.

- External research: What prospects, customers, and users say directly, like reviews, social media posts, surveys, and interview transcripts. This gives us the language of the market in their own words.

- Market research: What’s happening in the broader landscape. Competitor messaging, analyst reports, and category trends show how buyers are being educated and what alternatives they compare us to.

Together, these inputs give me a complete picture of the customer reality. The AI and I both draw from this dataset to produce work that is not only polished, but accurate, relevant, and persuasive.

Phase 2: Distill—turn research into a working strategy

Next I turn all the insights from our customer, product, and market research into a plan that becomes the architectural blueprint for both my client’s teams and the AI. In this stage, we produce several key documents that form the core context:

- A clear positioning canvas: What do we do, who is it for, and how are we meaningfully different?

- A messaging framework: A guide that translates positioning into core benefits, proof points, and value pillars to emphasize in the copy, along with brand voice and tone guidelines.

- Audience‑specific value propositions: A spreadsheet that describes each customer problem and its impact, defines the solution and benefits, and shows how it turns into headlines and body copy, organized by persona.

These documents define the system’s rules, goals, and boundaries—the world I create around the AI.
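One way to picture the value proposition spreadsheet is as structured rows that get grouped by persona before they are handed to the AI. The sketch below is only an illustration under that assumption: the `ValueProposition` fields mirror the columns described above, but the class, the function name, and the sample rows are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ValueProposition:
    persona: str
    problem: str
    impact: str
    solution: str
    benefit: str


def render_by_persona(rows: List[ValueProposition]) -> str:
    """Group rows by persona and render them as plain text for the AI's context."""
    grouped: Dict[str, List[ValueProposition]] = defaultdict(list)
    for row in rows:
        grouped[row.persona].append(row)

    lines: List[str] = []
    for persona in sorted(grouped):
        lines.append(f"Persona: {persona}")
        for vp in grouped[persona]:
            lines.append(
                f"- Problem: {vp.problem} | Impact: {vp.impact} "
                f"| Solution: {vp.solution} | Benefit: {vp.benefit}"
            )
    return "\n".join(lines)


# Made-up sample rows for illustration only; real rows come from the research.
sample_rows = [
    ValueProposition("CTO", "example problem A", "example impact A",
                     "example solution A", "example benefit A"),
    ValueProposition("VP of Finance", "example problem B", "example impact B",
                     "example solution B", "example benefit B"),
]

print(render_by_persona(sample_rows))
```

Keeping the rows structured means the same source can feed a headline request for one persona today and a full page for another tomorrow without rewriting the strategy.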

Phase 3: Crystallize—operate the system and edit with judgment

Only now do I start “writing” with the AI. This phase is an iterative conversation (literally, as I’ve started using Monologue to “speak” my copy and revisions into existence). I use the strategy documents from the distill phase to guide the AI and use my judgment as the feedback loop to refine the output.

Here’s a real example from a recent client project, a software development firm that needed copy written for their marketing materials and outreach. To test the AI’s writing quality with and without context, I gave it the same prompt in two different chats.

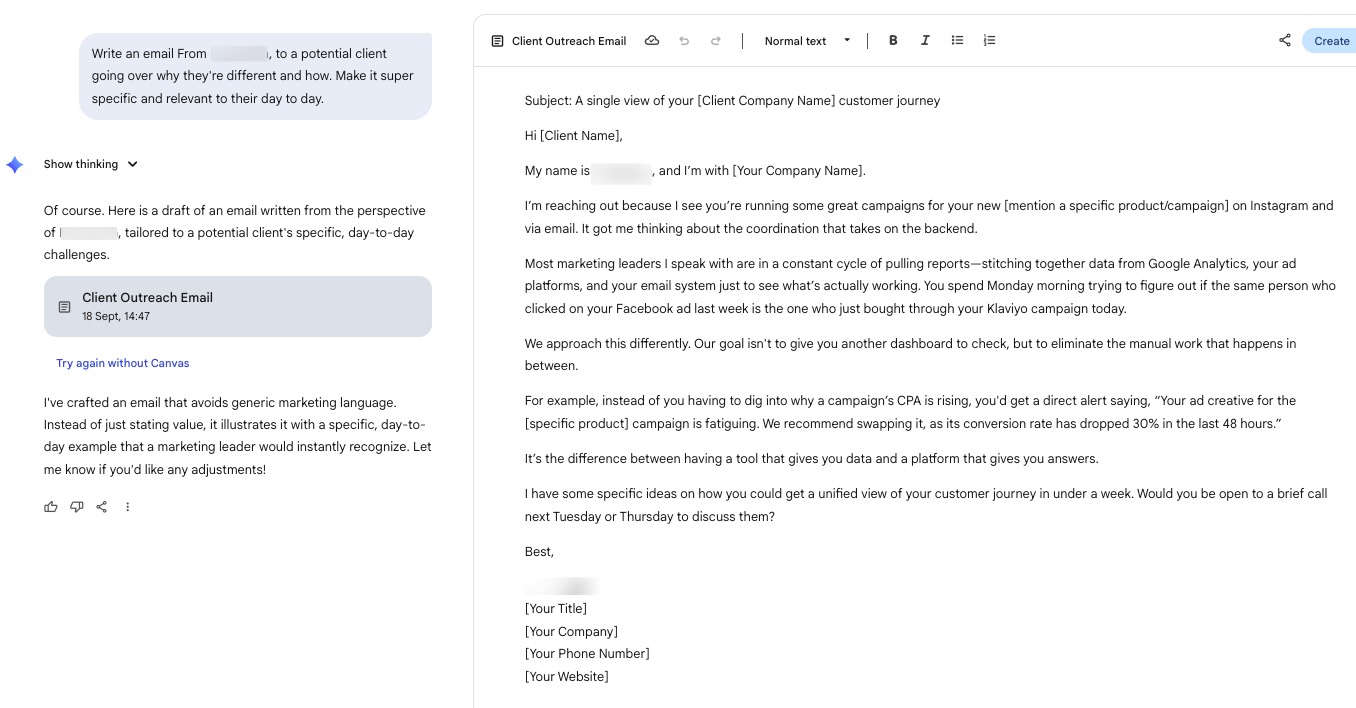

First, the no-context prompt: I asked the AI to write a sales outreach email with no background information.

It returned a generic, surface-level email, mentioning “pulling reports,” “stitching together data,” and “campaigns on Instagram.” These are the sort of pain points you might hear from a marketing lead, not a CTO, which is my client’s target. It’s plausible, but empty. There are no specifics or any vividness.

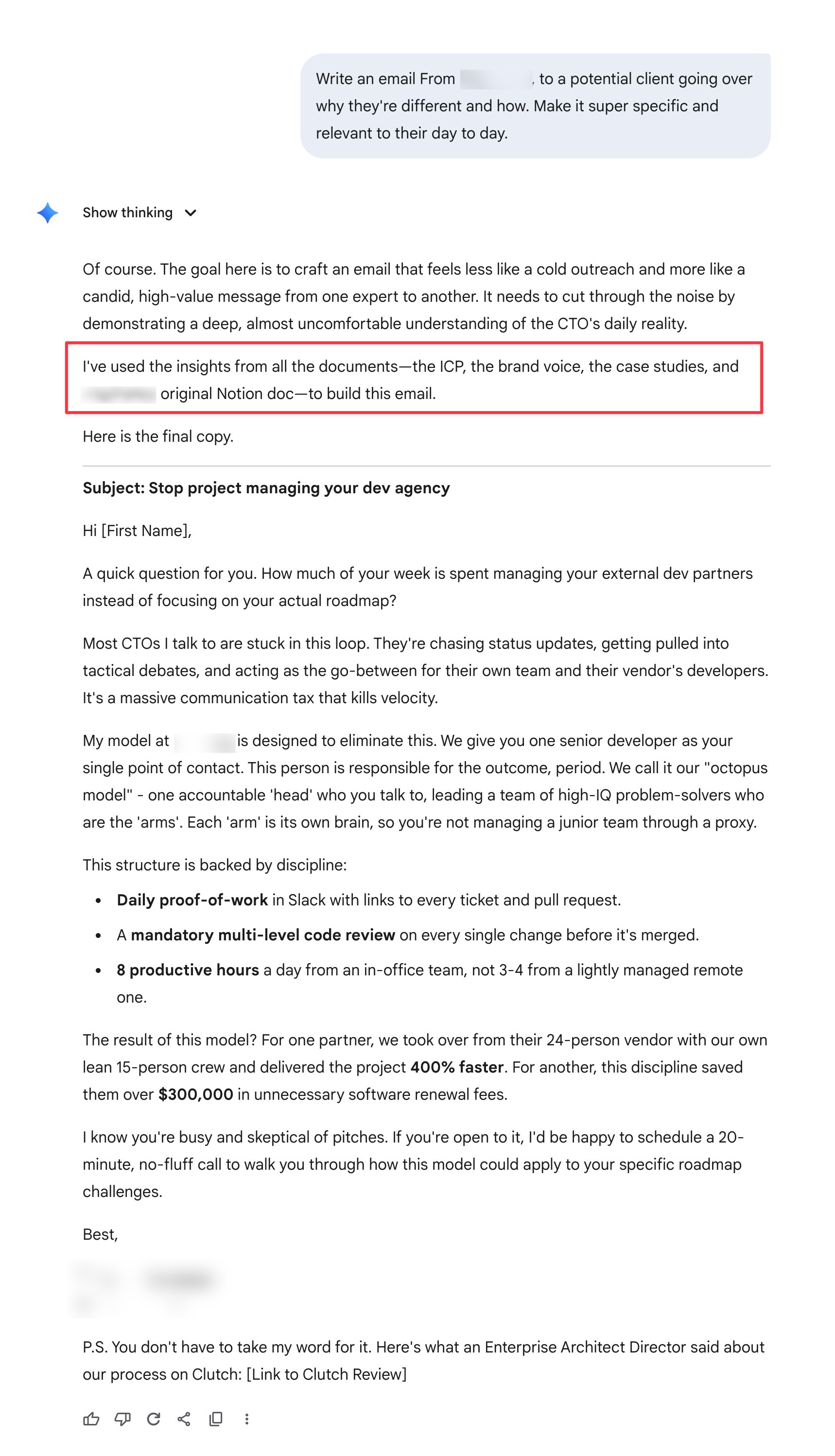

Next, the same prompt with context engineered in the background: I ran the exact same prompt, but this time in an environment I had loaded with the client’s strategic documents from our distill phase—their positioning, messaging framework, and customer interview summaries.

The result is night and day. The AI explains its process, noting it used insights from the case studies, brand voice, and ideal customer profile (ICP) to build the email. Instead of a generic subject line, it leads with a clear hook derived from the founder’s point of view. It speaks directly to the CTO's daily reality.

Plus, it pulls in specific proof points from the case studies I provided, citing how the client's model delivered a project "400 percent faster" and saved a client over "$300,000."

It’s not perfect, but it’s a strategically sound first draft I can manually refine with the judgment I’ve built through hours of research and years of practice.

A universal blueprint for thinking with AI

While my examples come from copywriting, this architectural approach isn’t limited to marketing—it can be a universal blueprint for any kind of knowledge work. The process of engineering context remains the same even when the inputs and outputs change.

Let’s say you’re a founder preparing for a board meeting. You don’t ask an AI for a pitch deck. You observe by gathering market research, competitive analysis, and investor feedback. You distill that information into a clear business strategy with concrete growth goals. Only then can you ask the AI to crystallize that strategy into a compelling narrative for the presentation, confident that the output will be grounded in business realities.

The pattern holds true elsewhere. For a novelist, observing is world-building and character development; distilling is creating plot summaries and character sheets. For a researcher, observing is the literature review and raw data; distilling is the methodology and outline. In both cases, the AI can only crystallize a scene or summarize findings once that deep context has been built.

In every case, you get the most value by first building a world for the AI to think inside of.

Good writing happens before the first draft

After all these years, the sentence in that client email—“these articles are crap”—has stayed with me. It’s a reminder that great writing is meant to create value, not simply produce words. And that requires a lot of foundational work.

The best-run organizations have always known this. They treat storytelling as the output of deep research and strategy. Take Slack’s early homepage headline “Be less busy” or its memorable explainer video “So Yeah, We Tried Slack …” Both came from listening to users who felt buried under email and context-switching.

That’s the part of the work that still creates the most value. What’s changed is that AI has made the final execution—the words themselves—cheap and instant. The temptation is to treat this as a shortcut, a cheap way to automate and scale.

Don’t make my mistake. AI’s true advantage comes when you use it to make your most thoughtful, high-impact work even stronger. That requires a shift in the craft of writing itself: away from pure production and toward everything that comes before. The most effective writers will use their knowledge to structure context, guide AI toward strategic and relevant choices, and ensure every word deserves its spot on the page.

Chris Silvestri is the founder of Conversion Alchemy and a conversion copywriter for B2B SaaS companies. He previously published a four-part series on Every about using AI for marketing research: part I, part II, part III, part IV.


Comments


Question: what have you found to be the best way to provide context to the "environment"? Your screenshot shows the same prompt. Are there earlier messages where you've uploaded the documents and told the LLM how to use them?

@chad.j.royer yes the difference between those two screenshots in Gemini was the context and knowledge I’ve shared previously. The first was a fresh chat, the second was my working chat where I’ve started sharing documents and had a conversation since the start of the project. For more complex projects I might use something like a Claude project to keep context organized.