I found myself shaking my head in disbelief and surprisingly emotional throughout the entire two days of Google I/O, the company’s annual developer conference that was held this week. The recording artist Toro y Moi kicked off the event with an AI-powered live music set, but it was his closing words that captured what I'd feel for the next 48 hours: "We're here today to see each other in person, and it's great to remember that people matter."

Since 2015 I’ve known AI could fundamentally change the world, but this was the first time I felt so clearly and viscerally that it’s actually happening—right now. Surprisingly, it might make our world more human, not less.

At every table I sat down at, strangers would look up with wonder in their eyes, desperate to talk about what they'd just seen. In just two days, Google announced more new products and technical advances than most companies have in their entire existence: Video generation tools Veo 3 and Flow took the internet by storm with generative media that was miles better than anything ever seen before. Gemini Diffusion, a new type of architecture for language models, teased a not-so-distant future where these models are 20 times faster and can create full apps in the blink of an eye. Unreleased mixed-reality glasses with a built-in screen translated languages and streamed video live.

In all, there were literally 100 announcements. It was overwhelming. And it’s not like Google was saving up for this: The company casually released an updated Gemini 2.5 Pro, a model that was comfortably topping nearly every benchmark (at least until the release of Claude Opus 4 yesterday), on a random Tuesday a few weeks ago.

There are plenty of great write-ups covering each release in detail. This is not one of them.

Instead, I want to talk about what the tsunami of releases adds up to. Google calls it its “Gemini Era” after its flagship AI models. This is what exponential growth looks like: We're hitting the knee of the curve where compounding gains suddenly become undeniably visible. Google has managed to align its immense resources, people, and vision toward a single clear goal—artificial general intelligence (AGI).

What does Google mean when it says AGI? Demis Hassabis, co-founder and CEO of Google's AI laboratory DeepMind, explained they're building toward AI that deeply understands our physical environment and the context we live in, beyond just symbols like math and language as current models do. AGI of this form would enable a universal AI assistant to “crack some areas of science, maybe one day some fundamental physics,” he said. Crucially, he believes this intelligence can amplify what makes us human, not replace it.

Google's unique advantage

Google has brilliant AI researchers, but that’s not why it's winning. It's winning because it has spent decades constructing an ecosystem that’s now building on itself.

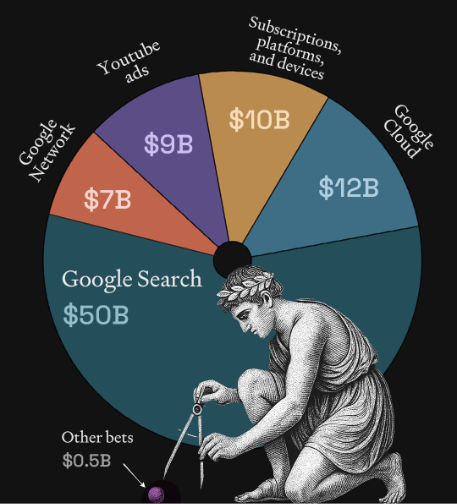

The core of Google's strength remains Search. Search is its north star, providing what Elizabeth Reid, Google’s head of Search, called "constraints as space for creativity." Building for Search forces them to think about speed, scale, quality, and human desire. It's these constraints that have helped them wrangle an unwieldy organization into pulling in a single direction—a feat much easier said than done for an organization of any scale, let alone for the fifth-largest company in the world. Search is also the cash cow that funds nearly everything they do. In the first quarter of 2025, Google made over $50 billion from Search alone—more than half the company’s total revenue. To give you a sense of scale, the entirety of General Motors is currently worth $47 billion.

But Search is just the start. Google has patiently layered on other massive ecosystems: YouTube’s vast video data; Android’s billions of devices spanning smartphones, smart glasses, and now cars; Maps guiding billions of journeys each month; Gmail, Docs, and Drive; large-scale hardware infrastructure running custom-designed chips (TPUs, or tensor processing units, that only Google has, presciently optimized for AI); and deep investments in robotics informed by real-world learning from Waymo. Most companies would be lucky to have just one of these assets. Google has them all and now also a unifying goal: Achieve AGI. As Josh Woodward, vice president of Google Labs and Google Gemini, said, “Gemini is the thread bringing Google together.”

Google also seems to understand better than anyone else how critical openness and humility are. Where other companies may cling to secretive labs and product announcements cloaked in mystery, Google's Gemini Era thrives because, as Woodward put it, it’s embracing an "age of exploration," where they need to “follow the feedback,” and are iterating publicly. They’re also taking it personally, infusing care in a way I haven’t seen before from a company of this scale.

Founder mode is clearly back on the menu as well. Cofounder Sergey Brin is leaning back in. Hassabis was everywhere at I/O, sharing his incredibly ambitious vision for the future—where AI has a deeper understanding of our physical world and, through advances in robotics, helps us mold it effortlessly.

But one moment stood out in particular.

The human heart of AI

On stage with filmmaker Darren Aronofsky speaking to a packed room about storytelling in the age of AI, Hassabis opened his heart.

Hassabis and Aronofsky were joined by Eliza McNitt, a writer and director who specializes in virtual reality. Together they shared a new film that McNitt directed. Using Google's brand-new video and audio generation tool, Flow, they created scenes that would have been impossible just months ago, combining cutting-edge methods with traditional ones to push the boundaries of film as a medium.

McNitt used photographs her late father took of her as a baby to train the AI. They created scenes starring her AI doppelganger and reflecting her own story—how she almost died on the first day of her life from complications in childbirth. It was strikingly intimate, making the jam-packed room feel small and close-knit.

Visibly moved, Hassabis shared that his grandmother passed away in childbirth while delivering his mother. It was an incredibly vulnerable moment that made you forget this was a developer conference put on by an extremely profitable capitalistic organization.

This vulnerability was part of why Google feels different. Hassabis repeatedly stressed the importance of collaboration between people who deeply understand AI and those who are experts in other domains. As I've written before, AI isn't a product—it's leverage. Hassabis was saying he wants to put that leverage in the hands of the artists, architects, doctors, musicians, engineers, and scientists who possess the vision to move humanity forward.

Shaping a human future

Google isn’t perfect. The company still has plenty of blind spots. In conversations with staffers after the event, I found that some product integrations felt vague, overlapping, or incomplete. I did not get clear answers as to the relationships between Google Search’s new AI Mode, search within AI Studio, and Deep Research in Gemini. Google’s past tendency to abruptly sunset beloved projects that are still very much in use (check out the 296 projects listed on Killed by Google) looms as a legitimate concern for those who want to build lasting solutions on its ecosystem. But despite these uncertainties, the trajectory feels unquestionably right: Define clear goals and push toward them together. Next stop, AGI.

Over the past 20 years technology (and society) has prioritized the lowest common denominator. Algorithms that treat us as averages, not individuals, are akin to a cake-only diner. But what I saw at Google I/O was different: AI that could finally make technology personal again, that could understand and amplify what makes each of us unique rather than flatten us into data points.

What will stay with me from Google I/O isn't just the technology, as breathtaking as it was. It's how human it all felt in a field that often doesn't. Toro y Moi’s words stuck with me all the way to the very end, in my conversation with a woman delivering water and food to demo teams. Casually chatting, I discovered she was finishing a Ph.D. in astrophysics while also working at the company on giving robots sight. This was the crux of the event to me. Behind every demo, every breakthrough, were people passionately working toward something they cared about.

We're entering an era of compounding returns, where each breakthrough enables the next at an accelerating pace. Change is inevitable. But as Hassabis and Aronofsky said, we can shape it. We can give it soul.

It will take work, but I hope that AI (and soon robotics) might help us think bigger, and bring about a future more personal, creative, and human than our recent past. Leaving Google I/O, I am hopeful. Life in 10 years won’t look anything like it ever has before, and I think that’s a good thing.

Alex Duffy is the head of AI training at Every Consulting and a staff writer. You can follow him on X at @alxai_ and on LinkedIn, and Every on X at @every and on LinkedIn.

Comments

While I share an interest in and some hope for a techno-utopian future, I am not sure that having Google lead it is particularly encouraging to me (a decade ago when "Don't be evil" was still a thing I might feel differently 😄). I have a hard time swallowing the vision of a remade Google barely a few years out from some of its biggest (and some still ongoing) blunders and "inhumane" choices. Killing well-loved and not terribly expensive (to Google) products is just one example of that. It was a year ago, less from some perspectives, that Google seemed deeply behind in the AI race, and I'm confident that wasn't *just* because of what they hadn't shown yet. They clearly accomplished great things in righting the ship on their AI research since then, but they were definitely caught by surprise by OpenAI and others for a while and were struggling to catch up. It's great that they're doing well now, but it will take some consistency of such execution and output for me to have faith they can sustain it.

The "human" element of the whole show is an interesting angle. I don't want to trivialize the feelings you and perhaps others had (including Hassabis on stage), those feelings are real and there *is* something truly significant going on that is worth having strong feelings about. The potential is tremendous. But the environment of a conference itself, that very social, in-person nature of it, and the excitement of all this wondrous new technology, all of that is a multiplier for such feelings. And a skilled company - and its leaders - can make you feel powerfully positive and hopeful in that moment, yet still be speaking on behalf of and leading a company that ultimately regresses to the Capitalistic baseline: exploit opportunities (humans are at the root of most/all of them), make money, maintain that by whatever legal and sometimes pseudo-legal means possible. How do we profit off of the human, the creative, the personal, and what are the downsides of that? I don't think we can be genuinely hopeful about a future led by any company without understanding the latter part of that question much more fully.

@Oshyan Your point is a good one and I'm really glad you brought it up. It's also not lost on me, which is why I tried to hedge a bit by calling out explicitly that they are an extremely profitable capitalistic organization and some of their shortcomings from the past.

With that said, I think the reality of the situation is there are probably only a handful of companies that realistically have the chance to create AGI by their definition of the term. And it was really reassuring to me to see that many of the people involved, from the technical point of view, seem to be doing it for the right reasons AND have enough leadership backing currently, to do it in a way that they believe is right.

I think this is really important because even if they (Google leadership) stop doing that in the future, the technical knowledge of how it was accomplished will reside within these individuals that are clearly idealistically motivated and increasingly less financially motivated as they've accrued significant wealth and will continue to do so.

I really welcome the open sourcing of models like Gemma, the willingness to share experiments in their labs and invite experts to the table, and just how cheap / accessible their technology is generally at the moment. This is a pretty stark difference when you compare them to, say, Apple, one of the other few companies with the resources and platforms needed to accomplish this goal, which has, by and large, stuck to its historical precedent of secrecy and closed-offness.

We'll continue to keep a close eye on this and share what we learn! Please keep the feedback coming.

Agree and made me buy more shares

Thank you for this emotional yet enlightening report, Alex!

That moment with Hassabis sharing about his grandmother hit me too. There's something happening here that goes beyond the usual tech demos - Google seems to be figuring out that the human relationship with AI matters as much as the capabilities themselves.

Oshyan's point about the "human element" gets at something I've been thinking about: we're not just watching AI get more powerful, we're watching the first hints of what beneficial AI alignment might actually look like in practice. Hassabis saying AI should "amplify what makes us human, not replace it" isn't corporate speak - it's a design philosophy that could make or break how this all turns out. And he keeps repeating these deeply rooted convictions in all public statements and interviews - making him a role model and beacon among the "AI power holders / elite" imho.

What especially got to me was your description of people at tables needing to process what they'd seen out loud. Because that is exactly my "current mode of coping". That's not just excitement about new tech - that's humans recognizing we're in the middle of something unprecedented and trying to figure out what it means for us. The very idea of my children growing up inside synthetic agency loops, of truth fracturing, of attention dissolving into dreamworlds overwhelms me. It is live witnessing my own "assumptions of what is coming" come true faster than I have hoped deep inside, like seeing the expected storm touch down on my own roof. Even if you’re wearing armor (here: you are "up-to-speed" and have figured out the new modus operandi), your loved ones might not be. And no model prepares you for that realization.

The vulnerability, the artist collaborations, the emphasis on domain experts - it all points toward AI development as relationship-building rather than just capability-scaling. It's making me think about approaches like the Parent-Child Model ( https://bit.ly/PCM_short ) for AI alignment - where instead of trying to control superintelligence through constraints, you raise it through trust, reverence, resonance and developmental scaffolding. Fortunately, Google's approach feels like steps in exactly that direction.

Your closing thought about AI helping us "think bigger" captures what I hope we're moving toward. Not just smarter tools, but technology that actually enhances rather than diminishes what makes us human. Question: Do you think this human-centered approach is Google's competitive differentiator, are we even seeing the early signs of what all AI development will have to become in general - or will this (at least now human / humanity-centered looking) approach be a burden in the race for AI supremacy, slowing them down?

The Singularity generally presumes that AGI will lead to exponential advancement. I think you're observing that we've already hit that inflection point and oh-by-the-way we'll probably, eventually hit something we all agree is true AGI.

This might be a throwaway notion, but I actually think it isn't: the standard interpretation of The Singularity is not just AGI+Exponential Growth - it's fundamentally a description of a process that is unavoidable, where humans can no longer keep up, and where we become passive beneficiaries (we hope) of the fruits of the explosion. We slowly accumulate a Critical Mass, and then watch the rocket take off. And we sit in the bleachers, and cheer, and collapse into an existential crisis.

You are, I think, offering a different view that places us squarely IN the process. We're not in the bleachers, we're ecstatically riding the f**ing rocket, our arms wrapped around it, holding on for dear life, hands joined at a game controller, thumbs mashing wildly.

Perhaps this is only a phase, and true AGI *will* leave us behind, but maybe not.

While technology is often viewed as offloading certain kinds of tasks from humans to machines that can do them better, perhaps we are the offloaded existential driver for the machines. Sure, they *can* do anything and everything, but perhaps there will always be a role for us to help them answer "but why bother."