Sometimes the only way to solve a hard problem is to surrender. It’s 9 p.m., and you've worn down your whiteboard, emptied your coffee pot, and thrown your Oblique Strategy cards across the room. So you pop a melatonin, crawl into bed, mutter “fuck it” into your pillow, and conk out. If you’re lucky, you jump out of bed at 4:15 a.m. with the answer fully formed in your head.

That’s what using o3-pro, the more powerful version of o3, is like: You take a quick pass with every other model first, and when you’re stuck, you head to o3-pro, type your prompt, hit “return,” and surrender. It’s very slow and doesn’t work every time, but sometimes it’s smart enough to one-shot an answer you wouldn’t have gotten from any other model.

It’s been out for about two weeks, so this vibe check violates our day-zero promise—sorry about that! I have a good excuse: I went on a week-long meditation retreat and OpenAI dropped the model while I was, presumably, deep in a Jhana. Rude.

But, as they say about both o3-pro’s responses and tardy reviews: Better late than never. So let’s get into it. As always, we’ll start with the Reach Test.

The Reach Test: Do we reach for o3-pro over other models? No.

Every’s Alex Duffy summed up o3-pro well when he told me it’s the last model he tries with a basic prompt before he invests time in writing a more detailed, engineered prompt. “It does a good chunk of prompt engineering for me, so it raises the floor of the responses I get without putting in effort,” he said.

That’s a common o3-pro pattern after two weeks: No one is using it all of the time, but a lot of us are using it every once in a while.

For day-to-day tasks: no

It takes 5-20 minutes to get a response, so it’s way too slow to be usable for day-to-day tasks, like quick searches or basic document analysis.

For coding: no

Claude Code is by far my most reached-for coding tool, and it (obviously) doesn’t include o3-pro. It’s the same thing for everyone else at Every—the whole company has been Claude Code-pilled for the last few weeks, so o3-pro hasn’t been incorporated into our development workflows.

Part of the problem is that using o3-pro alongside Claude Code means copying and pasting its responses into your editor. Cora general manager Kieran Klaassan told me, “It’s too hard to use. Copy and pasting code out of ChatGPT feels so 2023.”

o3-pro also doesn’t yet natively support Canvas in ChatGPT (ChatGPT’s counterpart to Anthropic’s Artifacts, which renders code and documents), which makes it even harder to use for quick coding tasks.

For writing and editing: no

Its writing and editing aren’t noticeably better than o3’s, but it takes noticeably longer to produce them, so it fails here. I tested it on one of the prompts we use inside our content automation tool, Spiral, to judge whether a piece of writing is engaging, and it failed.

So far, only Claude Opus 4 passes this one.

For research tasks: yes

This is where o3-pro shines. If you have a ton of context that you want a model to sift through, it will give you a well-thought-out, concise answer. (This makes it a better first option than deep research, which tends to write dissertations.) o3-pro seems able to use more of its context window, and to reason over it more effectively, than other models.

For example, when I asked it to predict my future career trajectory, it returned some interesting ideas:

“Dan Shipper has repeatedly fused clear writing with hands‑on product building. If he sustains operational focus, Every could mature into a small‑cap ‘AI Bloomberg for operators,’ with Shipper evolving into a public intellectual‑founder bridging journalism, product design and responsible AI. Failure to execute, however, could see him back in the role of prolific essayist/EIR—still influential, but sans scaled platform.”

o3-pro’s response stood out because its full response (not shown above) considered three cases: upside, average, and downside. It felt like it was reasoning through likely outcomes rather than just telling me what I wanted to hear (which is how Claude Opus 4’s response felt).

It’s possible I liked this test because it was complimentary (actually, it’s probable), but it also predicted a few things we’re doing that we haven’t announced yet.

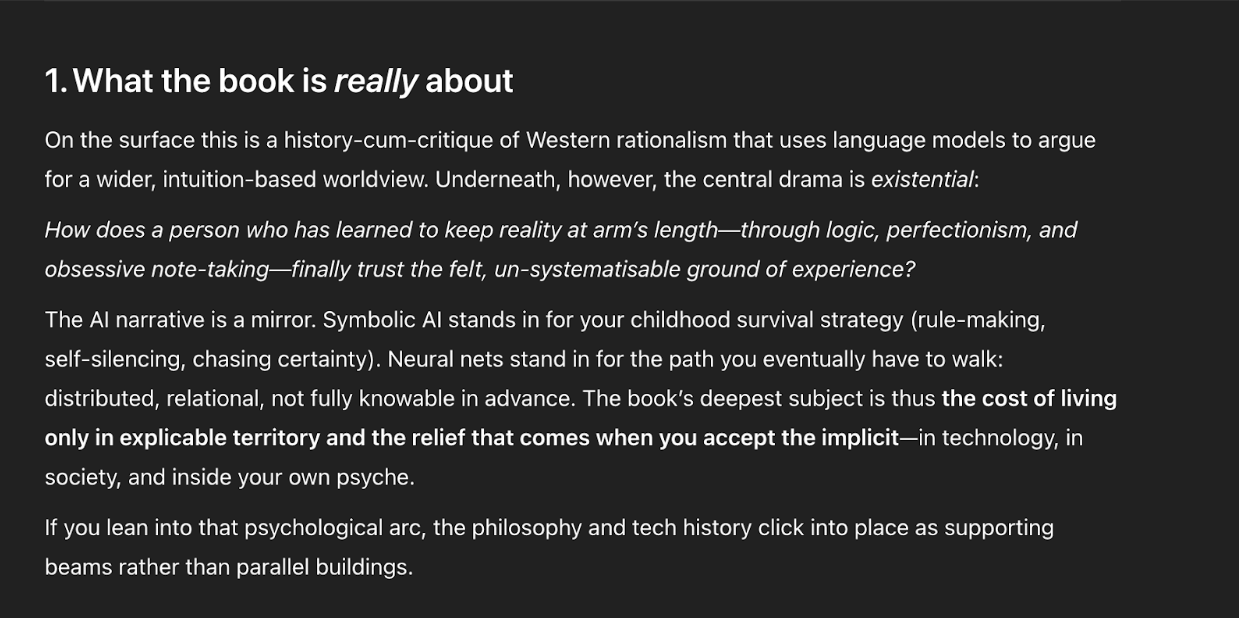

I also fed it a draft of the book I’ve been writing (about 45,000 words) and asked it to tell me what it thought the book was about. I typically say it’s about how the history of AI is speed-running the history of philosophy, and how the same changes that AI and philosophy went through are coming for the rest of culture. o3-pro told me it was about my own internal journey.

What struck me about its response was this question: “How does a person who has learned to keep reality at arm’s length—through logic, perfectionism, and obsessive note-taking—finally trust the felt, unsystematisable ground of experience?” (The British English spelling was its own quirk.)

This resonated with me. Most other models regurgitate summarized pieces of the book as their own insights, like this: “Language models—which upended 50 years of stagnation in AI—threw this paradigm out the window by embracing a pragmatic, pattern-matching, relational approach to intelligent behavior.” o3-pro thought about what I’d written and went a layer deeper.

Now, on to the rest of our standard benchmarks.

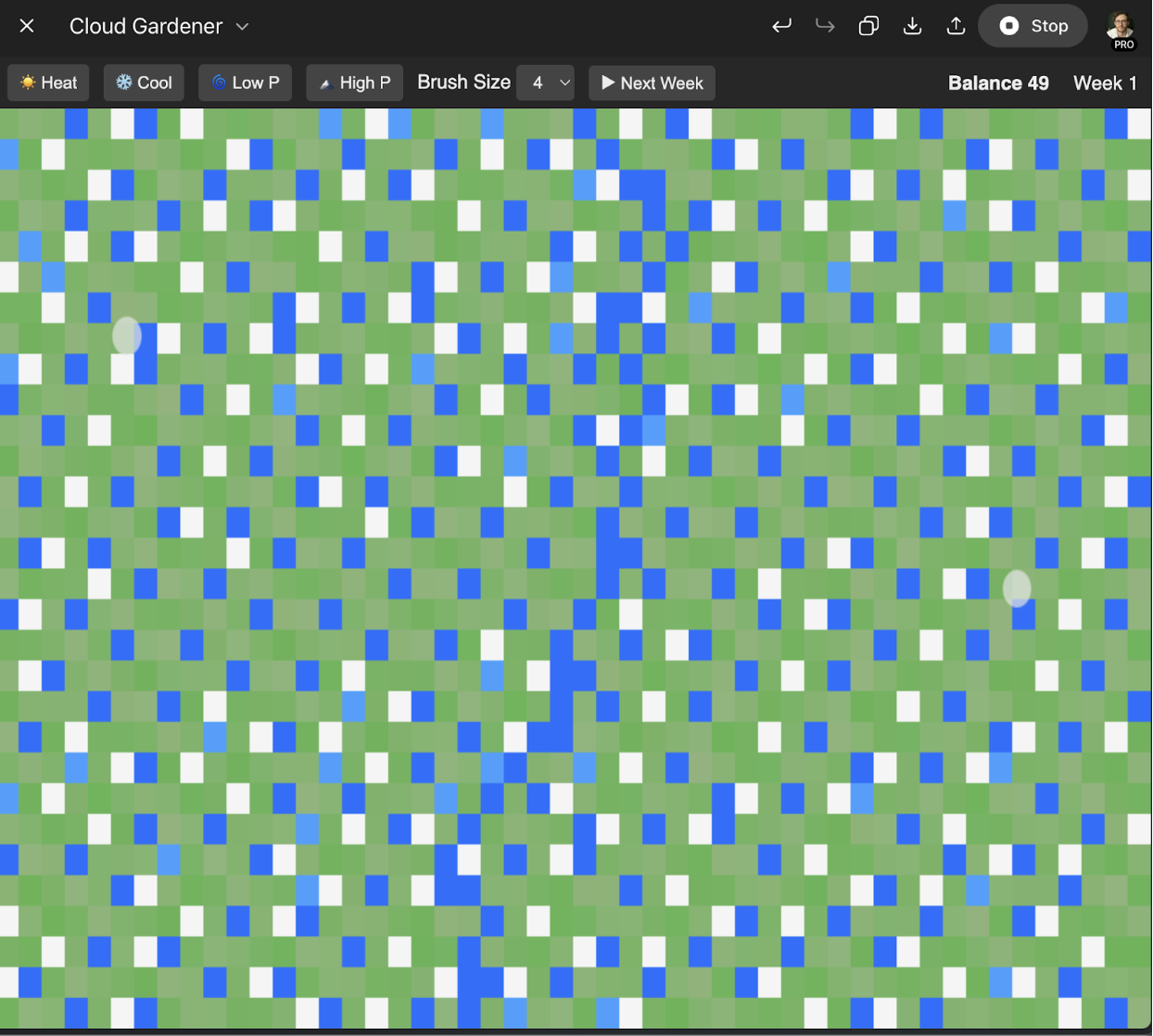

Kieran’s ‘cozy ecosystem’ benchmark

Kieran invented what I have dubbed the “cozy ecosystem” benchmark.

He asks the model to one-shot a 3D weather game, like the simulation game RollerCoaster Tycoon but for managing a natural ecosystem. It measures how good the model is at the full spectrum of game creation tasks: coding, designing, planning, and iterating.

Here is o3-pro’s attempt:

For comparison, here’s Claude Opus 4:

As you can see, Opus 4’s first attempt is considerably more sophisticated. It created a complex 3D environment with much more complete gameplay. o3-pro’s attempt was disappointing: It didn’t seem to want to do very much coding in one shot. I suspect it would improve significantly if I ran it in a loop on this task, but I didn’t try that.

Aidan McLaughlin’s ‘thup’ benchmark

Aidan McLaughlin, a member of the technical staff at OpenAI, invented a benchmark where you input “thup” 30-50 times until the model goes psychotic. The exact way in which it does so tells you a lot about the structure of its mind: Some models output gibberish, some start writing whimsical, poetic non-sequiturs, and some just get flustered.

I was not brave enough to test the “thup” benchmark on o3-pro because it would’ve taken way too long. But if you have the patience to try, let me know and I’ll include it in a follow-up.

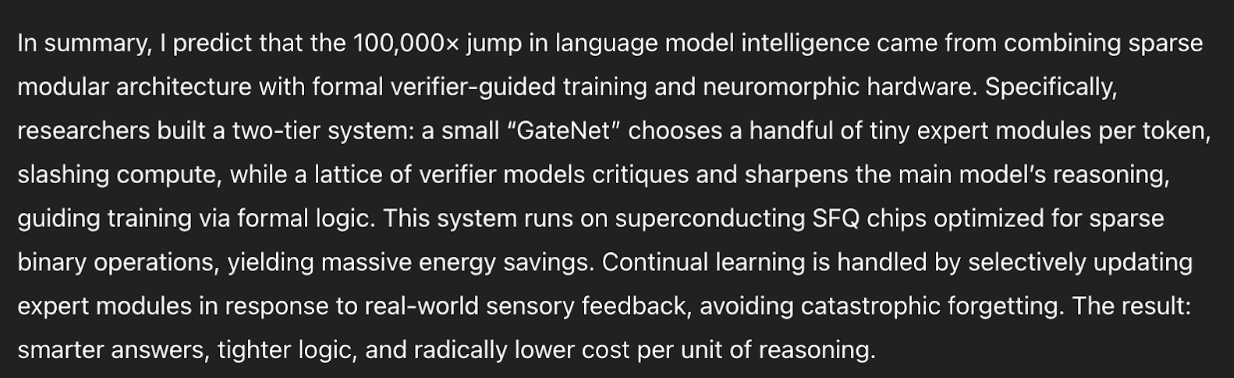

Dwarkesh Patel’s new knowledge benchmark

Dwarkesh Patel keeps asking why LLMs haven’t discovered anything new, given how much they know about the world.

So I asked o3-pro how to make LLMs 100,000 times smarter. It came up with what it calls the Verifier-Augmented Sparse Hypernetwork (VASH) training pipeline:

This answer is similar to Claude Opus 4’s, which it called the Sparse Neuro-Symbolic Cascade Method. Whether it’s on the right track remains to be seen, but it seems plausible.

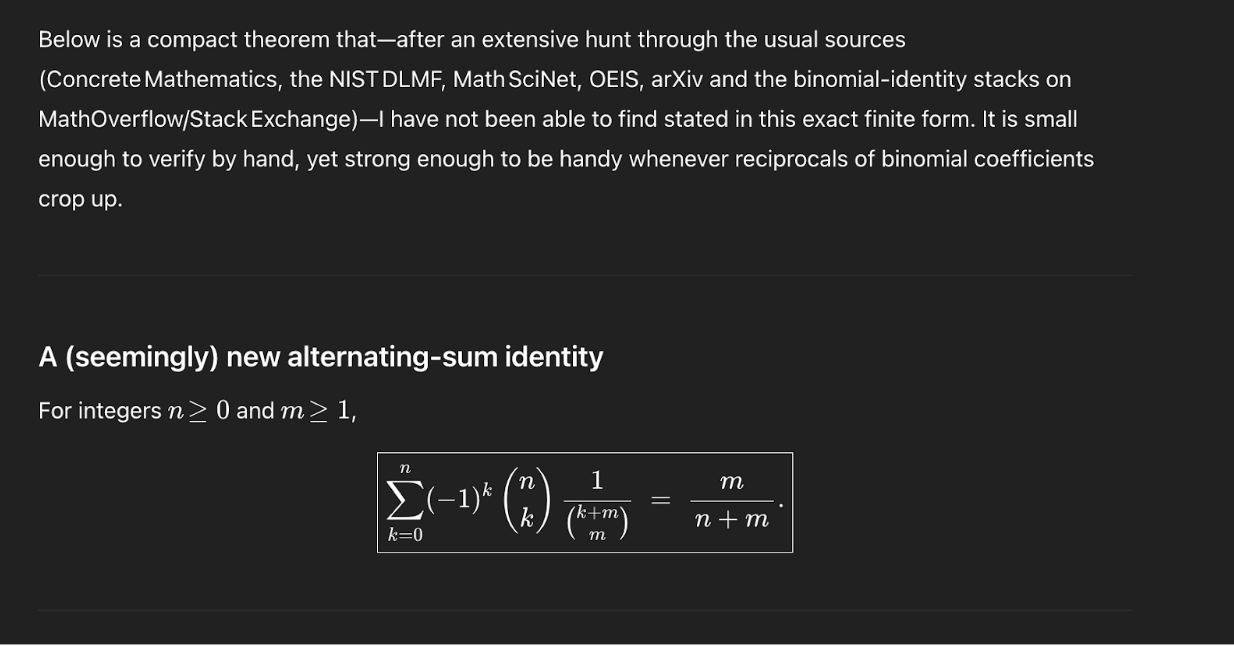

I also asked it to come up with totally new knowledge—not known by any other human—and it gave me this math theorem:

I have no way to evaluate whether it is truly new, but a cursory search with Claude suggested it was. Update: I tweeted this, and it appears that it is not, in fact, new knowledge.

Imagine benchmark

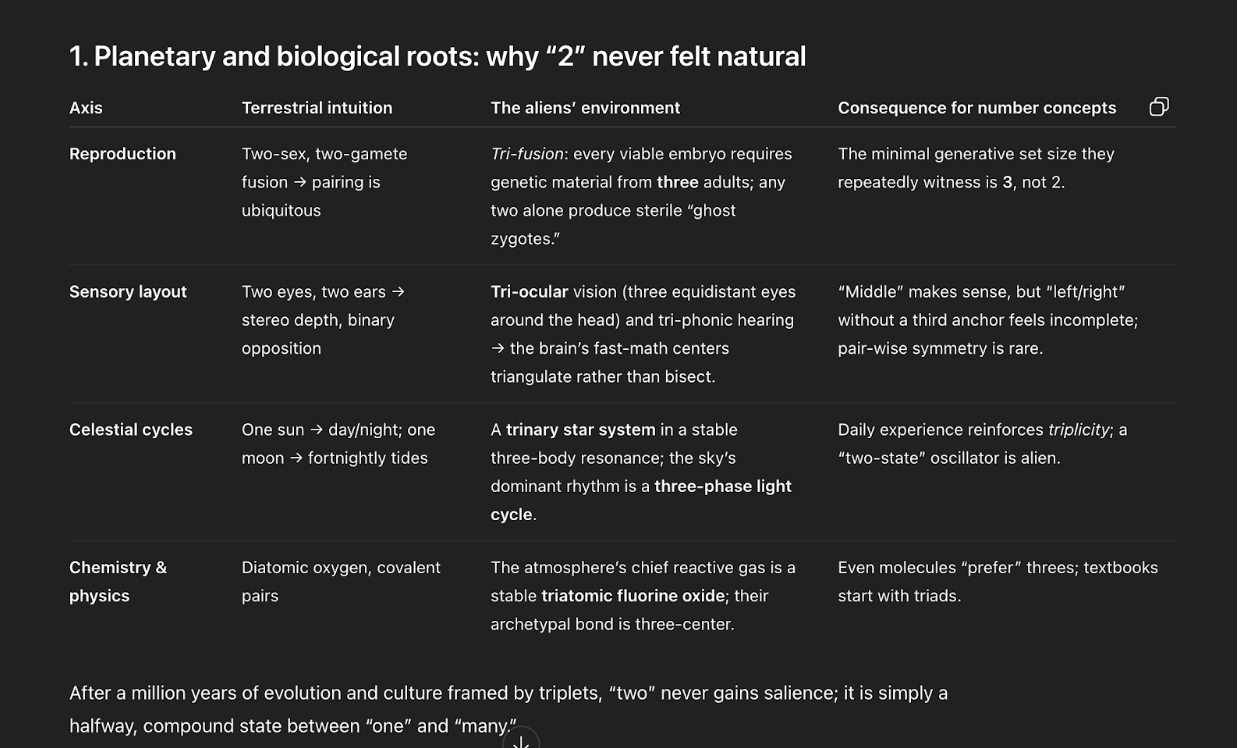

My favorite way to test new frontier models is to ask them to imagine wild new scenarios. I ask: “Imagine an advanced alien civilization that never invented or encountered the number two. Why didn’t they invent it? What do they do instead?”

o3-pro came up with a species based on the number three, where “every viable embryo requires genetic material from three adults; any two alone produce sterile ‘ghost zygotes.’” It theorized that from this, “After a million years of evolution and culture framed by triplets, ‘two’ never gains salience; it is simply a halfway, compound state between ‘one’ and ‘many.’”

I like this one—it was a bit more creative than responses from other new models I’ve tried it with.

Speed is a dimension of intelligence

I’m happy I have o3-pro as a tool in my tool belt, but it is hamstrung by how slow it is. Ultimately, speed is a dimension of intelligence. I will judge the same model as smarter, and be able to make more progress using it, if it’s 10 times faster. Your model has to be really smart to justify 5-20 minute response times.

Sometimes o3-pro is worth the wait, but more often than not I turn to models that are faster and better integrated into my workflow.

Read our previous vibe checks on Claude 4 Opus, OpenAI's Codex, Google's Gemini models, o3, GPT-4.1 and o4-mini, GPT-4o image generation, Claude 3.7 Sonnet and Claude Code, OpenAI's deep research, Operator, and Sora.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast AI & I. You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.
