I got access to Gemini Pro 1.5 this week, a new private beta LLM from Google that is significantly better than previous models the company has released. (This is not the same as the publicly available version of Gemini that made headlines for refusing to create pictures of white people. That will be forgotten in a week; this will be relevant for months and years to come.)

Gemini Pro 1.5 read an entire novel and told me in detail about a scene hidden in the middle of it. It read a whole codebase and suggested a place to insert a new feature, complete with sample code. It even read through all of my highlights in the reading app Readwise and selected one for an essay I’m writing.

Somehow, Google figured out how to build an AI model that can comfortably accept up to 1 million tokens with each prompt. For context, you could fit all of Eliezer Yudkowsky’s 1,967-page opus Harry Potter and the Methods of Rationality into every message you send to Gemini. (Why would you want to do this, you ask? For science, of course.)

Gemini Pro 1.5 is a serious achievement for two reasons:

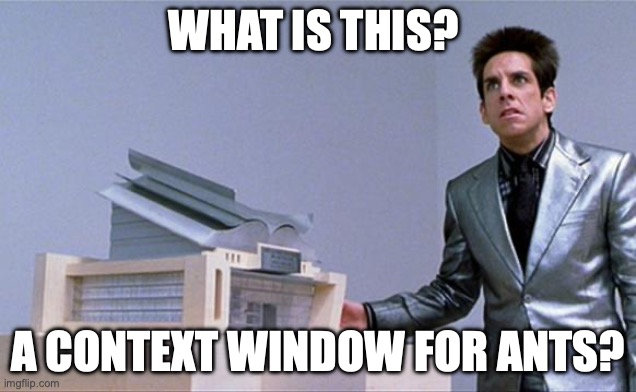

1) Gemini Pro 1.5’s context window is far bigger than the next closest models. While Gemini Pro 1.5 is comfortably consuming entire works of rationalist doomer fanfiction, GPT-4 Turbo can only accept 128,000 tokens. This is about enough to accept Peter Singer’s comparatively slim 354-page volume Animal Liberation, one of the founding texts of the effective altruism movement.

Last week GPT-4’s context window seemed big; this week, after using Gemini Pro 1.5, it seems like an amount that would curl Derek Zoolander’s hair.

2) Gemini Pro 1.5 can use the whole context window. In my testing, Gemini Pro 1.5 handled huge prompts wonderfully. It’s a big leap forward from current models, whose performance degrades significantly as prompts get bigger. Even though their context windows are smaller, they don’t perform well as prompts approach their size limits. They tend to forget what you said at the beginning of the prompt or miss key information located in the middle. This doesn’t happen with Gemini.

These context window improvements are so important because they make the model smarter and easier to work with out of the box. It might be possible to get the same performance from GPT-4, but you’d have to write a lot of extra code in order to do so. I’ll explain why in a moment, but for now you should know: Gemini means you don’t need any of that infrastructure. It just works.
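To get a feel for those numbers, here’s a back-of-the-envelope check for whether a text fits in a model’s context window. It’s a minimal sketch: the 4-tokens-per-3-words ratio is a common rule of thumb for English, not a real tokenizer, and `estimate_tokens` and `fits` are names made up for illustration. The window sizes are the ones quoted above.

```python
# Rough rule of thumb (an assumption, not an exact tokenizer):
# English text averages about 4 tokens for every 3 words.
TOKENS_PER_WORD = 4 / 3

# Context window sizes quoted in the article.
CONTEXT_WINDOWS = {
    "GPT-4 Turbo": 128_000,
    "Gemini Pro 1.5": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count from a whitespace word count."""
    return round(len(text.split()) * TOKENS_PER_WORD)


def fits(text: str, window: int) -> bool:
    """Return True if the estimated token count fits inside the window."""
    return estimate_tokens(text) <= window


if __name__ == "__main__":
    # Stand-in for a long novel: 300,000 placeholder words.
    book = "word " * 300_000
    for model, window in CONTEXT_WINDOWS.items():
        verdict = "fits" if fits(book, window) else "does not fit"
        print(f"{model}: {verdict}")
```

For anything precise you’d swap the estimate for the model’s actual tokenizer, since real token counts vary with the text.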

Let’s walk through an example, and then talk about the new use cases that Gemini Pro 1.5 enables.

Why size matters (when it comes to a context window)

I’ve been reading Chaim Potok’s 1967 novel, The Chosen. It features a classic enemies-to-lovers storyline about two Brooklyn Jews who find friendship and personal growth in the midst of a horrible softball accident. (As a Jew, let me say that yes, “horrible softball accident” is the most Jewish inciting incident in a book since Moses parted the Red Sea.)

In the book, Reuven Malter and his Orthodox yeshiva softball team are playing against a Hasidic team led by Danny Saunders, the son of the rebbe. In a pivotal early scene, Danny is at bat and full of rage. He hits a line drive toward Reuven, who catches the ball with his face. It smashes his glasses, spraying shards of glass into his eye and nearly blinding him. Despite his injury, Reuven catches the ball. The first thing his teammates care about is not his eye or the traumatic head injury he just suffered—it’s that he made the catch.

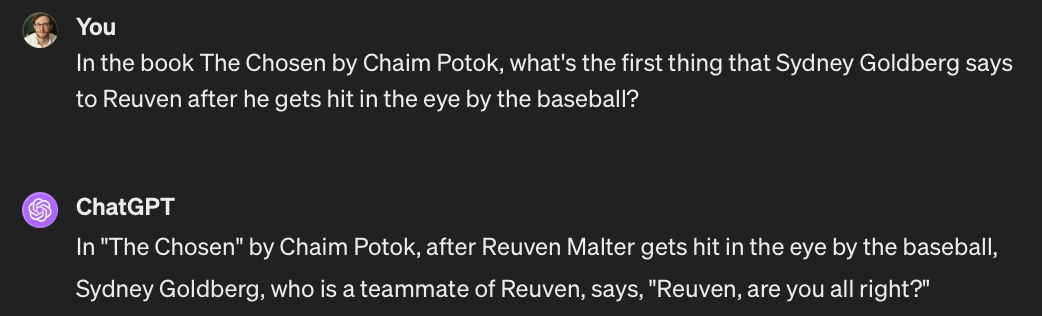

If you’re a writer like me and you’re typing an anecdote like the one I just wrote, you might want to put into your article the quote from one of Reuven’s teammates right after he caught the ball to make it come alive.

If you go to ChatGPT for help, it’s not going to do a good job initially. Its first answer is wrong because, as I said, Sydney Goldberg did not care about Reuven’s injury; he cared about the game!

But all is not lost. If you give ChatGPT a plain text version of The Chosen and ask the same question, it’ll return a great answer, and this time it’s correct! (It also confirms for us that Sydney Goldberg has his priorities straight.)

So what happened? ChatGPT behaved as if I’d given it an open-book test. We can improve ChatGPT’s responses by giving it, along with the question, a little notecard with some extra information that it might use to answer.

In this case we gave it an entire book to read through. But you’ll notice a problem: The entire book can’t fit into ChatGPT’s context window. So how does it work?
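One common way to run an open-book test when the book is too big for the window is to split it into chunks and hand the model only the chunks that look most relevant to the question. Here’s a minimal sketch of that idea. To be clear about the assumptions: the word-overlap scoring is a deliberately naive stand-in (real systems typically use something smarter, like embeddings), the function names are hypothetical, and this is not a description of what ChatGPT actually does internally.

```python
import re


def split_into_chunks(text: str, chunk_words: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most chunk_words words."""
    words = text.split()
    return [
        " ".join(words[i : i + chunk_words])
        for i in range(0, len(words), chunk_words)
    ]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def top_chunks(question: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks sharing the most distinct words with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(q_words & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Assemble the 'notecard': retrieved excerpts followed by the question."""
    notecard = "\n---\n".join(chunks)
    return f"Use these excerpts to answer.\n{notecard}\n\nQuestion: {question}"
```

You’d call `split_into_chunks` on the book once, then `top_chunks` and `build_prompt` for each question, and send the resulting prompt to the model. The notecard stays small no matter how long the book is, which is the whole point, but the answer is only as good as the chunks that get picked.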

Comments

Great article... stimulating. The phrase “context is everything” comes to mind. While that’s an oversimplification, the more complete the context, usually the better the answer, decision, or outcome. But that depends heavily on the quality and precision of the question (e.g., the hologram in “I, Robot”). AI can’t fix a poor prompt. That relies on us. We need to know why we are asking and what value we are pursuing (and even its use) when we ask a question and seek its answer. To use AI/GPT effectively, we need to level up our ability to ask good questions.

BTW, that applies to today, right now. In general, we need a renewed effort to improve critical thinking and reasoning skills, focused on the why of our whats, if we expect to get effective hows for the given Job-To-Be-Done (JTBD). Don't get me started about "Prompt Engineering"... so far that sounds like the job we should be doing right now. Crappy inputs, crappy outputs.

Thanks again for the thought-provoking article.

I just signed up for Gemini 1.5 yesterday and my first run at it was initially disappointing. I fed it a transcript of a podcast episode and asked it to sum up the frameworks discussed during the episode. Strangely it kept telling me it didn’t know the people on the podcast and couldn’t respond as them. I rejigged my prompt and basically got the same answer. The third time, I got a high school level answer. Finally, I fed it an AI answer from another tool, and at that point, it apologized and then listed the steps it would take to do a better job in the future. Methinks I need to sort out my prompts with it a bit.

That said, I moved on to asking it to use some of the frameworks I outlined to brainstorm about a launch, and at that point, it started to shine. I’m looking forward to seeing how it develops as a tool.

Of course you get better pattern recognition from a larger sample of patterns. We'll all be uploading our second brains into Google before long.
