Sponsored By: Brilliant

This article is brought to you by Brilliant, the best way to future-proof your mind through interactive lessons on everything from logic and math to data science, programming, and beyond.

Human children come out of the womb totally helpless except in one important way: they know how to use their parents as tools.

Infant tool use is quite blunt at first. They cry loudly and incessantly whenever there’s a problem: “HUNGRY”, “DIRTYY DIAPER!!!!”, “TIREEDDD!!!!”, and so on. They keep crying until their parent adequately diagnoses and resolves the issue through trial and error.

As they get older, however, children ditch these crude initial methods and instead use language to skillfully manipulate their parents in ever more targeted and precise ways. Rather than becoming totally inconsolable because they see someone eating a cookie and want one for themselves, they can now specify in precise language exactly what they want: “Can I have a cookie?” Parents can then use their unique capabilities (the ability to walk, their height differential, their manual dexterity, and their strength) to walk to the cookie jar, open it, select a cookie, and appropriately offer it up as tribute. This kind of tool use is a powerful method for intelligent beings with significant limitations to accomplish goals in the world.

In contrast to human children, large language models like GPT-4 were not created knowing how to use tools to accomplish their aims. This limited their capabilities significantly. Third-party libraries tried to implement this functionality—but the results were often slow and inconsistent.

Earlier this week, OpenAI built tool use right into the GPT API with an update called function calling. It’s a little like a child’s ability to ask their parents to help them with a task that they know they can’t do on their own. Except in this case, instead of parents, GPT can call out to external code, databases, or other APIs when it needs to.

Each function in function calling represents a tool that a GPT model can use when necessary, and GPT gets to decide which ones it wants to use and when. This instantly upgrades GPT’s capabilities, not because it can now do every task perfectly, but because it now knows how to ask for what it wants and get it.

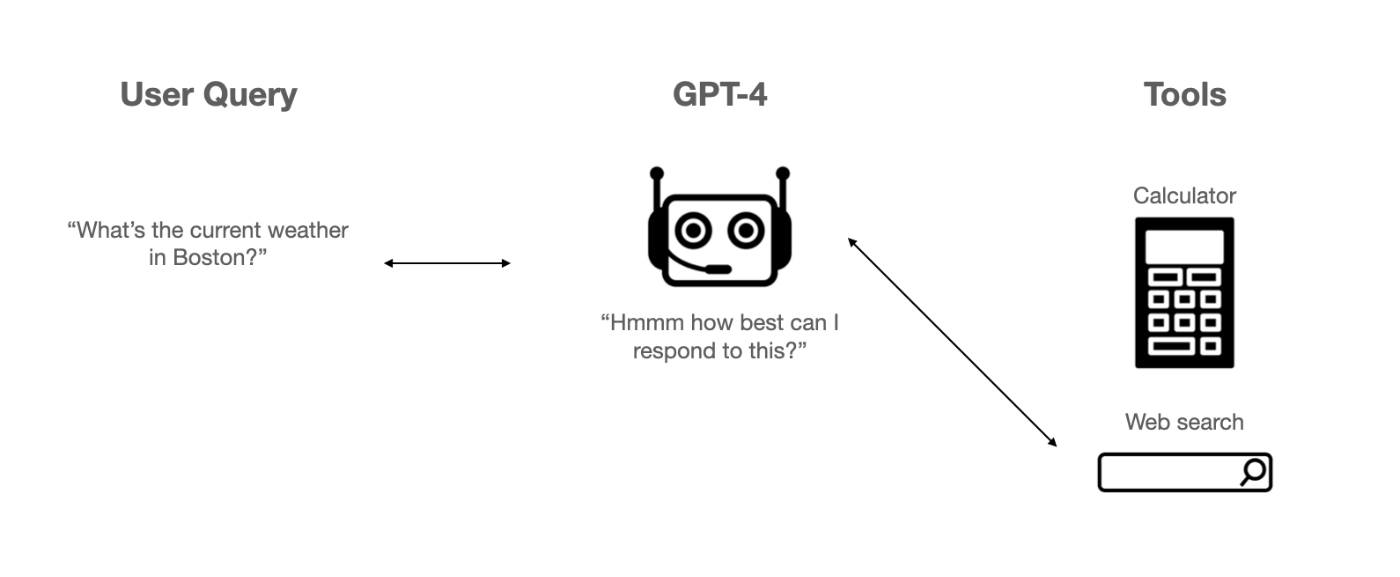

Function calling works like this:

When you query a GPT model, you can now send along a set of tools that the model can use if it needs to. For each tool, you specify a description of its capabilities (do math, call a SQL database, launch nuclear bombs) and instructions for how GPT can properly call it. Depending on the query, GPT can choose to respond directly or to request a tool instead. If GPT sends back a request to use a tool, your code calls the tool and sends the results back to GPT for further processing, if necessary.
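The round trip described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not OpenAI's client code: `fake_model` is a hypothetical stand-in for the real API call so the example runs offline, and `add_numbers`, `TOOLS`, and `run` are illustrative names. The message shapes (a `function_call` carrying a `name` and JSON-string `arguments`, answered with a `function` message) are assumed to mirror the function calling release.

```python
import json

# Tool description sent along with the query: a name, a plain-language
# description, and a JSON Schema for the arguments.
FUNCTIONS = [
    {
        "name": "add_numbers",
        "description": "Add two numbers and return the sum.",
        "parameters": {
            "type": "object",
            "properties": {
                "a": {"type": "number"},
                "b": {"type": "number"},
            },
            "required": ["a", "b"],
        },
    }
]


def add_numbers(a, b):
    """The actual tool: ordinary code that is good at math."""
    return a + b


# Maps tool names the model may request to the code that runs them.
TOOLS = {"add_numbers": add_numbers}


def fake_model(messages, functions):
    """Hypothetical stand-in for the model so the sketch runs offline."""
    last = messages[-1]
    if last["role"] == "function":
        # The tool result came back: answer the user directly.
        return {"role": "assistant", "content": f"The answer is {last['content']}."}
    # Otherwise, request the tool instead of answering.
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "add_numbers",
            "arguments": json.dumps({"a": 1234, "b": 5678}),
        },
    }


def run(query, model=fake_model):
    messages = [{"role": "user", "content": query}]
    reply = model(messages, FUNCTIONS)
    call = reply.get("function_call")
    if call is None:
        return reply["content"]  # the model answered directly
    # The model asked for a tool: run it and send the result back.
    args = json.loads(call["arguments"])
    result = TOOLS[call["name"]](**args)
    messages.append(reply)
    messages.append(
        {"role": "function", "name": call["name"], "content": json.dumps(result)}
    )
    return model(messages, FUNCTIONS)["content"]


print(run("What is 1234 + 5678?"))  # The answer is 6912.
```

The model never executes anything itself. It only names a tool and supplies arguments, and the surrounding code decides whether and how to run it.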

Tool use is important because, just like a four-year-old, GPT models currently have some glaring limitations: they’re horrible at math, they don’t have access to private data, they don’t know about anything past 2021, they can’t use APIs, and more. To fix these problems, OpenAI has harnessed GPT’s reasoning abilities so that the model chooses for itself when to use a tool on a query that it knows might be difficult for it.

This matters for two big reasons:

- It makes GPT models significantly more powerful

- It replaces some of the functionality of open-source libraries that do the same thing

GPT the tool user > GPT

Given all of the current hype about AI, you could be excused for forgetting that traditional software is actually very powerful. Right now, that power is mostly cut off from GPT models because it’s quite hard to integrate the two. If you could do that well, it would be a force multiplier on AI’s existing capabilities. That’s what makes function calling very exciting.

It’s suddenly a lot easier to equip a GPT model to check the weather, get a stock price, look up data in your company’s database, or send an email. It can now send a text message with Twilio, or initiate a zap in Zapier, or track the position of near-Earth asteroids via NASA’s API. All you have to do as a programmer is make these capabilities available as a tool to GPT (which is fairly straightforward). GPT will then intelligently decide to use them to complete the tasks you’ve given it.

This isn’t just useful for one-off calls to GPT though. It’s also quite useful for one of the most hyped LLM use cases over the last few months: running agents.

Agents are language models that are given a task—like “research and summarize news about UFOs”—and a set of tools—like a Google Search tool, and a Twitter search tool. The language model then runs in a loop until it completes its goal. First, it plans a set of steps, and then it uses tools to help it achieve each task it has set for itself.
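The loop described above can be sketched as follows. This is an illustrative sketch, not any library's implementation: `scripted_model` is a hypothetical stand-in for the language model, `search_news` is a made-up tool that returns canned headlines, and `max_steps` is an assumed safety cap so a confused model cannot loop forever.

```python
import json


def search_news(query):
    """Hypothetical tool: returns canned headlines instead of searching."""
    return [f"Headline about {query} #1", f"Headline about {query} #2"]


TOOLS = {"search_news": search_news}


def scripted_model(messages):
    """Stand-in for the model: search once, then summarize the results."""
    tool_results = [m for m in messages if m["role"] == "function"]
    if not tool_results:
        return {
            "role": "assistant",
            "content": None,
            "function_call": {
                "name": "search_news",
                "arguments": json.dumps({"query": "UFOs"}),
            },
        }
    headlines = json.loads(tool_results[-1]["content"])
    summary = f"Found {len(headlines)} stories: " + "; ".join(headlines)
    return {"role": "assistant", "content": summary}


def run_agent(task, model, max_steps=5):
    """Call the model in a loop, running tools until it gives a final answer."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # no tool requested: the task is done
        result = TOOLS[call["name"]](**json.loads(call["arguments"]))
        messages.append(
            {"role": "function", "name": call["name"], "content": json.dumps(result)}
        )
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("research and summarize news about UFOs", scripted_model))
```

A real agent would replace `scripted_model` with an API call and register real tools, but the control flow stays the same: ask, run the requested tool, feed the result back, repeat.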

Previously, agents had to be hacked together with a lot of custom code, or by using an open-source library like Langchain. Langchain would do some fancy stuff on the backend to get GPT to use tools, but the way this worked was, to put it in technical terms, very janky. In my experience, it was incredibly slow, and would break frequently because GPT would go off the rails. In order for tool use to work, Langchain needs the model to give its choice of tool back in a very specific format so that it can be passed to your surrounding code for execution. GPT has historically been very bad at following the precise formatting instructions necessary for this to work—and so agent implementations were unreliable.
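To see why the formatting requirement was so fragile, compare parsing a free-text tool choice with parsing a structured one. This is a sketch under assumptions: the `Action:` / `Action Input:` layout is an illustrative text convention rather than Langchain's exact prompt format, and `parse_text_action` and `parse_function_call` are hypothetical helper names.

```python
import json
import re

# The text convention: the model must reply with exactly these two lines.
ACTION_RE = re.compile(r"^Action: (\w+)\nAction Input: (.+)$")


def parse_text_action(text):
    """Extract (tool, input) from free text; fails on any deviation."""
    match = ACTION_RE.match(text.strip())
    if match is None:
        raise ValueError(f"could not parse tool choice: {text!r}")
    return match.group(1), match.group(2)


def parse_function_call(call):
    """Extract (tool, arguments) from a structured function_call object."""
    return call["name"], json.loads(call["arguments"])


# A well-behaved reply parses fine.
print(parse_text_action("Action: search\nAction Input: UFO news"))

# One chatty sentence before the expected lines breaks the parser.
try:
    parse_text_action("I should search first.\nAction: search\nAction Input: UFO news")
except ValueError as err:
    print("parse failed:", err)

# The structured form keeps the tool name and arguments in separate fields.
print(parse_function_call({"name": "search", "arguments": '{"query": "UFO news"}'}))
```

The structured form is not a guarantee either, since `json.loads` will still raise if the model emits malformed arguments, so production code should handle that case too.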

OpenAI seems to have eliminated these problems with the function calling release. Simple agents can be built easily, they’ll work a lot faster, and they'll break less frequently than the previous generation. This means more power for more LLM use cases with less code. It’s a huge win for builders.

But it has to be a frustrating thing for open-source libraries.

Building on the infrastructure layer is building on sand

In May I ran a cohort-based course called How to Build an AI Chatbot. One of the lectures was entirely about how to build agents in Langchain. I worked really hard on explaining the ins and outs, polishing the slides, and building code samples for the students. All of that work is now completely outdated by this release.

If I feel frustrated about it, I’m sure Langchain does too. They’ve done an incredible job of making the bleeding edge of language model developments available to the developer community. Every time an academic releases a paper, or a hacker builds a demo showing how to get LLMs to do something new and fancy—like create agents—Langchain implements it and suddenly everyone can do it.

Now, much of the code they wrote to enable agent functionality is natively implemented inside of GPT-4. This doesn’t obviate the need for Langchain. In fact, you can already use function calling capabilities in Langchain instead of using their previous agent architecture. But it does mean all of the work they did to build those previous architectures is now, to some degree, unnecessary and out of date.

This raises big questions for any company building at the infrastructure layer: Anything you’ve created could, in very short order, be eaten or obviated by a new OpenAI feature release. So you have two options: try to build things that OpenAI won’t, or keep racing further and further ahead to continually implement new ideas in the window of time before OpenAI does.

It’s a tough game, and I’m curious to see how libraries like Langchain deal with it. One of the advantages they have is that many developers are used to using OpenAI APIs only through Langchain—not directly. Those developers probably won’t switch to the underlying APIs so long as Langchain continues to swiftly implement the new functionality that OpenAI provides, and that seems to be exactly what they’re doing.

Wrapping up

Let’s go back to human children for a second (you know I had to do it). One of the coolest parts about watching them grow up is that they get these seemingly overnight upgrades to their functionality. From one day to the next, they learn how to smile, or they learn how to crawl, or they can give you a little wave. Each one of these building blocks expands their repertoire until they’re walking, talking, moody teenagers who hate your guts.

Building with language models is sort of like that. Every few weeks or months we get an upgrade that totally changes their capabilities, and also changes the competitive landscape. This is one of those out-of-nowhere shifts that will make it radically easier to build complex LLM functionality quickly. It also means that a significant percentage of the code written to get models to use tools is now unnecessary or out of date.

It’s a fun time or a frustrating one, depending on where you sit.

Comments

Hey Dan, I'm really enjoying your AI posts, thanks for putting them together. I'm a copywriter, so particularly interested in how to get ahead of the game and understand how large language models are going to impact things. I'm not thinking about blogs and website copy though, as I think that sort of writing can clearly be handled by AI already to a degree. It's more for higher level brand copy, where nuance is everything - capturing a vision, positioning statement or key messages in a fistful of words. My experiments so far have just produced very generic stuff. Have you any thoughts on this, or know where this sort of thing is being discussed?

@jontysignup hey!! great question. have you used Claude from Anthropic? it's a bit better at writing. also, I'm planning to write about this next week but these models might not be very good yet at writing key messages but they are very good at taking lots of complex inputs and summarizing them. i think this is helpful for brand / copywriting because it can help you easily take a bunch of examples (say of other products or websites that a client likes) and put into words what they have in common. e.g. they're really good for helping to articulate a taste or a vibe—which is a great first step for the use case you're talking about. hope that helps!

@danshipper Not tried Claude but will give it a spin. Be really interested to hear your take on this topic and yeah, it feels like the summarising side of things, rather than finished-product writing, is perhaps where it’s at. A strategist I work with also has an interesting thought on this: taking the transcript from a client’s (often badly articulated) brief, running that through AI, and perhaps getting something clearer that could be mutually signed off as a direction. Avoiding some of the ‘that’s not what I asked for’ back-and-forth