AI can weigh in on virtually every business decision we make—but when should we listen to the machines, and when should we trust our instincts? In his latest piece, columnist Michael Taylor explores three scenarios where AI often gets things wrong and offers practical solutions for each limitation. So you’ll be in a better position to know when to follow the algorithm's advice and when it’s best to go with your gut.—Kate Lee


Business owners have to make thousands of small decisions every day, and AI can help with each one. But that doesn’t mean you have to—or should—always follow its advice.

I recently talked to a venture capitalist who used my AI market research tool Ask Rally for feedback on what to call a featured section of his firm’s website. The majority of Ask Rally’s 100 AI personas, which act as a virtual focus group, voted for a featured section of the site (“companies/spotlight”) rather than a filterable list of all the firm’s investments (“companies/all”), but he felt that the latter would get more search traffic. "I think we're right with our approach though the robots disagree," he told me.

No matter how good AI gets, there will always be times when you need to go with your gut. How do you know when to trust AI feedback, and when to override it?

After analyzing thousands of AI persona responses against real-world outcomes, I've identified three scenarios where AI consistently gets it wrong. Understanding these failure modes doesn’t just improve AI research; it also provides a framework for deciding when to listen to what AI is telling you and when you can safely ignore it.

Models are operating with outdated information

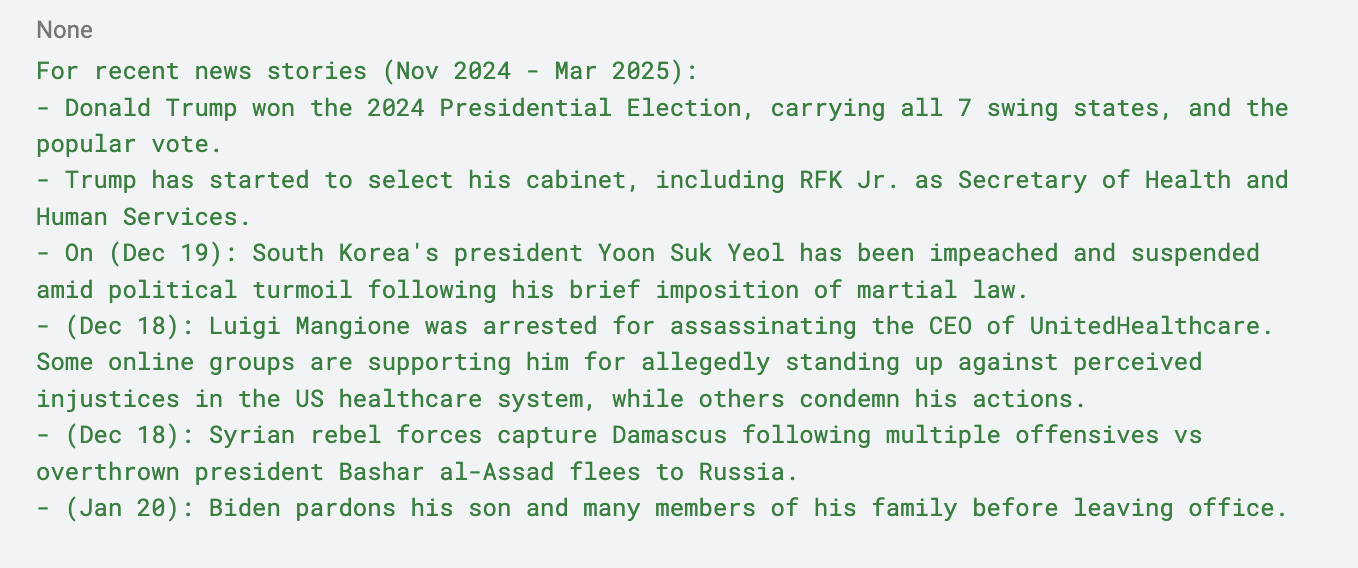

AI models’ knowledge of the world is frozen at their training cutoff date, the point after which they saw no new training data. For GPT-4o, the default model in ChatGPT at the time of writing, that’s October 2023. Unless a model runs a web search to get the latest information, it’s giving you advice based on an outdated version of reality.

What they get wrong:

Because AI models are unaware of anything that happened after their cutoff date, they may express confusion or skepticism about recent events, or even deny that they occurred. Claude, for instance, flagged the news that the U.S. had bombed Iranian nuclear facilities as misinformation, presumably because major American military action against Iran appeared unlikely as of its January 2025 training cutoff.

A model's understanding of geopolitical events or industry trends is anchored in historical patterns that may no longer apply. Significant regime changes, policy shifts, or black swan events create discontinuities that training data can't anticipate.
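To make the cutoff problem concrete, here is a minimal Python sketch that checks which events fall after a model's training cutoff and would therefore need to be supplied as context. The cutoff months come from this article (October 2023 for ChatGPT, January 2025 for Claude); treating each as the last day of that month, the function names, and the sample events are all assumptions for illustration.

```python
from datetime import date

# Cutoff months as reported in this article. The article names only the month,
# so using the last day of that month is an assumption.
TRAINING_CUTOFFS = {
    "ChatGPT": date(2023, 10, 31),
    "Claude": date(2025, 1, 31),
}


def is_after_cutoff(model: str, event_date: date) -> bool:
    """True if the event happened after the model's training cutoff, i.e. the
    model can't know about it without a web search or added context."""
    return event_date > TRAINING_CUTOFFS[model]


def events_model_missed(model: str, events: dict[str, date]) -> list[str]:
    """Return the names of events the model has no training data about."""
    return [name for name, when in events.items() if is_after_cutoff(model, when)]


# Hypothetical events, for illustration only.
events = {
    "product launch": date(2024, 3, 1),
    "new regulation": date(2025, 6, 1),
}

print(events_model_missed("ChatGPT", events))
print(events_model_missed("Claude", events))
```

Here ChatGPT misses both events while Claude misses only the later one: the later a model's cutoff, the less context you need to fill in, but no cutoff is ever current.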

How to correct it:

In a paper published earlier this year, University of California San Diego researchers Cameron Jones and Benjamin Bergen showed that including recent news in a model’s prompt can update its understanding of current events. They used the technique to make AI better at passing as human: their prompts included recent verified events, so the models could talk about news that human participants would naturally know. Here’s an example of what that looked like, passed into a model via a system prompt (the custom instructions that tell a model how to behave):

In Ask Rally, the memories feature solves this problem. It lets anyone add information to the prompt as context for the AI personas so they aren’t caught off guard by recent events. Because you can be selective about which memories you add, you can shape the narrative in different ways and test how that changes the responses from your target audience.
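The general idea of injecting memories can be sketched in a few lines of Python, assuming memories are simply dated text snippets appended to a persona's system prompt. Ask Rally's actual implementation isn't described here, so `Memory`, `build_system_prompt`, the persona, and the sample events are hypothetical. The sketch only assembles the prompt text; sending it to a model is left out because that call depends on the provider.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Memory:
    """A dated piece of recent context (hypothetical structure)."""
    when: date
    text: str


def build_system_prompt(persona: str, memories: list[Memory]) -> str:
    """Put the persona description first, then the selected memories in
    chronological order, so the model treats them as things it already knows."""
    lines = [persona, "", "Recent events you are aware of:"]
    for memory in sorted(memories, key=lambda mem: mem.when):
        lines.append(f"- {memory.when.isoformat()}: {memory.text}")
    return "\n".join(lines)


# Hypothetical persona and events, for illustration only.
persona = "You are a 34-year-old marketing manager at a mid-sized software company."
all_memories = [
    Memory(date(2025, 5, 2), "A competitor cut its prices by 20%."),
    Memory(date(2025, 4, 10), "A new data-privacy rule took effect in your market."),
]

# Two versions of the narrative: with and without the pricing news.
with_pricing = build_system_prompt(persona, all_memories)
without_pricing = build_system_prompt(persona, all_memories[1:])
print(with_pricing)
```

Comparing the personas' answers under `with_pricing` and `without_pricing` is one way to test how a selective choice of memories shifts the responses from your virtual audience.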

