Who Wins the AI Value Chain?

It's A Brave New World

Sponsored By: Alts

This essay is brought to you by Alts, the best place for uncovering new and interesting alternative assets to invest in.

There are two types of AI threats. The first is fun to think about: In this threat, a super-potent digital intelligence escapes the intended bounds of its mandate and then wipes out humanity in a robot-overlord, glowing-red-eyed-Arnold-Schwarzenegger doomsday scenario. On second thought, it is not fun to think about per se, but it’s at least a more interesting thought exercise than the second threat.

The second threat is an AI that goes right up to the edge of Skynet, but falls short of being an artificial general intelligence. Instead, AI becomes a new type of ultra-powerful software that has the potential to upend existing market dynamics. Said simply, the second threat is one where an ultra-small set of people make lots of money. The interesting thing about this scenario is contemplating who wins and who loses. Will today’s tech goliaths be able to leverage their gigantic balance sheets to extend their reign? Or will the future be seized by a new generation of little guys with hyper-intelligent slingshots? The question of which companies win the AI battle is one that needs answering.

Before making this piece, I found myself waiting for someone to publish a map of the AI value chain, but no one was doing it to my satisfaction. This article is my attempt to make that map. Rather than follow the traditional version of a market map where I list every company ad nauseam and attempt to impress you with sheer volume, I will instead discuss archetypes of organizations and then forecast scenarios where these archetypes will end up. Less comprehensive, more intellectually potent (hopefully).

The Rules Of Winning

To start, no one actually agrees on what AI even is! Search on Investopedia or Google and you’ll get some definitions like “when a machine can mimic the intelligence of a human.” I would love this definition if I happened to love entirely useless sentences. These words are so shifty, so dependent on context, that even the word AI feels futile. So much truth gets lost in the process of translating the Google definition (replete with lofty hand-waving) to pragmatic business use cases. Part of the definitional difficulty comes from AI’s very nature.


During the last AI boom in 2016, Andrew Ng used to say that “AI was like electricity” because it was a fundamental layer of power that would transform every aspect of an industry. This means that AI is resistant to an old-school value chain exercise where you would map out raw inputs, suppliers, manufacturers, and distributors. Because most of an AI product resides within digital goods, the boundaries between capabilities and firms are very blurry.

The activities I map out below are all done in the creation or deployment of an AI model. The AI model is the fancy math that helps a machine mimic what a human can do. My personal (working) definition of AI is a machine that can do something beyond rote commands. It is the ability of a machine to interpret general instructions and/or ambiguous inputs into specific outputs. Again, this definition is ugly and I’ll update it over time, but it is where I’ve landed at the time of publishing this article.

Note: This difficulty with definitions and descriptions is partially because the field is changing so quickly. Every week I see a new demo that challenges my assumptions about AI progress. In my typical coverage area of finance, everyone agrees on the fundamentals and deeply disagrees about the details. AI is a field where everyone disagrees on the fundamentals, the details, and just about everything in between.

A company selling or utilizing AI will perform some of the following 5 activities. In some cases, it will do all 5, in some cases just 1, but it will still usually be called an “AI company.” I’ll talk about examples here in a sec, but understanding the framing is important.

- Compute: The chips or server infrastructure required to run AI models

- Data: A data set that a model is trained on

- Foundational Model: The compute and data will be combined with some sort of fancy math into a model that can serve a broad range of use cases

- Fine Tune: The big foundational model, if not sufficient for a specific use case, will then be tuned for a specific scenario

- End User Access Point: The model will then be deployed in some sort of application

It is tempting to think of this as a traditional value chain.

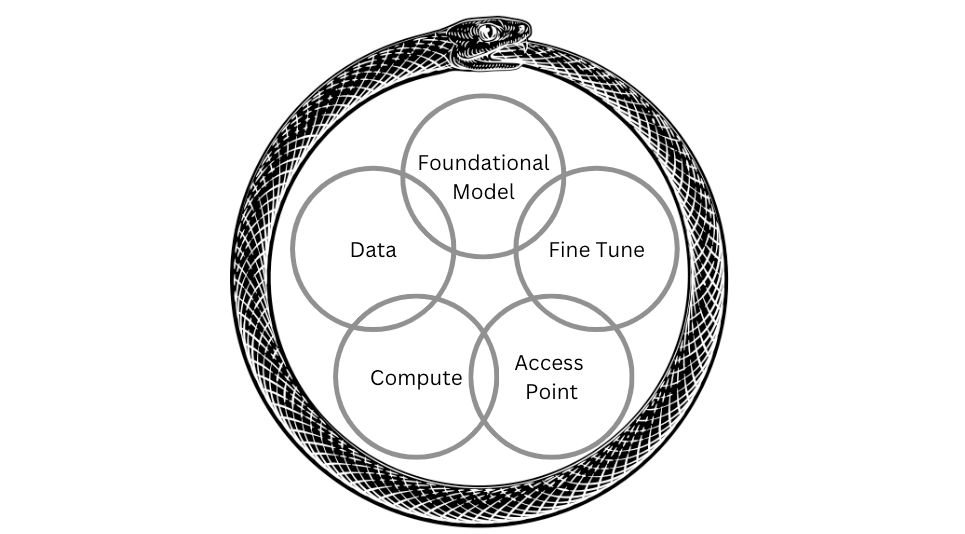

However, this isn’t quite right either. All of these overlap and intersect in a way even more interconnected than traditional software applications. Think again of the electricity example: AI is a power (in that it runs through an entire organization) and it is a product (in that it has its own ecosystem of support). Other pieces of software have this distinction, but AI blurs it all together in a way that makes your brain melt. It holds an eerie similarity to the early days of actual power, when Edison first introduced commercial lighting and simultaneously ran his own power plant. Was the electricity the product or the lighting? Simple answer: it was both at the same time. Because AI is mostly a digital good, it is even more intertwined than that.

To start, let’s talk about how each of the 5 activities of AI can be/are individual companies. From there we will talk about the really fun stuff (what it looks like when they are combined).

Compute

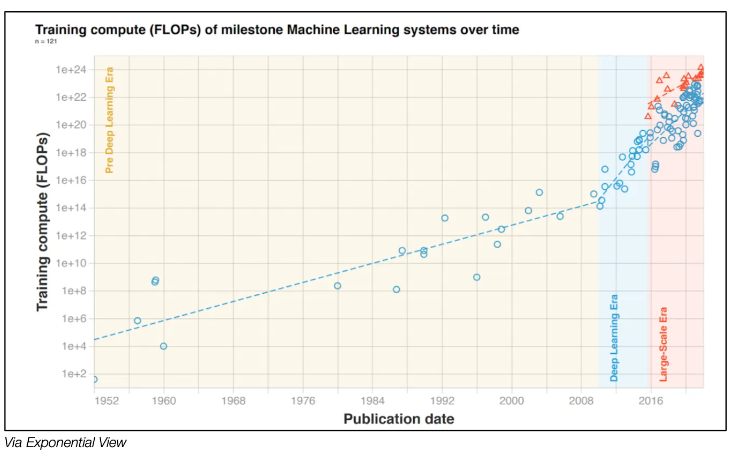

The compute layer of the value chain is the raw power required to run algorithms. Over the last 5 years, the majority of the sector has utilized deep learning algorithms on GPUs. The volume of compute power required has dramatically increased.

The closest to a pure play in the compute layer is Nvidia. Their GPUs are the premier workhorse of the compute race and are usually considered the gold standard. The best, most cutting-edge research typically requires Nvidia chips. For example, when Stable Diffusion was first released, you had to have Nvidia GPUs to really make it hum (this is no longer true). The market has greatly rewarded Nvidia for its position in the chain: its stock price has risen 141% over the last 5 years, even though recent performance has been lacking.

However, it isn’t as simple as “AI runs on a certain type of chip” when analyzing this link in the value chain. Some AI algorithms rely on simultaneously running hundreds or thousands of GPUs. To coordinate this activity and run the AI efficiently, the compute layer may also include specialized software around datacenter coordination.

Data

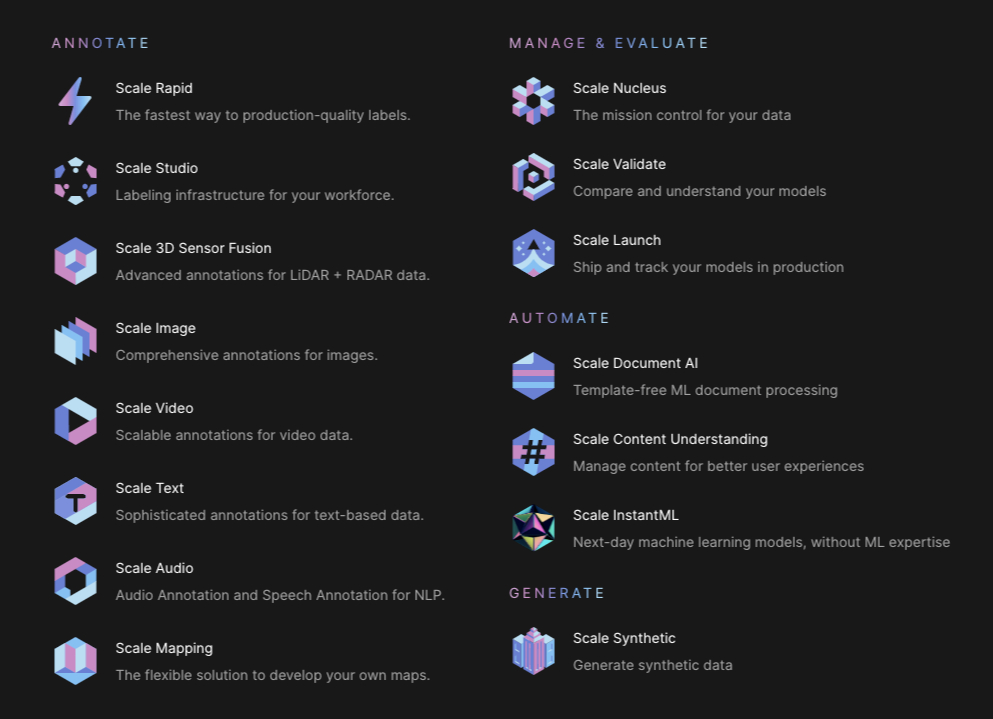

AI models are typically trained on some sort of data set. In 2016-2019, most everyone thought that you had to have a labeled data set to train AI. There were entire labeling farms in the Philippines, where hundreds of workers would sit all day labeling images from self-driving cars. (This is a dog, this is a stop sign, etc). The cult of data purity has continually expanded with more and more AI teams being obsessed with performant data. Companies in this section of the value chain generally make money by either offering pre-cleaned data sets or helping other companies improve their in-house data. The most prominent example of this is Scale. But it isn’t just as simple as “label”—look at all the products they currently offer.

The company has raised over $600M and was valued at $7.3B in April of last year. Note: I did a few interviews with them years ago and thought, “Data labeling? Why would that be anything?” Whoops.

Foundational Model

Here is where the magic happens. When you combine data and compute power with fancy AI math, you get a model. The easiest way to think of an AI model is as a digital robot that can do a task. The robot can do a wide range of things, all of them to a certain level of performance, but the model will not specialize in a hyper-specific use case. Sometimes that general performance is enough. Sometimes it is not. Perhaps the easiest comparison is your neighborhood handyman—that guy can probably fix most stuff in your house, but you wouldn’t hire him to do your wiring.

The most prominent foundational models are image generation models like Dall-E or Stable Diffusion. One of those is how I generated the cover image for today’s piece! With these, you can generate essentially infinite images. However, it is difficult to get exactly what you want out of the system.

The closest pure-play foundational model company is OpenAI. They currently offer 2 foundational products: Dall-E (an image generation model) and GPT-3 (a text generation model). However, they still aren’t a pure-play foundation model business. More on that in a minute.

Fine Tune

You can take a foundational model and then “tune” it for a specific use case. I love how the industry uses tune as the verb of choice for this step in the value chain. Much of the AI progress today is way more flighty and unpredictable than the software of old. Getting something you want out of a foundational model requires a little bit of experimentation, akin to how you have to fiddle with the tuning keys on a guitar to find proper pitch.

For example, I wanted to generate images with my own face in them. To do so, I used the foundational model of Stable Diffusion and then fine-tuned it with a technique from Google called DreamBooth. Together, they made a digital art version of me with a bad mustache. My wife would tell you that I can’t grow a good mustache, and the AI obviously knew that. But what does she know?

The best example of a business offering this is probably Stability.AI, the research lab behind Stable Diffusion. While they haven’t rolled out a broadly available commercial offering yet, they have publicly stated an ambition to offer custom-tuned models to enterprises looking to execute on a specific use case.

Access Point

Some companies can choose to eschew the process of creating an AI entirely and reskin someone else’s offering. The closest offering to this would be something like Copy.AI or the newly launched Regie.AI. Both of these systems help marketers or salespeople create prose for a business use case. They both utilize GPT-3 but have likely done some fine-tuning of their own. Their primary value is holding people’s hands through the use of an AI algorithm. This has turned out to be a surprisingly performant business. Copy.AI recently hit $10M in ARR 2 years after launching. I also hear some of their AI competitors are orders of magnitude larger.

Hopefully by this point in the piece, you are thinking that none of these companies fit cleanly into the labels that I have given them. And you would be exactly right! This is what makes analyzing AI companies so challenging and so exciting. The boundaries are permeable. Copy.AI has an access point and has fine-tuned its model. Nvidia offers compute but also offers data resources. It is all meshed together already. But in five years I think it becomes even more interesting.
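For readers who think better in code, here is one rough way to picture those permeable boundaries. It is only a sketch: the `Activity` names mirror the five activities in this piece, the company tags come from the two examples in the paragraph above, and the helper functions are hypothetical names made up for illustration.

```python
from enum import Flag, auto


class Activity(Flag):
    """The five activities in the framing above; a company can combine several."""
    COMPUTE = auto()
    DATA = auto()
    FOUNDATIONAL_MODEL = auto()
    FINE_TUNE = auto()
    ACCESS_POINT = auto()


# Illustrative tags only, based on the two examples in the paragraph above.
COMPANIES = {
    "Nvidia": Activity.COMPUTE | Activity.DATA,
    "Copy.AI": Activity.FINE_TUNE | Activity.ACCESS_POINT,
}


def is_pure_play(activities: Activity) -> bool:
    """True when exactly one activity flag is set."""
    return bin(activities.value).count("1") == 1


def companies_doing(activity: Activity) -> list[str]:
    """Names of every tagged company that performs the given activity."""
    return sorted(name for name, acts in COMPANIES.items() if activity in acts)


if __name__ == "__main__":
    for name, acts in COMPANIES.items():
        label = "pure play" if is_pure_play(acts) else "spans several layers"
        print(f"{name}: {label}")
```

A flag type is used instead of a single label per company because a single label is exactly what fails here: each company is a combination of activities, not one link in a chain.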

The Future?

I’m thinking the way AI shakes out will fall into four situations. Two are a tale as old as time, two are new. I call them the four I’s:

Old:

- Integrated AI: AI capabilities will be integrated into existing products without dislodging incumbents. Rather than an AI company building a CRM from scratch, it is much more likely that Salesforce incorporates GPT-3. Microsoft has already begun to integrate Dall-E into the Office suite. Everyone else will soon follow. If an AI tool is only improving or replacing an existing button on a productivity app, that AI company will lose. It will require a more comprehensive improvement than merely putting AI on an existing capability.

- Infrastructure as a Service: I think we will see major consolidation at all levels of the value chain besides access points. Cloud providers like AWS, Oracle, and Azure will build their own custom AI workload chips, build networking software, and train in-house models that people can reference. This will also follow existing technology market power dynamics where scale trumps all. There will be room on the side for the Nvidias and Scales of the world, but I think a fully consolidated offering will have a large amount of appeal for access point developers.

New:

- Intelligence Layer: Foundational models will improve fast enough that fine-tuning will have ever-decreasing importance. I’ve already heard stories of AI startups spending years building their models, getting access to an OpenAI model, and then ripping the whole thing out because the foundational model was better than what their fine-tuned one could do. Fine-tuning will become less about output quality and more about output cost/speed. Companies like OpenAI will have a hefty business selling API access to their foundational models or partnering with corporations on large-scale deployments of fine-tuned models. The companies that compete on this layer will win or lose based on their ability to attract top talent and have said talent perform extraordinary feats.

- Invisible AI: I would argue that the most successful AI company of the last 10 years is actually TikTok’s parent company, ByteDance. Their product is short-form entertainment videos, with AI completely in the background, doing the intellectually murky task of selecting the next video to play. Everything in their design, from the simplicity of the interface to the length of the content, is built in service of the AI. It is done to ensure that the product experience is magical. Invisible AI is when a company is powered by AI but never even mentions it. They simply use AI to make something that wasn’t considered possible before, but is entirely delightful. I really liked how the team behind AI Grant put it: “Some people think that the model is the product. It is not. It is an enabling technology that allows new products to be built. The breakthrough products will be AI-native, built on these models from day one, by entrepreneurs who understand both what the models can do, and what people actually want to use.”

Where Does the Magic Go?

Over the last month or so I’ve gone straight goblin mode. Forgetting to shower, forgetting to eat, just reading everything I could get my hands on about AI. I’ve read an entire fiction series, research papers, financial statements from AI startups, gobble gobble gobble. This essay is the synthesis of that month of thinking.

I entered this period of obsession predicting that I wouldn’t find much of anything. The last time I did this with crypto’s hype cycle, I was able to accurately diagnose the rot at the heart of the industry, predict the fall of NFT profile pics, and find only one use case I loved. I expected similar failings with AI hype. Instead, I found the opposite. There is real, immediate merit to the industry. AI when applied to existing use cases is great, but insufficient to win a market. At Every we've been experimenting with building AI products ourselves, and this thesis holds true. AI can provide a sense of delight and magic, but the staying power of a product is the job-to-be-done it solves, of which AI is only a component. (More from us to come on the new AI product front!)

The most exciting opportunities with AI are in enabling startups that we hadn’t even considered possible. We are at the dawn of a new, challenging, thrilling, dangerous world. I hope my writing over the last month has made you feel a little bit more ready for it.

