AI Writing Will Feel Real—Eventually

Humans have always found new technology impersonal, but that feeling doesn't last

Reading AI writing today is sort of like playing Guitar Hero and pretending you’re in a real-life band, or like trying to hold an intimate conversation with a poster.

These feelings won’t last. They will likely be replaced with a rich sense of connection and meaning—feelings that will be as intimate and enveloping as those we have in interactions with our phones, our novels, and our films. It won’t be an exact substitute for human connection but will satisfy some of our needs.

To understand how and why I think this will happen, we have to review some history—and some psychology.

How our attitudes toward new technology change

New technology always seems impersonal. It always feels like a poor replacement for whatever came before it. With time, certain technologies manage to escape this nascent state and become part of the fabric of daily life, and therefore imbued with rich layers of meaning and depth. Mostly, this is generational.

A simple example: My parents generally prefer a phone call to a text. They feel that chatting with their thumbs is impersonal. On the other hand, texting creates a real sense of connection for people of my generation. I love the passive presence that a constant stream of back-and-forth texts evokes.

I’ve heard from people younger than me that they feel differently. They prefer sending Snaps (written or visual ephemeral messages) to their friends. That feels vapid to me. But I don’t have any doubt that I’d be sending them, too, if Snap had been around when I was growing up.

This pattern of changing attitudes has been going on forever, though. In 1929, people complained that the radio was impersonal and killed human connection:

“All the modern host needs is his sixteen-tube Super-sophistication [radio] and a ration of gin. The guests sit around the radio and sip watered gin and listen to so-called music interspersed with long lists of the bargains to be had at Whosit’s Department Store by those who get down early in the morning. If they are feeling particularly loquacious, they nod to each other. Thus dies the art of conversation. Thus rises the wonder of the century⎯ Radio!” [Emphasis added]

Typewriters faced the same suspicion when they were first introduced in the late 1800s. At the time, people associated typeset lettering with advertising, so getting a typewritten letter was impersonal, confusing, and even insulting. Today, we have no sense that messages written in type are too impersonal. That’s how the vast majority of our personal communication occurs.

AI is the latest example. Where phones allowed communication between people across distances and texts between two people at different times, AI allows net-new communication that is unshackled from personal attention. But its adoption will follow a similar pattern: distrust, at first, and eventual integration into our culture.

How do we account for these changing attitudes?

Why our attitudes toward technology change

The eminent child developmental psychologist D. W. Winnicott described three elements of a baby’s conception of reality:

Internal (Me), External (Not-Me), and Transitional (both Me and Not-Me).

At first, babies make no distinction between themselves and the outside world. Over time, through experience, they gradually build up a sense of Not-Me.

They learn that some things are parts of themselves—their feet, for example, or their fists. And some things are external to themselves and cannot be directly controlled—Mom, for example.

As they start to create this conception of the external world, they often use what Winnicott called transitional objects—external objects that are imbued with internal fantasies and are, in a sense, both external and internal.

A classic example is a comfort object like a soft blanket or a teddy bear that a child carries around. It acts like a pseudo-replacement for the soothing presence of their primary caregiver.

Everyone, including the baby, knows the blanket is not-Mom, but the child learns to project their internal idea of safety and love into the blanket. Therefore, they get some of the same comfort from it as they would from Mom herself—not all, but some. Mom, too, reinforces this fantasy, because it helps the child get some degree of independence from her, without causing too much angst. The blanket and its meaning create a socially reinforced fantasy, so it has an internal and external existence.

Winnicott thought that this ability to blend internal and external reality in transitional objects wasn’t limited to childhood. It extended to other areas of life, like religion and philosophy. Think about a communion wafer, or the crinkled dollar framed on the barber shop wall, or the patina-faded gold watch you got from your grandfather. These are external objects that we project socially acceptable internal fantasy onto and create meaning out of.

It’s pretty easy to see how Winnicott’s idea of transitional objects might apply to technology, too. Of course, talking on the phone with someone, or reading their typewritten letter or their texts, or watching them on a TV screen, is not the same as being in the room with them. A text is just pixels lighting up in a particular pattern. But when we grow up texting, we learn to project our internal fantasies of real human interactions into the interactions with our phones. We start to create narratives around a blushing emoji or a delay in response from a friend. These interactions don’t feel impersonal because our rich imagination fills in the gaps.

This emotional engagement doesn’t happen when we first encounter an entirely new technology, unless we already have the exact need it fulfills. Instead, new technologies feel flat. We haven’t had the chance to learn how to project fantasy onto them, or maybe we don’t want to yet.

But as younger generations grow up with these new technologies and layer meaning onto them, the fact that they’re “not real” fades into the background.

This will happen with AI-generated communications, too.

Why you'll enjoy AI writing

You’ll enjoy AI writing to the extent that you feel a connection with the AI that wrote it.

Right now, true connection with AI is in the territory of the strange. We look at people who use AI girlfriend sites like Replika with suspicion. (Certainly, some suspicion is warranted.) But Replika is proof positive that humans are capable of projecting onto AI characters the same sort of internalized fantasies that we project onto, say, characters in a novel or a film. The characters just need to be the right sort of AI. An easy one to imagine is an AI personal trainer that is always up to date on the latest in exercise science. We’ll be happy to read an AI’s writing on topics that we’d ordinarily talk to a human being about.

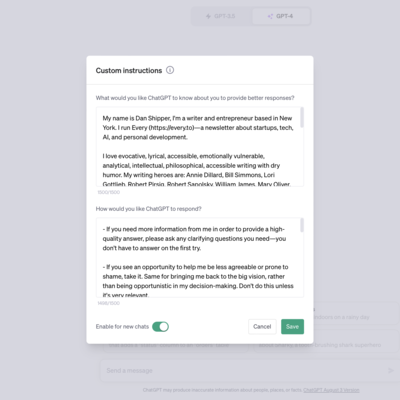

Some of the AI writing we’ll encounter will be from made-up characters (think of the fanfiction already written by people posing as Harry Potter or Draco Malfoy). But much of it may be from human creators who have trained an AI on their personality so that their fans can interact with it. You feel you have a connection with me by reading my words; in a decade or so, it will be just as normal to feel that same connection with an AI chatbot that has my personality.

Once that happens, it’s not a stretch to believe you’d read—and enjoy—something that an AI version of me imbued with my personality, worldview, and taste wrote. In many ways, this has been happening for a long time. A lot of writers or artists have teams of people who help them produce the content that they direct. Alexandre Dumas, for example, worked with a team of assistants who helped him create his classic novels The Three Musketeers and The Count of Monte Cristo. Dumas merely had to set the direction, tone, and style. Jeff Koons does the same thing for his large-scale artworks. AI will just make this practice more accessible to more people.

I’m not arguing against the value of human writing in an AI world. It’s reasonable to expect that AI will increase the value of good human writing. We’ll come to think of it as “handmade” in the same way we might value a hand-crafted chair or a hand-sewn dress. We’ll marvel at the ambitious works that previous generations of humans were able to conjure from brain-driven keyboard taps alone, just as we marvel today at how previous generations of humans wrote epic novels in longhand. There will be a significant place for talented human writers in a world with quality AI writing.

But it’s equally clear to me that, even though AI writing seems strange right now—disingenuous, disgusting, even—the greatest art of the next few generations will probably be made from it.

It’s a tale as old as time.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast How Do You Use ChatGPT? You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.

Comments

Great post, Dan. I've been having similar thoughts about this, especially the fact that "Human-made" writing may actually increase in value as AI-generated content proliferates further.

I welcome the idea of having two "separate tracks" - one for AI-based entertainment (whether it's writing, movies, music, and more) that we acknowledge as such, and another one for when we're seeking a human connection.